Recent advancements in large language models (LLMs) and AI systems have led

to a paradigm shift in the design and optimization of complex AI workflows. By

integrating multiple components, compound AI systems have become increasingly

adept at performing sophisticated tasks. However, as these systems grow in

complexity, new challenges arise in optimizing not only individual components

but also their interactions. While traditional optimization methods such as

supervised fine-tuning (SFT) and reinforcement learning (RL) remain

foundational, the rise of natural language feedback introduces promising new

approaches, especially for optimizing non-differentiable systems. This paper

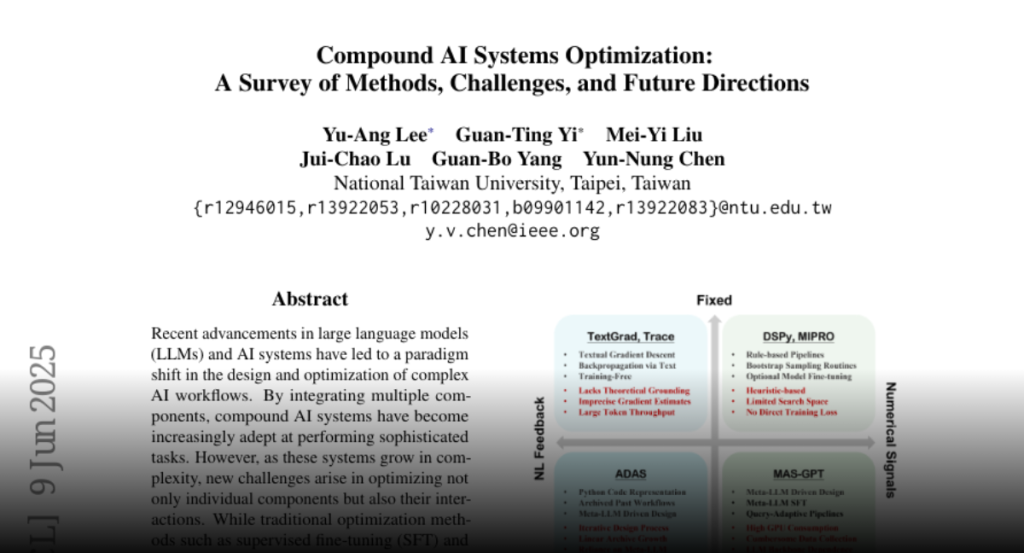

provides a systematic review of recent progress in optimizing compound AI

systems, encompassing both numerical and language-based techniques. We

formalize the notion of compound AI system optimization, classify existing

methods along several key dimensions, and highlight open research challenges

and future directions in this rapidly evolving field. A list of surveyed papers

is publicly available at https://github.com/MiuLab/AISysOpt-Survey.