ChartLens enhances multimodal language models with fine-grained visual attributions for chart understanding, improving fine-grained attributions by 26-66%.

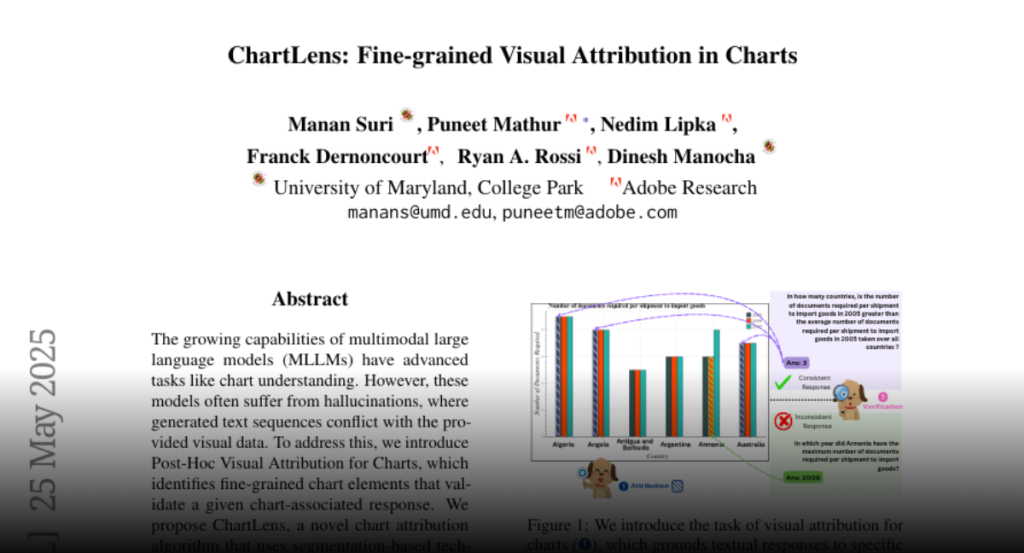

The growing capabilities of multimodal large language models (MLLMs) have advanced tasks like chart understanding. However, these models often suffer from hallucinations, where generated text sequences conflict with the provided visual data. To address this, we introduce Post-Hoc Visual Attribution for Charts, which identifies fine-grained chart elements that validate a given chart-associated response. We propose ChartLens, a novel chart attribution algorithm that uses segmentation-based techniques to identify chart objects and employs set-of-marks prompting with MLLMs for fine-grained visual attribution. Additionally, we present ChartVA-Eval, a benchmark with synthetic and real-world charts from diverse domains like finance, policy, and economics, featuring fine-grained attribution annotations. Our evaluations show that ChartLens improves fine-grained attributions by 26-66%.
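
To make the set-of-marks idea concrete, the following is a minimal sketch, not the ChartLens implementation. It assumes the segmentation step has already produced bounding boxes for chart objects, and it only shows how numeric marks could be assigned, placed in a text prompt, and read back from a model reply. The names `ChartObject`, `assign_marks`, `build_prompt`, and `parse_marks`, as well as the prompt wording, are hypothetical; the MLLM call itself is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class ChartObject:
    mark: int     # numeric label that would be drawn on the chart for this object
    bbox: tuple   # (x_min, y_min, x_max, y_max) in pixels, assumed to come from segmentation


def assign_marks(bboxes):
    """Give each segmented region a 1-based mark, ordered left to right, then top to bottom."""
    ordered = sorted(bboxes, key=lambda b: (b[0], b[1]))
    return [ChartObject(mark=i, bbox=b) for i, b in enumerate(ordered, start=1)]


def build_prompt(response_text, objects):
    """Build an illustrative text prompt that refers to the marks (wording is an assumption)."""
    marks = ", ".join(str(o.mark) for o in objects)
    return (
        f"The chart image has regions labelled with numeric marks: {marks}.\n"
        f"Statement: {response_text}\n"
        "List the marks of the regions that support the statement, "
        "as comma-separated numbers."
    )


def parse_marks(model_reply, objects):
    """Extract mark numbers from a reply, dropping duplicates and marks that do not exist."""
    valid = {o.mark for o in objects}
    selected = []
    for token in re.findall(r"\d+", model_reply):
        mark = int(token)
        if mark in valid and mark not in selected:
            selected.append(mark)
    return selected
```

In this sketch, the marks returned by `parse_marks` map back to bounding boxes through the `ChartObject` list, which is how a textual reply could be turned into a visual attribution. Filtering out marks that were never assigned is a simple guard against the model naming regions that do not exist.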