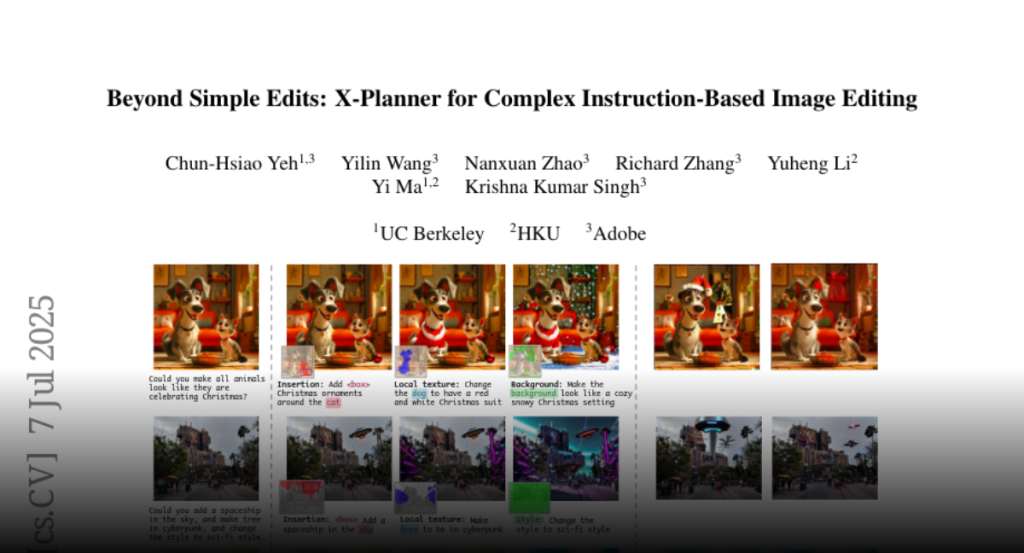

X-Planner, a planning system utilizing a multimodal large language model, decomposes complex text-guided image editing instructions into precise sub-instructions, ensuring localized, identity-preserving edits and achieving top performance on established benchmarks.

Recent diffusion-based image editing methods have significantly advanced text-guided tasks but often struggle to interpret complex, indirect instructions. Moreover, current models frequently suffer from poor identity preservation, introduce unintended edits, or rely heavily on manual masks. To address these challenges, we introduce X-Planner, a Multimodal Large Language Model (MLLM)-based planning system that bridges user intent and editing model capabilities. X-Planner employs chain-of-thought reasoning to systematically decompose complex instructions into simpler, clearer sub-instructions. For each sub-instruction, X-Planner automatically generates precise edit types and segmentation masks, eliminating manual intervention and ensuring localized, identity-preserving edits. Additionally, we propose a novel automated pipeline for generating large-scale data to train X-Planner, which achieves state-of-the-art results on both existing benchmarks and our newly introduced complex editing benchmark.
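
The planning output described above (a sequence of sub-instructions, each paired with an edit type and a segmentation mask) can be pictured with a minimal sketch. The abstract does not specify X-Planner's actual output format, so every name here (`SubInstruction`, `masked_fraction`, `run_plan`, the edit-type labels, and the `editor` callback) is a hypothetical stand-in chosen for illustration, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, TypeVar

Image = TypeVar("Image")

# Hypothetical representation: a binary mask where 1 marks an editable pixel.
Mask = List[List[int]]


@dataclass
class SubInstruction:
    """One step of a decomposed edit plan (illustrative structure only)."""
    text: str       # simple, direct instruction for the editing model
    edit_type: str  # illustrative label, e.g. "color_change"
    mask: Mask      # region the edit should be confined to


def masked_fraction(mask: Mask) -> float:
    """Share of pixels the step may modify; small values mean localized edits."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


def run_plan(
    image: Image,
    plan: List[SubInstruction],
    editor: Callable[[Image, SubInstruction], Image],
) -> Image:
    """Apply each sub-instruction in order, feeding every result to the next step."""
    for step in plan:
        image = editor(image, step)
    return image


# Made-up plan for a complex instruction such as "make the scene look like autumn".
plan = [
    SubInstruction("turn the leaves orange", "color_change", [[1, 1], [0, 0]]),
    SubInstruction("add fallen leaves on the ground", "add_object", [[0, 0], [1, 0]]),
]
```

In this sketch the `editor` callback stands in for whatever mask-conditioned editing model consumes the plan; restricting each step to its own mask is what keeps the remaining pixels, and therefore subject identity, untouched.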