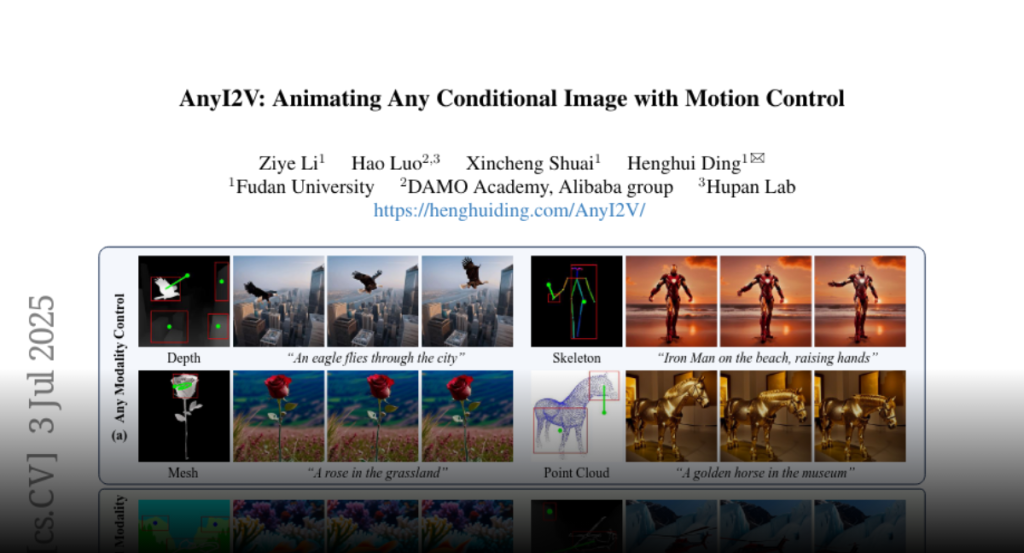

AnyI2V is a training-free framework that animates conditional images with user-defined motion trajectories, supporting various data types and enabling flexible video generation.

Recent advancements in video generation, particularly in diffusion models, have driven notable progress in text-to-video (T2V) and image-to-video (I2V) synthesis. However, challenges remain in effectively integrating dynamic motion signals and flexible spatial constraints. Existing T2V methods typically rely on text prompts, which inherently lack precise control over the spatial layout of generated content. In contrast, I2V methods are limited by their dependence on real images, which restricts the editability of the synthesized content. Although some methods incorporate ControlNet to introduce image-based conditioning, they often lack explicit motion control and require computationally expensive training. To address these limitations, we propose AnyI2V, a training-free framework that animates any conditional image with user-defined motion trajectories. AnyI2V supports a broader range of modalities as the conditional image, including data types such as meshes and point clouds that are not supported by ControlNet, enabling more flexible and versatile video generation. Additionally, it supports mixed conditional inputs and enables style transfer and editing via LoRA and text prompts. Extensive experiments demonstrate that the proposed AnyI2V achieves superior performance and provides a new perspective on spatial- and motion-controlled video generation. Code is available at https://henghuiding.com/AnyI2V/.