The study explores the structural patterns of knowledge in large language models from a graph perspective, uncovering knowledge homophily and developing graph machine learning models to estimate entity knowledge.

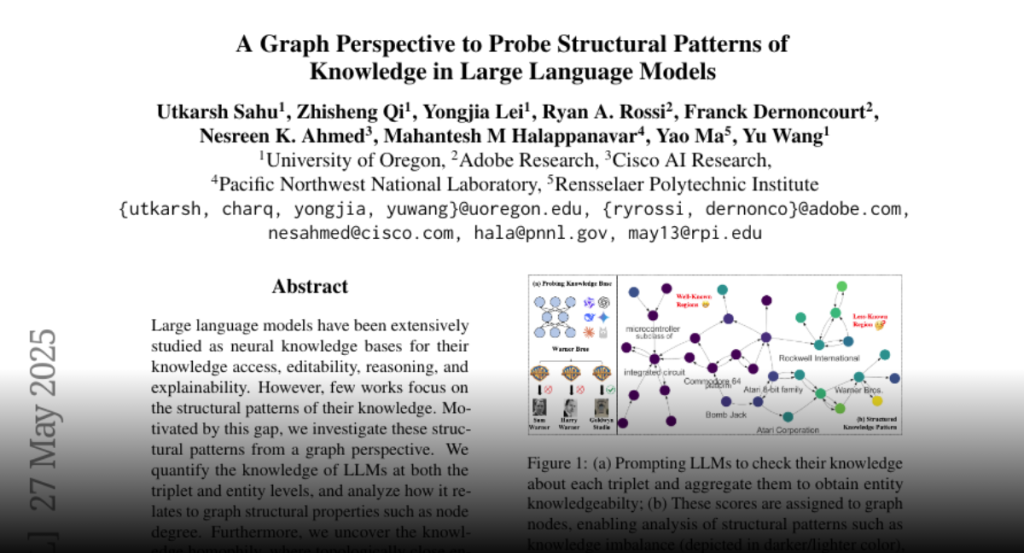

Large language models have been extensively studied as neural knowledge bases for their knowledge access, editability, reasoning, and explainability. However, few works focus on the structural patterns of their knowledge. Motivated by this gap, we investigate these structural patterns from a graph perspective. We quantify the knowledge of LLMs at both the triplet and entity levels, and analyze how it relates to graph structural properties such as node degree. Furthermore, we uncover knowledge homophily, where topologically close entities exhibit similar levels of knowledgeability. This finding motivates us to develop graph machine learning models that estimate an entity's knowledge from its local neighbors. These models in turn enable knowledge checking by selecting triplets that are less known to LLMs. Empirical results show that fine-tuning on the selected triplets leads to superior performance.
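The entity-level quantities described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration rather than the definition used in the study: triplet-level knowledge is taken to be a score in [0, 1], an entity's knowledgeability is the mean score of the triplets it appears in, and homophily for an entity is one minus the mean absolute knowledgeability gap to its graph neighbors. The function names `entity_knowledgeability`, `build_neighbors`, and `homophily` are hypothetical.

```python
from collections import defaultdict


def entity_knowledgeability(triplet_scores):
    """Average the triplet scores over every triplet an entity appears in.

    triplet_scores maps (head, relation, tail) -> score in [0, 1].
    Assumption: the mean is used as the entity-level aggregate.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for (head, _relation, tail), score in triplet_scores.items():
        for entity in (head, tail):
            totals[entity] += score
            counts[entity] += 1
    return {entity: totals[entity] / counts[entity] for entity in totals}


def build_neighbors(triplet_scores):
    """Treat each triplet as an undirected edge between head and tail."""
    adjacency = defaultdict(set)
    for head, _relation, tail in triplet_scores:
        if head != tail:
            adjacency[head].add(tail)
            adjacency[tail].add(head)
    return adjacency


def homophily(triplet_scores):
    """Per-entity homophily: 1 - mean |K(entity) - K(neighbor)|.

    Values near 1 mean an entity's neighbors are about as well known
    as the entity itself (under the assumed definition).
    """
    knowledge = entity_knowledgeability(triplet_scores)
    adjacency = build_neighbors(triplet_scores)
    result = {}
    for entity, nbrs in adjacency.items():
        if not nbrs:
            continue
        gap = sum(abs(knowledge[entity] - knowledge[n]) for n in nbrs) / len(nbrs)
        result[entity] = 1.0 - gap
    return result


# Toy graph with made-up scores, purely for illustration.
toy = {("A", "r", "B"): 1.0, ("B", "r", "C"): 0.0}
toy_knowledge = entity_knowledgeability(toy)
toy_homophily = homophily(toy)
```

If homophily is high across a graph, a neighbor-based estimator is plausible: an entity whose neighbors have low knowledgeability is itself likely to be poorly known, which is the intuition behind using its triplets as candidates for checking or fine-tuning.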