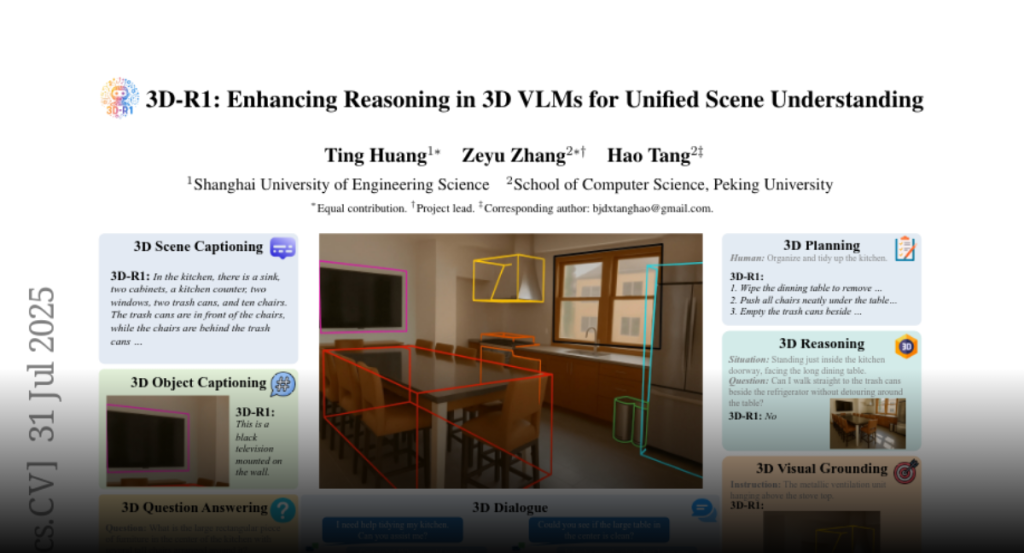

3D-R1 enhances 3D scene understanding through a high-quality synthetic dataset, reinforcement learning with GRPO, and dynamic view selection, achieving significant improvements in reasoning and generalization.

Large vision-language models (VLMs) have made significant strides in 2D visual understanding tasks, sparking interest in extending these capabilities to 3D scene understanding. However, current 3D VLMs often struggle with robust reasoning and generalization due to the limited availability of high-quality spatial data and their reliance on static viewpoint assumptions. To address these challenges, we propose 3D-R1, a foundation model that enhances the reasoning capabilities of 3D VLMs. Specifically, we first construct Scene-30K, a high-quality synthetic dataset with chain-of-thought (CoT) annotations, built from existing 3D-VL datasets using a data engine based on Gemini 2.5 Pro. Scene-30K serves as cold-start initialization data for 3D-R1.

Moreover, we adopt GRPO (Group Relative Policy Optimization), an RLHF-style policy optimization method, in the reinforcement learning stage to enhance reasoning capabilities, and we introduce three reward functions (a perception reward, a semantic similarity reward, and a format reward) to maintain detection accuracy and the semantic precision of answers.
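
The three rewards and GRPO are named here but not specified. The following is a minimal Python sketch under loudly stated assumptions, not the authors' code: the perception reward is taken to be the 3D IoU of axis-aligned boxes, the semantic similarity reward is cosine similarity between answer embeddings from some unspecified sentence encoder, and the format reward is a regex check against a hypothetical `<think>…</think><answer>…</answer>` template. The last function computes the GRPO group-relative advantage, which normalizes each sampled response's reward by the mean and standard deviation of its sampling group.

```python
import math
import re
import statistics
from typing import List, Sequence, Tuple

# Assumed box format: axis-aligned (x_min, y_min, z_min, x_max, y_max, z_max).
Box3D = Tuple[float, float, float, float, float, float]


def perception_reward(pred: Box3D, gt: Box3D) -> float:
    """Stand-in perception reward: 3D IoU between predicted and ground-truth boxes."""
    inter = 1.0
    for axis in range(3):
        overlap = min(pred[axis + 3], gt[axis + 3]) - max(pred[axis], gt[axis])
        if overlap <= 0:
            return 0.0  # no overlap along this axis, so the boxes do not intersect
        inter *= overlap
    vol_pred = math.prod(pred[a + 3] - pred[a] for a in range(3))
    vol_gt = math.prod(gt[a + 3] - gt[a] for a in range(3))
    return inter / (vol_pred + vol_gt - inter)


def semantic_reward(pred_emb: Sequence[float], ref_emb: Sequence[float]) -> float:
    """Stand-in semantic reward: cosine similarity of answer embeddings.

    The embeddings are assumed to come from some text encoder that is not shown.
    """
    dot = sum(p * r for p, r in zip(pred_emb, ref_emb))
    norm = math.sqrt(sum(p * p for p in pred_emb)) * math.sqrt(sum(r * r for r in ref_emb))
    return dot / norm if norm > 0 else 0.0


# Hypothetical response template: reasoning in <think>, final answer in <answer>.
_FORMAT = re.compile(r"<think>.+?</think>\s*<answer>.+?</answer>", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the whole response follows the assumed template, else 0.0."""
    return 1.0 if _FORMAT.fullmatch(response.strip()) else 0.0


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """GRPO advantage: each response's reward normalized within its sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

In use, one would sample a group of responses per question, score each one (for example by summing the three rewards, an equal weighting assumed here rather than taken from the paper), and feed the advantages into the policy update. A group in which every response earns the same reward yields all-zero advantages, so it contributes no learning signal.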

Furthermore, we introduce a dynamic view selection strategy that adaptively chooses the most informative perspectives for 3D scene understanding. Extensive experiments demonstrate that 3D-R1 delivers an average improvement of 10% across various 3D scene benchmarks, highlighting its effectiveness in enhancing reasoning and generalization in 3D scene understanding. Code: https://github.com/AIGeeksGroup/3D-R1. Website: https://aigeeksgroup.github.io/3D-R1.
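
How the dynamic view selection strategy scores "informative" perspectives is not stated here. As one hypothetical illustration, view selection can be framed as greedy weighted coverage: each candidate view reveals a set of objects, each object carries a relevance score for the current question, and views are added one at a time by marginal gain. The view names, object ids, relevance scores, and the `select_views` helper are all made up for this sketch; it is a generic coverage heuristic, not the 3D-R1 strategy.

```python
from typing import Dict, List, Set


def _gain(objects: Set[int], covered: Set[int], relevance: Dict[int, float]) -> float:
    """Relevance-weighted score of the objects a view would newly reveal."""
    return sum(relevance.get(obj, 0.0) for obj in objects - covered)


def select_views(
    view_objects: Dict[str, Set[int]],
    relevance: Dict[int, float],
    k: int,
) -> List[str]:
    """Greedily pick up to k views that maximize newly covered, question-relevant objects.

    view_objects maps a (hypothetical) view name to the ids of objects visible from it;
    relevance maps object ids to a (hypothetical) importance score for the question.
    """
    selected: List[str] = []
    covered: Set[int] = set()
    remaining = dict(view_objects)
    while remaining and len(selected) < k:
        # max() returns the first view with the highest gain, so ties follow insertion order.
        best = max(remaining, key=lambda v: _gain(remaining[v], covered, relevance))
        if _gain(remaining[best], covered, relevance) <= 0.0:
            break  # no remaining view reveals anything relevant
        selected.append(best)
        covered |= remaining.pop(best)
    return selected


views = {"front": {1, 2, 3}, "top": {1, 2, 3, 4, 5}, "left": {4, 6}, "back": {6}}
relevance = {1: 1.0, 2: 1.0, 3: 0.5, 4: 2.0, 5: 0.1, 6: 1.5}
print(select_views(views, relevance, k=2))  # ['top', 'left']
```

Greedy selection suits coverage-style objectives because each step only needs the marginal gain of the remaining candidates; a learned scorer could replace `_gain` without changing the selection loop.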