For the last several years, OpenAI has received more press than God.

GPT-3, which most people hardly remember, “wrote” an op-ed in The Guardian in September 2020 (with human assistance behind the scenes), and ever since, media coverage of large language models, generally focusing on OpenAI, has been nonstop. A zillion stories, often fawning, have been written about Sam Altman the boy genius, about how ChatGPT would usher in some kind of amazing new era in science and medicine (spoiler alert: it hasn’t, at least not yet), and about how it would radically increase GDP and productivity, ushering in an age of abundance (that hasn’t happened yet, either). For a while (mercifully, finally over), every journalist and their cousin seemed to think that the cleverest thing in the world was to open or close their essay with a quote from ChatGPT. Some people used it to write their wedding vows.

As anyone who reads this knows, I have never been quite so positive. Around the same time as the Guardian op-ed, Ernest Davis and I warned that GPT-3 was “a fluent spouter of bullshit.” In 2023, shortly after ChatGPT was launched, I doubled down and told 60 Minutes that LLM output was “authoritative bullshit”. (In order to accommodate the delicate ears of network television, the expletive was partly bleeped out.) I railed endlessly here and elsewhere about hallucinations and warned people to keep their expectations about GPT-4 and GPT-5 modest. From the first day of this newsletter, in May 2022, my theme was that scaling alone would not get us to AGI.

Without a doubt, that left me cast as a hater (when in truth I love AI and want it to succeed) and a villain. I was, almost daily, mocked and ridiculed for my criticism of LLMs, including by some of the most powerful people in tech, from Elon Musk to Yann LeCun (who, to his credit, eventually saw for himself the limits of LLMs) to Altman himself, who recently called me a “troll” on X (only to turn tail when I responded in detail).

I endlessly challenged these people to debate, to discuss the facts at hand. None of them accepted. Not once. Nobody ever wanted to talk science.

And they didn’t need to, not with media folks that I won’t name often acting like cheerleaders, and even sometimes personally taking shots at me. For a long time, the strategy of endless hype and only occasional engagement with science worked like a charm, commercially if not scientifically.

But media coverage is not science, and braggadocio alone cannot yield AGI. What is shocking is that suddenly, almost everywhere all at once, the veil has begun to lift. Over the last week the world woke up to the fact that Altman wildly overpromised on GPT-5, with me no longer cast as villain but as hero, which is probably the stuff of Sam’s worst nightmares.

To regular readers of this newsletter, the underwhelming delivery of GPT-5 in itself should not have been surprising. But what is startling (and frankly satisfying) is how rapidly and radically the narrative has changed.

You can see that narrative flip all over the place. CNN, for example,

Which read in part (highlighting added by the reader who passed the story to me):

Futurism had this to say:

writing in part, name-checking me and with a supportive quote from an Edinburgh researcher:

Though Marcus’s more realistic view of AI made him a pariah in the excitable AI community, he’s no longer standing alone against scalable AI. Yesterday, University of Edinburgh AI scholar Michael Rovatsos wrote that “it is possible that the release of GPT-5 marks a shift in the evolution of AI which… might usher in the end of creating ever more complicated models whose thought processes are impossible for anyone to understand.”

And my own essay on all this, GPT-5: Overdue, overhyped and underwhelming, went viral, with over 163,000 views.

Meanwhile, to my immense satisfaction, The New Yorker landed firmly on team Marcus, with computer scientist Cal Newport adding a car metaphor I wish I had coined myself:

In the aftermath of GPT-5’s launch, it has become more difficult to take bombastic predictions about A.I. at face value, and the views of critics like Marcus seem increasingly moderate… Post-training improvements don’t seem to be strengthening models as thoroughly as scaling once did. A lot of utility can come from souping up your Camry, but no amount of tweaking will turn it into a Ferrari.

Even better, Newport wound up with this, echoing a passage in my notorious Deep Learning is Hitting a Wall, in which I warned that scaling was not a physical law:

As if Sam’s week couldn’t get worse, columnist Steve Rosenbush at The Wall Street Journal gave me further props on the topic of neurosymbolic AI, the alternative to LLMs that I have long advocated, describing how Amazon was now putting neurosymbolic AI into practice and closing his essay with a pointer to my views and a link to another of this newsletter’s essays.

Gartner, meanwhile, as reported today in the NYT, is forecasting a “trough of disillusionment”.

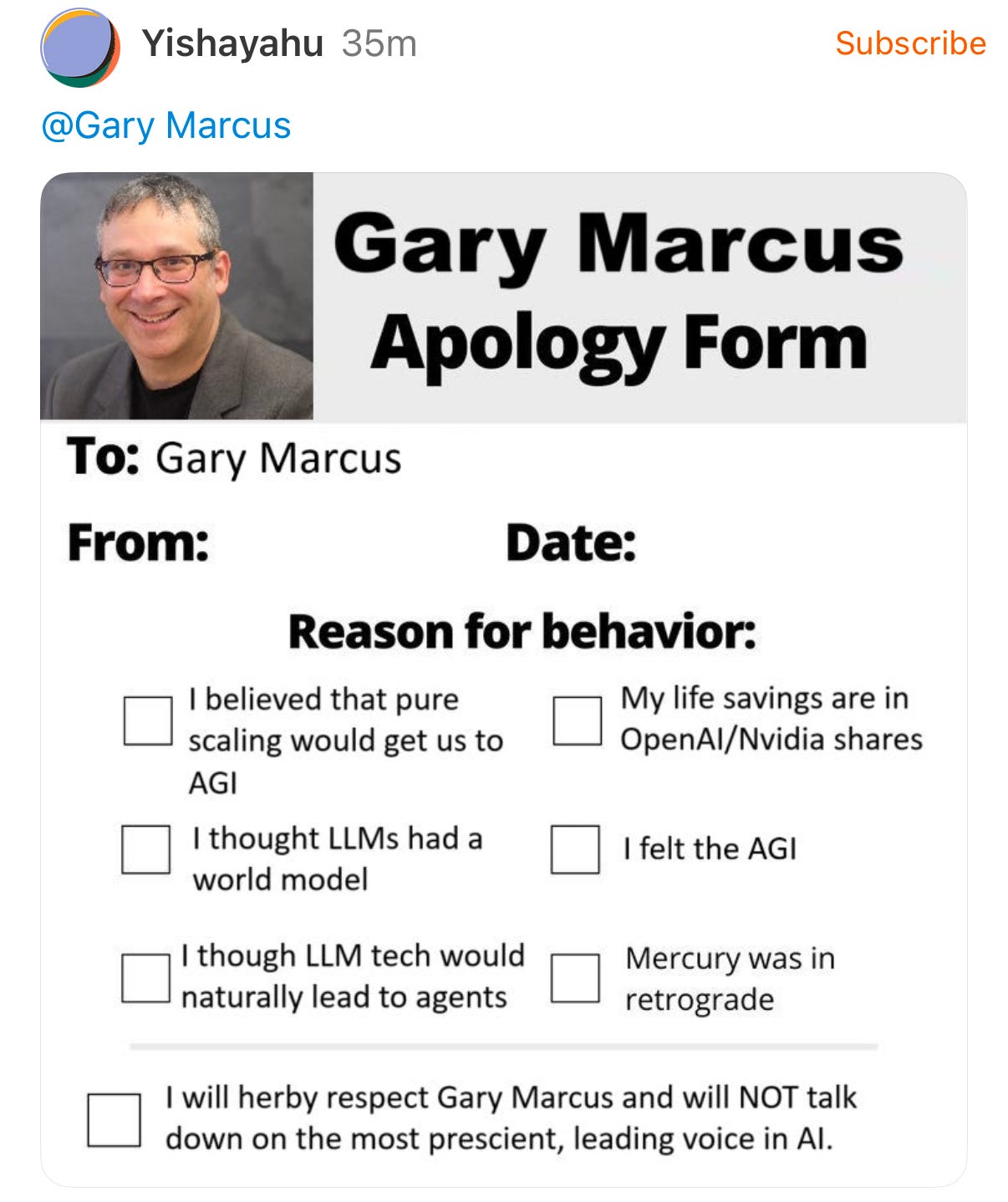

“Gary Marcus was right” memes, none of which reflect well on OpenAI, were everywhere. A reader of this Substack even went so far as to invent a hilarious Gary Marcus Apology Form.

I have rarely seen a double reversal of fortune (OpenAI down, me up) so swift.

All that said, I was never in reality “standing alone”; I surely took more heat than anyone else, but dozens if not hundreds of others spoke out against the scaling-über-alles hypothesis over the years. Indeed, although it did not get the press it deserved, a recent AAAI survey showed that the vast majority of academic AI researchers doubted that scaling would get us all the way to AGI. They were right.

At last this is more widely known.

§

I would never count Sam Altman fully out, and increasingly skeptical mainstream media coverage is not the same as, for example, a change in investor opinion dramatic enough to cause a bubble to deflate. Time will tell how this all plays out. But already some are wondering whether GPT-5’s disappointments could spark a new AI winter. There is a real sense in which GPT-5 is starting to look like Altman’s Waterloo. (Or if it is Moby Dick you prefer, GPT-5 may be his white whale.)

Three years of hype add up, and Altman simply could not deliver what he (over)promised. GPT-5 was his primary mission as a leader, and he couldn’t get there convincingly. In no way was it AGI. Some customers even wanted the old models back. Almost nobody felt satisfied.

This raises questions about the technology, about the company’s research prowess, and about Altman himself. Was he bullshitting all this time that he told us that the company knew how to build AGI?

Altman didn’t look a lot better telling CNBC that AGI “is not a super useful term” days after the disappointments of GPT-5, when he had been hyping AGI for years and, literally just a few days earlier, had claimed that GPT-5 was a “significant step along our path toward AGI”. Two years ago, many treated Altman like an oracle; in the eyes of many, Altman now looks more like a snake oil salesman.

And of course, it is not just about Altman; others have been stalking the same whale; and nobody has yet delivered. Models like Grok-4 and Llama-4 have also underwhelmed.

§

What’s the moral of this story?

Science is not a popularity contest; you can’t bully your way to truth. And you can’t make AI better if you drown out the critics and keep pouring good money after bad. Science simply cannot advance without people sticking to the truth even in the face of opposition.

The good news here is that science is self-correcting; new approaches will rise again from the ashes. And AGI (hopefully safe, trustworthy AGI) will eventually come. Maybe in the next decade.

But between the disappointments of GPT-5 and a new study from METR that shows that LLMs do markedly better on coding benchmarks than in real-world practice, I think it is safe to say that LLMs won’t lead the way. And at last that fact is starting to become widely understood.

As people begin to recognize that truth (the bitter lesson about the limits of Sutton’s The Bitter Lesson), scientists will start to chart new paths. One of those new paths may even give rise to what we so desperately need: AI that we can trust.

I can’t wait to see a whole new batch of discoveries, with minds at last wide open.

Gary Marcus appreciates the support of loyal readers of this newsletter, who stood behind him during darker times. Thank you!

![OpenAI’s Waterloo? [with corrections] – Marcus on AI](https://advancedainews.com/wp-content/uploads/2025/08/https3A2F2Fsubstack-post-media.s3.amazonaws.com2Fpublic2Fimages2F785d2d1c-dcf1-4296-8148-12286-1024x512.jpeg)