#transformer #nystromer #nystromformer

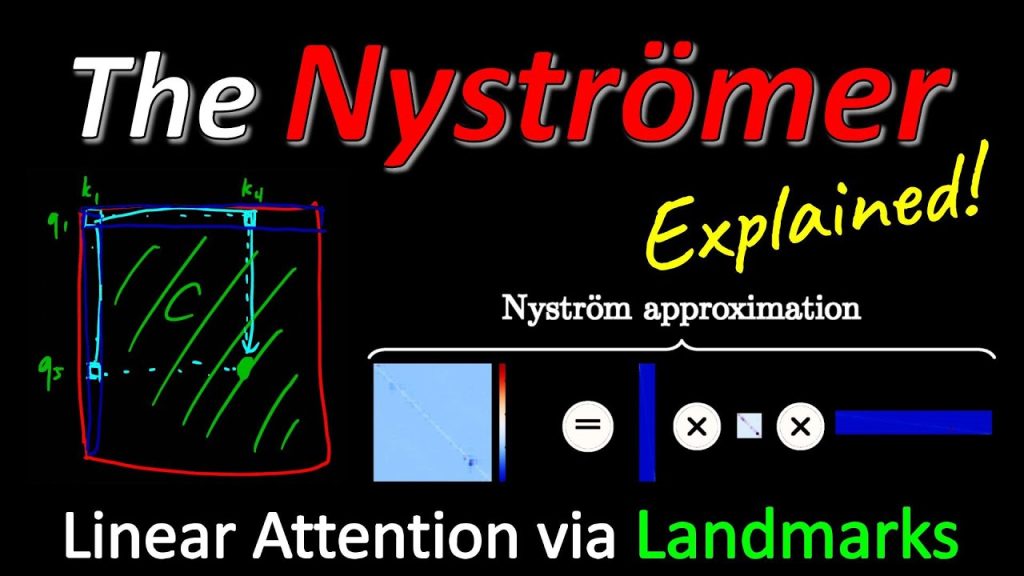

The Nyströmformer (also written Nystromformer, Nyströmer, or Nystromer) is a new drop-in replacement for Self-Attention in Transformers that approximates the attention matrix with linear memory and time requirements. Most importantly, it uses the Nyström method to pick a small set of so-called landmarks from the queries and keys (by subselecting them or, in practice, taking segment means) and uses those landmarks to reconstruct the inherently low-rank attention matrix. This is relevant for many areas of Machine Learning, especially Natural Language Processing, where it enables longer sequences of text to be processed at once.
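To make the landmark idea concrete, here is a minimal single-head NumPy sketch of attention reconstructed from segment-mean landmarks. It is an illustration under assumptions, not the authors' implementation: the function names (`segment_means`, `nystrom_attention`, `full_attention`) are made up for this example, the sequence length is assumed to be divisible by the number of landmarks, and `np.linalg.pinv` stands in for the iterative pseudoinverse approximation used in the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def segment_means(x, num_landmarks):
    # Split the n rows into num_landmarks contiguous segments and average each.
    # Assumption for this sketch: n is divisible by num_landmarks.
    n, d = x.shape
    assert n % num_landmarks == 0, "sequence length must be divisible by num_landmarks"
    return x.reshape(num_landmarks, n // num_landmarks, d).mean(axis=1)


def nystrom_attention(q, k, v, num_landmarks):
    # q, k, v: arrays of shape (n, d). Returns an (n, d) approximation of
    # softmax(q k^T / sqrt(d)) v without forming the n x n matrix.
    d = q.shape[-1]
    scale = 1.0 / np.sqrt(d)
    q_l = segment_means(q, num_landmarks)  # (m, d) landmark queries
    k_l = segment_means(k, num_landmarks)  # (m, d) landmark keys

    f = softmax(q @ k_l.T * scale)    # (n, m): queries vs landmark keys
    a = softmax(q_l @ k_l.T * scale)  # (m, m): landmark queries vs landmark keys
    b = softmax(q_l @ k.T * scale)    # (m, n): landmark queries vs keys

    # Multiply right to left so every intermediate is at most n x m or m x d.
    # Exact pseudoinverse here is a stand-in for the paper's iterative scheme.
    return f @ (np.linalg.pinv(a) @ (b @ v))


def full_attention(q, k, v):
    # Standard softmax attention, quadratic in n, for comparison.
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d)) @ v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, m = 512, 64, 32
    q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
    approx = nystrom_attention(q, k, v, num_landmarks=m)
    exact = full_attention(q, k, v)
    print("mean absolute error:", np.abs(approx - exact).mean())
```

The order of the matrix products is the important design choice: evaluating `b @ v` first keeps the largest intermediate at size n × m, which is where the linear scaling in sequence length comes from. Random Gaussian inputs as in the demo are not representative of trained attention, so the printed error says little about real-world quality.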

OUTLINE:

0:00 – Intro & Overview

2:30 – The Quadratic Memory Bottleneck in Self-Attention

7:20 – The Softmax Operation in Attention

11:15 – Nyström-Approximation

14:00 – Getting Around the Softmax Problem

18:05 – Intuition for Landmark Method

28:05 – Full Algorithm

30:20 – Theoretical Guarantees

35:55 – Avoiding the Large Attention Matrix

36:55 – Subsampling Keys vs Negative Sampling

43:15 – Experimental Results

47:00 – Conclusion & Comments

Paper:

Code:

Appendix:

LRA Results:

Twitter lucidrains w/ author:

Twitter lucidrains w/ _clashluke:

Abstract:

Transformers have emerged as a powerful tool for a broad range of natural language processing tasks. A key component that drives the impressive performance of Transformers is the self-attention mechanism that encodes the influence or dependence of other tokens on each specific token. While beneficial, the quadratic complexity of self-attention on the input sequence length has limited its application to longer sequences — a topic being actively studied in the community. To address this limitation, we propose Nyströmformer — a model that exhibits favorable scalability as a function of sequence length. Our idea is based on adapting the Nyström method to approximate standard self-attention with O(n) complexity. The scalability of Nyströmformer enables application to longer sequences with thousands of tokens. We perform evaluations on multiple downstream tasks on the GLUE benchmark and IMDB reviews with standard sequence length, and find that our Nyströmformer performs comparably, or in a few cases, even slightly better, than standard Transformer. Our code is at this https URL.
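As a rough back-of-the-envelope illustration of the scaling claim in the abstract, the following sketch counts how many matrix entries need to be stored for full attention versus the three smaller softmax matrices of a landmark-based approximation. The helper names and the choice of n = 4096 tokens with m = 64 landmarks are assumptions made for this example, and the count ignores any extra workspace for computing the pseudoinverse.

```python
def attention_entries(n):
    # Full self-attention stores one score per (query, key) pair.
    return n * n


def nystrom_entries(n, m):
    # Three smaller matrices: (n x m), (m x m), and (m x n).
    return n * m + m * m + m * n


if __name__ == "__main__":
    n, m = 4096, 64  # hypothetical sequence length and landmark count
    full = attention_entries(n)
    approx = nystrom_entries(n, m)
    print(f"full attention entries: {full}")
    print(f"landmark-based entries: {approx}")
    print(f"ratio: {full / approx:.1f}x")
```

With the number of landmarks held fixed, doubling the sequence length roughly doubles the second count while quadrupling the first.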

Authors: Yunyang Xiong, Zhanpeng Zeng, Rudrasis Chakraborty, Mingxing Tan, Glenn Fung, Yin Li, Vikas Singh


