HKU Business School today released the “Large Language Model (LLM) Hallucination Control Capability Evaluation Report.” The Report describes the evaluation of selected AI LLMs regarding their ability to control “hallucinations.” Hallucinations are when LLMs produce outputs that appear reasonable but are contradictory to facts or deviate from the context. Currently, LLMs are increasingly used in professional domains such as knowledge services, intelligent navigation, and customer service, but hallucinations have been limiting the credibility of LLMs.

This study was carried out by the Artificial Intelligence Evaluation Laboratory (https://www.hkubs.hku.hk/aimodelrankings_en), led by Professor Jack JIANG, Padma and Hari Harilela Professor in Strategic Information Management at HKU Business School. The research team conducted specialised assessments of the hallucination control capabilities of 37 LLMs, including 20 general-purpose models, 15 reasoning models, and 2 unified systems. The study aimed to reveal how effectively different models avoid factual errors and maintain contextual consistency.

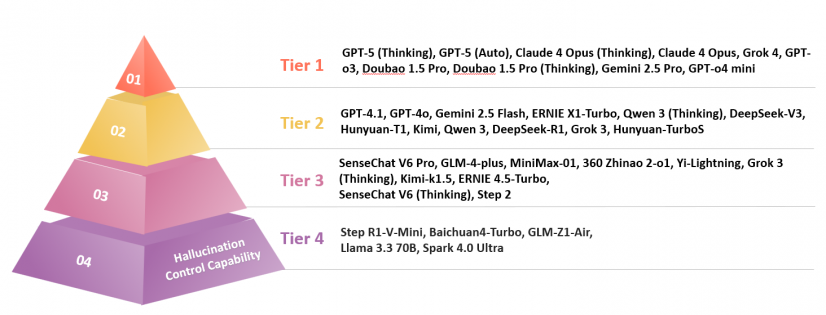

The Evaluation Result shows that GPT-5 (Thinking) and GPT-5 (Auto) ranked first and second place, respectively, with Claude 4 Opus series following closely behind. Among Chinese models, ByteDance’s Doubao 1.5 Pro series performed very well, but it still had significant gaps compared to the leading international LLMs.

Professor JIANG said, “Hallucination control capability, as a core metric for evaluating the truthfulness and reliability of model outputs, directly impacts the credibility of LLMs in professional settings. This research provides clear direction for future model optimisation and advancing AI systems from simply being ‘capable of generating’ outputs to being more reliable.”

Evaluation Methodology

Based on problems in LLM-generated content concerning factual accuracy or contextual consistency, the study categorises hallucinations into two types:

Factual Hallucinations: When a model’s output conflicts with real-world information, including incorrect recall of known knowledge (e.g., misattributions and data misremembering) or the generation or fabrication of unknown information (e.g., invented unverified events or data). The assessment involved detecting factual hallucinations through information retrieval questions, false-fact identification, and contradiction-premise identification tasks.

Faithful Hallucinations: When a model fails to strictly follow user instructions or produces content contradictory to the input context, including omitting key requirements, over-extensions, or formatting errors. The evaluation used instruction consistency and contextual consistency assessments.

Hallucination Control Performance and Rankings

From the study results, GPT-5 (Thinking) and GPT-5 (Auto) ranked first and second, respectively, with Claude 4 Opus series closely behind. The Doubao 1.5 Pro series from ByteDance performed best among the Chinese LLMs, showing balanced scores in factual and faithful hallucination control. However, their overall capabilities still lagged behind top international models like GPT-5 and the Claude series.

Rank

Model Name

Factual Hallucination

Faithful Hallucination

Final Score

1

GPT-5 (Thinking)

72

100

86

2

GPT-5 (Auto)

68

100

84

3

Claude 4 Opus (Thinking)

73

92

83

4

Claude 4 Opus

64

96

80

5

Grok 4

71

80

76

6

GPT-o3

49

100

75

7

Doubao 1.5 Pro

57

88

73

8

Doubao 1.5 Pro (Thinking)

60

84

72

9

Gemini 2.5 Pro

57

84

71

10

GPT-o4 mini

44

96

70

11

GPT-4.1

59

80

69

12

GPT-4o

53

80

67

12

Gemini 2.5 Flash

49

84

67

14

ERNIE X1-Turbo

47

84

65

14

Qwen 3 (Thinking)

55

76

65

14

DeepSeek-V3

49

80

65

14

Hunyuan-T1

49

80

65

18

Kimi

47

80

63

18

Qwen 3

51

76

63

20

DeepSeek-R1

52

68

60

20

Grok 3

36

84

60

20

Hunyuan-TurboS

44

76

60

23

SenseChat V6 Pro

41

76

59

24

GLM-4-plus

35

80

57

25

MiniMax-01

31

80

55

25

360 Zhinao 2-o1

49

60

55

27

Yi- Lightning

28

80

54

28

Grok 3 (Thinking)

29

76

53

29

Kimi-k1.5

36

68

52

30

ERNIE 4.5-Turbo

31

72

51

30

SenseChat V6 (Thinking)

37

64

51

32

Step 2

32

68

50

33

Step R1-V-Mini

36

60

48

34

Baichuan4-Turbo

33

60

47

35

GLM-Z1-Air

32

60

46

36

Llama 3.3 70B

33

56

45

37

Spark 4.0 Ultra

19

64

41

Table 1: Ranking of Hallucination Control Capability

The scores and rankings across the 37 models reveal significant differences, with distinct performance characteristics in controlling factual versus faithful hallucinations. Overall, current large models showed strong control over faithful hallucinations but still faced challenges in managing factual inaccuracies. This indicates a tendency among models to strictly follow instructions but they tend to fabricate facts.

Furthermore, reasoning models such as Qwen 3 (Thinking), ERNIE X1-Turbo and Claude 4 Opus (Thinking) are better at avoiding hallucinations compared to general-purpose LLMs. In the Chinese segment, Doubao 1.5 Pro was best with balanced performance in both factual and faithful hallucination controls, delivering strong hallucination management, though still trailing GPT-5 series and the Claude series in overall capabilities. In contrast, the DeepSeek series delivered relatively weaker hallucination control and has room for improvement.

Click here to view the complete “Large Language Model Hallucination Control Capability Evaluation Report.”

Moving forward, AI trustworthiness will require a balanced enhancement of control capabilities in both factual and faithful outputs, in order to produce more reliable content.