French AI lab Mistral has significantly upgraded its Le Chat chatbot, rolling out a powerful new “Deep Research” mode to directly challenge rivals like OpenAI and Google. The new feature, launched Thursday, transforms the assistant into a research partner that can plan, search the web, and generate structured, sourced reports.

The update also introduces a “Voxtral” voice mode for hands-free interaction and “Magistral” for advanced multilingual reasoning. With this launch, Mistral aims to win over both consumer and enterprise users, and it offers businesses that handle sensitive information a distinctive option: on-premises data integration.

This positions Le Chat not just as a model demo but as a full-stack contender in the AI productivity space. All new features are now live in Le Chat’s web and mobile apps across all tiers, from Free to Enterprise.

Deep Research Mode Enters a Crowded Field

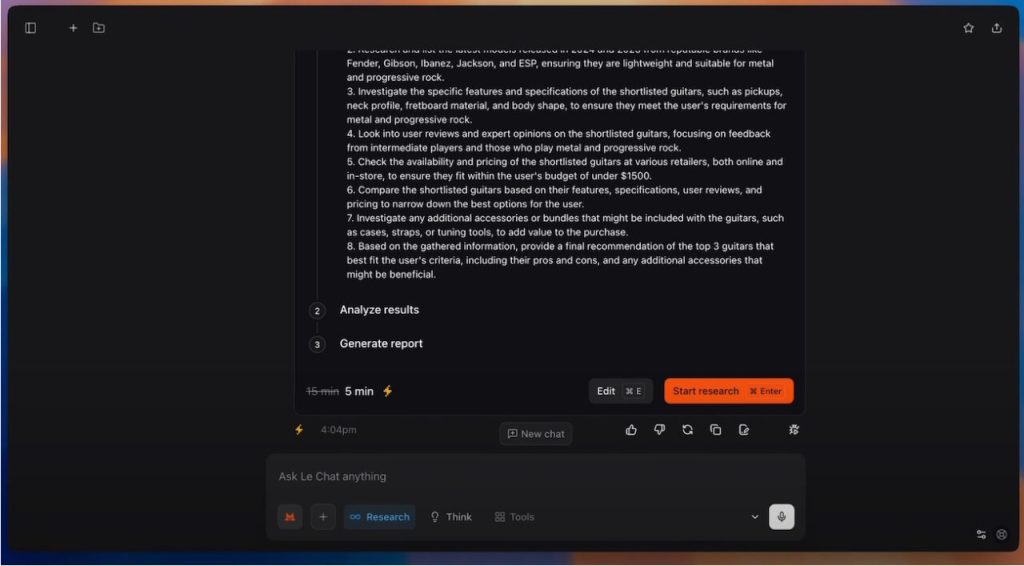

Mistral’s new Deep Research mode turns Le Chat into a coordinated research assistant. It breaks down complex questions, gathers credible sources, and builds a reference-backed report.

In practice, activating Deep Research shifts the experience from simple Q&A to collaboration. The AI agent first turns the query into a multi-step research plan, then executes that plan autonomously, searching a wide range of credible web sources to gather information.

The final output is not a simple chat response but a formal, structured report. Mistral’s examples show reports with an introduction, detailed sections with data tables, a summary, and a conclusion. Crucially, every key finding is backed by numbered citations, which makes the report transparent and verifiable.

This agentic approach is designed for “meaty” questions where a single search is insufficient. Mistral highlights use cases ranging from in-depth market analysis for enterprise work to exhaustive travel plans for consumers. The goal is a comprehensive overview that saves hours of manual work.

The launch places Mistral in a competitive arena that has matured rapidly in 2025. Google first brought a Deep Research mode to its Gemini assistant last year, and OpenAI followed in February with a Deep Research tool for ChatGPT Pro users. Perplexity AI then entered the fray with an accessible, low-cost alternative.

The competitive pressure has been relentless. In March, Google made Gemini Deep Research free for all users, and in April OpenAI responded by adding a lightweight research option to ChatGPT’s free tier. Against this backdrop, a Deep Research offering had become a necessity for Mistral to stay competitive.

While Mistral catches up, its rivals continue to advance. OpenAI has added a GitHub connector to its tool for code analysis, and Google is adding file upload capabilities and a more powerful “Deep Search” for subscribers.

A Full Productivity Suite: From Voice to Organization

Beyond research, the update delivers a suite of productivity tools designed to create a seamless workflow. A new voice mode, powered by the recently released Voxtral audio model, lets users interact with Le Chat by speaking naturally.

Le Chat now also draws on Mistral’s Magistral reasoning models, launched in June, to power multilingual reasoning directly within the chatbot. This lets it handle complex queries in languages such as French, Spanish, and Japanese, and even code-switch mid-sentence, a significant step toward a more globally accessible and intuitive AI assistant.

Magistral represents Mistral’s strategic entry into the advanced reasoning space, executed with the company’s signature dual-release approach. For the open-source community, Mistral released Magistral Small, an efficient 24-billion-parameter model under the permissive Apache 2.0 license. It is designed to run on consumer-grade hardware, making it widely accessible to developers and researchers.

For corporate clients, Mistral offers the more powerful Magistral Medium. This proprietary model is marketed with features like “traceable reasoning,” a key capability for compliance in regulated industries such as finance and law. The company emphasizes that its models are built from the ground up on its own infrastructure rather than distilled from rival AIs, an approach it claims improves generalization.

While Magistral marks a significant step for the company, initial benchmarks revealed a performance gap relative to competitors’ top-tier reasoning models. For instance, its score on AIME 2024, a math competition benchmark, lagged behind newer models from rivals. In response, Mistral is focusing on other strengths, such as its multilingual reasoning and a “Flash Answers” feature in Le Chat that prioritizes speed over raw power.

To help users manage these new capabilities, Mistral has also introduced “Projects,” which lets users group related chats, files, and settings into focused workspaces so that long-running tasks stay organized. The update is rounded out with advanced in-chat image editing that preserves characters and details consistently across multiple images.

The Enterprise Edge: On-Premises Data and Sovereignty

Mistral is clearly targeting the enterprise market, with a strategy that plays to its strengths as a European AI leader. The company emphasizes its ability to connect Le Chat to a company’s internal data on-premises, a critical differentiator from cloud-native rivals like OpenAI and Google and a core part of its value proposition for sectors such as banking, defense, and government.

This focus on data sovereignty aligns with Mistral’s broader “AI for Citizens” initiative, which helps public institutions build their own secure AI solutions. Elisa Salamanca, Mistral’s head of product, confirmed this direction, saying, “we’re building these connectors internally because we believe this is going to be a key for using Le Chat as a productivity enabler in the business context.”

This strategy reinforces the independent identity cultivated by CEO Arthur Mensch, who famously declared, “we are not for sale.” The approach appears to resonate with those wary of Big Tech dominance and its pricing. Perplexity AI’s CEO, Aravind Srinivas, has previously criticized the high cost of enterprise AI, arguing, “Knowledge should be universally accessible and useful. Not kept behind obscenely expensive subscription plans that benefit the corporates, not in the interests of humanity!” Mistral’s blend of open-source models and secure enterprise solutions offers a compelling alternative in a rapidly evolving market.