Mistral AI, the French rival to OpenAI, DeepSeek and others, has introduced a new enterprise coding assistant named ‘Mistral Code’. The product marks Mistral AI’s foray into the highly competitive software development market, where it will compete with Microsoft’s GitHub Copilot and other Silicon Valley rivals.

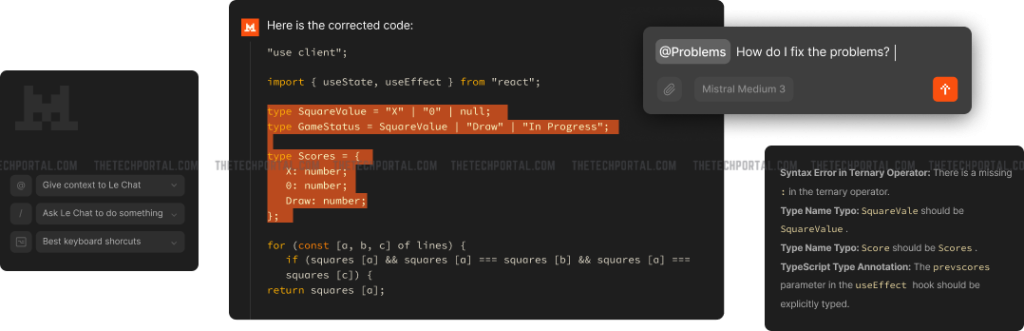

Mistral Code combines the company’s AI models with integrated development environment (IDE) plugins and offers on-premise deployment options. This design addresses the security and data-handling requirements of large enterprises. The company states that its offering provides a degree of customization and data sovereignty not widely available in existing coding assistants. Mistral Code uses four AI models: Codestral handles code completion, Codestral Embed handles code search and retrieval, Devstral aids multi-task coding workflows, and Mistral Medium provides conversational assistance with programming queries. The system supports over 80 programming languages and can process various forms of developer input, including files, Git diffs, terminal output, and issues from issue tracking systems.

“Software engineering teams in enterprises can finally bring frontier-grade AI coding into their workflow in a secure, compliant manner. Mistral Code is an AI-powered coding assistant that bundles powerful models, an in-IDE assistant, local deployment options, and enterprise tooling into one fully supported package, so developers can 10X their productivity with the full backing of their IT and security teams. Mistral Code builds on the proven open-source project Continue, reinforced with the controls and observability that large enterprises require; private beta is open today for JetBrains IDEs and VSCode. Mistral Code is a continuation of our efforts to make developers successful with AI, following last month’s releases of Devstral and Codestral Embed,” the startup announced in an official statement.

Baptiste Rozière, a research scientist at Mistral AI and a former Meta researcher involved in the development of the Llama language model, indicated that key features include enhanced customization capabilities and the ability to serve models directly on customer hardware. This allows for specialization of AI models to a client’s specific codebase, which can improve the relevance of code completions for unique workflows. Deploying the entire AI stack within a company’s own infrastructure ensures that proprietary code remains on corporate servers, addressing data confidentiality and security standards.

The launch targets barriers to enterprise AI adoption that Mistral identified in a survey, which revealed four recurring challenges: limited connectivity to proprietary code repositories, insufficient model customization, restricted task coverage for complex programming workflows, and fragmented service-level agreements across multiple technology vendors. Mistral Code aims to resolve these concerns through a “vertically-integrated offering.” This package includes AI models, IDE plugins, administrative controls, and continuous support under a single contract. The platform builds upon the open-source “Continue” project but adds enterprise-grade features such as granular role-based access control, comprehensive audit logging, and detailed usage analytics. A private beta for Mistral Code is currently available for JetBrains development platforms and Microsoft’s VS Code.