It is now a well-known fact in the datacenters of the world, which are trying to cram ten pounds of power usage into a five pound bit barn bag, that liquid cooling is an absolute necessity if the density of high performance computing systems is to be increased to drive down latency between components and therefore drive up performance.

Air cooling using zillions of tiny fans and hot aisle containment to make the datacenter more efficient no longer cuts it, for many different reasons. Let's just compare water and air as cooling media to get a sense of it; obviously there are also dielectric liquids, which do not conduct electricity, that have their own advantages over water in some liquid cooling systems. When it comes to heat conduction, water is around 23X better than air at conducting heat, and it can absorb more than 4X the heat per unit of mass. Because water is also around 830X denser than air at room temperature, a given volume of water can soak up roughly 3,500X as much heat as the same volume of air. For heat convection, depending on the technology, and specifically for the kind of forced convection used in current cold plate technologies (as well as in the thermal conduction modules that IBM created for its mainframe processors four decades ago, and including all of those whining fans blowing air over components), water can be 10X to 100X better at cooling components. (See The Engineering ToolBox if you want to get into the particulars.)
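The ratios above fall out of a few textbook property values. This minimal sketch uses rounded figures for water and dry air near room temperature (our assumption; the exact values shift a bit with temperature and pressure) and shows that it is the density gap that makes water so much better per unit of volume.

```python
# Rounded properties of water and dry air near room temperature (about 20 to 25 C, 1 atm).
# These are approximate textbook values, used only to reproduce the ratios in the text.
WATER = {"k": 0.60, "cp": 4182.0, "rho": 998.0}  # W/(m*K), J/(kg*K), kg/m^3
AIR = {"k": 0.026, "cp": 1005.0, "rho": 1.20}


def ratio(prop: str) -> float:
    """How many times larger the water value is than the air value."""
    return WATER[prop] / AIR[prop]


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat absorbed per cubic meter per kelvin of temperature rise, J/(m^3*K)."""
    return fluid["rho"] * fluid["cp"]


conduction = ratio("k")   # thermal conductivity
per_mass = ratio("cp")    # specific heat, i.e. heat absorbed per unit of mass
density = ratio("rho")
per_volume = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)

print(f"thermal conductivity:     {conduction:.0f}X")
print(f"heat per unit of mass:    {per_mass:.1f}X")
print(f"density:                  {density:.0f}X")
print(f"heat per unit of volume:  {per_volume:.0f}X")
```

The per-volume figure is simply the per-mass figure multiplied by the density ratio, which is why density, rather than specific heat alone, dominates when you are trying to push heat through a small channel.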

To put it succinctly: Water might suck, but air blows.

Liquid cooling takes less energy to run (pumps versus fans) and removes heat faster. And, as it turns out, when you get very precise with liquid cooling using microfluidics (literally the technology of creating tiny fluid management systems, which is common in the biosciences and might become common for high wattage computing elements in the near term), you can cool chips more reliably and run them at higher speeds.

Microsoft is catching all the press this week for its microfluidics research and development, which we presume it very much wants to add to its homegrown "Maia" family of XPU accelerators for AI workloads as well as to its "Cobalt" family of Arm server CPUs. The blog post that Big Bill Satya put out this week outlining its microfluidics chip cooling efforts (in the broadest of senses) did not confirm this, but it did remind everyone that cold plate cooling, which has brought the IT industry pretty far, is not the end of the innovation road. That is a good thing, because air cooling as we knew it tops out at around 15 kilowatts per rack, and liquid cooling as we are doing it today is somewhere around 145 kilowatts per rack, in a world that is trying to push up to maybe 1 megawatt per rack by 2030.
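To put those rack densities in coolant terms, a quick energy balance helps: the heat a coolant stream carries away is its mass flow times its specific heat times its temperature rise. The sketch below is a back-of-the-envelope calculation under our own assumptions (a 10 K inlet-to-outlet rise, rounded room-temperature properties, and nothing else a real system has to deal with) that estimates how much air or water each class of rack would need.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    cp: float   # specific heat, J/(kg*K)
    rho: float  # density, kg/m^3


WATER = Coolant("water", cp=4182.0, rho=998.0)
AIR = Coolant("air", cp=1005.0, rho=1.20)


def volumetric_flow(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_w watts away when the
    coolant warms by delta_t_k kelvin, from Q = m_dot * cp * dT."""
    mass_flow = heat_w / (coolant.cp * delta_t_k)  # kg/s
    return mass_flow / coolant.rho


DELTA_T = 10.0  # assumed inlet-to-outlet coolant temperature rise, K

racks = [("air-cooled rack", 15e3), ("liquid-cooled rack", 145e3), ("2030 target rack", 1e6)]
for label, watts in racks:
    air = volumetric_flow(watts, AIR, DELTA_T)                # m^3/s
    water = volumetric_flow(watts, WATER, DELTA_T) * 60_000   # converted to L/min
    print(f"{label:>18}: {watts / 1e3:6.0f} kW -> air {air:6.2f} m^3/s, water {water:7.1f} L/min")
```

Under these assumptions a 145 kilowatt rack needs roughly 200 liters of water per minute, versus about 12 cubic meters of air every second, which is the whole argument for liquid in one line.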

You read that right. What used to be a datacenter is now a rack.

Big Blue Leads The Way

For many technologies that we commonly use (whether we know it or not), the hyperscalers have been great innovators. But when it comes to chip cooling, IBM’s genius for electromechanical devices and clever packaging is the foundation on which today’s microfluidics research is based.

Way back in June 2008, IBM's Zurich Research Laboratory was showing off 3D packages of chips and memory that had water cooling both vertically and horizontally within those stacks. And in April 2013, the US Defense Advanced Research Projects Agency, which used to fund a lot more research and development related to high performance computing, tapped Big Blue to participate in its Intra Chip Enhanced Cooling (ICECool) project. We have a memory wall in the datacenter, but we also have a cooling wall: circuits are getting smaller and running at higher clock speeds (thereby increasing heat density) faster than cooling technologies can keep up. With ICECool, IBM came up with ways of manufacturing 3D chip stacks with integrated water cooling.

IBM Zurich is still promoting the embedded liquid cooling technologies it developed a decade ago, which you can check out here. As far as we know, none of the datacenter products we think about every day have ever used any of them. (Military uses may abound and be secret, of course.) For all we know, Microsoft or others have licensed some of IBM's technology. We found a paper that surveyed the state of microfluidics cooling for chips as of June 2018, and the field it described was pretty thin. An overview of the ICECool work that was done between 2012 and 2015 was published in October 2021. Georgia Tech is a hotbed of microfluidic cooling research, and it worked with Microsoft on a project to mod an Intel Core i7-8700 CPU with microfluidic pins so it could be cooled directly and overclocked. Researchers at the Watson School of Engineering (named after IBM's founder, Thomas Watson) at Binghamton University in New York, just down the road from Endicott, where Big Blue was founded in 1911, did a survey of embedded cooling technologies in an October 2022 paper. Researchers at Meta Platforms, KLA Instruments, SEMI, and other institutions looked at the need for microfluidics and its challenges in a paper that came out in June 2024.

By the way, if you think Darwin Microfluidics or Parallel Fluidics are doing chip cooling, they are not – they provide microfluidics components and design tools for the life sciences market.

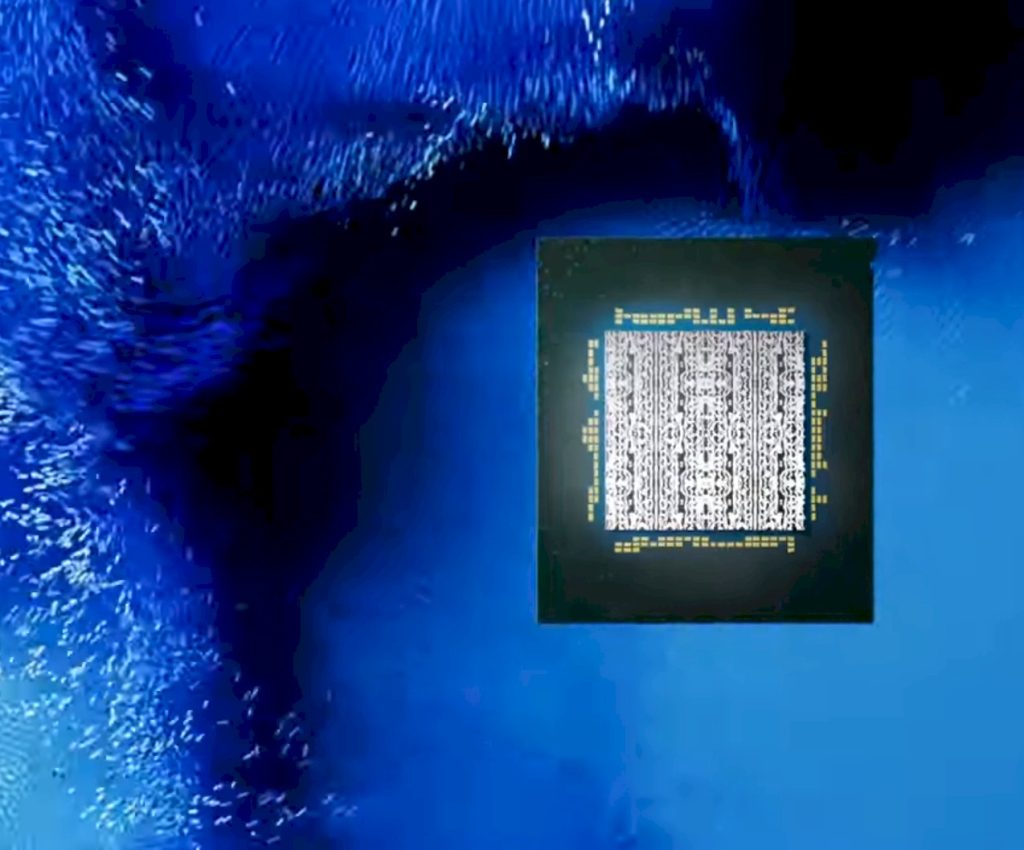

To create its microfluidics chip cooling prototype (the package is shown above, and the coolant conduits etched into the chip are shown in the feature image at the top of this story), Microsoft worked with Corintis. The company has created an AI-infused design tool called Glacierware that maps the tubules of liquid cooling etched into chips so they deliver more coolant to hot spots and less to other areas of the chip, rather than relying on the brute force, linear cooling tracks used in cold plates and other kinds of direct liquid cooling.

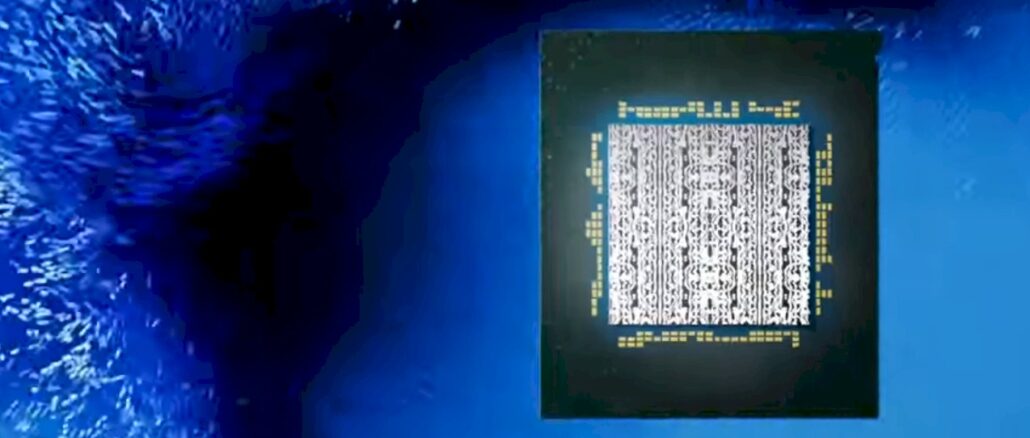

This image shows multiple cooling layers being overlaid on top of the research chip Microsoft created, which the company did not identify:

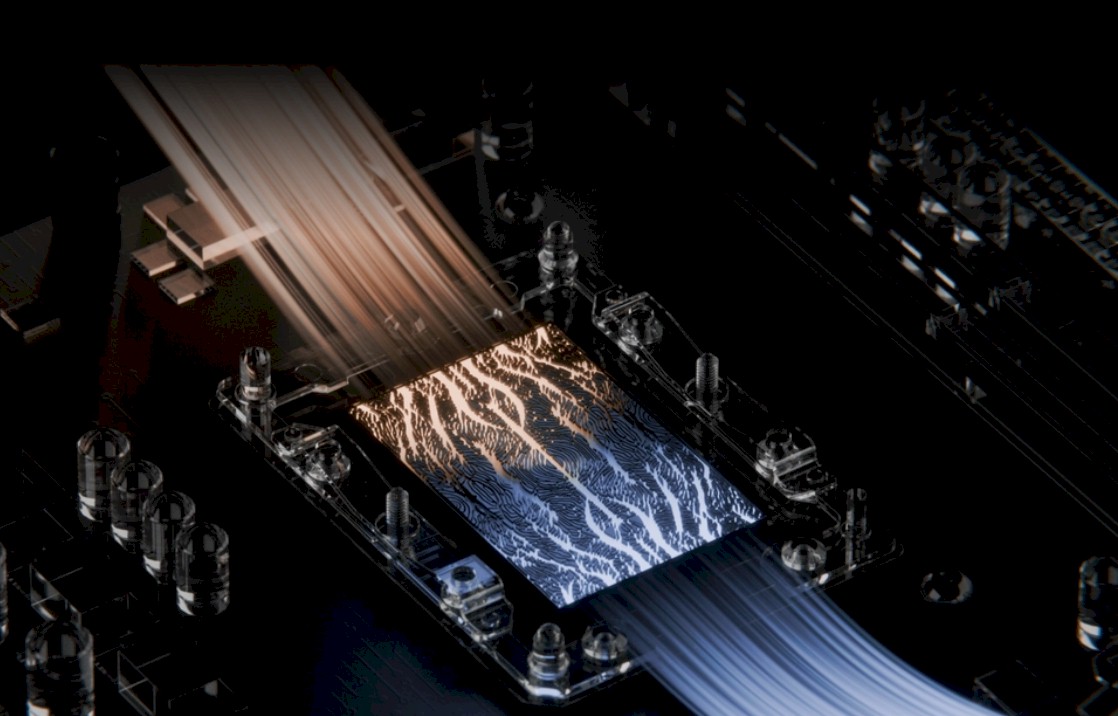

And this image from Corintis shows how the cold coolant, shown in blue, flows through the microfluidic channels and then takes the heat away in the channels at the top of the chip, shown in red:

The image of the chip and its fluid flows does not do it justice, but this image from Microsoft does:

Corintis is based in Lausanne, Switzerland, a mere three-hour drive southwest of IBM's Zurich lab. Both sit on lakes at the foot of the Alps (the former on Lake Geneva, the latter on Lake Zurich), so maybe there is something in the water near those snow-capped Alpine peaks that makes researchers think about water cooling.

The company was founded in January 2022 by Remco van Erp, its chief executive officer, who earned his PhD at École Polytechnique Fédérale de Lausanne (EPFL), one of the two Swiss Federal Institutes of Technology. Elison Matioli, a professor of electrical engineering at EPFL and director of its PowerLab, which specializes in research on power, thermal management, and radio frequency electronics, is also a co-founder. Sam Harrison, formerly a product manager at Swiss satellite operator Astrocast, which provides satellite IoT communications, is the third co-founder and the company's chief operating officer.

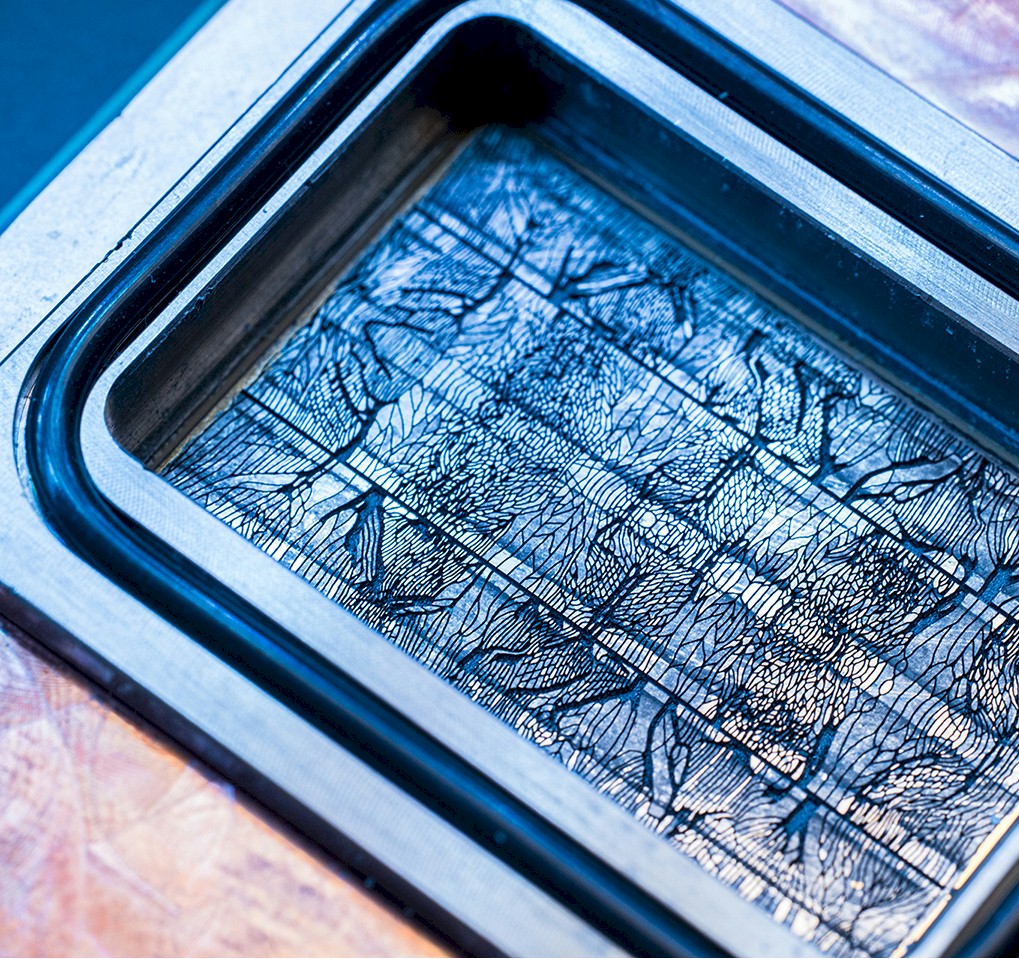

Corintis published a paper about Glacierware and microfluidic cooling on a theoretical chip back in August 2024, and it has also created a white paper to describe what it does. The cooling channel designs emerge iteratively, and, this being Philosophical Phriday and all here at The Next Platform, they bear a resemblance to the Shroud of Turin. Take a look:

This microfluidics cooling technique looks organic, and that is no accident. The brains in our heads have fat-wrapped neurons immersed in a water-cooled noggin (that fluid also provides shock absorption), and neither that water nor the blood supplying the power can be allowed to short out the neurons.

So how did the microfluidics do? It is early days, but the technology looks promising, according to Microsoft and Corintis.

Today's heat sinks can be extruded, bonded and folded, or brazed folded metal, but the best ones are skived, a word that is new to us. Skiving means shaving very thin fins out of a single block of metal and bending each one up vertically, so the whole heat sink is one piece of metal and therefore more thermally efficient. Cold plates can be skived out of a single piece of metal, too.

In general, says Corintis, the microfluidics approach can deliver greater cooling with lower coolant flow rates. Importantly, it can also deliver more cooling to hot spots on the chip, instead of using the geometrically linear approach of standard cold plates, which doesn't really respect hot spots (or cold spots, for that matter) at all. When a spot gets hot, the chip has to clock down to keep from burning up. With microfluidics, you can precisely cool a hot spot, so you can overclock the chip compared to its baseline.
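Why matching coolant to hot spots helps can be shown with a deliberately crude model. The sketch below is our own toy, not Corintis's Glacierware method: it splits a hypothetical chip into five zones with one hot spot, considers only the energy balance (each zone's coolant rise is its heat divided by its flow times specific heat), and ignores convection coefficients, conduction between zones, and pressure. It compares a uniform, cold plate style split of a fixed total flow with a split weighted by each zone's heat load.

```python
CP_WATER = 4182.0  # specific heat of water, J/(kg*K)


def max_coolant_rise(heat_w: list[float], flow_kg_s: list[float]) -> float:
    """Worst coolant temperature rise across zones, dT_i = q_i / (m_i * cp)."""
    return max(q / (m * CP_WATER) for q, m in zip(heat_w, flow_kg_s))


def uniform_split(total_flow: float, n: int) -> list[float]:
    """Cold plate style allocation: the same flow through every zone."""
    return [total_flow / n] * n


def heat_weighted_split(total_flow: float, heat_w: list[float]) -> list[float]:
    """Hot-spot-aware allocation: flow proportional to each zone's heat load."""
    total_heat = sum(heat_w)
    return [total_flow * q / total_heat for q in heat_w]


zones = [50.0, 50.0, 200.0, 50.0, 50.0]  # hypothetical per-zone heat in W; the middle zone is the hot spot
flow = 0.01                              # hypothetical total coolant flow, kg/s

uniform = max_coolant_rise(zones, uniform_split(flow, len(zones)))
targeted = max_coolant_rise(zones, heat_weighted_split(flow, zones))

# Total flow the heat-weighted layout needs to match the uniform layout's worst-case rise.
matching_flow = sum(zones) / (CP_WATER * uniform)

print(f"worst-case rise, uniform flow:  {uniform:.1f} K")
print(f"worst-case rise, targeted flow: {targeted:.1f} K")
print(f"targeted layout matches uniform at {matching_flow / flow:.0%} of the flow")
```

Even in this toy, steering flow toward the hot spot cuts the worst-case rise by more than half, or alternatively delivers the same worst case with well under half the flow, which is the shape of the "more cooling with lower flow rates" claim.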

This is an important idea for the future of compute engines in the datacenter.

With the Corintis prototype, the microfluidics on the test chip allowed the intake fluid temperature to be dropped by 13 percent, and the pressure drop across the fluidics was 55 percent lower than with standard cold plates using parallel microchannels.
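A lower pressure drop matters because pump work scales with it: hydraulic pumping power is the pressure drop times the volumetric flow, divided by the pump's efficiency. This sketch assumes the 55 percent reduction holds at the same flow rate (the comparison conditions were not spelled out), and the flow, baseline pressure drop, and pump efficiency are hypothetical round numbers.

```python
def pump_power_w(pressure_drop_pa: float, flow_m3_s: float, pump_efficiency: float) -> float:
    """Hydraulic pumping power: pressure drop times volumetric flow, over pump efficiency."""
    return pressure_drop_pa * flow_m3_s / pump_efficiency


FLOW = 1.5 / 60_000    # hypothetical 1.5 L/min per chip, converted to m^3/s
EFFICIENCY = 0.5       # hypothetical pump efficiency
COLD_PLATE_DP = 50e3   # hypothetical cold plate pressure drop, Pa
REDUCTION = 0.55       # the 55 percent lower pressure drop, assumed at equal flow

cold_plate = pump_power_w(COLD_PLATE_DP, FLOW, EFFICIENCY)
microfluidic = pump_power_w(COLD_PLATE_DP * (1 - REDUCTION), FLOW, EFFICIENCY)

print(f"cold plate:   {cold_plate:.2f} W of pump power per chip")
print(f"microfluidic: {microfluidic:.2f} W of pump power per chip")
```

A couple of watts per chip is small, but at a fixed flow the saving is linear in the pressure drop, so it multiplies straight across every accelerator in a rack and every rack in a datacenter.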

Looking further out, Corintis says that its approach to custom microfluidics can drive a 10X improvement in chip cooling (we are not certain what metric this is gauged with). Microsoft is being more conservative and has said that microfluidics as embodied in its test chip could cool chips "up to three times better." (We think Microsoft tested a CPU, given that it was simulating Teams conferencing software on its test chip.) To be precise, Microsoft said that microfluidics was up to 3X better at removing heat than cold plates, depending on the workload and configuration of the system, and that on a GPU (which was not named) it reduced the maximum temperature rise of the silicon running a workload by 65 percent.
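Percentage claims about chip temperature depend on the baseline. We read the 65 percent figure as a cut in the rise of the silicon temperature above the coolant, since a 65 percent cut in absolute degrees would put the chip below its own coolant. The sketch below, using a hypothetical 30 C coolant inlet and a hypothetical 50 K baseline rise, compares that reading with "3X better heat removal" interpreted as one-third the thermal resistance at the same power.

```python
COOLANT_IN_C = 30.0     # hypothetical coolant inlet temperature, C
BASELINE_RISE_K = 50.0  # hypothetical peak silicon rise above the coolant with a cold plate, K


def peak_temp_c(rise_k: float) -> float:
    """Peak silicon temperature: coolant inlet temperature plus the rise above it."""
    return COOLANT_IN_C + rise_k


baseline = peak_temp_c(BASELINE_RISE_K)
rise_cut_65 = peak_temp_c(BASELINE_RISE_K * (1 - 0.65))  # 65 percent smaller rise
three_x = peak_temp_c(BASELINE_RISE_K / 3)               # one-third the thermal resistance, same power
absolute_cut_65 = baseline * (1 - 0.65)                  # the implausible reading, in degrees C

print(f"cold plate baseline:       {baseline:.1f} C")
print(f"65 percent smaller rise:   {rise_cut_65:.1f} C")
print(f"3X better heat removal:    {three_x:.1f} C")
print(f"65 percent of absolute C:  {absolute_cut_65:.1f} C (below the {COOLANT_IN_C:.0f} C coolant)")
```

Read as temperature rise, the two Microsoft figures land in the same neighborhood, since 35 percent of the rise is close to one-third of it.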

Considering that chips are separated from cold plates by layers of stuff, while microfluidics etches channels directly into the back of the silicon and runs liquid through them, it stands to reason that this approach is better. Because the cooling is so efficient (it really is direct liquid cooling), the coolant used in microfluidics does not have to be chilled as much to keep the chip within its limits, which also lowers energy consumption. And, as IBM has shown, you can interleave microfluidics with layers of silicon, such as compute, cache, and main memory, stacked in a single complex.
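The "layers of stuff" argument is a series thermal resistance argument: every layer between the transistors and the coolant adds resistance, and the temperature rise is the chip's power times the total. The per-layer values below are hypothetical, chosen only to show the structure, and we hold the convective term fixed for simplicity even though it would change in practice. In the microfluidic case, the coolant runs through channels in the chip itself, so the interface materials, lid, and plate drop out of the path.

```python
# Hypothetical per-layer thermal resistances in K/W, for illustration only.
COLD_PLATE_STACK = {
    "silicon": 0.010,
    "TIM1 (die to lid)": 0.010,
    "lid / heat spreader": 0.005,
    "TIM2 (lid to cold plate)": 0.010,
    "cold plate to coolant": 0.020,
}
# Coolant flows through channels in the chip itself, so the TIMs, lid, and plate disappear.
MICROFLUIDIC_STACK = {
    "silicon": 0.010,
    "channel wall to coolant": 0.020,
}

POWER_W = 1000.0  # hypothetical accelerator power, W


def temperature_rise_k(stack: dict[str, float], power_w: float) -> float:
    """Layers in series: resistances add, and rise = power * total resistance."""
    return power_w * sum(stack.values())


for name, stack in [("cold plate", COLD_PLATE_STACK), ("microfluidic", MICROFLUIDIC_STACK)]:
    print(f"{name:>12}: {temperature_rise_k(stack, POWER_W):5.1f} K rise at {POWER_W:.0f} W")
```

Because the rise scales linearly with power, every layer removed buys headroom that can be spent either on a warmer coolant or on higher clocks.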

The question you might ask, then, is: How much cost will microfluidics cooling add?

The question to ask back is: Well, is it less than the cost of underclocking a $50,000 GPU? Our guess is yes, it is.