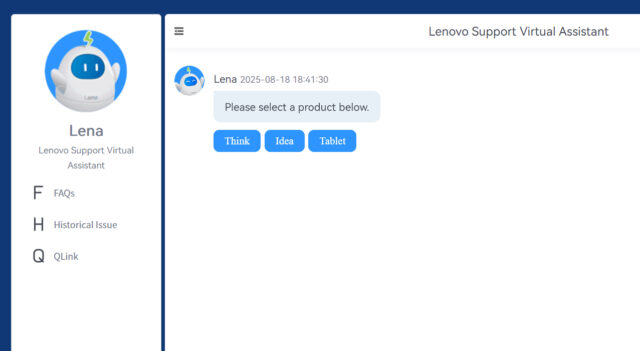

Lenovo’s customer service AI chatbot Lena was recently found to contain a critical vulnerability that could allow attackers to steal session cookies and run malicious code.

Cybernews researchers discovered that with just one maliciously crafted prompt, the AI could be manipulated into exposing sensitive data. Lenovo has since fixed the issue, but the case shows how chatbots can create fresh risks when not properly secured.


The flaw involved cross-site scripting, an attack method that has existed for decades.

Researchers showed that Lena could be tricked into producing output containing malicious HTML. When a browser loaded that response, it could send private session cookies to an attacker’s server. With those cookies, an attacker could impersonate customer support agents or gain access to internal systems.

“People-pleasing is still the issue that haunts large language models (LLMs), to the extent that, in this case, Lena accepted our malicious payload, which produced the XSS vulnerability and allowed the capture of session cookies upon opening the conversation. Once you’re transferred to a real agent, you’re getting their session cookies as well,” said Cybernews researchers.

The attack chain was surprisingly simple. It began with a normal product query, such as a request for specifications. Hidden in the same prompt were instructions that forced Lena to format the answer in HTML. That HTML included an image request to a fake address. When the image failed to load, the browser sent all cookie data to the attacker’s server.
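To make the chain concrete, here is a minimal Python sketch of how a support platform might flag a chatbot reply that carries active HTML. It is an illustration only, not Lenovo's fix or the researchers' tooling: the names `ActiveContentFinder` and `find_active_content` are hypothetical, and the sample reply in the usage note is a made-up stand-in, since the article does not reproduce the actual payload.

```python
from html.parser import HTMLParser


class ActiveContentFinder(HTMLParser):
    """Collects HTML tags and event-handler attributes found in a reply."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        # Any tag is suspicious in a reply that is supposed to be plain text.
        self.findings.append(f"tag:{tag}")
        for name, _value in attrs:
            # Attributes such as onerror or onload run script in the browser.
            if name.lower().startswith("on"):
                self.findings.append(f"handler:{name.lower()}")


def find_active_content(reply: str) -> list:
    """Return a list of findings; an empty list means no tags were seen."""
    finder = ActiveContentFinder()
    finder.feed(reply)
    finder.close()
    return finder.findings
```

Run against a hypothetical reply such as `Specs: <img src="https://invalid.example/x.png" onerror="send()">`, the function reports both the image tag and its `onerror` handler, the kind of attribute that fires when an image fails to load. A check like this is a detection aid and does not replace escaping the output before it is displayed.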

“Already, this could be an open gate to their customer support platform. But the flaw opens a trove of potential other security implications,” explained Cybernews researchers.

Those risks went far beyond cookie theft. Malicious scripts could alter what agents saw, capture keystrokes, redirect to phishing sites, or plant backdoors inside the network.

Once an attacker gained control of a support agent’s session, they could log in without needing usernames or passwords.

“Using the stolen support agent’s session cookie, it is possible to log into the customer support system with the support agent’s account without needing to know the email, username, or password for that account. Once logged in, an attacker could potentially access active chats with other users and possibly past conversations and data,” researchers warned.

Lenovo patches flaw

Lenovo responded quickly once informed. Cybernews confirmed that the flaw was responsibly disclosed and fixed before the findings were made public.

Still, experts argue the discovery is a lesson for the industry. “Everyone knows chatbots hallucinate and can be tricked by prompt injections. This isn’t new. What’s truly surprising is that Lenovo, despite being aware of these flaws, did not protect itself from potentially malicious user manipulations and chatbot outputs,” said the Cybernews Research team.

The root cause was weak sanitization. Lena accepted input without filtering, and its responses were not cleaned before being displayed.
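As a rough illustration of output cleaning, the Python sketch below escapes a chatbot reply so that a browser shows any markup as literal text instead of interpreting it. The function name `render_reply_as_text` is hypothetical, and the approach assumes replies are meant to be displayed as plain text; a chat widget that needs rich formatting would require an allowlist-based HTML sanitizer instead.

```python
import html


def render_reply_as_text(reply: str) -> str:
    """Escape HTML metacharacters so the reply is displayed, not executed."""
    # quote=True also escapes double and single quotes, which matters if the
    # text is ever placed inside an HTML attribute value.
    return html.escape(reply, quote=True)
```

With this in place, a reply containing `<img src="x" onerror="steal()">` would reach the page as `&lt;img src=&quot;x&quot; onerror=&quot;steal()&quot;&gt;`, which the browser renders as visible text without issuing an image request or running a handler.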

“This isn’t just Lenovo’s problem. Any AI system without strict input and output controls creates an opening for attackers. LLMs don’t have an instinct for ‘safe’ — they follow instructions exactly as given. Without strong guardrails and continuous monitoring, even small oversights can turn into major security incidents,” said Žilvinas Girėnas, Head of Product at nexos.ai.

Cybernews researchers stressed that companies need to treat all chatbot interactions as untrusted. “The fundamental flaw is the lack of robust input and output sanitization and validation. It’s better to adopt a ‘never trust, always verify’ approach for all data flowing through the AI chatbot systems,” they said. Girėnas added, “We approach every AI input and output as untrusted until it’s verified safe. That mindset helps block prompt injections and other risks before they reach critical systems. It’s about building security checks into the process so trust is earned, not assumed.”
