If the AI industry had an equivalent to the recording industry’s “song of the summer” (a hit that catches on in the warmer months here in the Northern Hemisphere and is heard playing everywhere), the title would clearly go to Alibaba’s Qwen Team.

Over just the past week, the frontier model AI research division of the Chinese e-commerce behemoth has released not one, not two, not three, but four (!!) new open source generative AI models that post record-setting benchmark scores, besting even some leading proprietary options.

Last night, Qwen Team capped it off with the release of Qwen3-235B-A22B-Thinking-2507, its updated reasoning large language model (LLM). A reasoning model takes longer to respond than a non-reasoning or “instruct” LLM because it engages in “chains-of-thought,” self-reflection, and self-checking, which ideally yield more correct and comprehensive responses on more difficult tasks.

Indeed, the new Qwen3-Thinking-2507, as we’ll call it for short, now leads or closely trails top-performing models across several major benchmarks.

As AI influencer and news aggregator Andrew Curran wrote on X: “Qwen’s strongest reasoning model has arrived, and it is at the frontier.”

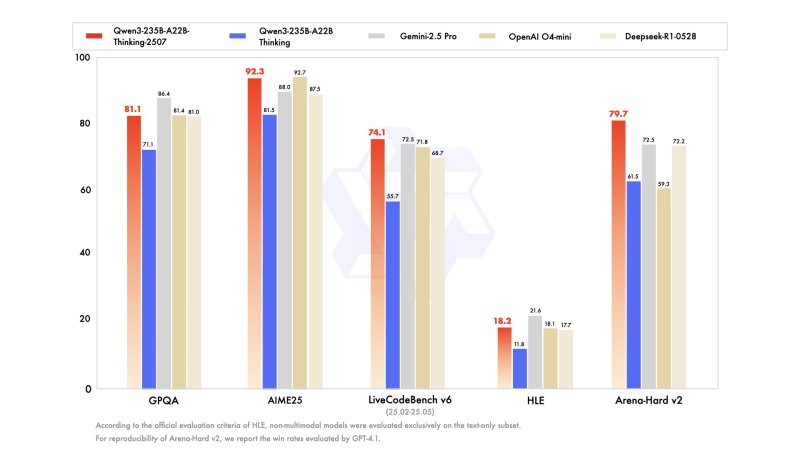

In the AIME25 benchmark, which is designed to evaluate problem-solving ability in mathematical and logical contexts, Qwen3-Thinking-2507 scores 92.3, narrowly trailing OpenAI’s o4-mini (92.7) but well ahead of Gemini-2.5 Pro (88.0).

The model also shows a commanding performance on LiveCodeBench v6, scoring 74.1, ahead of Google Gemini-2.5 Pro (72.5), OpenAI o4-mini (71.8), and significantly outperforming its earlier version, which posted 55.7.

In GPQA, a benchmark of graduate-level multiple-choice questions, the model achieves 81.1, edging out DeepSeek-R1-0528 (81.0) but trailing Gemini-2.5 Pro’s top mark of 86.4.

On Arena-Hard v2, which evaluates alignment and subjective preference through win rates, Qwen3-Thinking-2507 scores 79.7, placing it ahead of all competitors.
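For readers who want to check the leaderboard claims, the scores quoted above can be tabulated and compared programmatically. The Python sketch below uses only the figures reported in this article. Arena-Hard v2 is left out because no competitor scores are quoted for it, and the `leader` and `margin_vs_qwen` helpers are hypothetical names for illustration, not part of any Qwen tooling.

```python
# Scores as quoted in this article; higher is better on all three benchmarks.
REPORTED_SCORES = {
    "AIME25": {
        "Qwen3-Thinking-2507": 92.3,
        "OpenAI o4-mini": 92.7,
        "Gemini-2.5 Pro": 88.0,
    },
    "LiveCodeBench v6": {
        "Qwen3-Thinking-2507": 74.1,
        "Gemini-2.5 Pro": 72.5,
        "OpenAI o4-mini": 71.8,
        "Qwen3 (earlier version)": 55.7,
    },
    "GPQA": {
        "Qwen3-Thinking-2507": 81.1,
        "DeepSeek-R1-0528": 81.0,
        "Gemini-2.5 Pro": 86.4,
    },
}


def leader(benchmark: str) -> tuple[str, float]:
    """Return the (model, score) pair with the highest reported score."""
    scores = REPORTED_SCORES[benchmark]
    best = max(scores, key=scores.get)
    return best, scores[best]


def margin_vs_qwen(benchmark: str) -> float:
    """Qwen3-Thinking-2507's score minus the best score among the other models."""
    scores = REPORTED_SCORES[benchmark]
    qwen = scores["Qwen3-Thinking-2507"]
    best_other = max(v for k, v in scores.items() if k != "Qwen3-Thinking-2507")
    # Round to one decimal to hide floating-point noise in the subtraction.
    return round(qwen - best_other, 1)


for name in REPORTED_SCORES:
    model, score = leader(name)
    print(f"{name}: leader = {model} ({score}), Qwen margin = {margin_vs_qwen(name):+.1f}")
```

Run as-is, it shows Qwen3-Thinking-2507 on top in LiveCodeBench v6, about 0.4 points behind o4-mini on AIME25, and about 5.3 points behind Gemini-2.5 Pro on GPQA.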

The results show that this model not only surpasses its predecessor in every major category but also sets a new standard for what open-source, reasoning-focused models can achieve.

A shift away from ‘hybrid reasoning’

The release of Qwen3-Thinking-2507 reflects a broader strategic shift by Alibaba’s Qwen team: moving away from hybrid reasoning models that required users to manually toggle between “thinking” and “non-thinking” modes.

Instead, the team is now training separate models for reasoning and instruction tasks. This separation allows each model to be optimized for its intended purpose—resulting in improved consistency, clarity, and benchmark performance. The new Qwen3-Thinking model fully embodies this design philosophy.

Alongside it, Qwen launched Qwen3-Coder-480B-A35B-Instruct, a 480B-parameter model built for complex coding workflows. It supports a 256K-token context window natively, extendable to 1 million tokens, and outperforms GPT-4.1 and Gemini 2.5 Pro on SWE-bench Verified.

Also announced was Qwen3-MT, a multilingual translation model trained on trillions of tokens across 92+ languages. It supports domain adaptation and terminology control, with API pricing starting at $0.50 per million tokens.

Earlier in the week, the team released Qwen3-235B-A22B-Instruct-2507, a non-reasoning model that surpassed Claude Opus 4 on several benchmarks and introduced a lightweight FP8 variant for more efficient inference on constrained hardware.

All models are licensed under Apache 2.0 and are available through Hugging Face, ModelScope, and the Qwen API.

Licensing: Apache 2.0 and its enterprise advantage

Qwen3-235B-A22B-Thinking-2507 is released under the Apache 2.0 license, a highly permissive and commercially friendly license that allows enterprises to download, modify, self-host, fine-tune, and integrate the model into proprietary systems, with few obligations beyond retaining the license and attribution notices and marking modified files.

This stands in contrast to proprietary models or research-only open releases, which often require API access, impose usage limits, or prohibit commercial deployment. For compliance-conscious organizations and teams looking to control cost, latency, and data privacy, Apache 2.0 licensing enables full flexibility and ownership.

Availability and pricing

Qwen3-235B-A22B-Thinking-2507 is available now for free download on Hugging Face and ModelScope.

Enterprises that lack the resources or the desire to host inference on their own hardware or virtual private cloud can access the model through Alibaba Cloud’s API at the following rates; teams that do self-host can serve it with inference frameworks such as vLLM and SGLang.

Input price: $0.70 per million tokens

Output price: $8.40 per million tokens

Free tier: 1 million tokens, valid for 180 days
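Because reasoning models spend many tokens on chains of thought, output pricing tends to dominate the bill. A rough estimator, assuming the listed per-million-token rates apply linearly and ignoring the free tier (this article doesn't say how those 1 million tokens are split between input and output), might look like this in Python. `estimate_cost_usd` is a hypothetical helper, not part of any Alibaba Cloud SDK.

```python
# Per-million-token API rates listed in this article (USD).
INPUT_PRICE_PER_M = 0.70
OUTPUT_PRICE_PER_M = 8.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API bill for a workload at the listed rates.

    Assumption: pricing is linear in token count. The free-tier allowance,
    volume discounts, and any future rate changes are ignored, so treat the
    result as a rough planning figure.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    cost = (
        (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
        + (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    )
    return round(cost, 4)


# Example: 1,000 requests, each ~2,000 input and ~20,000 output tokens.
print(estimate_cost_usd(2_000_000, 20_000_000))
```

For that example, the total works out to $1.40 + $168.00 = $169.40. Since output tokens cost 12 times as much as input tokens, capping reasoning length is usually the bigger cost lever than trimming prompts.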

The model is compatible with agentic frameworks via Qwen-Agent, and supports advanced deployment via OpenAI-compatible APIs.
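As a sketch of what “OpenAI-compatible” means in practice, the request below targets the standard `/chat/completions` route. It assumes a self-hosted server (for example vLLM or SGLang) listening at `http://localhost:8000/v1` and uses the Hugging Face repository name as the model ID; both are placeholders to swap for your own deployment or Alibaba Cloud’s endpoint, and `build_chat_request` is a hypothetical helper. Only the Python standard library is used, and the request is built without being sent.

```python
import json
import urllib.request

# Placeholder endpoint: assumed self-hosted OpenAI-compatible server.
# Replace with your provider's base URL.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "Qwen/Qwen3-235B-A22B-Thinking-2507"  # Hugging Face repo name


def build_chat_request(prompt: str, api_key: str = "EMPTY") -> urllib.request.Request:
    """Build (but do not send) a POST to the /chat/completions route.

    "EMPTY" is a common placeholder key for local servers that do not
    enforce authentication; hosted APIs need a real key.
    """
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("How many primes are there below 100?")
    # Sending requires a running server, e.g.:
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
    print(req.get_method(), req.full_url)
```

The same payload shape works with the official `openai` client by pointing its base URL at the server; the standard-library version here simply avoids an extra dependency.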

It can also be run locally using frameworks such as Hugging Face Transformers, or integrated into development stacks through Node.js, CLI tools, or structured prompting interfaces.

Recommended sampling settings for best performance are temperature=0.6 and top_p=0.95, with a maximum output length of 81,920 tokens for complex tasks.
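Those settings can be folded into any OpenAI-style chat payload. The sketch below assumes the server accepts the common `temperature`, `top_p`, and `max_tokens` parameters (most OpenAI-compatible servers do, but check your provider’s documentation). `apply_sampling` is a hypothetical helper.

```python
# Recommended sampling settings as quoted in this article.
RECOMMENDED_SAMPLING = {
    "temperature": 0.6,
    "top_p": 0.95,
}
COMPLEX_TASK_MAX_TOKENS = 81_920  # suggested max output length for complex tasks


def apply_sampling(payload: dict, complex_task: bool = False) -> dict:
    """Return a copy of an OpenAI-style chat payload with the recommended
    sampling settings filled in. Values the caller already set are kept."""
    # Later keys win in a dict merge, so caller-supplied values override defaults.
    merged = {**RECOMMENDED_SAMPLING, **payload}
    if complex_task:
        # Only add the long output budget if the caller didn't set one.
        merged.setdefault("max_tokens", COMPLEX_TASK_MAX_TOKENS)
    return merged


request_body = apply_sampling(
    {"model": "Qwen/Qwen3-235B-A22B-Thinking-2507",
     "messages": [{"role": "user", "content": "Prove that sqrt(2) is irrational."}]},
    complex_task=True,
)
print(request_body)
```

Letting caller values take precedence means the defaults never silently override a deliberate choice, such as a lower temperature for a deterministic evaluation run.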

Enterprise applications and future outlook

With its strong benchmark performance, long-context capability, and permissive licensing, Qwen3-Thinking-2507 is particularly well suited for use in enterprise AI systems involving reasoning, planning, and decision support.

The broader Qwen3 ecosystem — including coding, instruction, and translation models—further extends the appeal to technical teams and business units looking to incorporate AI across verticals like engineering, localization, customer support, and research.

The Qwen team’s decision to release specialized models for distinct use cases, backed by technical transparency and community support, signals a deliberate shift toward building open, performant, and production-ready AI infrastructure.

As more enterprises seek alternatives to API-gated, black-box models, Alibaba’s Qwen series increasingly positions itself as a viable open-source foundation for intelligent systems—offering both control and capability at scale.