Both for research and medical purposes, researchers have spent decades pushing the limits of microscopy to produce ever deeper and sharper images of brain activity, not only in the cortex but also in regions underneath such as the hippocampus. In a new study, a team of MIT scientists and engineers demonstrates a new microscope system capable of peering exceptionally deep into brain tissues to detect the molecular activity of individual cells by using sound.

“The major advance here is to enable us to image deeper at single-cell resolution,” said neuroscientist Mriganka Sur, a corresponding author along with mechanical engineering Professor Peter So and principal research scientist Brian Anthony. Sur is the Paul and Lilah Newton Professor in The Picower Institute for Learning and Memory and the Department of Brain and Cognitive Sciences at MIT.

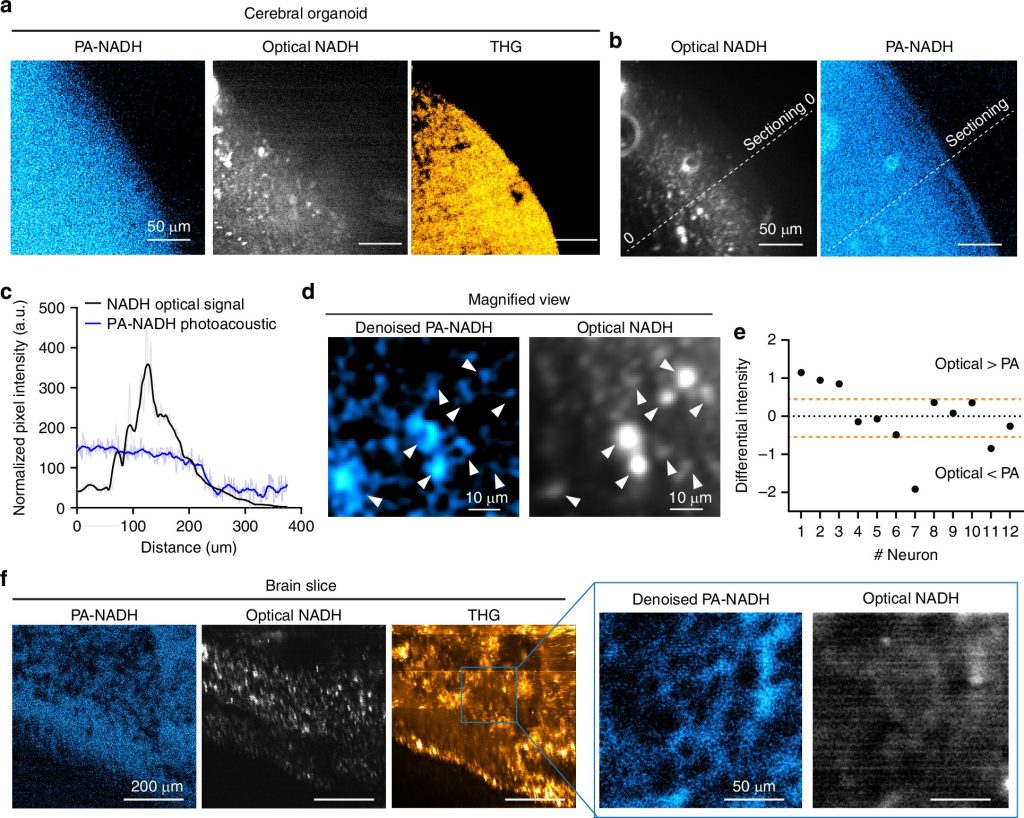

In the journal Light: Science and Applications, the team demonstrates that they could detect NAD(P)H, a molecule tightly associated with cell metabolism in general and with electrical activity in neurons in particular, all the way through samples such as a 1.1 mm “cerebral organoid,” a 3D, miniature brain-like tissue generated from human stem cells, and a 0.7-mm-thick slice of mouse brain tissue.

In fact, co-lead author and mechanical engineering postdoc W. David Lee, who conceived the microscope’s innovative design, said the system could have peered far deeper, but the test samples weren’t big enough to demonstrate that.

“That’s when we hit the glass on the other side,” he said. “I think we’re pretty confident about going deeper.”

Still, a depth of 1.1 mm is more than five times deeper than other microscope technologies can resolve NAD(P)H within dense brain tissue. The new system achieved the depth and sharpness by combining several advanced technologies to precisely and efficiently excite the molecule and then to detect the resulting energy, all without having to add any external labels, whether added chemicals or genetically engineered fluorescence.

Rather than focusing the required NAD(P)H excitation energy on a neuron with near ultraviolet light at its normal peak absorption, the scope accomplishes the excitation by focusing an intense, extremely short burst of light (a quadrillionth of a second long) at three times the normal absorption wavelength. Such “three-photon” excitation penetrates deep into brain tissue with less scattering because of the longer wavelength of the light (“like fog lamps,” Sur said).

Meanwhile, though the excitation produces a weak fluorescent signal of light from NAD(P)H, most of the absorbed energy produces a localized (~10 microns) thermal expansion within the cell, which produces sound waves that travel relatively easily through tissue compared to the fluorescence emission. A sensitive ultrasound microphone in the microscope detects those waves and, with enough sound data, software turns them into high-resolution images (much like a sonogram does). Imaging created in this way is “three-photon photoacoustic imaging.”

“We merged all these techniques—three-photon, label-free, photoacoustic detection,” said co-lead author Tatsuya Osaki, a research scientist in The Picower Institute in Sur’s lab. “We integrated all these cutting-edge techniques into one process to establish this ‘Multiphoton-In and Acoustic-Out’ platform.”

Lee and Osaki teamed with research scientist Xiang Zhang and postdoc Rebecca Zubajlo to lead the study, in which the team demonstrated reliable detection of the sound signal through the samples. So far, the team has produced visual images from the sound at various depths as they refine their signal processing.

In the study, the team also shows simultaneous “third-harmonic generation” imaging, which comes from the three-photon stimulation and finely renders cellular structures, alongside their photoacoustic imaging, which detects NAD(P)H. They also note that their photoacoustic method could detect other molecules such as the genetically encoded calcium indicator GCaMP, which neuroscientists use to signal neural electrical activity.

Alzheimer’s and other applications

With the concept of label-free, multiphoton, photoacoustic microscopy (LF-MP-PAM) established in the paper, the team is now looking ahead to neuroscience and clinical applications.

Lee has already established that NAD(P)H imaging can inform wound care. In the brain, levels of the molecule are known to vary in conditions such as Alzheimer’s disease, Rett syndrome, and seizures, making it a potentially valuable biomarker. Because the new system is label-free (i.e., it requires no added chemicals or altered genes), it could be used in humans, for instance during brain surgeries.

The next step for the team is to demonstrate the system in a living animal, rather than only in tissues studied in vitro and ex vivo. The technical challenge there is that the microphone can no longer be on the opposite side of the sample from the light source (as it was in the current study). It has to be on top, just like the light source.

Lee said he expects that full imaging at depths of 2 mm in live brains is entirely feasible given the results in the new study.

“In principle it should work,” he said.

Mercedes Balcells and Elazer Edelman are also authors of the paper.

More information: Tatsuya Osaki et al, Multi-photon, label-free photoacoustic and optical imaging of NADH in brain cells, Light: Science & Applications (2025). DOI: 10.1038/s41377-025-01895-x

Massachusetts Institute of Technology

Citation: Imaging tech promises deepest looks yet into living brain tissue at single-cell resolution (2025, August 7), retrieved 7 August 2025 from https://medicalxpress.com/news/2025-08-imaging-tech-deepest-brain-tissue.html
