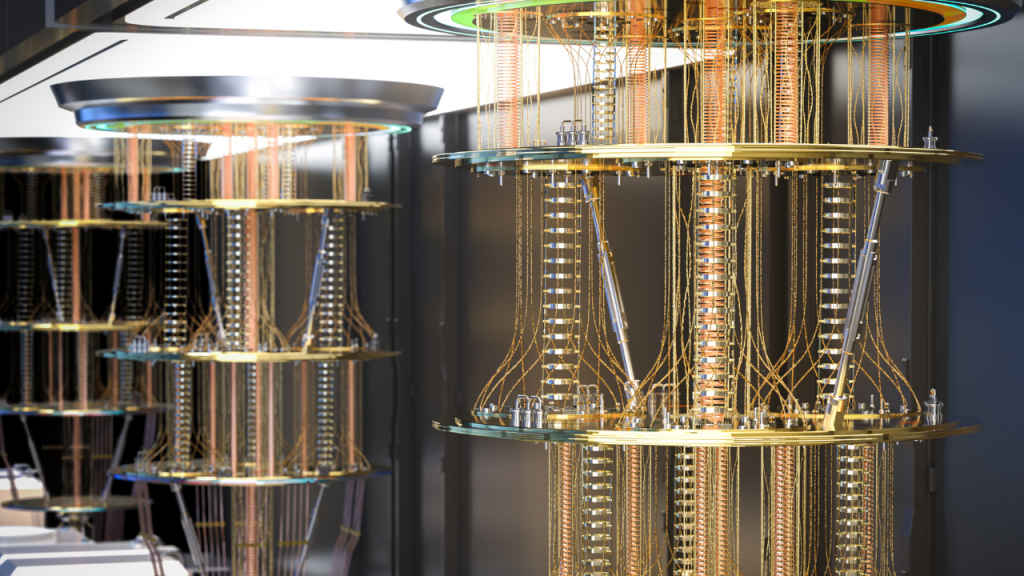

Forgotten in the buzz of WWDC 2025, IBM’s quantum computing announcement might not have been as flashy – but it was quite revealing, not unlike looking through clear liquid glass. IBM’s announcement lays out a remarkably concrete roadmap to deliver Starling, the first large-scale, fault-tolerant quantum computer, by 2029.

Housed in a new IBM Quantum Data Center in New York, Starling promises to execute some 20,000 times more quantum operations than any system in operation today. That may sound like marketing hyperbole, but IBM says that representing Starling’s computational state would require the memory of more than a quindecillion (10^48) of today’s fastest classical supercomputers.

This isn’t IBM’s first quantum roadmap. But it is the first time the company has publicly laid out detailed processor milestones – Loon (2025), Kookaburra (2026), Cockatoo (2027), culminating in Starling (2029). Each one tests critical elements of an error-corrected, modular architecture built on quantum low-density parity-check (qLDPC) codes, which IBM says cut physical-qubit overhead by about 90% relative to previous codes. In plain terms, IBM is tackling the quantum equivalent of the classical “vacuum tube to transistor” transition, which is nothing short of revolutionary, if you ask me.

Journey from ENIAC to quantum entanglement

To appreciate Starling’s significance, we need to rewind to the dawn of programmable computing. Time for a much-needed history lesson. In 1946, ENIAC, a 30-ton behemoth, calculated artillery tables at just 5,000 additions per second. A few years later, IBM’s 650 drum-memory system, at roughly half a million dollars per unit, became the first mass-market computer, ushering in an era of batch processing for business and science.

IBM’s history is tightly woven into both classical and quantum threads. In the 1950s, IBM’s transistor-based computers replaced vacuum tubes, notably with the IBM 1401, making data processing affordable for mid-sized businesses. In 1964, System/360 pioneered architecture-compatible families, defining the mainframe era.

Fast-forward to 2016, when IBM launched its first 5-qubit quantum processor at Yorktown Heights. Each subsequent leap – 16 qubits, 50 qubits – tested coherence times and basic algorithms, but these remained “noisy intermediate-scale quantum” (NISQ) devices, limited by error rates and decoherence. It wasn’t until IBM’s Nature-cover paper on quantum LDPC codes that a clear, efficient path to error-corrected logical qubits emerged.

Now, with Starling and beyond, IBM is uniting decades of hardware, control electronics, and algorithm research into a practical vision: 100 million quantum operations on 200 logical qubits with Starling, followed by 1 billion operations on 2,000 logical qubits with Blue Jay in 2033. This roadmap is more than ambition – it’s a stepwise de-risking of large-scale quantum computing, much like IBM’s systematic moves in classical eras.

What’s so special about fault tolerance?

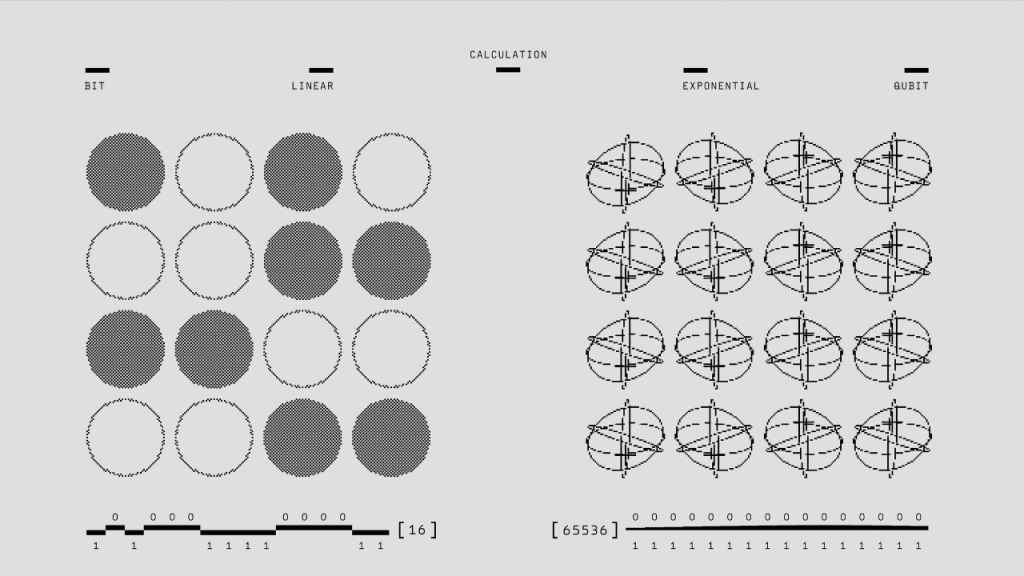

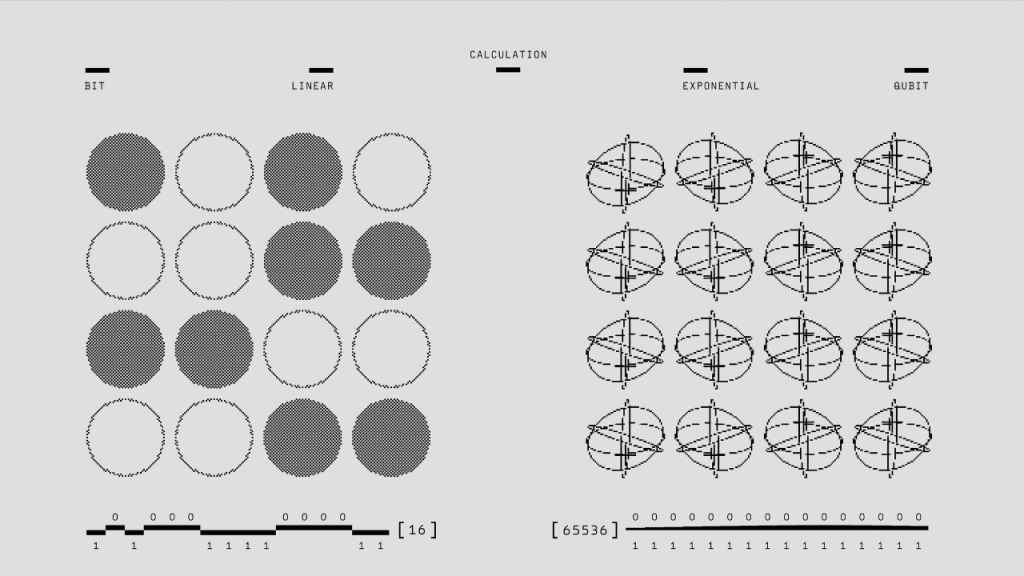

Classical hardware (which is what all our modern PCs, laptops and smartphones are built on) is remarkably reliable: bits stay intact and errors are rare. Quantum systems, by contrast, suffer from noise, where stray electromagnetic fields can flip qubit states in microseconds. Fault tolerance, then, is the foundational pillar of quantum computing’s promise. Many noisy physical qubits are bundled together into a single logical qubit, whose error rate shrinks exponentially as the cluster grows.
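To get a feel for what “shrinks exponentially” means, here is a minimal sketch that uses a classical repetition code with majority voting as a stand-in. It is an analogy only, not IBM’s qLDPC scheme: the function name `logical_error_rate` and the 1% physical error rate are assumptions picked purely for illustration.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n noisy copies gives the wrong bit.

    Assumes each copy flips independently with probability p; n must be odd.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote cannot tie")
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate, not an IBM figure
    for n in (1, 3, 5, 7, 9):
        rate = logical_error_rate(p, n)
        print(f"{n} copies -> logical error rate {rate:.3e}")
```

As long as the per-copy error rate is well below 50%, every two extra copies cut the failure probability by a roughly constant factor. Real quantum codes have a harder job, since they must also catch phase errors and cannot simply copy a quantum state, but the scaling idea is the same.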

IBM’s papers detail how qLDPC codes and real-time decoding with classical processors will keep these logical qubits coherent long enough to run meaningful algorithms. It’s the quantum counterpart of error-correcting memory in classical RAM, but orders of magnitude more complex. Achieving this at scale – without insane overhead – was a longstanding hurdle. Starling promises to break that barrier.
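The overhead claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below uses figures reported in IBM’s qLDPC paper rather than in this article: 288 physical qubits to protect 12 logical qubits, against nearly 3,000 for a surface code of comparable performance. Treat the surface-code number as approximate; the helper name `overhead_reduction` is made up for this example.

```python
def overhead_reduction(baseline_physical: int, new_physical: int) -> float:
    """Fractional saving in physical qubits for the same logical qubits."""
    return 1 - new_physical / baseline_physical


LOGICAL_QUBITS = 12
QLDPC_PHYSICAL = 288      # 144 data qubits + 144 check qubits
SURFACE_PHYSICAL = 3000   # approximate figure, rounded up from "nearly 3,000"

saving = overhead_reduction(SURFACE_PHYSICAL, QLDPC_PHYSICAL)

print(f"qLDPC: {QLDPC_PHYSICAL / LOGICAL_QUBITS:.0f} physical qubits per logical qubit")
print(f"Surface code: about {SURFACE_PHYSICAL / LOGICAL_QUBITS:.0f} per logical qubit")
print(f"Saving: {saving:.0%}")
```

Under these assumptions the saving comes out at roughly 90%, which lines up with the reduction IBM quotes for its roadmap.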

The quantum computing road ahead

What’s the need for quantum computing? Think of it this way: classical computing gave us the internet, climate models, and AI that warns us of pandemics. But certain frontiers – like simulating complex molecules for drug discovery or solving massive optimization problems – remain tantalizingly out of reach. This is where quantum computers shine.

IBM Quantum Starling stands as the next great expedition into the unknown of computational limits. If delivered by 2029, it will let chemists model reactions at quantum fidelity, economists optimize vast portfolios, and cryptographers test new protocols. And just as the transistor’s debut reshaped society, so might this first fault-tolerant quantum computer turn a scientific corner.

IBM’s role in all of this is unique: pioneering transistor-era hardware, building mainframes that powered global commerce, and now forging quantum architectures. Few organizations possess the cross-disciplinary mastery to span vacuum tubes to qubits.

Yet one can’t help but be cynical about their ambitious timeline. Classical progress took decades per leap, but IBM’s quantum research team must compress that into a few short years lest other players – academia, startups, or global rivals – capture the lead. And IBM isn’t the only tech company chasing quantum computing breakthroughs: Google, Intel, Amazon, Microsoft and a few others are also in the race.

From ENIAC’s vacuum tubes to the IBM System/360’s transistor logic, from Google’s Willow chip demonstration to today’s IBM Starling blueprint, we’re witnessing a continuum of human ingenuity. IBM’s new roadmap doesn’t just chart a technical path, but aspires to create a computing revolution. As we count down to 2029, the real question isn’t just whether IBM can build Starling – it’s whether we can harness its power responsibly to tackle the world’s most pressing challenges.
