It’s easy to get caught up in the latest doom-and-gloom buzz around AI. Whether it’s a chatbot hallucinating its way into the headlines or startup founders trading ethics for virality, the prevailing narrative often feels like a sci-fi thriller missing a safety manual.

So I called someone who’s worked extensively in this field. Dr. Amith Singhee, Director of IBM Research India and CTO for IBM India and South Asia, has been working on what the industry now brands as “Responsible AI” since before the term even entered our lexicon. Over a video call, we unpacked what it really means to build AI tools we can trust – not just in theory, but in practice, at scale. And crucially, in India.

“Open source and responsibility have always been core to IBM,” he began. “Even before AI was hot, IBM has been active and instrumental in contributing to projects like Linux, Java, Eclipse. That same legacy drives how we approach AI today.”

As AI transitions from lab experiments to everyday utilities – embedded in everything from customer service bots and credit approval engines to language models that translate government documents – India stands at a unique crossroads. The country is both a massive data ecosystem and a rapidly digitizing democracy. But how do you build foundational models that don’t just reflect global benchmarks but respect local context?

Dr Singhee answers with a principle: radical transparency. “Our entire Granite family of foundation models – including time series and geospatial variants – are open source, with weights released under Apache 2.0 license. That means anyone can poke at them, test them, adapt them,” he says. “Solving a data problem just within one company is very limiting,” he emphasizes. That’s why IBM released tools like DataPrepKit and Docling as open source to help developers build out LLMs. “We’ve committed to a license that lets people do whatever they want, commercially or otherwise, and we did this while others were still exploring more restrictive models.”

AI-based trust can never be an afterthought

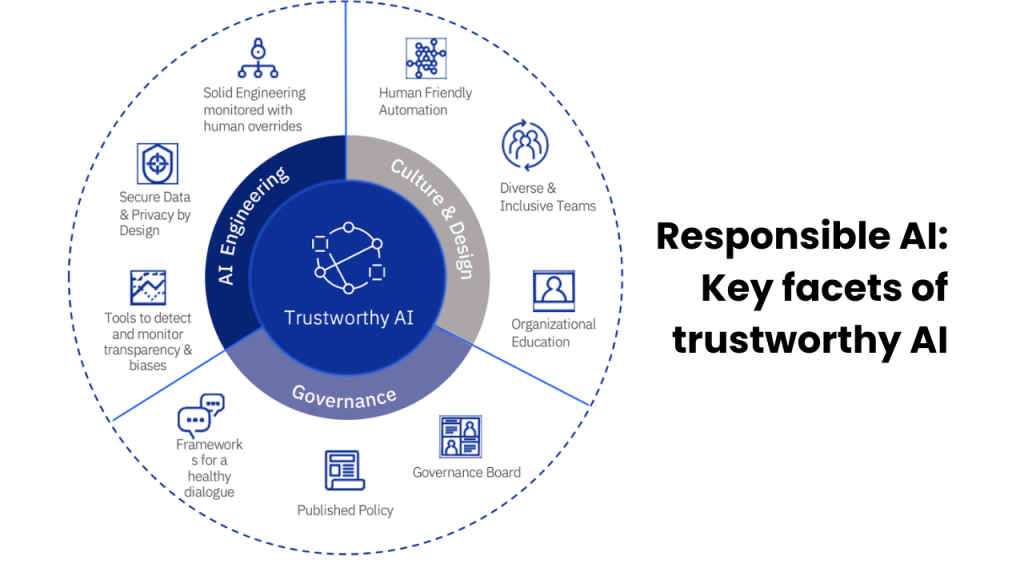

But putting models in the open isn’t enough. For Amith, the process matters just as much as the product, and model evaluation should get equal attention. He isn’t preaching without practice: back in 2019, he points out, IBM contributed fairness, explainability and robustness toolkits to the Linux Foundation AI. “From data acquisition to model training to evaluation, everything we do is traceable. Every piece of training data goes through governance and legal scrutiny. Nothing is done in the shadows.”

That internal discipline has crucial external implications, Amith is quick to point out. IBM’s participation in global initiatives like the AI Alliance – co-founded with Meta – emphasizes an open, multi-stakeholder approach to safe AI development. “We have over 120 members now, including startups, non-profits, academia, and big enterprises. India is strongly represented.”

One key output from this alliance is a trust and safety evaluation initiative – something Amith believes is foundational. “It’s not enough to just show your model performs well on language benchmarks. You need to stress test it.” Does it follow shutdown instructions? Can it be misled by adversarial inputs? These are not nice-to-haves but mission-critical checks, says Dr Singhee.

It’s a jab, however polite, at the current AI arms race, where functionality often outpaces forethought. I bring up the elephant in the room: high-profile stories about AI models ignoring shutdown commands. What does it take to make models truly safe? Amith is firm in his response. “There’s no magic. You need to evaluate models for these edge cases, document your decisions, and be open to revisiting them. Transparency forces discipline.”

“This process isn’t run purely by engineering teams. There’s a lot of governance oversight and legal oversight to ensure that every piece of data that’s coming in is understood.” Traceability is a key pillar of the whole process, underscores Dr Singhee. If something goes wrong, IBM can trace it back through its governance tools. “Having that governance and traceability and ability to go back and change anything in the entire process is extremely important.”

Regarding Made in India: LLM and AI

Any conversation on Responsible AI is incomplete without a mention of India, and the sheer scale of diversity and challenges it presents. I ask Dr Singhee a question that’s been brewing ever since the DeepSeek reveal in January 2025. With both government and private actors investing heavily in AI, is India just a consumer of models – or is it building from the ground up?

“Yes, and no,” Amith says, choosing nuance over nationalism. “There’s real momentum – from government missions to startups training models from scratch. But we shouldn’t confuse model-building with impact.” While noting that startups, nonprofits, and public sector missions are all stepping up to do their bit in this area, Dr Amith Singhee is careful not to romanticize homegrown models. “We often get caught up in the model… but at the same time, we also need to have a very strong focus on the impact and solutions.”

He warns of a common trap amid the glitz and glamour of AI development: fetishizing the model and forgetting the user. “We often lose the forest for the trees.” The true litmus test is the value it provides, Dr Singhee emphasizes. “Whether we can extract economic value from AI for our country… whether we can empower our citizens from AI for our country and do it quickly.”

If a startup receives funding to build a full-stack Indic LLM and gets distracted by hype cycles, there’s “a risk of opportunity loss.” Amith emphasizes the need to strike a balance: “I think organizations and startups, enterprises have to maintain balance in this very fast moving landscape where you have conflicting motivations.”

That pragmatism should extend to choosing models, he highlights. “You don’t necessarily need perfect Bengali or Marathi, whatever it is for every use case that is needed out there.” It’s okay, he implies, to build on top of models from elsewhere – as long as it delivers outcomes that matter.

What government and developers must get right on AI

On the regulatory front, Amith sees positive signals and appreciates India’s overall restraint, but he also sees a long road ahead. “We don’t yet have AI laws in India, we’re still early in the governance laws, and maybe that’s not a bad thing. We need thoughtful laws, not rushed ones.”

IBM’s stance, according to Dr Singhee, is clear: regulate use – not tech. “Make developers and deployers accountable.” In other words, don’t criminalize the tech or the tool, but focus on the outcomes it delivers, the context in which it operates, and the related risks.

Dr Singhee also flags an overlooked technical gap that he considers the need of the hour for responsible AI development. “We need some urgent activity on creating metrics that measure good AI behaviour of different kinds,” suggests Dr Singhee, especially metrics tied to AI outcomes across different kinds of use cases in different parts of India. “Creation of definitions will allow us to qualify AI solutions as helpful or not, and that’s an area where I’d love to see more investment not just from the government, but academic institutions, philanthropists, even non-altruistic entities because that will make us ready for actually consuming AI in a safe way.”

He also applauds the India AI Mission’s creation of a dedicated AI Safety Institute. “It’s a good move. But the real challenge lies at the state and municipal levels. These are the entities that will actually deploy AI in governance, public health, and utilities. They need evaluation frameworks and trusted metrics – urgently.”

He issues a call to action to the developer community, and the open source movement in particular: dedicate some effort and come together to build these safety benchmarks now, even before solutions hit production. “If we wait till deployment to figure out how to measure fairness or robustness, it’s already too late,” Amith highlights.

For developers who want to contribute to the responsible AI movement, Amith has a clear message. Start with the use case. This human-centered approach may not be as glamorous as publishing leaderboard-busting models, but it’s the only way AI becomes more than just a buzzword in India.

Final word on Agentic AI

As we near the end of our conversation, Amith touches upon what he sees as the next frontier of AI safety and responsible development – AI agents acting on behalf of humans. “If my AI agent books a flight or approves an invoice, it needs to carry my identity, my permissions, my authorizations. We need to rewire identity management for an agentic future.” There needs to be some architectural innovation, Dr Singhee notes, to ensure that Agentic AI architectures conform to and leverage the same security architectures that existed before Generative AI emerged in the last 2-3 years.
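To make the idea of an agent "carrying" a person's permissions a little more concrete, here is a minimal sketch in Python. It is purely illustrative and not IBM's design or any real identity product: the `User`, `Delegation` and `perform` names, the action strings and the one-hour expiry are all assumptions made up for this example. The point it shows is that the agent's authority is derived from, and never exceeds, what the human is allowed to do.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class User:
    user_id: str
    permissions: frozenset  # actions the human may perform themselves


@dataclass(frozen=True)
class Delegation:
    """Hypothetical record of what a user lets an agent do on their behalf."""
    user: User
    agent_id: str
    scopes: frozenset  # the subset of actions handed to the agent
    expires_at: datetime

    def allows(self, action: str, now: datetime) -> bool:
        # The agent is limited by three things at once: the delegation's
        # lifetime, the delegated scopes, and the human's own permissions.
        return (
            now < self.expires_at
            and action in self.scopes
            and action in self.user.permissions
        )


def perform(delegation: Delegation, action: str, now: datetime) -> str:
    """Run an action as the agent, attributing it to the delegating user."""
    if not delegation.allows(action, now):
        raise PermissionError(
            f"{delegation.agent_id} may not '{action}' for {delegation.user.user_id}"
        )
    return f"{action} by {delegation.agent_id} on behalf of {delegation.user.user_id}"


# Illustrative data only (assumed names and actions).
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
alice = User("alice", frozenset({"book_flight", "approve_invoice"}))
grant = Delegation(
    alice, "travel-agent", frozenset({"book_flight"}), now + timedelta(hours=1)
)

print(perform(grant, "book_flight", now))
```

In this sketch the agent can book a flight for Alice but cannot approve an invoice, even though Alice herself can, because that action was never delegated. Once the grant expires, every request is refused.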

It’s a subtle point, but one that could define the next phase of AI adoption – where machines not only predict or generate, but act. And that makes the case for safe and responsible AI development and deployment even more urgent.
