Big Blue may have missed the boat on being one of the big AI model builders, but its IBM Research division has built its own enterprise-grade family of models and its server and research divisions have plenty of experience building accelerators and supercomputers.

The company also has well over 100,000 enterprise customers who are both skeptical of and hopeful about how AI can be transformative to their businesses, and they want someone with expertise to help them build their first AI systems and gradually transform their applications into agentic AI platforms.

Commercializing AI models inside of enterprises is not something that the big model builders like OpenAI and Anthropic can do by themselves. No one we know wants to rent an API and just pump queries into and extract responses from that API. They want a platform, and they don’t want to start from scratch with their IT estates and scrap the applications they have been building over the decades. And besides, the model builders are running at breakneck speed towards artificial general intelligence (matching humans at intellectual tasks) or superintelligence (exceeding humans at intellectual tasks).

And so IBM finds itself in a pretty good position to benefit from the GenAI wave. Not as good perhaps as Oracle and Microsoft and Salesforce, the dominant enterprise application suppliers, two of which are also cloud infrastructure suppliers, mind you. But given IBM’s history of commercializing Web infrastructure in the 1990s and 2000s – IBM mashed up the Apache web server and the Tomcat Java server into the WebSphere Application Server and made untold tens of billions of dollars of revenue from it – and classical HPC simulation for many, many decades, it is foolish to count Big Blue out of this game.

IBM’s supercomputer business pretty much dried up in the wake of the installation of the “Summit” hybrid CPU-GPU supercomputer at Oak Ridge National Laboratory and the similar but slightly different “Sierra” supercomputer at Lawrence Livermore National Laboratory in 2018, and it has been looking for another way to have HPC drive revenues and profits. Clearly GenAI is a mainstreaming of a kind of HPC, which means IBM can make some money selling software and services, and maybe even some iron.

Which brings us to a bunch of announcements that Big Blue made at its TechXchange 2025 developer conference this week.

First of all, there is a partnership between IBM and model builder Anthropic, the first partnership between Big Blue and the AI model company started by a group of former OpenAI employees. No financial details were given on the partnership, which was unveiled by Dinesh Nirmal, senior vice president of products for the Software group at IBM. Nirmal has been at IBM for three decades, starting out in Db2 and IMS mainframe development and crossing over to Hadoop and Spark data analytics a decade ago before taking over data and AI product development at IBM as well as running its Silicon Valley Lab in 2017.

The conversation between Nirmal and Dario Amodei highlighting the partnership between the two companies was fairly innocuous and lacking in details, except maybe for Amodei saying that “I think we can move faster together.”

IBM does not appear to be taking an equity stake in Anthropic the way that Amazon and Google have done, which gives Anthropic a means of getting money that it can round trip back into AWS and Google Cloud to train its various Claude models.

If anything, we strongly suspect that IBM is being used as a vehicle to get Anthropic models into the enterprises of the world where IBM Power Systems and System z mainframes dominate the back office systems and where some of the low hanging fruit for AI modernization of applications exists. IBM is probably getting a deal on licenses to Anthropic models, which it can add to its Watsonx library and which it can also use in tools that it in turn sells to customers. In a sense, Anthropic is a white label distributor of AI models to IBM, which embeds them and puts its brands all over them.

IBM has trained its own AI models, and has even created Watsonx code assistants to help modernize COBOL applications on mainframes and RPG applications on IBM i proprietary platforms. But Anthropic is widely believed to have the best model for analyzing and generating application code, and we would guess that Anthropic’s Claude Sonnet 4.5 is better than IBM’s homegrown Granite 20B model that was used for the COBOL code assistant and the Granite 7B model that was custom-created for the RPG code assistant. (This paper on the Granite models versus others for code assistants is interesting.)

What we can tell you for sure is that those separate code assistants for RPG and COBOL, which were developed by their respective Power Systems and System z divisions within IBM, have been deprecated before they really even got rolling as Watsonx products. They are being replaced by a new, AI-infused integrated development environment that spans IBM’s platforms (and surely others) called Project Bob. As in Bob The Builder, we presume, except with a blue safety helmet and a keyboard instead of a yellow helmet and a utility belt.

IBM tells The Next Platform that Project Bob is based on Claude, Mistral, Granite, and Llama models, but is not being precise about which ones. Our guess is Claude Sonnet 4.5 (which is maybe 150B to 200B in parameter counts), Granite 8B and maybe 7B, Llama 3 70B, and we have no idea about the Mistral models.

Nirmal said in his TechX25 keynote that according to surveys that IBM has done, only 5 percent of enterprise customers are getting return on investment in their AI projects, and that the other 95 percent are having issues because availability is not the same thing as usability when it comes to AI. And to make the point, he said that there were over 2 million models on Hugging Face, but how many were usable, meaning they could be adopted and trusted by enterprises?

Neel Sundaresan, general manager of automation and AI at IBM, says that the Project Bob augmented IDE has been in use for four months inside of Big Blue, and 6,000 software developers have been making use of it. Half of those developers use it every day, and 75 percent use it every two days, and all of them use it at least once over a ten day period. (This is not a measure of productivity, but of engagement.) In the case of a distinguished engineer, they treat Project Bob as a junior engineer that they can give tasks to, says Sundaresan. And averaged out over those developers, IBM calculates a 45 percent increase in productivity for its own code slingers with Project Bob over those months of usage.

You can see the Project Bob demo in the keynote, which is available here. It looks pretty useful, which demos are supposed to do, we realize. In that demo there is a QR code to use Project Bob under early release if you want to take a look at it yourself.

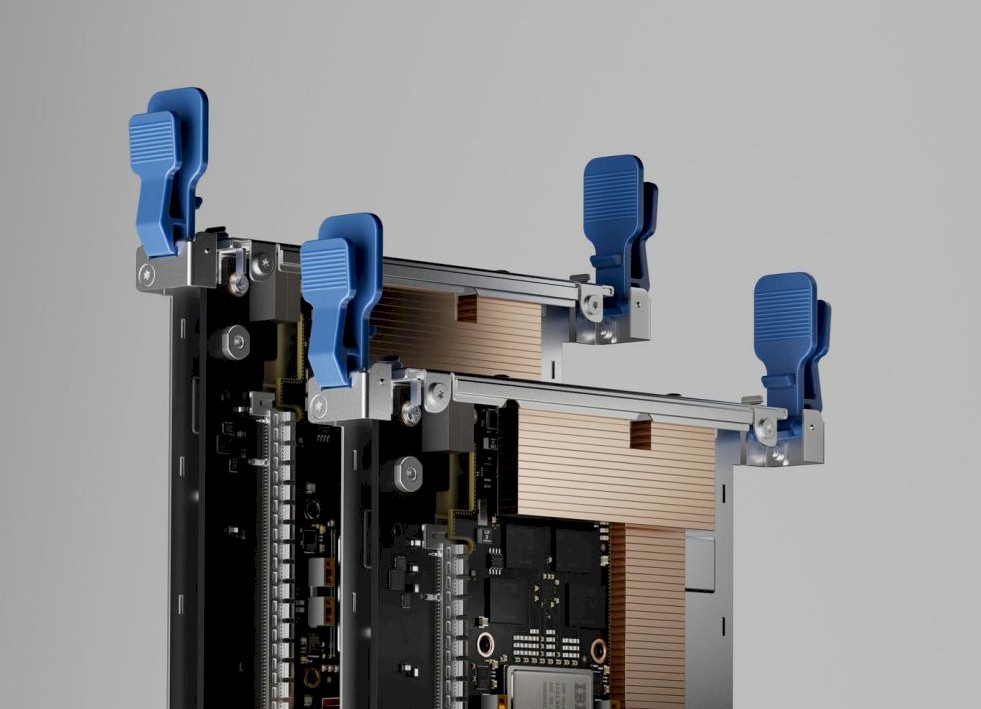

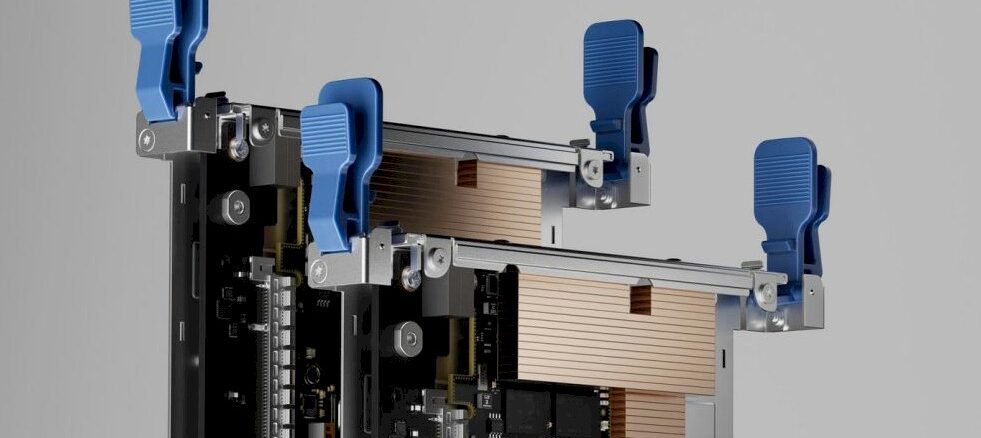

The other bit of relevant news out of IBM at TechX25 concerned the “Spyre” XPU that came out of IBM Research a few years back and that has been in the process of commercialization for the past year. (See IBM’s AI Accelerator: This Had Better Not Be Just A Science Project from October 2022 for our first pass on the matrix math unit that came out of IBM Research and IBM Shows Off Next-Gen AI Acceleration, On Chip DPU For Big Iron for an update of the Spyre effort as of last August.)

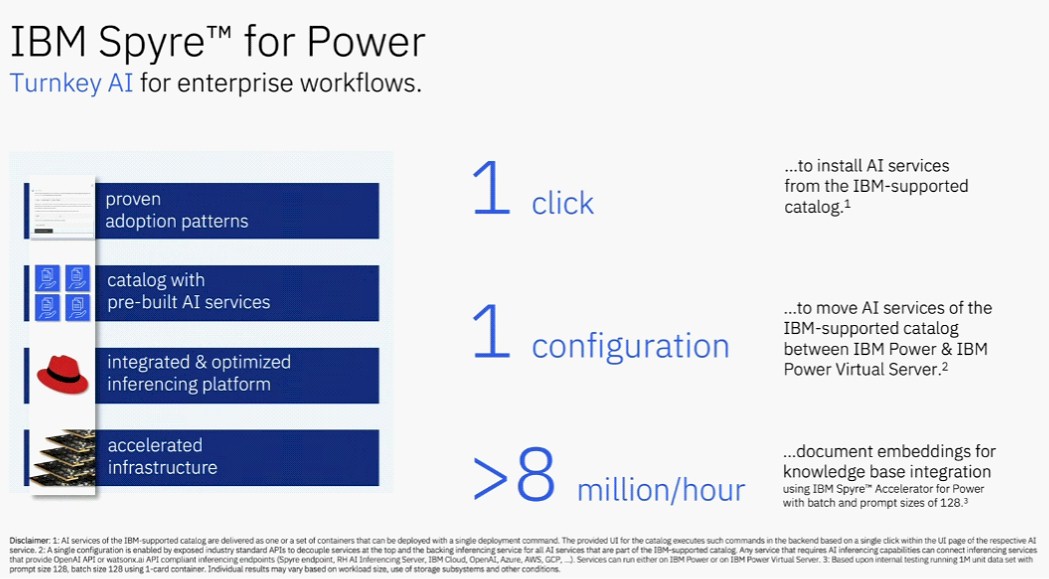

The Spyre cards will be sold in eight-card bundles and will be available under full stack, value-priced bundles from IBM. The Spyre PCI-Express cards will not be sold separately. It was not clear at press time if the Anthropic AI models had been ported to Spyre as yet, but if IBM wants to sell its own iron running Project Bob, then they had better be. The question is whether this will be enough oomph. Eight Spyre cards can be ganged together with shared memory into a virtual Spyre card that has 1 TB of memory and 1.6 TB/sec of memory bandwidth on which to run AI models, with an aggregate of more than 2.4 petaops of performance (presumably at FP16 resolution, but IBM was not clear). The Spyre cards support INT4, INT8, FP8, and FP16 data types. Throughput performance multiplies as you cut the precision down if those performance figures given were for FP16. If they were for INT4 precision, start dividing.
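To make that multiply-or-divide arithmetic concrete, here is a back-of-the-envelope sketch in Python. It takes only the aggregate figures quoted above and divides them across the eight cards, then rescales the 2.4 petaops figure under the idealized assumption that throughput doubles every time operand width halves. That scaling rule is our assumption, not something IBM has stated, and real silicon rarely scales so cleanly. The function names are hypothetical, 1 TB is treated as 1,024 GB, and the 70B example size is simply borrowed from our Llama 3 guess above.

```python
# Aggregate figures quoted for one eight-card Spyre bundle.
CARDS = 8
TOTAL_MEMORY_GB = 1024        # 1 TB, treated here as 1,024 GB (assumption)
TOTAL_BANDWIDTH_GBS = 1600    # 1.6 TB/sec
TOTAL_TERAOPS = 2400          # "more than 2.4 petaops", precision not stated

# Operand width in bits for the data types Spyre is said to support.
BITS = {"FP16": 16, "FP8": 8, "INT8": 8, "INT4": 4}


def per_card():
    """Divide the quoted bundle totals evenly across the eight cards."""
    return {
        "memory_gb": TOTAL_MEMORY_GB / CARDS,
        "bandwidth_gbs": TOTAL_BANDWIDTH_GBS / CARDS,
        "teraops": TOTAL_TERAOPS / CARDS,
    }


def scaled_teraops(quoted_at, target):
    """Rescale the bundle throughput from one precision to another.

    Idealized assumption: throughput is inversely proportional to
    operand width, so halving the bits doubles the ops.
    """
    return TOTAL_TERAOPS * BITS[quoted_at] / BITS[target]


def weights_gb(params_billion, dtype):
    """Memory needed for model weights alone, in GB (no KV cache, no overhead)."""
    return params_billion * BITS[dtype] / 8


if __name__ == "__main__":
    print("Per card:", per_card())
    for quoted_at in ("FP16", "INT4"):
        for target in ("FP16", "FP8", "INT4"):
            teraops = scaled_teraops(quoted_at, target)
            print(f"If quoted at {quoted_at}: {target} = {teraops / 1000:.1f} petaops")
    print("70B weights at FP16:", weights_gb(70, "FP16"), "GB")
```

The gap between the two readings is a factor of 16 at the extremes, which is why it matters that IBM has not said which precision the headline number refers to.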

The Spyre accelerators will ship as sidecars to System z mainframes starting on October 28. The plan is to ship them for Power Systems processing augmentation on December 12. The bundles will come with Red Hat Enterprise Linux, which is required on mainframe or Power system partitions to manage the Spyre cards. The bundle will also include RHEL.AI Inference Server, the inference platform from Big Blue, and in the first quarter of 2026 the whole OpenShift.AI Kubernetes platform with AI extensions and frameworks and the Watsonx.data governance tool will be ported to the stack. As you add things, the cost of the bundle goes up. But customers also get to choose their entry points.

IBM plans to activate Spyre on its platforms by use case, and it has created a lot of digital assistants and agents that make use of AI models running inference on the Spyre accelerators, which are being shown off in the preview of the hardware:

As you can see, there are cross-industry assistants for managing IT, generating and analyzing code, forecasting IT supply chains, or detecting and fixing security vulnerabilities, as well as industry specific agents for banking and finance, healthcare, insurance, the public sector, and more. For the AI stack, there are prebuilt AI services (which themselves use the AI models and run on Spyre) that help manage vector databases, serve models, do searches, translate and summarize documents, extract information and tag it, or convert natural language to SQL so newbies can query databases and datastores.

The neat bit about Spyre, which we learned from Sebastian Lehrig, who was a senior AI and OpenShift systems architect until moving over to the Power Systems division as its AI leader, is that the Spyre accelerators have circuits that let running inference workloads be live migrated along with their CPUs on Power iron. This cannot be done with Nvidia GPUs or AMD GPUs attached to Power Systems machinery. (Presumably this works with Spyres attached to mainframes, too.)

We look forward to seeing these bundles and costing them out to see how they stack up to GPU alternatives. IBM is not talking about that yet, but says it will offer competitive prices and thus far early adopters are happy with the prices that it is talking about.