IBM has launched the latest generation of its IBM LinuxONE servers – the LinuxONE 5 – featuring the IBM Telum II chip.

Big Blue has additionally signed agreements with Oracle and Lumen, bringing IBM’s AI offerings to their respective platforms.


The IBM LinuxONE 5 server, according to the company, is capable of processing up to 450 billion AI inference operations per day.

The server is equipped with the Telum II processor, which includes an on-chip AI accelerator, and with the IBM Spyre Accelerator, which is expected to be available in Q4 2025. The system is designed for generative and high-volume AI applications.

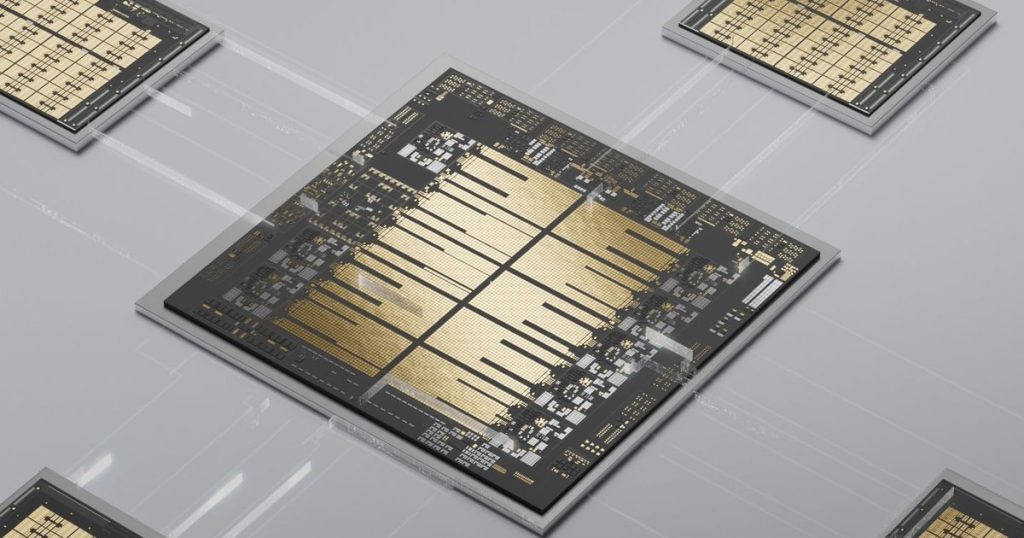

The Telum II chip is built on Samsung's 5nm process. It has eight processor cores and 10 Level-2 caches, and features a built-in, low-latency data processing unit (DPU) with 32 cores across four interconnected clusters.

The upcoming Spyre accelerator will contain 32 AI accelerator cores with a similar architecture to the AI accelerator integrated into the Telum II chip.

IBM launched its original Telum chip in 2021 as its first processor with an on-chip AI accelerator, designed for use in the company's z16 mainframes. Built on 7nm technology, the first-generation chip has eight processor cores and runs at a clock frequency of more than 5GHz.

The previous LinuxONE server generation, announced in late 2022 and launched in April 2023, also used IBM's first Telum chip.

IBM brings watsonx to Lumen's Edge cloud

IBM and Lumen Technologies are collaborating to bring IBM's AI products to Lumen's Edge cloud infrastructure and network.

IBM will integrate its watsonx portfolio of products with Lumen’s network, bringing AI inference capabilities closer to the source of data.

IBM watsonx technology will be deployed in Lumen’s Edge data centers and made accessible via Lumen’s multi-cloud architecture. According to a Lumen data sheet, the company has more than 200 data centers operating in North America with more than 350,000 miles of backbone fiber.

“Enterprise leaders don’t just want to explore AI, they need to scale it quickly, cost-effectively, and securely,” said Ryan Asdourian, chief marketing and strategy officer at Lumen. “By combining IBM’s AI innovation with Lumen’s powerful network Edge, we’re turning vision into action—making it easier for businesses to tap into real-time intelligence wherever their data lives, accelerate innovation, and deliver smarter, faster customer experiences.”

“Our work with Lumen underscores a strong commitment to meeting clients where they are —bringing the power of enterprise-grade AI and hybrid cloud to wherever data lives,” said Adam Lawrence, general manager, Americas Technology, IBM. “Together, we’re helping clients accelerate their AI journeys with greater speed, flexibility, and security, driving new use cases at the Edge ranging from automated customer service to predictive maintenance and intelligent supply chains.”

Big Blue teams up with Big Red

IBM has similarly teamed up with Oracle to bring watsonx to Oracle Cloud Infrastructure.

From July this year, IBM will offer its watsonx Orchestrate AI agent platform via OCI. Orchestrate will work alongside the AI agents already embedded in OCI and the cloud platform's other AI services.

IBM's agents run in watsonx Orchestrate, deployed on Red Hat OpenShift on OCI, across public, sovereign, government, and Oracle Alloy regions; they can also be hosted on-premises.

IBM will also bring its Granite models to OCI’s Data Science platform.

“AI delivers the most impactful value when it works seamlessly across an entire business,” said Greg Pavlik, executive vice president, AI and Data Management Services, Oracle Cloud Infrastructure. “IBM and Oracle have been collaborating to drive customer success for decades, and our expanded partnership will provide customers new ways to help transform their businesses with AI.”

“By integrating our AI with Oracle’s offerings, we’re enabling businesses to easily deploy and manage AI agents across their enterprise,” added Kareem Yusuf, Ph.D., senior vice president, Ecosystem, Strategic Partners & Initiatives, IBM. “Our collaboration with Oracle illustrates how IBM and our partners offer clients a seamless and flexible path to scale AI.”

In April of this year, IBM announced its next-generation mainframe – the z17. According to the company, the z17 is engineered specifically to support AI capabilities across hardware, software, and systems operations, and will be available from June 18, 2025.

During its latest earnings call, IBM revealed it had lost 15 federal contracts as a result of cuts linked to the Department of Government Efficiency (DOGE).