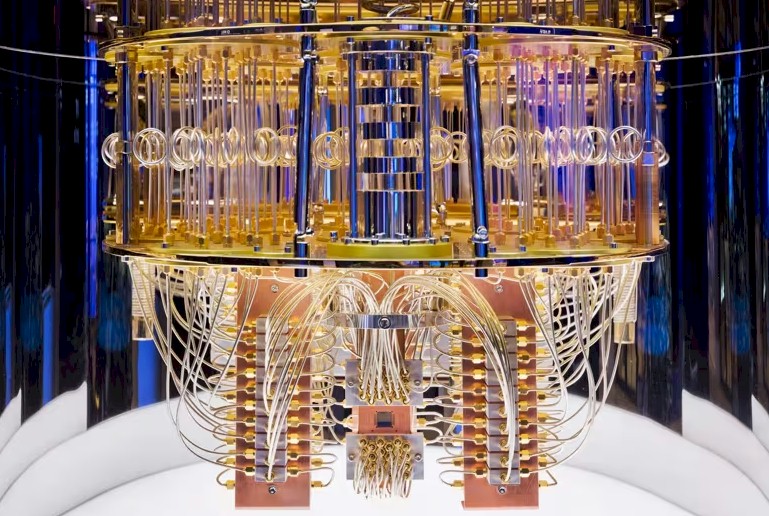

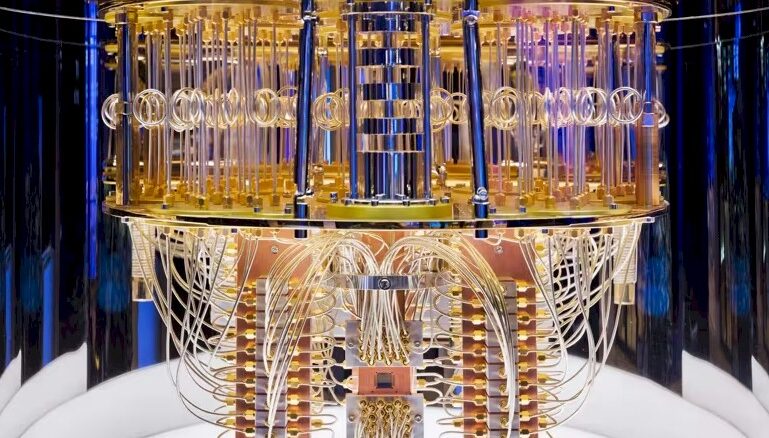

As we talked about a decade ago in the wake of launching The Next Platform, quantum computers – at least the fault tolerant ones being built by IBM, Google, Rigetti, and a few others – need a massive amount of traditional Von Neumann compute to help maintain their state, assist with qubit error correction, and assist with their computations.

Moreover, quantum computers require FPGAs equipped with RF modulators to hit the qubits with microwave pulses and keep them from decohering long enough to be computationally useful. Most of the current quantum machines out there use a mix of CPU, GPU, and FPGA compute engines to manage their qubits. AMD has acquired Xilinx and has successfully transformed itself into a supplier of datacenter-class GPU compute engines that can rival those from Nvidia and of high-end CPUs that are arguably better than those supplied by Intel, so it is only natural that Big Blue would reach out to AMD to form a partnership to advance the state of the art in quantum computing.

By its very nature, as we pointed out above, quantum computing means hybrid computing, mixing all kinds of compute together to not only create a quantum machine that has enough working qubits to be useful, but to also hook into that quantum machine to bring together quantum calculations with classical HPC and AI computing.

According to IBM and AMD, under the partnership announced this week – which is a memorandum of understanding establishing the collaboration, with no money changing hands between the two – researchers from both companies will collaborate on hybrid classical-quantum algorithms that can solve problems that neither type of compute can truly solve alone. As an example, the two said that they could use a quantum computer to simulate the behavior of atoms and molecules while using an AI cluster to handle the data analysis from that simulation.

Back in June, IBM inked a deal with RIKEN Lab in Japan to deploy a 156-qubit Quantum Heron system alongside the “Fugaku” supercomputer, which is built on a custom processor and system designed by Fujitsu and which delivers 1.42 exaflops of oomph on the HPL-MxP mixed precision implementation of the High Performance LINPACK benchmark that is commonly used to rank the performance of supercomputers. That 156-qubit level for the Heron system is important because it is more qubits than can be simulated on any supercomputer in any HPC center in the world. (We are not certain whether Microsoft, AWS, Meta Platforms, or xAI has enough GPUs to simulate such a machine.)
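To put a rough number on why 156 qubits is out of reach for brute-force simulation, here is a back-of-the-envelope sketch. It assumes a full state-vector simulation that stores one double-precision complex amplitude (16 bytes) for each of the 2^n basis states. That is our assumption, not a method IBM or RIKEN described, and approximate techniques such as tensor networks can handle some circuits with far less memory. The function names and the 5 PiB example machine are illustrative only.

```python
# Back-of-the-envelope memory estimate for a full state-vector simulation.
# Assumption: one complex128 amplitude (two 64-bit floats) per basis state.
BYTES_PER_AMPLITUDE = 16


def statevector_bytes(num_qubits: int) -> int:
    """Bytes needed to hold all 2**n complex amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def max_qubits_for_memory(memory_bytes: int) -> int:
    """Largest n whose full state vector fits in the given amount of memory."""
    n = 0
    while statevector_bytes(n + 1) <= memory_bytes:
        n += 1
    return n


if __name__ == "__main__":
    for n in (30, 40, 50, 156):
        print(f"{n:>3} qubits: {statevector_bytes(n):.3e} bytes")

    # Hypothetical machine with 5 PiB of memory (illustrative, not a real spec).
    hypothetical_memory = 5 * 2**50
    print("Qubits that fit in 5 PiB:", max_qubits_for_memory(hypothetical_memory))
```

Under these assumptions, every added qubit doubles the memory bill, and a 156-qubit state vector works out to 2^160 bytes, which is far beyond any storage system ever built.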

IBM wants to be able to create a fully fault tolerant – isn’t it really fault correcting? – quantum supercomputer by the end of the decade. The Quantum System Two machines already use Xilinx FPGAs with on-board RF modulators to manipulate the state of qubits, and IBM and AMD will be working together to advance the state of the art here.

Big Blue has, of course, worked with AMD in the past. Way back in the dawn of time, as we were breaking into the petaflops era of supercomputing, IBM partnered with AMD to pair its PowerXCell vector accelerators with AMD’s Opteron processors to create the 1.7 petaflops “Roadrunner” massively parallel and hybrid supercomputer at Los Alamos National Laboratory.

This time around, IBM is going to AMD for compute engines once again, but it is also going to it for expertise.

“We don’t intend to build QPUs,” Ralph Wittig, who is the head of research and advanced development at AMD and also a Corporate Fellow, tells The Next Platform. “But we look for really strong partners, and IBM and AMD have long historical connections, technology management chains, et cetera. So this really felt like the right partnership. At the other national labs around the world, we are seeing the investigation into quantum processing and trying to solve these many body problems. They all get expressed as quantum problems, whereas simulating using a QPU is far more efficient than trying to approximate many body problems that can map onto a quantum unit trying to approximate those with AI.”

Basically, we are going to have enough actual qubits that we do not have to simulate them anymore, which perhaps is also why Nvidia co-founder and chief executive officer Jensen Huang was cozying up to the usual suspects in quantum computing at the recent GTC 2025 conference.

Just as AI models have many stages that use GPU compute, so does quantum computing. So don’t think the GPU makers are not going to do well selling their massively parallel compute engines (they are not really graphics engines anymore, are they?) to companies installing quantum computers. Wittig says that the quantum pipeline will use GPUs to precondition data and push it onto the QPUs in a quantum system, and then once there are some results, AI models usually chew on them, which also takes GPUs.

IBM has lots of expertise in quantum computing, but it no longer has a commodity server business, and it has long since abandoned the PowerXCell vector engines used in Roadrunner. It has also given up any hopes of using its Power CPUs to compete against X86 and Arm directly except at the high end of enterprise computing, where it can still win any throwdown. In the absence of IBM in HPC, AMD paired with Hewlett Packard Enterprise has filled in a lot of the gap left behind when IBM sold off its System x business and its partnership with Nvidia for Power-GPU hybrid supercomputing went on the rocks. (Some might say an ascending AMD with its aggressive Epyc CPU and Instinct GPU roadmaps forced that breakup because, we think, neither IBM nor Nvidia was making much in the way of money selling supercomputers based on their compute engines to the US Department of Energy’s HPC labs.)

“There’s no such thing as a quantum-only algorithm,” says Scott Crowder, vice president of quantum adoption and business development at IBM. “Any of the workflows are a combination of classical subroutines and quantum subroutines, so we are looking for partners for the acceleration of the classical pieces that go with our acceleration of the quantum pieces in that workflow.”
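To make that split between classical and quantum subroutines concrete, here is a minimal toy sketch of a hybrid loop. It is not IBM’s or AMD’s workflow: the “quantum subroutine” is a one-qubit circuit simulated with NumPy as a stand-in for a QPU call, the “classical subroutine” is a plain gradient descent step using the parameter-shift rule, and all function names, the starting angle, and the learning rate are our own illustrative choices.

```python
import numpy as np


def quantum_subroutine(theta: float) -> float:
    """Stand-in for a QPU call: prepare Ry(theta)|0> and return <Z>.

    Simulated classically here; on real hardware this would be a job
    submitted to the quantum system.
    """
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    pauli_z = np.array([[1.0, 0.0], [0.0, -1.0]])
    return float(state @ pauli_z @ state)


def classical_subroutine(theta: float, learning_rate: float = 0.4) -> float:
    """Classical optimizer step: gradient descent on the measured energy.

    The gradient comes from the parameter-shift rule, which needs two
    extra calls to the quantum subroutine.
    """
    grad = 0.5 * (
        quantum_subroutine(theta + np.pi / 2)
        - quantum_subroutine(theta - np.pi / 2)
    )
    return theta - learning_rate * grad


def run_hybrid_loop(theta: float = 0.3, steps: int = 100) -> tuple[float, float]:
    """Alternate classical updates and quantum evaluations to minimize <Z>."""
    for _ in range(steps):
        theta = classical_subroutine(theta)
    return theta, quantum_subroutine(theta)


if __name__ == "__main__":
    final_theta, final_energy = run_hybrid_loop()
    print(f"theta = {final_theta:.4f}, <Z> = {final_energy:.6f}")
```

Even in this toy, the quantum piece only ever evaluates an expectation value, while everything that decides what to evaluate next runs on classical hardware. That is the part of the workflow where CPUs and GPUs come in.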

To elaborate on the example above a bit, Crowder says that very large scale supercomputers have been built to solve chemistry problems with simulations, but these are not very efficient and they use approximate methods because you can’t really do the calculations on any machine of any size. Some approximate methods are good, others not so great. But quantum calculations are “significantly more efficient, exponentially more efficient,” as Crowder put it, than classical approaches. To stress the point: in one chemistry simulation using density matrix renormalization group (DMRG) calculations, it takes Fugaku around eight hours to do the math, but running the simulation in hybrid mode uses about 12 minutes of Heron system time and about an hour and a half on the classical side. At the very least, this cuts the time by more than 4X.

“The accuracy of the classical approximate method is still slightly better than the accuracy of the combo quantum plus classical method,” Crowder admits. “But we are narrowing that gap significantly and we think within the next eighteen months, we are going to cross over that line and the quantum plus classical approach will be better than the classical approach alone.”