This post is cowritten with Jimmy Cancilla from Rapid7.

Organizations are managing increasingly distributed systems, which span on-premises infrastructure, cloud services, and edge devices. As systems become interconnected and exchange data, the potential pathways for exploitation multiply, and vulnerability management becomes critical to managing risk. Vulnerability management (VM) is the process of identifying, classifying, prioritizing, and remediating security weaknesses in software, hardware, virtual machines, Internet of Things (IoT) devices, and similar assets. When new vulnerabilities are discovered, organizations are under pressure to remediate them. Delayed responses can open the door to exploits, data breaches, and reputational harm. For organizations with thousands or millions of software assets, effective triage and prioritization for the remediation of vulnerabilities are critical.

To support this process, the Common Vulnerability Scoring System (CVSS) has become the industry standard for evaluating the severity of software vulnerabilities. CVSS v3.1, published by the Forum of Incident Response and Security Teams (FIRST), provides a structured and repeatable framework for scoring vulnerabilities across multiple dimensions: exploitability, impact, attack vector, and others. With new threats emerging constantly, security teams need standardized, near real-time data to respond effectively. CVSS v3.1 is used by organizations such as NIST and major software vendors to prioritize remediation efforts, support risk assessments, and comply with standards.

There is, however, a critical gap that emerges before a vulnerability is formally standardized. When a new vulnerability is disclosed, vendors aren’t required to include a CVSS score alongside the disclosure. Additionally, third-party organizations such as NIST aren’t obligated or bound by specific timelines to analyze vulnerabilities and assign CVSS scores. As a result, many vulnerabilities are made public without a corresponding CVSS score. This situation can leave customers uncertain about how to respond: should they patch the newly discovered vulnerability immediately, monitor it for a few days, or deprioritize it? Our goal with machine learning (ML) is to provide Rapid7 customers with a timely answer to this critical question.

Rapid7 helps organizations protect what matters most so innovation can thrive in an increasingly connected world. Rapid7’s comprehensive technology, services, and community-focused research remove complexity, reduce vulnerabilities, monitor for malicious behavior, and shut down attacks. In this post, we share how Rapid7 implemented end-to-end automation for the training, validation, and deployment of ML models that predict CVSS vectors. With these predictions, Rapid7 customers have the information they need to accurately understand their risk and prioritize remediation measures.

Rapid7’s solution architecture

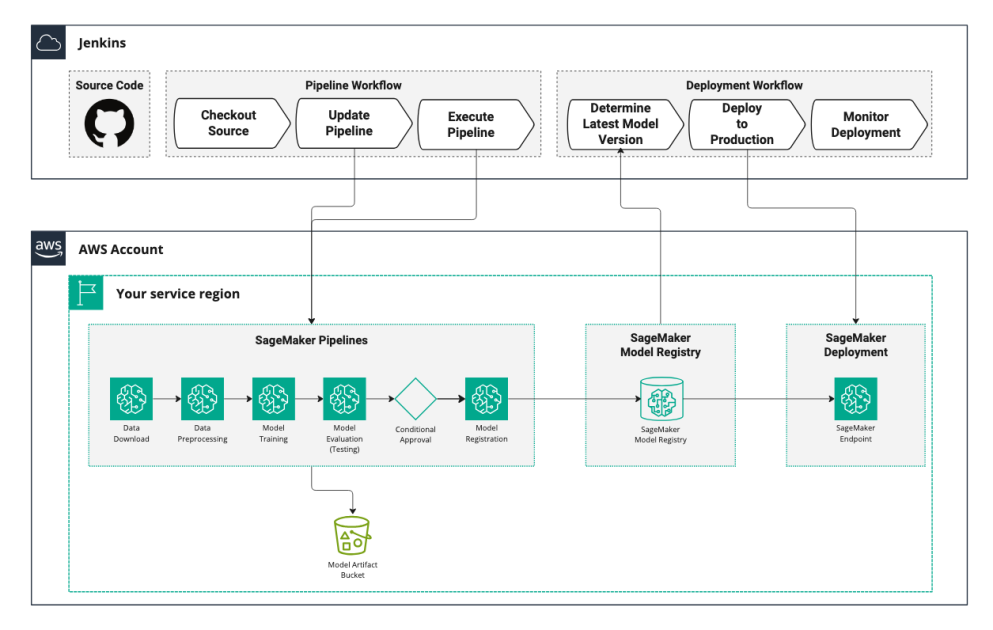

Rapid7 built their end-to-end solution using Amazon SageMaker AI, the Amazon Web Services (AWS) fully managed ML service to build, train, and deploy ML models into production environments. SageMaker AI provides powerful compute for ephemeral tasks, orchestration tools for building automated pipelines, a model registry for tracking model artifacts and versions, and scalable deployment to configurable endpoints.

Rapid7 integrated SageMaker AI with their DevOps tools (GitHub for version control and Jenkins for build automation) to implement continuous integration and continuous deployment (CI/CD) for the ML models used for CVSS scoring. By automating model training and deployment, Rapid7’s CVSS scoring solutions stay up to date with the latest data without additional operational overhead.

The following diagram illustrates the solution architecture.

Orchestrating with SageMaker AI Pipelines

The first step in the journey toward end-to-end automation was removing manual activities previously performed by data scientists. This meant migrating experimental code from Jupyter notebooks to production-ready Python scripts. Rapid7 established a project structure to support both development and production. Each step in the ML pipeline—data download, preprocessing, training, evaluation, and deployment—was defined as a standalone Python module in a common directory.

Designing the pipeline

After refactoring, pipeline steps were moved to SageMaker Training and Processing jobs for remote execution. Steps in the pipeline were defined using Docker images with the required libraries, and orchestrated using SageMaker Pipelines in the SageMaker Python SDK.

CVSS v3.1 vectors consist of eight independent metrics combined into a single vector. To produce an accurate CVSS vector, eight separate models were trained in parallel. However, the data used to train these models was identical. This meant that the training process could share common download and preprocessing steps, followed by separate training, validation, and deployment steps for each metric. The following diagram illustrates the high-level architecture of the implemented pipeline.

Data loading and preprocessing

The data used to train the model comprised existing vulnerabilities and their associated CVSS vectors. This data source is updated constantly, which is why Rapid7 decided to download the most recent data available at training time and upload it to Amazon Simple Storage Service (Amazon S3) for use by subsequent steps. Rapid7 then implemented a preprocessing step to:

Structure the data to facilitate ingestion and use in training.

Split the data into three sets: training, validation, and testing (80%, 10%, and 10%).

The preprocessing step was defined with a dependency on the data download step so that the new dataset was available before a new preprocessing job was started. The outputs of the preprocessing job—the resulting training, validation, and test sets—are also uploaded to Amazon S3 to be consumed by the training steps that follow.
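The 80/10/10 split described above can be sketched with the Python standard library. This is an illustration only: the function name, the fixed seed, and the placeholder records are assumptions, not Rapid7's preprocessing code.

```python
import random


def split_dataset(records, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle records reproducibly and split them into train/validation/test lists.

    Whatever remains after the train and validation slices becomes the test set.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical records: (vulnerability description, CVSS vector) pairs.
records = [
    (f"description {i}", "CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
    for i in range(1000)
]
train, val, test = split_dataset(records)
print(len(train), len(val), len(test))
```

Because all eight models train on the same data, a split like this only needs to run once per pipeline execution.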

Model training, evaluation, and deployment

For the remaining pipeline steps, Rapid7 executed each step eight times—one time for each metric in the CVSS vector. Rapid7 iterated through each of the eight metrics to define the corresponding training, evaluation, and deployment steps using the SageMaker Pipelines SDK.

The loop follows a similar pattern for each metric. The process starts with a training job using PyTorch framework images provided by Amazon SageMaker AI. The following is a sample script for defining a training job.

The PyTorch Estimator creates model artifacts that are automatically uploaded to the Amazon S3 location defined in the output path parameter. The same script is used for each of the CVSS v3.1 metrics; each run targets a different metric because a different cvss_metric value is passed to the training script as an environment variable.
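To illustrate how one training script could serve all eight metrics, the following sketch reads the target metric from the environment and extracts the matching label from a CVSS vector string. The environment variable name `CVSS_METRIC` and both helper functions are assumptions made for this example; the metric abbreviations and their allowed values come from the CVSS v3.1 specification.

```python
import os

# The eight CVSS v3.1 base metrics and the values each one can take.
CVSS_METRIC_CLASSES = {
    "AV": ["N", "A", "L", "P"],  # Attack Vector
    "AC": ["L", "H"],            # Attack Complexity
    "PR": ["N", "L", "H"],       # Privileges Required
    "UI": ["N", "R"],            # User Interaction
    "S": ["U", "C"],             # Scope
    "C": ["H", "L", "N"],        # Confidentiality impact
    "I": ["H", "L", "N"],        # Integrity impact
    "A": ["H", "L", "N"],        # Availability impact
}


def resolve_training_target(environ=None):
    """Return (metric, class labels) for the metric named in the environment.

    CVSS_METRIC is a hypothetical variable name chosen for this sketch.
    """
    environ = os.environ if environ is None else environ
    metric = environ.get("CVSS_METRIC")
    if metric not in CVSS_METRIC_CLASSES:
        raise ValueError(f"Unknown or missing CVSS metric: {metric!r}")
    return metric, CVSS_METRIC_CLASSES[metric]


def extract_label(vector, metric):
    """Pull one metric's value out of a vector such as 'CVSS:3.1/AV:N/AC:L/...'."""
    # Skip the 'CVSS:3.1' prefix, then map each 'KEY:VALUE' pair.
    parts = dict(item.split(":") for item in vector.split("/")[1:])
    return parts[metric]


metric, classes = resolve_training_target({"CVSS_METRIC": "PR"})
label = extract_label("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H", metric)
print(metric, classes, label)
```

With this shape, the only difference between the eight training jobs is the value of one variable, which keeps the training code identical across metrics.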

The SageMaker Pipeline is configured to trigger the execution of a model evaluation step when the model training job for that CVSS v3.1 metric is finished. The model evaluation job takes the newly trained model and test data as inputs, as shown in the following step definition.

The processing job is configured to create a PropertyFile object to store the results from the evaluation step. Here is a sample of what might be found in this file:

This information is critical in the last step of the sequence followed for each metric in the CVSS vector. Rapid7 wants to ensure that models deployed in production meet quality standards, and they do that by using a ConditionStep that allows only models whose accuracy is above a critical value to be registered in the SageMaker Model Registry. This process is repeated for all eight models.
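The quality gate can be sketched in plain Python, independent of the SageMaker SDK. The report layout, the function names, and the 0.75 threshold are hypothetical, and the labels are toy values, not Rapid7's evaluation results.

```python
import json


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground-truth labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def build_report(metric, y_true, y_pred):
    """Build an evaluation report (hypothetical layout) for one CVSS metric."""
    return {
        "cvss_metric": metric,
        "metrics": {"accuracy": {"value": accuracy(y_true, y_pred)}},
    }


def should_register(report, threshold):
    """Gate registration on accuracy; 'at or above' is an assumption here."""
    return report["metrics"]["accuracy"]["value"] >= threshold


# Toy labels for the Privileges Required metric (illustrative only).
y_true = ["N", "N", "L", "H", "N"]
y_pred = ["N", "N", "L", "N", "N"]

report = build_report("PR", y_true, y_pred)
print(json.dumps(report, indent=2))
print("register:", should_register(report, threshold=0.75))
```

In the pipeline itself, the same decision is made by the ConditionStep, which reads the accuracy value from the evaluation step's property file.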

Defining the pipeline

With all the steps defined, a pipeline object is created with all the steps for all eight models. The graph for the pipeline definition is shown in the following image.
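As a rough textual companion to the pipeline graph, the dependency structure described in this post can be modeled with the standard library's `graphlib`. The step names are hypothetical, and this sketch only models ordering; it does not use the SageMaker Pipelines SDK.

```python
from graphlib import TopologicalSorter

# Abbreviations of the eight CVSS v3.1 base metrics.
CVSS_METRICS = ["AV", "AC", "PR", "UI", "S", "C", "I", "A"]


def build_pipeline_graph(metrics):
    """Return {step name: set of prerequisite step names}."""
    graph = {"download": set(), "preprocess": {"download"}}
    for m in metrics:
        graph[f"train-{m}"] = {"preprocess"}
        # Evaluation needs the trained model and the test set from preprocessing.
        graph[f"evaluate-{m}"] = {f"train-{m}", "preprocess"}
        # Conditional registration runs only after evaluation has finished.
        graph[f"register-{m}"] = {f"evaluate-{m}"}
    return graph


graph = build_pipeline_graph(CVSS_METRICS)

# Group steps into stages: every step in a stage can run in parallel.
sorter = TopologicalSorter(graph)
sorter.prepare()
stages = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    stages.append(ready)
    sorter.done(*ready)

for index, stage in enumerate(stages):
    print(index, stage)
```

The shared download and preprocessing steps each form a stage of their own, followed by three stages of eight parallel steps, one per metric.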

Managing models with SageMaker Model Registry

SageMaker Model Registry is a repository for storing, versioning, and managing ML models throughout the machine learning operations (MLOps) lifecycle. The model registry enables the Rapid7 team to track model artifacts and their metadata (such as performance metrics), and streamline model version management as their CVSS models evolve. Each time a new model is added, a new version is created under the same model group, which helps track model iterations over time. Because new versions are evaluated for accuracy before registration, they’re registered with an Approved status. If a model’s accuracy falls below the threshold enforced by the pipeline’s condition step, the automated deployment pipeline will detect this and send an alert to notify the team about the failed deployment. This enables Rapid7 to maintain an automated pipeline that serves the most accurate model available to date without requiring manual review of new model artifacts.

Deploying models with inference components

When a set of CVSS scoring models has been selected, they can be deployed in a SageMaker AI endpoint for real-time inference, allowing them to be invoked to calculate a CVSS vector as soon as new vulnerability data is available. SageMaker AI endpoints are accessible URLs where applications can send data and receive predictions. Internally, the CVSS v3.1 vector is prepared using predictions from the eight scoring models, followed by postprocessing logic. Because each invocation runs each of the eight CVSS scoring models one time, their deployment can be optimized for efficient use of compute resources.
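A minimal sketch of the postprocessing idea, assuming each model returns the abbreviated value for its metric: the eight predictions are validated and joined in the order defined by the CVSS v3.1 specification. The function name and input format are assumptions for illustration.

```python
# Metric order and allowed values follow the CVSS v3.1 base metric group.
CVSS_METRIC_ORDER = ["AV", "AC", "PR", "UI", "S", "C", "I", "A"]
ALLOWED_VALUES = {
    "AV": {"N", "A", "L", "P"},
    "AC": {"L", "H"},
    "PR": {"N", "L", "H"},
    "UI": {"N", "R"},
    "S": {"U", "C"},
    "C": {"H", "L", "N"},
    "I": {"H", "L", "N"},
    "A": {"H", "L", "N"},
}


def assemble_vector(predictions):
    """Combine eight per-metric predictions into one CVSS v3.1 vector string."""
    missing = [m for m in CVSS_METRIC_ORDER if m not in predictions]
    if missing:
        raise ValueError(f"Missing predictions for: {missing}")
    parts = []
    for metric in CVSS_METRIC_ORDER:
        value = predictions[metric]
        if value not in ALLOWED_VALUES[metric]:
            raise ValueError(f"Invalid value {value!r} for metric {metric}")
        parts.append(f"{metric}:{value}")
    return "CVSS:3.1/" + "/".join(parts)


# Hypothetical predictions, one per scoring model.
predictions = {"AV": "N", "AC": "L", "PR": "N", "UI": "N",
               "S": "U", "C": "H", "I": "H", "A": "H"}
vector = assemble_vector(predictions)
print(vector)
```

Validating each value before joining means a single malformed prediction fails loudly instead of producing an invalid vector.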

When the deployment script runs, it checks the model registry for new versions. If it detects an update, it immediately deploys the new version to a SageMaker endpoint.
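The version check could look roughly like the following, with the registry and the deployed state modeled as plain Python data. The `ModelVersion` class, the group names, and the `plan_deployments` helper are hypothetical; a real script would query the SageMaker Model Registry instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    group: str    # model group name, for example one group per CVSS metric
    version: int  # version number within the group
    status: str   # approval status, such as "Approved" or "Rejected"


def latest_approved(versions):
    """Return the highest-numbered Approved version, or None if there is none."""
    approved = [v for v in versions if v.status == "Approved"]
    return max(approved, key=lambda v: v.version, default=None)


def plan_deployments(registry, deployed):
    """Return {group: version} for every group with a newer approved version.

    registry maps group name to a list of ModelVersion objects;
    deployed maps group name to the version currently being served.
    """
    plan = {}
    for group, versions in registry.items():
        candidate = latest_approved(versions)
        if candidate is not None and candidate.version > deployed.get(group, 0):
            plan[group] = candidate.version
    return plan


# Hypothetical registry contents and deployed versions.
registry = {
    "cvss-AV": [ModelVersion("cvss-AV", 1, "Approved"),
                ModelVersion("cvss-AV", 2, "Approved")],
    "cvss-AC": [ModelVersion("cvss-AC", 1, "Approved"),
                ModelVersion("cvss-AC", 2, "Rejected")],
}
deployed = {"cvss-AV": 1, "cvss-AC": 1}
plan = plan_deployments(registry, deployed)
print(plan)
```

Only groups with a newer approved version appear in the plan, so running the check repeatedly is harmless when nothing has changed.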

Ensuring cost efficiency

Cost efficiency was a key consideration in designing this workflow. Usage patterns for vulnerability scoring are bursty, with periods of high activity followed by long idle intervals. Maintaining dedicated compute resources for each model would be unnecessarily expensive given these idle times. To address this issue, Rapid7 implemented inference components in their SageMaker endpoint. Inference components allow multiple models to share the same underlying compute resources, which significantly improves cost efficiency, particularly for bursty inference patterns. This approach enabled Rapid7 to deploy all eight models on a single instance. Performance tests showed that inference requests could be processed in parallel across all eight models, consistently achieving sub-second response times (100–200 ms).
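The parallel request pattern can be sketched from the caller's side with a thread pool. The stub predictors below are hypothetical stand-ins for calls to the eight models behind the shared endpoint; they return fixed values so the example runs without AWS access.

```python
from concurrent.futures import ThreadPoolExecutor


def predict_all(predictors, description):
    """Call every per-metric predictor concurrently and collect the results.

    predictors maps a CVSS metric name to a callable that takes the
    vulnerability description and returns that metric's predicted value.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(predictors))) as pool:
        futures = {
            metric: pool.submit(predict, description)
            for metric, predict in predictors.items()
        }
        # result() blocks until the corresponding call has finished.
        return {metric: future.result() for metric, future in futures.items()}


def make_stub(value):
    """Build a stand-in predictor that always returns the same value."""
    return lambda description: value


# Hypothetical fixed outputs, one stub per scoring model.
stub_outputs = {"AV": "N", "AC": "L", "PR": "N", "UI": "N",
                "S": "U", "C": "H", "I": "H", "A": "H"}
predictors = {metric: make_stub(value) for metric, value in stub_outputs.items()}

results = predict_all(predictors, "Buffer overflow in an example parser")
print(results)
```

Issuing the eight calls concurrently means end-to-end latency is governed by the slowest model rather than the sum of all eight.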

Monitoring models in production

Rapid7 continually monitors the models in production to ensure high availability and efficient use of compute resources. The SageMaker AI endpoint automatically uploads logs and metrics into Amazon CloudWatch, which are then forwarded and visualized in Grafana. As part of regular operations, Rapid7 monitors these dashboards to visualize metrics such as model latency, the number of instances behind the endpoint, and invocations and errors over time. Additionally, alerts are configured on response time metrics to maintain system responsiveness and prevent delays in the enrichment pipeline. For more information on the various metrics and their usage, refer to the AWS blog post, Best practices for load testing Amazon SageMaker real-time inference endpoints.

Conclusion

End-to-end automation of vulnerability scoring model development and deployment has given Rapid7 a consistent, fully automated process. The previous manual process for retraining and redeploying these models was fragile, error-prone, and time-intensive. By implementing an automated pipeline with SageMaker, the engineering team now saves at least 2–3 days of maintenance work each month. By eliminating 20 manual operations, Rapid7 software engineers can focus on delivering higher-impact work for their customers. Furthermore, by using inference components, all models can be consolidated onto a single ml.m5.2xlarge instance, rather than deploying a separate endpoint (and instance) for each model. This approach cuts the hourly compute cost for this workload roughly in half, a saving of approximately 50%. In building this pipeline, Rapid7 benefited from features that reduced time and cost across multiple steps. For example, using custom containers with the necessary libraries improved startup times, while inference components enabled efficient resource utilization. Both were instrumental in building an effective solution.

Most importantly, this automation means that Rapid7 customers always receive the most recently published CVEs with a CVSS v3.1 score assigned. This is especially important for InsightVM because Active Risk Scores, Rapid7’s latest risk strategy for understanding vulnerability impact, rely on the CVSS v3.1 score as a key component in their calculation. Providing accurate and meaningful risk scores is critical for the success of security teams, empowering them to prioritize and address vulnerabilities more effectively.

In summary, automating model training and deployment with Amazon SageMaker Pipelines has enabled Rapid7 to deliver scalable, reliable, and efficient ML solutions. By embracing these best practices and lessons learned, teams can streamline their workflows, reduce operational overhead, and remain focused on driving innovation and value for their customers.

About the authors

Jimmy Cancilla is a Principal Software Engineer at Rapid7, focused on applying machine learning and AI to solve complex cybersecurity challenges. He leads the development of secure, cloud-based solutions that use automation and data-driven insights to improve threat detection and vulnerability management. He is driven by a vision of AI as a tool to augment human work, accelerating innovation, enhancing productivity, and enabling teams to achieve more with greater speed and impact.


Felipe Lopez is a Senior AI/ML Specialist Solutions Architect at AWS. Prior to joining AWS, Felipe worked with GE Digital and SLB, where he focused on modeling and optimization products for industrial applications.


Steven Warwick is a Senior Solutions Architect at AWS, where he leads customer engagements to drive successful cloud adoption and specializes in SaaS architectures and Generative AI solutions. He produces educational content including blog posts and sample code to help customers implement best practices, and has led programs on GenAI topics for solution architects. Steven brings decades of technology experience to his role, helping customers with architectural reviews, cost optimization, and proof-of-concept development.
