It looked like a perfect mess: noodles and limbs appearing in something approximating a person, but more like a chaotic and uninspiring Vincent van Gogh painting. The Fresh Prince himself, Will Smith, was somewhat recognisable, but only through his trademark Nineties high-top fade and more modern-era goatee. The clip did not look like a real person. It was not Will Smith eating spaghetti, not really.

What it was, however, was a short clip portraying the pinnacle of publicly available generative AI software in 2023, made on Modelscope’s Text2Video generator and posted on Reddit. Most people had a laugh at it; some wondered if this could be the next tech marvel to break into the mainstream. The metaverse was being largely vacated, and NFTs were becoming practically worthless.

Fast forward to today, and in an AI-generated video made using Google’s new Veo 3 programme, Will Smith eats spaghetti again. The noodles sound a bit too crunchy, but there is no denying that the clip is shockingly realistic.

The video is a good representation of the brisk pace of generative AI’s refinement. Text production, video, image and audio generation, and deepfake technology have all improved at lightning speed, to the delight of tech companies, their shareholders and malicious actors.

Its use in political misinformation is now commonplace, but its impact is disputed.

But a more pernicious kind of misinformation is being deployed. In short, if it can make Smith eat spaghetti, can it not make you, or your boss, or your family eat it too?

Live deepfakes in just three clicks

Since late 2018, Sensity has been watching and countering the rise of the deepfake and its implementation in scams and fraud, both by state actors and lone cybercriminals.

In a report, Sensity found 2,298 tools available for AI face swapping, lip-syncing or facial re-enactment. Many of these are open source, allowing creators to harness “sophisticated tools with unprecedented ease”.

“My suggestion was to ask them to put a hand in front of their face. That would break the deepfake. But now there are models where you can touch your face, even shine a light on it, and the model doesn’t break,” Francesco Cavalli, co-founder and chief operating officer of Sensity, tells me.

A programme posted on tech developer platform GitHub is one of many that have risen to prominence in the last year off the back of viral videos of users turning their faces into those of Robert Downey Jr or Mark Zuckerberg with surprising realism. The programme advertises “live deepfakes in just three clicks” and prompts potential users to make fun videos or surprise people on live chat.

It’s not hard to find out where else this technology is being put to more malicious use. Last year, a finance worker was duped into paying $25m during a video conference call. He was invited in and, to his knowledge, was met with the faces and voices of his co-workers. They were all deepfakes.

Stories like this have had an impact. A 2024 report by the Alan Turing Institute showed that, though people were aware of the problem, with 90 per cent of people concerned about the issue, most respondents were not confident in their ability to detect deepfakes.

As for how easy it is, Cavalli tells me that most deepfake programmes available online need only one picture to create a semi-believable facial model. No doubt you have a social media profile with a wealth of such content, and your photos have probably already been used to train Meta’s AI.

Sensity helps companies guard against this type of situation, scanning the faces and voices of video call members every few seconds to detect any anomalies. Cavalli is confident the company can keep up with the rise and refinement of deepfakes, though he says it will be “challenging”. As for humans hoping to identify fakes? “Pretty much impossible.”

Audio fakes are where it’s at

Lilian Edwards, visiting professor at the Centre for Regulation of the Creative Economy (CREATe) at Glasgow University and director of Pangloss Consulting, isn’t as sold on the potential of deepfakes and generated video for personal misinformation as she is on that of other AI tech. “Audio fakes are where it’s at,” she says. “After all, there are more chances of a scam being identified as fake if it is a video.”

Audio is where things can get pretty personal.

The spate of text message scams involving impersonations of relatives has taken on a whole new dimension. Last month, Santander warned that “Hi Mum” scammers – who send fraudulent messages from a “close relative” in an emergency situation asking for money or personal details – were using voice-cloning technology to further lure victims.

A Manitoba woman received a call from what she thought was her son, asking if he could make an admission to her. She was confused and (rightly) hung up and rang her son, waking him. He hadn’t called her. “It was definitely my son’s voice,” the woman told CBC News.

And this week it was revealed that a new AI voice-cloning tool from a British firm claims to be able to reproduce a range of UK accents more accurately than some of its US and Chinese rivals. The company, Synthesia, spent a year recording people in studios and gathering online material to compile a database of UK voices with regional accents.

These were then used to train a product called Express-Voice, which can clone a real person’s voice or generate a synthetic voice with a view to using it in training videos, sales support and presentations. But you can see how, in the wrong hands, similar technology could pose some serious problems.

Much like the deepfake, if there is audio of you available, a believable audio fake can probably be made. And this isn’t just for podcasters or lecturers. Leading vocal AI company ElevenLabs boasts it can make voices “indistinguishable from the real thing” with only “minutes of audio”. This is what led the National Cybersecurity Alliance (NCA) to suggest using “code words” to properly identify yourself to co-workers and family.

But Edwards is quick to centre the current focus on AI’s use in producing illicit adult material. “I’d say 90 or 95 per cent of deepfake videos are non-consensual videos of a sexual nature. It’s a heavily gendered problem.”

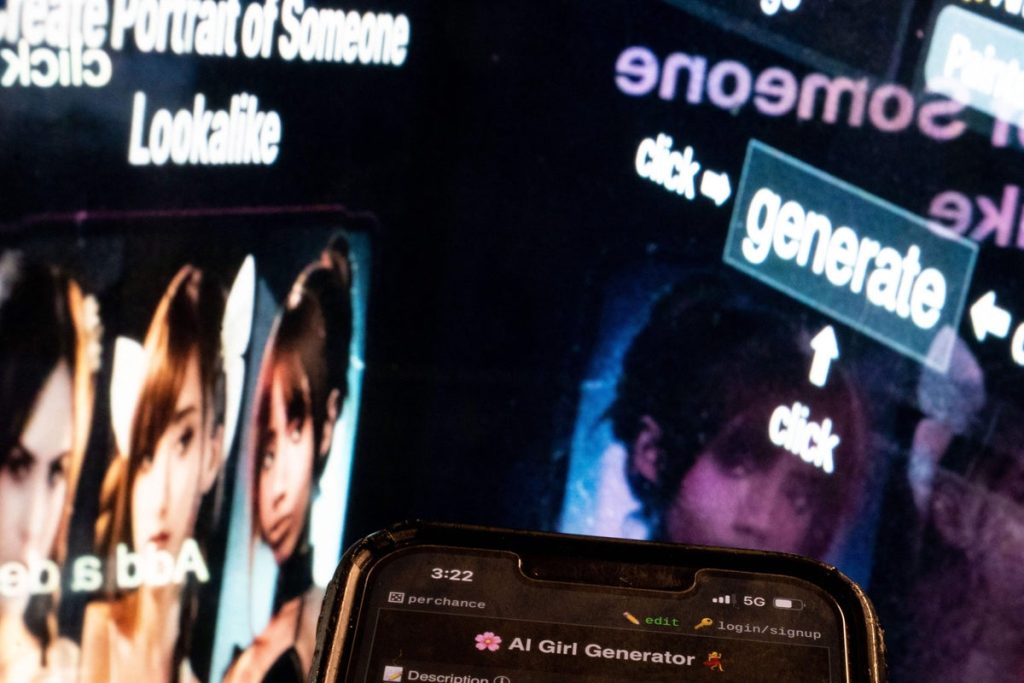

In these corners of the internet, women on sites like OnlyFans are already having their identity stolen and their likeness used in deepfake video content. Similar videos of celebrities are sold, as are images of child sexual abuse. But it’s spilling into the mainstream, too.

Nudify apps – where an AI programme takes a picture and approximates a non-consensual nude image – are also gaining traction. These images are turning up on social media as well, with platforms struggling to stem the tide. “The people behind these exploitative apps constantly evolve their tactics to evade detection,” a Meta rep told CBS News.

Thankfully, on these points, Edwards has good news. “The UK is something of a leader in legislating in this space. We’ve already had a long series of legislative amendments to basically ensure that making a deepfake, image or video, is illegal as well as sharing a ‘real’ or fake image.”

It’s relieving to hear, but what about those areas of our society that are not prepared to determine what is real and what is not?

“Think of traffic and parking disputes. What’s stopping someone from producing a believable image where their car isn’t on a yellow line?” Or someone mocking up dashcam footage that shows that you were in the wrong? Money, reputations and liberty could be at stake in such situations.

Whether or not these sorts of tricks would work is another question. However, it’s reasonable to assume that courts and companies are not currently prepared for this kind of thing, and such personal misinformation could clog up an already crowded system.

“Insurance companies are already overwhelmed with fake claims, so they must be thinking about it,” she says.

How AI is turning against us

Malicious actors are a part of life in a world galvanised by the confluence of the real and the virtual. But perhaps the most malicious result of progress in generative AI is the misinformation we are willing to inflict on ourselves. And this can be the most personal as well, shared only between you, your phone and your algorithm.

Sandra Wachter, professor of technology and regulation at the University of Oxford, has followed the development of what are known as “hallucinations” within chatbots like the popular ChatGPT. These are demonstrably wrong pieces of information, produced without an explicit intent to harm or misinform.

We know that salespeople may try to cajole us into buying something. But with ChatGPT, “I assume no one is trying to trick me, and no one is trying to. But that’s the problem,” she says.

There are no active volcanoes in England, but that didn’t stop ChatGPT from naming Mount Snowdon when Wachter and her team asked. “The system is not designed to tell you the truth. The system is designed, as usual, to keep you as engaged as long as possible,” she says. These chatbots are being refined constantly, but it’s likely that this tendency for engagement over truth is being refined along with them.

Reports have been trickling in over the last year of conversations with ChatGPT leading to divorces and even suicides. Yet there is potential for more widespread misinformation beneath the surface level of these shocking stories. An astounding number of people have already integrated it into their workflows and even use it as a companion in their general life – OpenAI’s COO Brad Lightcap counts 400 million active weekly users, and the company is aiming for a billion by year’s end. All these people are interacting with a programme that many use as a substitute for a search engine, but one with a tendency to mislead.

The same goes for AI-generated video or image content pumped into our vertical feeds. A video recently went viral of an “emotional support kangaroo” being refused entry onto an aeroplane. A ridiculous but harmless video. Many were still quick to believe it, saying it was the first time they’d been tricked.

It may be the video’s harmlessness that helped it slip under people’s radar. We see all sorts of horrific and absurd things when we scroll on our phones, so why not? It is what Edwards has referred to for some time as “unreality”, a hallucinatory virtual world asserting its new subjective reality.

After all, social media’s algorithms have been pressing our buttons to keep us engaged for some time. But there is now potential for an endless stream of rage-inducing AI content too: deepfaked people espousing controversial opinions or fake interviews with nameless women on the street, designed to cause misogynistic or racist anger, with the odd airport wallaby to speed up your journey to unreality. If you’re watching it, ingesting it, does it really matter if it’s real?

It is clear, in an age of “personal misinformation”, that a whole new layering of legal and technological defences is needed. However, perhaps what is even more important is the need for psychological defences if we are to remember that not everything we are looking at is as real as it may seem. And if we get scammed? Well, we’re only human after all…