This blog post is co-written with Jonas Neuman from HERE Technologies.

HERE Technologies, a 40-year pioneer in mapping and location technology, collaborated with the AWS Generative AI Innovation Center (GenAIIC) to improve developer productivity with a generative AI-powered coding assistant. The tool is designed to enhance the onboarding experience for HERE’s self-service Maps API for JavaScript. With it, HERE’s global developer community can quickly translate natural language queries into interactive map visualizations, streamlining the evaluation and adaptation of HERE’s mapping services.

New developers who try out these APIs for the first time often begin with questions such as “How can I generate a walking route from point A to B?” or “How can I display a circle around a point?” Although HERE’s API documentation is extensive, HERE recognized that accelerating the onboarding process could significantly boost developer engagement. HERE aims to enhance retention rates and create proficient product advocates through personalized experiences.

To create a solution, HERE collaborated with the GenAIIC. Our joint mission was to create an intelligent AI coding assistant that could provide explanations and executable code solutions in response to users’ natural language queries. The requirement was to build a scalable system that could translate natural language questions into HTML code with embedded JavaScript, ready for immediate rendering as an interactive map that users can see on screen.

The team needed to build a solution that accomplished the following:

Provide value and reliability by delivering correct, renderable code that is relevant to a user’s question

Facilitate a natural and productive developer interaction by providing code and explanations at low latency (as of this writing, around 60 seconds) while maintaining context awareness for follow-up questions

Preserve the integrity and usefulness of the feature within HERE’s system and brand by implementing robust filters for irrelevant or infeasible queries

Keep the cost of the system reasonable to maintain a positive ROI when scaled across the entire API system

Together, HERE and the GenAIIC built a solution based on Amazon Bedrock that balanced these goals against their inherent trade-offs. Amazon Bedrock is a fully managed service that provides access to foundation models (FMs) from leading AI companies through a single API, along with a broad set of capabilities, enabling you to build generative AI applications with built-in security, privacy, and responsible AI features. The service allows you to experiment with and privately customize different FMs using techniques like fine-tuning and Retrieval Augmented Generation (RAG), and build agents that execute tasks. Amazon Bedrock is serverless, alleviates infrastructure management needs, and seamlessly integrates with existing AWS services.

Built on the comprehensive suite of AWS managed and serverless services, including Amazon Bedrock FMs, Amazon Bedrock Knowledge Bases for RAG implementation, Amazon Bedrock Guardrails for content filtering, and Amazon DynamoDB for conversation management, the solution delivers a robust and scalable coding assistant without the overhead of infrastructure management. The result is a practical, user-friendly tool that can enhance the developer experience and offers a novel way to explore the API and quickly build location and navigation experiences.

In this post, we describe the details of how this was accomplished.

Dataset

We used the following resources as part of this solution:

Domain documentation – We used two publicly available resources: HERE Maps API for JavaScript Developer Guide and HERE Maps API for JavaScript API Reference. The Developer Guide offers conceptual explanations, and the API Reference provides detailed API function information.

Sample examples – HERE provided 60 cases, each containing a user query, HTML/JavaScript code solution, and brief description. These examples span multiple categories, including geodata, markers, and geoshapes, and were divided into training and testing sets.

Out-of-scope queries – HERE provided samples of queries beyond the HERE Maps API for JavaScript scope, which the large language model (LLM) should not respond to.

Solution overview

To develop the coding assistant, we designed and implemented a RAG workflow. Although standard LLMs can generate code, they often work with outdated knowledge and can’t adapt to the latest HERE Maps API for JavaScript changes or best practices. HERE Maps API for JavaScript documentation can significantly enhance coding assistants by providing accurate, up-to-date context. The storage of HERE Maps API for JavaScript documentation in a vector database allows the coding assistant to retrieve relevant snippets for user queries. This allows the LLM to ground its responses in official documentation rather than potentially outdated training data, leading to more accurate code suggestions.

The following diagram illustrates the overall architecture.

The solution architecture comprises four key modules:

Follow-up question module – This module handles follow-up questions by tracking conversation context. Chat histories are stored in DynamoDB and retrieved when users pose new questions. If a chat history exists, it is combined with the new question, and the LLM reformulates the follow-up question into a standalone query for downstream processing. The module maintains context awareness while recognizing topic changes: when the new question deviates from the previous conversation context, it is passed on as originally written.
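As a minimal sketch of this reformulation step, the input to the LLM could be assembled as shown below. The chat-history shape (an array of `{role, text}` turns), the function name, and the prompt wording are all hypothetical and are not taken from HERE's implementation.

```javascript
// Hypothetical helper: builds the text sent to the LLM that rewrites a
// follow-up question as a standalone query. The prompt wording and the
// history shape are illustrative assumptions, not the production prompt.
function buildReformulationInput(history, newQuestion) {
  // With no stored history there is nothing to resolve, so the question
  // is passed downstream unchanged.
  if (!history || history.length === 0) {
    return { needsReformulation: false, text: newQuestion };
  }
  const transcript = history
    .map((turn) => `${turn.role}: ${turn.text}`)
    .join("\n");
  const text = [
    "Rewrite the new question as a standalone question.",
    "If it changes topic, return it unchanged.",
    "",
    "Chat history:",
    transcript,
    "",
    `New question: ${newQuestion}`,
  ].join("\n");
  return { needsReformulation: true, text };
}

// First question in a session: no history yet.
const first = buildReformulationInput([], "How can I display a circle around a point?");

// Follow-up that only makes sense together with the previous turn.
const followUp = buildReformulationInput(
  [
    { role: "user", text: "How can I display a circle around a point?" },
    { role: "assistant", text: "Here is a map that draws a circle around the point." },
  ],
  "Can you make it red?"
);

console.log(first.needsReformulation, followUp.needsReformulation);
```

In this sketch the decision about whether the topic has changed is left to the LLM through the prompt instruction, which matches the description above of the model doing the reformulation.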

Scope filtering and safeguard module – This module evaluates whether queries fall within the HERE Maps API for JavaScript scope and determines their feasibility. We applied Amazon Bedrock Guardrails and Anthropic’s Claude 3 Haiku on Amazon Bedrock to filter out-of-scope questions. With a short natural language description, Amazon Bedrock Guardrails helps define a set of out-of-scope topics to block for the coding assistant, for example topics about other HERE products. Amazon Bedrock Guardrails also helps filter harmful content containing topics such as hate speech, insults, sex, violence, and misconduct (including criminal activity), and helps protect against prompt attacks. This makes sure the coding assistant follows responsible AI policies. For in-scope queries, we employ Anthropic’s Claude 3 Haiku model to assess feasibility by analyzing both the user query and retrieved domain documents. We selected Anthropic’s Claude 3 Haiku for its balance of performance and speed. The system generates standard responses for out-of-scope or infeasible queries, and viable questions proceed to response generation.
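To make the denied-topic and content-filter setup more concrete, the following sketch shows what a guardrail definition might look like. The field names follow the shape of the Amazon Bedrock `CreateGuardrail` request as we understand it and should be checked against the current API reference; the guardrail name, topic definition, sample phrase, filter strengths, and blocked message are hypothetical.

```javascript
// Sketch of a guardrail definition in the shape of the Bedrock CreateGuardrail
// request. All names, definitions, strengths, and messages are hypothetical.
const BLOCKED_MESSAGE =
  "Sorry, I can only help with the HERE Maps API for JavaScript.";

const guardrailDefinition = {
  name: "maps-js-coding-assistant", // hypothetical guardrail name
  blockedInputMessaging: BLOCKED_MESSAGE,
  blockedOutputsMessaging: BLOCKED_MESSAGE,
  // Denied topics are described in short natural language.
  topicPolicyConfig: {
    topicsConfig: [
      {
        name: "OtherHereProducts",
        definition:
          "Questions about HERE products other than the Maps API for JavaScript.",
        examples: ["How do I use the HERE SDK for Android?"],
        type: "DENY",
      },
    ],
  },
  // Content filters for the harmful categories named in the text.
  contentPolicyConfig: {
    filtersConfig: [
      ...["HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT"].map((type) => ({
        type,
        inputStrength: "HIGH",
        outputStrength: "HIGH",
      })),
      // Prompt-attack filtering applies to user input only.
      { type: "PROMPT_ATTACK", inputStrength: "HIGH", outputStrength: "NONE" },
    ],
  },
};

console.log(JSON.stringify(guardrailDefinition, null, 2));
```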

Knowledge base module – This module uses Amazon Bedrock Knowledge Bases for document indexing and retrieval operations. Amazon Bedrock Knowledge Bases is a managed service that simplifies the RAG process from end to end. It automatically handles data ingestion, indexing, retrieval, and generation, removing the complexity of building and maintaining custom integrations and managing data flows. The multiple options for document chunking, embedding generation, and retrieval methods offered by Amazon Bedrock Knowledge Bases make it highly adaptable and allowed us to test and identify the optimal configuration. We created two separate indexes, one for each domain document. This dual-index approach makes sure content is retrieved from both documentation sources for response generation. The indexing process uses hierarchical chunking with the Cohere Embed English v3 model on Amazon Bedrock, and documents are retrieved with semantic search.
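The dual-index retrieval can be sketched as follows. The request body mirrors the shape of the Bedrock Agent Runtime `Retrieve` API as we understand it. Modeling the two indexes as two knowledge base IDs, the placeholder IDs, the result counts, and the helper names are assumptions for illustration only, and the retrieval results are stubbed so the example runs without AWS access.

```javascript
// Builds a Retrieve request body for one index. The IDs passed in below are
// placeholders, and numberOfResults is an illustrative value.
function buildRetrieveRequest(knowledgeBaseId, query, numberOfResults) {
  return {
    knowledgeBaseId,
    retrievalQuery: { text: query },
    retrievalConfiguration: {
      vectorSearchConfiguration: { numberOfResults, overrideSearchType: "SEMANTIC" },
    },
  };
}

// Combines results from both indexes and labels each chunk with its source,
// so the generation prompt sees Developer Guide and API Reference content.
function mergeResults(guideResults, referenceResults) {
  const tag = (source) => (r) => ({ source, text: r.content.text, score: r.score });
  return [
    ...guideResults.map(tag("developer-guide")),
    ...referenceResults.map(tag("api-reference")),
  ];
}

const query = "How can I display a circle around a point?";
const guideRequest = buildRetrieveRequest("GUIDE_KB_ID", query, 3);
const referenceRequest = buildRetrieveRequest("REFERENCE_KB_ID", query, 3);

// Stubbed results in the shape of the Retrieve response's retrievalResults.
const merged = mergeResults(
  [{ content: { text: "Conceptual overview of geoshapes." }, score: 0.71 }],
  [{ content: { text: "H.map.Circle constructor parameters." }, score: 0.83 }]
);

console.log(guideRequest.knowledgeBaseId, referenceRequest.knowledgeBaseId, merged.length);
```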

Response generation module – The response generation module processes in-scope and feasible queries using Anthropic’s Claude 3.5 Sonnet model on Amazon Bedrock. It combines user queries with retrieved documents to generate HTML code with embedded JavaScript code, capable of rendering interactive maps. Additionally, the module provides a concise description of the solution’s key points. We selected Anthropic’s Claude 3.5 Sonnet for its superior code generation capabilities.
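As a rough illustration of how the query and retrieved documents might be combined, the sketch below builds a request in the shape of the Amazon Bedrock Converse API. The system prompt, the document wrapping, the inference settings, and the specific model ID version are assumptions; the post does not describe the actual prompt or parameters.

```javascript
// Hypothetical request builder for the response generation step. The prompt
// text, document wrapping, and inference settings are illustrative only.
function buildGenerationRequest(question, documents) {
  const context = documents
    .map((doc, i) => `<document index="${i + 1}">\n${doc}\n</document>`)
    .join("\n");
  return {
    // Assumed model ID for Claude 3.5 Sonnet on Amazon Bedrock.
    modelId: "anthropic.claude-3-5-sonnet-20240620-v1:0",
    system: [
      {
        text:
          "Answer using the HERE Maps API for JavaScript. Return a complete " +
          "HTML page with embedded JavaScript and a short description.",
      },
    ],
    messages: [
      {
        role: "user",
        content: [{ text: `${context}\n\nQuestion: ${question}` }],
      },
    ],
    inferenceConfig: { maxTokens: 4096, temperature: 0 },
  };
}

const generationRequest = buildGenerationRequest(
  "How to open an infobubble when clicking on a marker?",
  ["Excerpt from the Developer Guide.", "Excerpt from the API Reference."]
);

console.log(generationRequest.messages[0].content[0].text);
```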

Solution orchestration

Each module discussed in the previous section was decomposed into smaller sub-tasks. This allowed us to model the functionality and various decision points within the system as a Directed Acyclic Graph (DAG) using LangGraph. A DAG is a graph where nodes (vertices) are connected by directed edges (arrows) that represent relationships, and crucially, there are no cycles (loops) in the graph. A DAG allows the representation of dependencies with a guaranteed order, and it helps enable safe and efficient execution of tasks. LangGraph orchestration has several benefits, such as parallel task execution, code readability, and maintainability through state management and streaming support.
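The ordering guarantee of a DAG can be illustrated with a small, library-independent sketch. It models the workflow nodes described in this post as an adjacency list (the snake_case node names are our own labels), groups them into layers with Kahn's algorithm, and rejects graphs that contain a cycle. Nodes in the same layer have no dependencies on each other and can run in parallel. This is plain JavaScript for illustration, not LangGraph code.

```javascript
// Workflow dependencies as an adjacency list: node -> nodes that may follow it.
const workflow = {
  reformulate_question: ["apply_guardrail", "retrieve_documents", "review_question"],
  apply_guardrail: ["review_documents", "block_response"],
  retrieve_documents: ["review_documents"],
  review_question: ["review_documents", "block_response"],
  review_documents: ["generate_response", "block_response"],
  generate_response: ["update_chat_history"],
  block_response: ["update_chat_history"],
  update_chat_history: [],
};

// Kahn's algorithm, grouped into layers. Every target must also be a key.
function topologicalLayers(graph) {
  const indegree = {};
  for (const node of Object.keys(graph)) indegree[node] = 0;
  for (const targets of Object.values(graph)) {
    for (const target of targets) indegree[target] += 1;
  }
  const layers = [];
  let ready = Object.keys(graph).filter((node) => indegree[node] === 0);
  let visited = 0;
  while (ready.length > 0) {
    layers.push(ready);
    visited += ready.length;
    const next = [];
    for (const node of ready) {
      for (const target of graph[node]) {
        indegree[target] -= 1;
        if (indegree[target] === 0) next.push(target);
      }
    }
    ready = next;
  }
  // Nodes left unvisited can only be explained by a cycle.
  if (visited !== Object.keys(graph).length) {
    throw new Error("Graph contains a cycle");
  }
  return layers;
}

const layers = topologicalLayers(workflow);
console.log(layers);
```

The second layer contains the guardrail, retrieval, and question review nodes together, which reflects the parallel execution the orchestration relies on.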

The following diagram illustrates the coding assistant workflow.

When a user submits a question, a workflow is invoked, starting at the Reformulate Question node. This node handles the implementation of the follow-up question module (Module 1). The Apply Guardrail, Retrieve Documents, and Review Question nodes run in parallel, using the reformulated input question. The Apply Guardrail node uses denied topics from Amazon Bedrock Guardrails to enforce boundaries and apply safeguards against harmful inputs, and the Review Question node filters out-of-scope inquiries using Anthropic’s Claude 3 Haiku (Module 2). The Retrieve Documents node retrieves relevant documents from the Amazon Bedrock knowledge base to provide the language model with necessary information (Module 3).

The outputs of the Apply Guardrail and Review Question nodes determine the next node invocation. If the input passes both checks, the Review Documents node assesses the question’s feasibility by analyzing if it can be answered with the retrieved documents (Module 2). If feasible, the Generate Response node answers the question and the code and description are streamed to the UI, allowing the user to start getting feedback from the system within seconds (Module 4). Otherwise, the Block Response node returns a predefined answer. Finally, the Update Chat History node persistently maintains the conversation history for future reference (Module 1).
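The branching described above can be sketched as two small routing functions, similar in spirit to conditional edges in a graph orchestrator. The state field names (`guardrailPassed`, `inScope`, `feasible`) and node labels are hypothetical and chosen only for this example.

```javascript
// Decides which node follows the parallel Apply Guardrail and Review Question
// checks. Both must pass for the workflow to continue.
function routeAfterChecks(state) {
  return state.guardrailPassed && state.inScope
    ? "review_documents"
    : "block_response";
}

// Decides which node follows the feasibility review of retrieved documents.
function routeAfterDocumentReview(state) {
  return state.feasible ? "generate_response" : "block_response";
}

// Walks one question through the routing decisions and returns the path taken.
function tracePath(state) {
  const path = [routeAfterChecks(state)];
  if (path[0] === "review_documents") {
    path.push(routeAfterDocumentReview(state));
  }
  // Chat history is updated on every path, blocked or answered.
  path.push("update_chat_history");
  return path;
}

const answered = tracePath({ guardrailPassed: true, inScope: true, feasible: true });
const outOfScope = tracePath({ guardrailPassed: true, inScope: false, feasible: true });
const infeasible = tracePath({ guardrailPassed: true, inScope: true, feasible: false });

console.log(answered.join(" -> "));
console.log(outOfScope.join(" -> "));
console.log(infeasible.join(" -> "));
```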

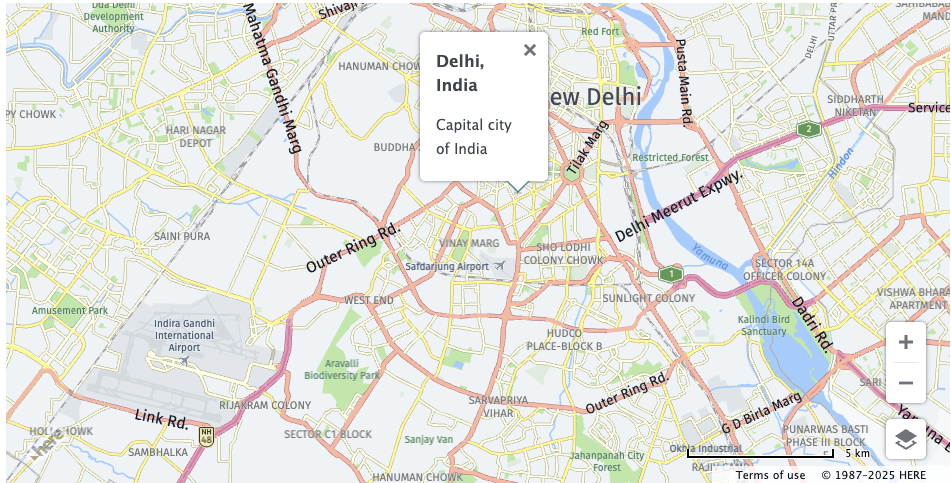

This pipeline backs the code assistant chatbot capability, providing an efficient and user-friendly experience for developers seeking guidance on implementing the HERE Maps API for JavaScript. The following code and screenshot show an example of the model-generated code and the rendered map for the query “How to open an infobubble when clicking on a marker?”

```javascript
  // ...
  // Add a click event listener to the marker
  marker.addEventListener('tap', function(evt) {
    // Create an info bubble object
    var bubble = new H.ui.InfoBubble(evt.target.getGeometry(), {
      content: bubbleContent
    });
    // Add info bubble to the UI
    ui.addBubble(bubble);
  });
}

/**
 * Boilerplate map initialization code starts below:
 */

//Step 1: initialize communication with the platform
// In your own code, replace variable window.apikey with your own apikey
var platform = new H.service.Platform({
  apikey: 'Your_API_Key'
});
var defaultLayers = platform.createDefaultLayers();

//Step 2: initialize a map
var map = new H.Map(document.getElementById('map'),
  defaultLayers.vector.normal.map, {
    center: {lat:28.6071, lng:77.2127},
    zoom: 13,
    pixelRatio: window.devicePixelRatio || 1
  });
// add a resize listener to make sure that the map occupies the whole container
window.addEventListener('resize', () => map.getViewPort().resize());

//Step 3: make the map interactive
// MapEvents enables the event system
// Behavior implements default interactions for pan/zoom (also on mobile touch environments)
var behavior = new H.mapevents.Behavior(new H.mapevents.MapEvents(map));

//Step 4: Create the default UI components
var ui = H.ui.UI.createDefault(map, defaultLayers);

// Step 5: main logic
addMarkerWithInfoBubble(map, ui);
```