1. Alibaba has released its largest model to date, with more than 1 trillion parameters. Its programming capabilities surpass Claude’s in the official benchmarks, suggesting that the Scaling Law is still effective.
2. Alibaba’s “Model + Cloud” strategy has created the shortest path from technology research and development to commercialization, which is one of the keys to Qwen’s success as a latecomer.
3. The core challenge of Alibaba’s open-source model lies in balancing openness and profit. Qwen will need to keep breaking through technically while also proving itself in terms of business model and organizational capability.

Yesterday, Anthropic made a drastic move, and Alibaba answered late at night with a major one of its own: launching its largest model ever, Qwen3-Max-Preview, with over 1 trillion parameters!

In the words of the headline on the official “Tongyi Big Model” WeChat public account, the model is “more than just a little bit stronger.”

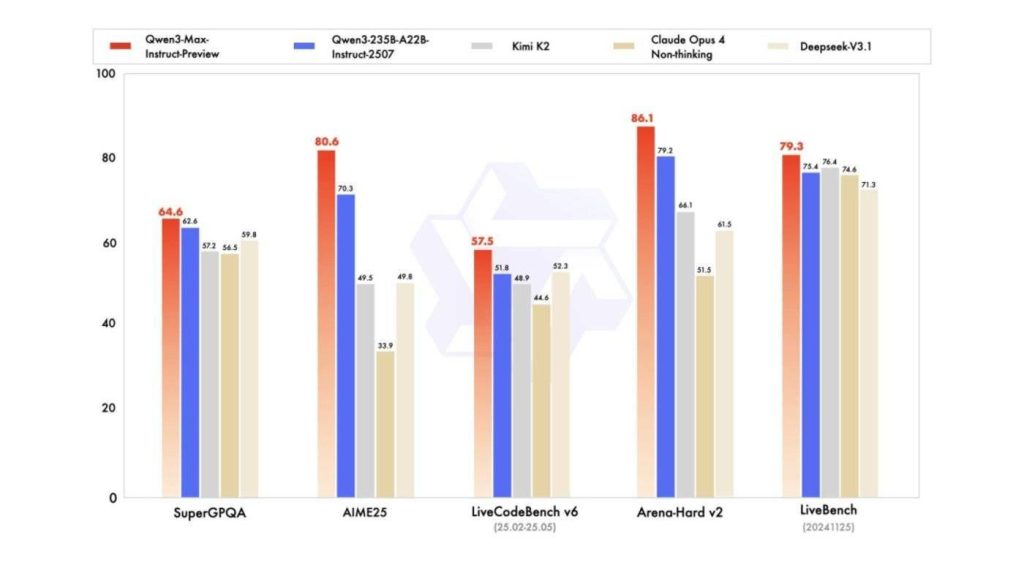

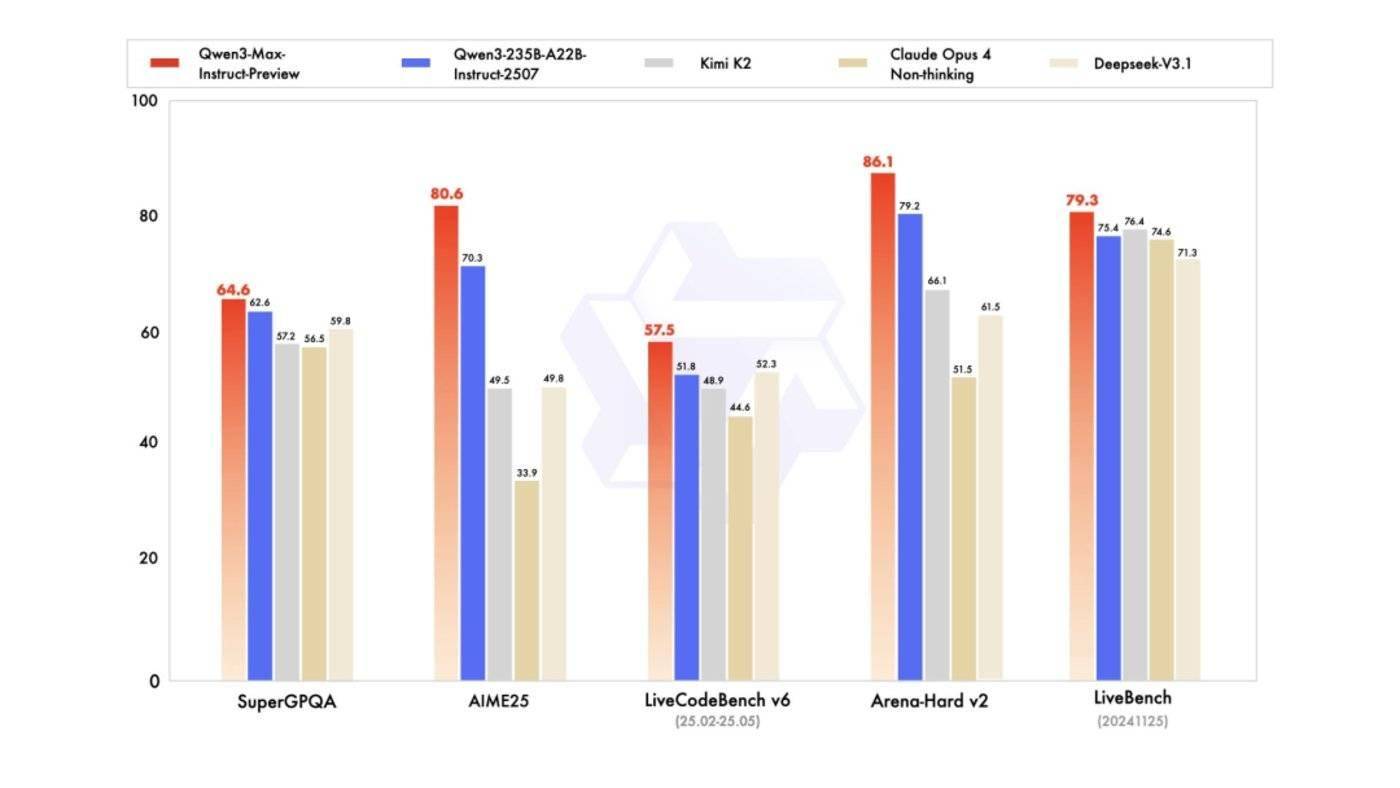

The benchmark results show that Qwen3-Max-Preview outperforms its predecessor, Qwen3-235B-A22B-2507.

Additionally, official comparisons have been released against Kimi K2, Claude Opus 4 (Non-thinking), and DeepSeek-V3.1.

As can be seen from the table below, Qwen3-Max-Preview has surpassed other competitors in benchmarks such as SuperGPQA, AIME2025, LiveCodeBench V6, Arena-Hard V2, and LiveBench.

Most striking is programming, where Claude was previously considered the strongest in the industry: Qwen3-Max-Preview has leapfrogged it, astonishing many users online.

Qwen’s tweet on X seems to reveal the “secret”: Scaling works.

01 Testing Alibaba’s Largest Model

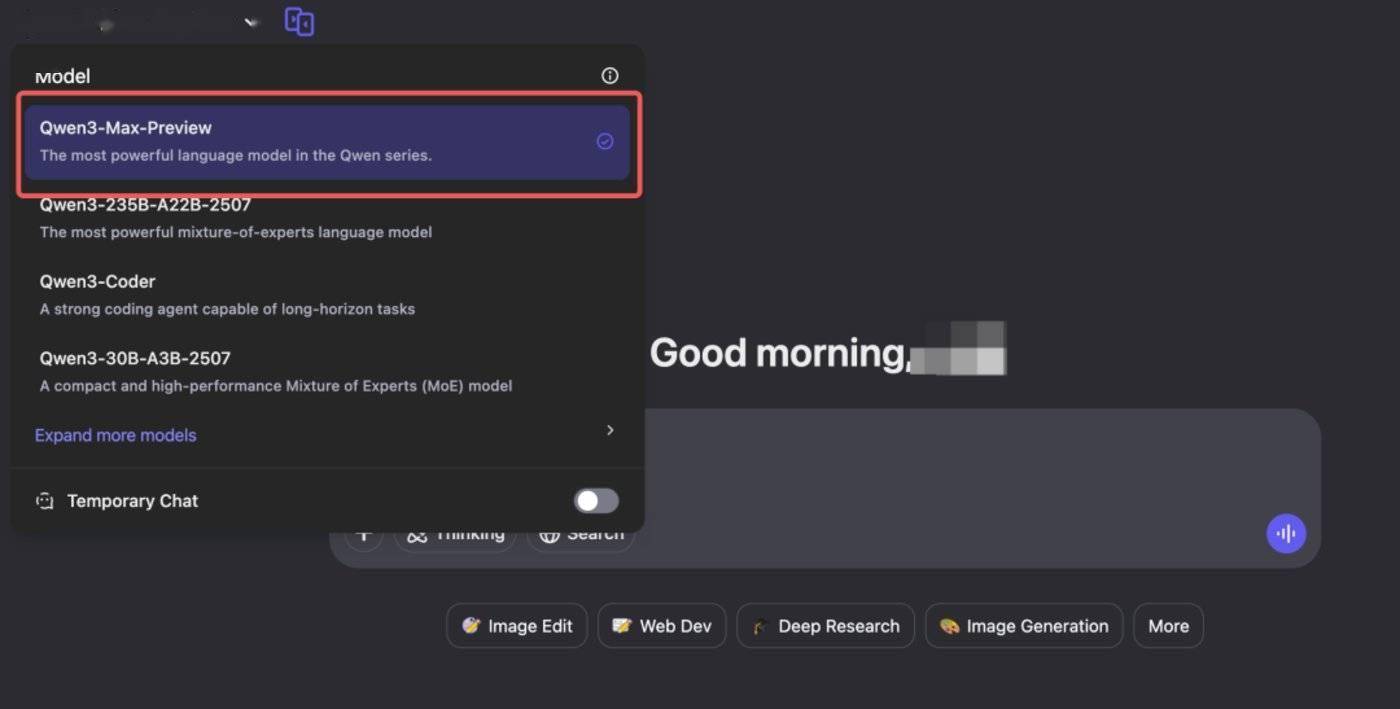

Qwen3-Max-Preview is now available to try and can be selected from the model dropdown:

Try it here: https://chat.qwen.ai

The official API service is also open: https://bailian.console.aliyun.com/?tab=model#/model-market (search for Qwen3-Max-Preview).
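For readers who want to script against the API rather than use the chat page, the following is a minimal sketch of how a request might be assembled. The article does not document the API, so everything specific here is an assumption: the base URL, the model id `qwen3-max-preview`, and the OpenAI-style chat-completions request shape should all be checked against the console linked above. The helper `build_chat_request` is hypothetical and only builds the request; it does not send it.

```python
import json
import urllib.request

# Assumptions, not taken from the article: an OpenAI-compatible endpoint
# and this model id. Verify both in the Alibaba Cloud console before use.
BASE_URL = "https://dashscope.aliyuncs.com/compatible-mode/v1"
MODEL_ID = "qwen3-max-preview"


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL_ID):
    """Build (but do not send) a chat-completions style HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # "YOUR_API_KEY" is a placeholder; no network call is made here.
    req = build_chat_request(
        "Create a beautiful celebratory landing page for the launch of Qwen3 Max.",
        api_key="YOUR_API_KEY",
    )
    print(req.get_method(), req.full_url)
```

If the endpoint does follow the assumed format, the request could be sent with `urllib.request.urlopen(req)` and the JSON response parsed with `json.load`.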

As for real-world performance, many users in China and abroad have begun testing. For example, AK, a well-known blogger on X, integrated Qwen3-Max-Preview into a project called AnyCoder on HuggingFace and entered the following prompt:

Design and create a very creative, elaborate, and detailed voxel art scene of a pagoda in a beautiful garden with trees, including some cherry blossoms. Make the scene impressive and varied and use colorful voxels. Use whatever libraries to get this done.

According to AK’s description, Qwen3-Max-Preview achieved the following results in one go:

When we input this prompt on the official website:

Create a beautiful celebratory landing page for the launch of Qwen3 Max.

Within a few seconds, Qwen3-Max-Preview generated a complete celebratory page:

Next, we increased the difficulty by presenting a classic programming problem—simulating a bouncing ball collision.

First, a small test:

Write a java code that shows a ball bouncing inside a spinning hexagon. The ball should be affected by gravity and friction, and it must bounce off the rotating walls realistically, implement it in java and html.

As can be seen, the ball moves within the hexagon based on physical laws; when we apply a force with the “up arrow key,” it responds immediately.

When we set the number of balls to 10, the result, again generated in a single attempt, looks quite natural:
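To make concrete what this prompt asks for, here is a minimal Python sketch of one physics step for a single ball inside a rotating regular hexagon. It is not the code Qwen3-Max-Preview produced (the article does not reproduce it), and every name and constant (`step`, `R`, `r`, `omega`, `drag`, `restitution`) is a hypothetical choice for illustration. “Friction” is approximated as simple linear drag, and the bounce is computed in the frame of the moving wall.

```python
import math


def step(pos, vel, theta, dt, *, R=1.0, r=0.05, omega=1.0,
         g=-9.8, drag=0.1, restitution=0.9):
    """Advance one ball by dt inside a hexagon spinning at omega rad/s.

    pos, vel: (x, y) tuples; theta: current hexagon rotation angle.
    R is the hexagon circumradius and r the ball radius (illustrative values).
    """
    x, y = pos
    vx, vy = vel

    # Gravity, then linear drag as a stand-in for friction.
    vy += g * dt
    vx *= 1.0 - drag * dt
    vy *= 1.0 - drag * dt
    x += vx * dt
    y += vy * dt
    theta += omega * dt

    apothem = R * math.cos(math.pi / 6)  # centre-to-wall distance
    for i in range(6):
        # Outward unit normal of wall i (points from centre to wall midpoint).
        phi = theta + (i + 0.5) * math.pi / 3
        ox, oy = math.cos(phi), math.sin(phi)
        gap = apothem - (x * ox + y * oy)  # ball centre to wall distance
        if gap < r:
            # Velocity of the rotating wall at the ball: omega x position.
            wx, wy = -omega * y, omega * x
            rvx, rvy = vx - wx, vy - wy
            vn = rvx * ox + rvy * oy  # outward part of relative velocity
            if vn > 0:
                # Reflect the outward component, losing some energy.
                rvx -= (1 + restitution) * vn * ox
                rvy -= (1 + restitution) * vn * oy
                vx, vy = rvx + wx, rvy + wy
            # Push the ball back so it no longer overlaps the wall.
            x -= (r - gap) * ox
            y -= (r - gap) * oy
    return (x, y), (vx, vy), theta


if __name__ == "__main__":
    pos, vel, theta = (0.0, 0.0), (1.0, 0.0), 0.0
    for _ in range(5000):
        pos, vel, theta = step(pos, vel, theta, 0.002)
    print(pos, vel, theta)
```

Extending this to 10 balls would mean calling `step` once per ball with a shared `theta` and adding ball-to-ball collision handling, which this sketch omits.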

Finally, we asked Qwen3-Max-Preview to generate a mini-game:

Create a mini-game of “Angry Birds.”

Although the game was generated successfully in one go, it has some minor flaws, perhaps because the prompt was so simple; for example, the monsters’ positions are not very accurate. Interested readers can try it a few more times.

02 How Did Qwen Surpass Its Competitors?

Alibaba has jumped directly from a model with a few hundred billion parameters to a trillion-scale one (a more than fourfold increase over the 235-billion-parameter Qwen3-235B-A22B-2507) and has taken first place in numerous evaluations, which makes it clear that Qwen has firmly established itself in the global top tier.

To be fair, looking at the AI large-model race as a whole, Alibaba was not the first company in China to launch a product that competes with ChatGPT, but it is clearly one of the latecomers that has overtaken the early movers.

Compared to early movers in China (such as Baidu), Alibaba’s large model was relatively low-key at the start, but its path has been exceptionally clear: build an ecosystem by open-sourcing models while exploring the technological frontier through in-house research and closed-source models.

On the open-source side, Qwen has rapidly released multiple model versions to developers worldwide since 2023. From the 7-billion-parameter Qwen-7B to 14-billion and 72-billion-parameter models, and then to multimodal models for vision and audio, it has covered almost all mainstream sizes and application scenarios. More importantly, Alibaba not only open-sourced the model weights but also permitted commercial use, greatly stimulating the enthusiasm of small and medium-sized enterprises and individual developers.

These moves quickly gave Qwen wide influence in top global open-source communities such as Hugging Face, attracting a large number of developers to build on the Qwen ecosystem and creating strong community momentum. This strategy of steady accumulation has earned Tongyi Qwen valuable developer mindshare and application-scenario data, an advantage that closed-source models find hard to match.

Beyond open source, Alibaba has also never stopped exploring the limits of model capability internally. As Qwen put it when announcing the trillion-parameter model: Scaling works. This reflects a belief in the Scaling Law: as model parameters, data volume, and computing power scale up by orders of magnitude, model capabilities keep improving and can make qualitative leaps.
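As background, scaling laws are usually written as power laws. One commonly cited form from the research literature (not from Qwen’s announcement, and not necessarily the relationship Alibaba relies on) models the pre-training loss as a function of parameter count and training data:

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
```

Here $N$ is the number of parameters, $D$ the number of training tokens, $E$ an irreducible loss floor, and $A$, $B$, $\alpha$, $\beta$ positive constants fitted to experiments. Under this form, increasing $N$ or $D$ always lowers the predicted loss, but with diminishing returns, which is why each further gain tends to require a large multiple of parameters, data, and compute.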

Training a trillion-parameter model like Qwen3-Max-Preview takes more than piling up resources; it demands excellence in every detail: the stability of ultra-large-scale computing clusters, the efficiency of distributed training algorithms, the accuracy of data processing, and engineering optimization.

Behind this lie Alibaba’s massive investment in computing infrastructure over the years and its deep accumulation in AI engineering. It is this kind of all-in, saturation-level investment that has allowed Qwen to surpass top models like Claude Opus in core capabilities such as programming and reasoning.

In addition to open-source models and capability exploration, Alibaba Cloud is also a key factor in Qwen’s success as a latecomer.

After all, training and serving large models devours computing power. Alibaba Cloud provides stable and efficient computing infrastructure for Qwen’s development and integrates end-to-end tooling, from data labeling and model development to distributed training and inference deployment. This greatly reduces the engineering burden on the research and development teams and lets them focus on algorithm and model innovation.

Moreover, when it comes to applying and popularizing the model, Alibaba Cloud’s MaaS (Model as a Service) strategy is what lets Qwen reach various industries quickly. For example, enterprise customers can call the Qwen API directly on Alibaba Cloud without training a model from scratch, or use the platform’s tools to fine-tune the open-source Qwen models and quickly build AI applications.

This “Model + Cloud” strategy has created the shortest path from technology research and development to commercialization.

03 But It Is Not Perfect

Although Alibaba’s strategic choices in large-model development have given it a latecomer’s advantage, that does not mean Qwen is free of risk.

Alibaba’s choice to attract users with open-source models and monetize through cloud services is a path full of both opportunities and challenges. It resembles Meta’s approach with the Llama series: quickly capture market share and developer mindshare through an open ecosystem, then ultimately direct the commercial value to its own infrastructure.

This contrasts sharply with the elite, closed-source-plus-API route of companies like OpenAI and Anthropic, which can better protect core technologies, maintain a technological gap, and earn high margins directly through high-value API services.

While Alibaba’s open-source strategy can popularize the technology rapidly, it also means that its most advanced models may struggle to open up a decisive gap over competitors. The business model is also more roundabout, since customers must first recognize the value of its cloud platform.

The core challenge of the open-source model lies in how to balance openness and profit; when enterprises can access and privately deploy sufficiently good open-source models for free, how strong will their willingness to pay for official cloud services be?

In other words, Alibaba Cloud must not only provide simple model hosting but also deliver performance optimization, security guarantees, and a powerful toolchain and enterprise-level services that far exceed open-source versions to build a sufficiently deep moat. How to effectively convert a large open-source user base into high-value paying cloud customers is the most critical business leap on this path.

In addition to commercialization challenges, as the competition for top AI talent heats up, any loss of core talent can have far-reaching effects on the team.

In recent years, several key technical talents, including Jia Yangqing in the AI framework and infrastructure field, have left Alibaba to join the entrepreneurial wave or other giants. Although for a company of Alibaba’s size, the departure of individual personnel may not shake its foundation, the negative impact still exists.

After all, the departure of core leaders can affect team morale, convey negative signals externally, and increase the difficulty of attracting top talent in the future; changes in leadership in key technical directions may also introduce uncertainty to the long-term strategic continuity of projects.

Just like the ongoing talent war at Meta in Silicon Valley, those who leave often become new competitors, fully aware of the strengths and weaknesses of the original system and potentially posing more precise threats in niche areas.

Thus, how Alibaba can maintain its appeal to top AI talent globally and build a stable and sustainable talent pipeline under intense competition is a serious issue it must face in its future development.

Conclusion

Overall, Alibaba’s Tongyi Qwen is undoubtedly a top force in large models, both in China and globally. It has secured a leading position in fierce competition through its clear strategy of “parallel open-source and self-research,” relying on Alibaba Cloud’s strong ecosystem and deep technical talent accumulation. The release of the trillion-parameter model further demonstrates its determination and strength in pursuing the Scaling Law.

However, the path to success also comes with clear challenges. The business model of exchanging open-source for an ecosystem still needs to be tested by the market for its sustainability; the pursuit of technological gaps with closed-source giants like OpenAI will be a long-term process; and retaining and attracting top talent is the lifeline for maintaining innovative vitality.

In the future, Qwen will not only need to continue to break through technically but also prove its unique value in terms of business models and organizational capabilities. Whether it can transform today’s technological advantages into tomorrow’s unshakeable market dominance will be the focus of the entire industry, including the capital market. This is also key to whether Alibaba’s market value can rise further.

1. https://x.com/Alibaba_Qwen/status/1963991502440562976

2. https://chat.qwen.ai/

3. https://x.com/_akhaliq/status/1964001592710975971