On the evening of June 22, 2010, American tennis star John Isner began a grueling Wimbledon match against Frenchman Nicolas Mahut that would become the longest in the sport’s history. The marathon battle lasted more than 11 hours and stretched across three consecutive days. Though Isner ultimately prevailed 70–68 in the fifth set, some in attendance half-jokingly wondered at the time whether the two men might be trapped on that court for eternity.

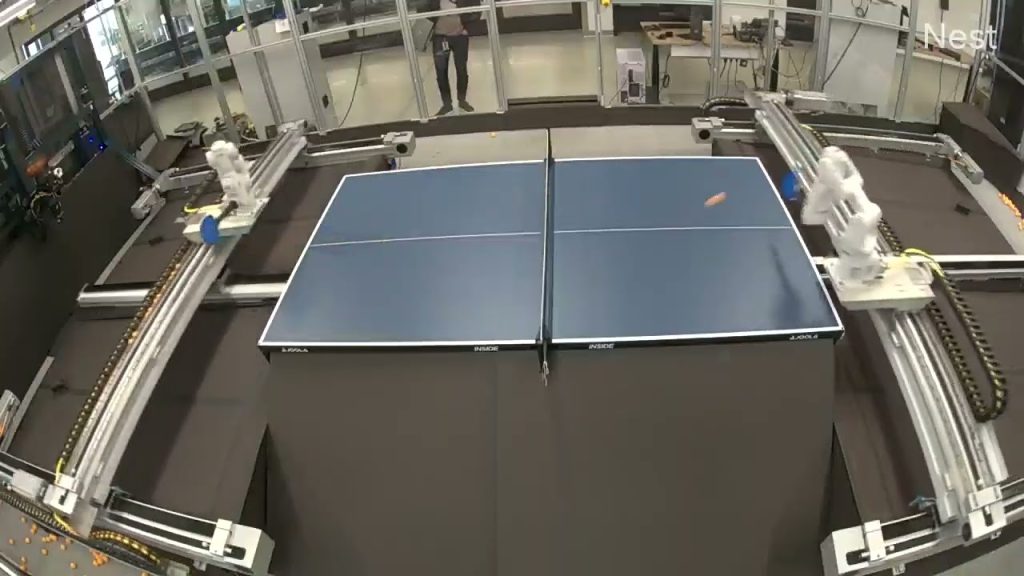

A similarly endless-seeming skirmish of rackets is currently unfolding just an hour’s drive south of the All England Club—at Google DeepMind. Known for pioneering AI models that have outperformed the best human players at chess and Go, DeepMind now has a pair of robotic arms engaged in a kind of infinite game of table tennis. The goal of this ongoing research project, which began in 2022, is for the two robots to continuously learn from each other through competition. Just as Isner eventually adapted his game to beat Mahut, each robotic arm uses AI models to shift strategies and improve.

But unlike the Wimbledon example, there’s no final score the robots can reach to end their slugfest. Instead, they continue to compete indefinitely, with the aim of improving at every swing along the way. And while the robotic arms are easily beaten by advanced human players, they’ve been shown to dominate beginners. Against intermediate players, the robots have roughly 50/50 odds—placing them, according to researchers, at a level of “solidly amateur human performance.”

All of this, as two of the researchers involved noted this week in a blog post for IEEE Spectrum, is being done in hopes of creating an advanced, general-purpose AI model that could serve as the “brains” of humanoid robots that may one day interact with people in real-world factories, homes, and beyond. Researchers at DeepMind and elsewhere hope that this learning method, if scaled up, could spark a “ChatGPT moment” for robotics, fast-tracking the field from stumbling, awkward hunks of metal to truly useful assistants.

“We are optimistic that continued research in this direction will lead to more capable, adaptable machines that can learn the diverse skills needed to operate effectively and safely in our unstructured world,” DeepMind senior staff engineer Pannag Sanketi and Arizona State University Professor Heni Ben Amor write in IEEE Spectrum.


How DeepMind trained a table tennis robot

The initial inspiration for the racket-swinging robots came from a desire to find better, more scalable ways to train robots to complete many different kinds of tasks. Though beefy humanoids like Boston Dynamics’ Atlas have performed terrifyingly impressive feats of acrobatics for the better part of a decade, many of those feats were scripted, the result of meticulous coding and fine-tuning by human engineers. That approach works for a tech demo or a limited, single-purpose use case, but it falls short when designing a robot meant to work alongside people in dynamic environments like warehouses. In those settings, it’s not enough for a robot to simply know how to load a box onto a crate; it also needs to adapt to the people around it and to an environment that constantly introduces new and unpredictable variables.

Table tennis, it turns out, is a pretty effective way to test how well a robot copes with that unpredictability. The sport has been used as a benchmark for robotics research since the 1980s because it demands speed, responsiveness, and strategy all at once. To succeed, a player must master a range of skills. They need the fine motor control and perception to track the ball and intercept it, even when it arrives at varying speeds and with varying spin. At the same time, they have to make strategic decisions about how to outplay their opponent and when to take calculated risks. The DeepMind researchers describe the game as a “constrained, yet highly dynamic, environment.”

DeepMind began the project by using reinforcement learning, a method in which an AI system learns by being rewarded for desirable actions, to teach robotic arms the basics of the sport. At first, the two arms were trained simply to engage in cooperative rallies, so neither had a reason to try to win points. Eventually, with some fine-tuning by engineers, the team developed two robotic agents capable of autonomously sustaining long rallies.

Learning from humans en route to infinite play

From there, the researchers adjusted the parameters and instructed the arms to try to win points. The process, they wrote, quickly overwhelmed the still-inexperienced robots. The arms would take in new information during a point and learn fresh tactics, only to forget some of the moves they had previously learned. The result was a steady stream of short rallies, often ending with one robot slamming an unreturnable winner.

Interestingly, the robots improved noticeably when they played points against human opponents. At the start, humans of various skill levels were better at keeping the ball in play. That proved crucial to the robots’ progress, because it exposed them to a wider variety of shots and playing styles to learn from. Over time, both robots became not only more consistent but also better at playing sophisticated points that mixed defense, offense, and greater unpredictability. In total, the robots won 45 percent of the 29 games they played against humans, including 55 percent of their games against intermediate-level players.

Since then, the now-veteran AI robots have squared off against each other once again. Researchers say they are constantly improving. Part of that progress has come through a new kind of AI coaching. DeepMind has been using Google Gemini’s vision-language model to watch videos of the robots playing and generate feedback on how to better win points. Videos of “Coach Gemini” in action show the robotic arm adjusting its play in response to AI-generated commands like “hit the ball as far right as possible” and “hit a shallow ball close to the net.”

Longer rallies could one day lead to helpful robots

The hope at DeepMind and other companies is that agents competing against each other will help improve general-purpose AI software in a way that more closely resembles how humans learn to navigate the world around them. Though AI can easily outperform most humans at tasks like basic coding or chess, even the most advanced AI-enabled robots struggle to walk with the same stability as a toddler. Tasks that are inherently easy for humans—like tying a shoe or typing a letter on a keyboard—remain monumental challenges for robots. This dilemma, known in the robotics community as Moravec’s paradox, remains one of the biggest hurdles to creating a Jetsons-style “Rosie” robot that could actually be helpful around the house.

But there are early signs that those roadblocks may be starting to give way. Last year, DeepMind finally succeeded in teaching a robot to tie a shoe, a feat that was once thought to be years away. (Whether or not it tied the shoe well is another story.) This year, Boston Dynamics released a video showing its new, lighter autonomous Atlas robot adjusting in real time to mistakes it made while loading materials in a mock manufacturing facility.

These might seem like baby steps, and they are, but researchers hope that generalized, multi-purpose AI systems, like the one the table tennis robots are helping to train, could make such advances more frequent. In the meantime, the DeepMind robots will keep swatting away, unaware of their never-ending fifth-set odyssey.
