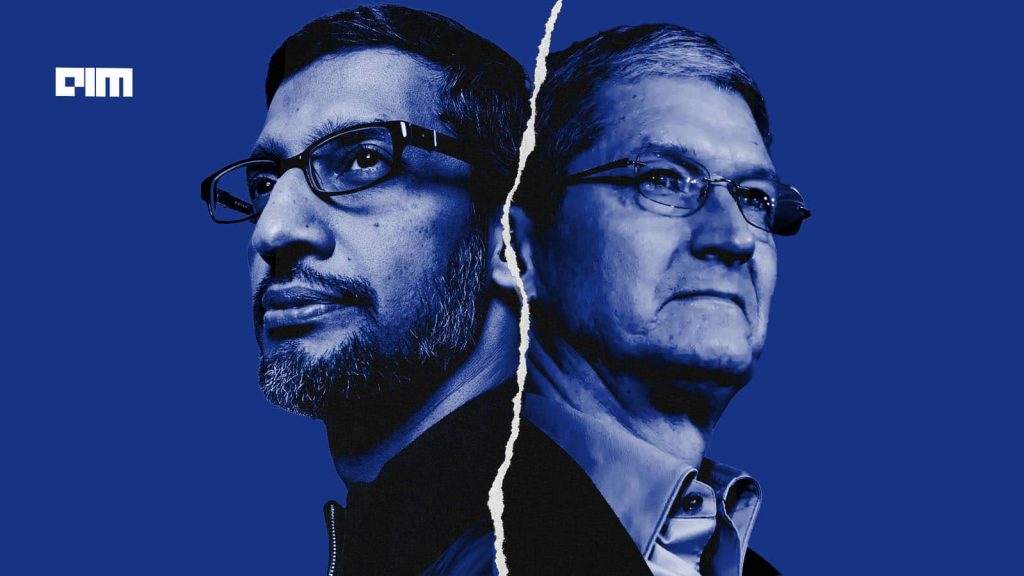

Apple is struggling in the AI battle, and it knows it. But the tech giant isn’t one to give up without a good fight. The Cupertino-based company has introduced a ‘Foundation Models’ framework, which gives developers access to a 3-billion parameter on-device model. The model supports 15 languages, processes both text and images, and is optimised for performance on Apple Silicon.

Apple’s 3B parameter language model can handle a wide variety of text-based tasks, including summarising, extracting key information, understanding and improving text, handling brief conversations, and creating original content.

It now faces direct competition from Google’s Gemma 3n, which runs on phones, tablets, and laptops. The model supports text, image, and audio inputs, includes advanced video processing capabilities, and operates with a dynamic memory footprint of just 2 to 3 GB, enabled by Google DeepMind’s per-layer embeddings innovation.

Its audio features support reliable speech recognition and spoken language translation. The model is also built to interpret combinations of different input types for more complex use cases.

Though the two models share similar goals, Gemma 3n is a better fit for developers and users, thanks to its multimodal capabilities and open-source approach. Google collaborated with Qualcomm Technologies, MediaTek and Samsung’s System LSI business to optimise performance across a wide range of hardware.

Meet Gemma 3n, a model that runs on as little as 2GB of RAM 🤯 It shares the same architecture as Gemini Nano, and is engineered for incredible performance. We added audio understanding, so now it’s multimodal, fast and lean, and runs on-device (no cloud connection required!)

— Google AI (@GoogleAI) May 20, 2025

In contrast, Apple Intelligence takes a hybrid approach, combining on-device processing with cloud-based computation. While Apple’s neural processing units (NPUs) handle fast tasks locally, more complex queries often rely on server-side processing, which requires an internet connection.

“Apple doesn’t report benchmarks for their AIs, reporting on an ill-documented head-to-head evaluation. But even by their standards, Apple’s latest on-device models are mostly worse than the open Gemma 3-4B from Google or Qwen 3-4B,” said Ethan Mollick, a professor at the Wharton School of the University of Pennsylvania, in a post on X.

On the LMArena leaderboard for text-based tasks, Gemma 3n scored 1293 points. For comparison, OpenAI’s o3-mini scored 1329 points and o4-mini scored 1379 points.
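To put those gaps in perspective, here is a minimal sketch that converts the score differences into expected head-to-head preference rates. It assumes the leaderboard scores behave like classic Elo ratings on a 400-point logistic scale; that scale and the helper name `expected_win_rate` are illustrative assumptions, and LMArena’s actual rating methodology may differ.

```python
def expected_win_rate(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Classic Elo expected score of A against B (a tie counts as half a win).

    Assumption: the 400-point logistic scale from chess Elo applies here.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


# Scores quoted in the article.
SCORES = {"Gemma 3n": 1293, "o3-mini": 1329, "o4-mini": 1379}

for rival in ("o3-mini", "o4-mini"):
    p = expected_win_rate(SCORES["Gemma 3n"], SCORES[rival])
    print(f"Gemma 3n vs {rival}: {p:.1%} expected preference rate")
```

Under that assumption, a 36-point deficit still leaves Gemma 3n preferred in a little under half of direct comparisons with o3-mini, while the 86-point gap to o4-mini works out to somewhat under 40%.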

Android vs iOS Ecosystem

Lately, Google has been pushing AI deeper into the Android ecosystem, letting smartphone users run large language models locally without needing an internet connection.

To support this, the company introduced Edge Gallery, a new Android app that allows users to download and use its AI models directly on their devices. The app is available through the Google AI Edge GitHub repository, and an iOS version is expected soon.

“This app is a resource for understanding the practical implementation of the LLM Inference API and the potential of on-device generative AI,” the company said in a blog post.

Apple Intelligence for Android is already here! We published an example app on how to install and run the most capable @GoogleDeepMind Gemma 3n model on Android.

📱 Runs 100% locally & offline, no internet after setup

🤖 Supports Multiple Gemma versions

🖼️ Multimodal, ask…

— Philipp Schmid (@_philschmid) June 10, 2025

Google also has the advantage of Android’s scale. As of 2025, estimates of Android’s global user base range from 3.9 billion to 4.5 billion, or approximately 71 to 72% of smartphone operating systems in use. iOS trails with 1.56 billion to 1.8 billion users and a market share of roughly 28 to 29%.
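As a quick sanity check, the user counts and the market-share percentages can be reconciled with simple arithmetic. The sketch below assumes Android and iOS are the only two platforms in the total and pairs the low estimates together and the high estimates together; both are simplifying assumptions, and the function names are illustrative.

```python
# User-base estimates quoted in the article, in billions: (low, high).
ANDROID_USERS = (3.9, 4.5)
IOS_USERS = (1.56, 1.8)


def share(part: float, other: float) -> float:
    """Percentage share of `part` in a two-platform total (assumption: no other OS)."""
    return 100 * part / (part + other)


def platform_shares(android: float, ios: float) -> tuple[float, float]:
    """Return (Android %, iOS %) for one pair of user-base estimates."""
    return share(android, ios), share(ios, android)


low = platform_shares(ANDROID_USERS[0], IOS_USERS[0])
high = platform_shares(ANDROID_USERS[1], IOS_USERS[1])

for label, (android_pct, ios_pct) in (("low", low), ("high", high)):
    print(f"{label} estimates: Android {android_pct:.1f}%, iOS {ios_pct:.1f}%")
```

Both ends of the range give about 71.4% for Android and 28.6% for iOS, which is consistent with the 71 to 72% and 28 to 29% shares cited.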

However, Apple is stepping up its AI game with features that feel genuinely useful. Live Translation helps you chat across languages in real time, even on calls. Image Playground and Genmoji let you create expressive, custom visuals. Visual Intelligence can understand what’s on your screen and take action. And for fitness buffs, Workout Buddy delivers live motivation based on your workout stats.

In a recent interview, Apple’s marketing chief, Greg Joswiak, said that Apple’s approach to AI differs from that of its competitors. Rather than offering a standalone AI app or chatbot, Apple is integrating generative AI quietly and deeply into the operating system.

“There’s no app called Apple Intelligence. Sometimes, you’re doing things and you don’t even realise you’re using Apple Intelligence or, you know, AI to do them,” said Joswiak.

Apple claims its on-device model outperforms Qwen-2.5-3B across all supported languages, and holds up well against Qwen-3-4B and Gemma-3-4B in English. Meanwhile, its server-based model compares favourably with Llama-4-Scout, though it still lags behind larger models like Qwen-3-235B and GPT-4o.

Yet, Siri received barely a mention during WWDC 2025. Addressing this, Apple’s software chief Craig Federighi explained that the company had a two-phase architecture and working demos in place, but the quality and reliability fell short of Apple’s internal standards, particularly when handling unpredictable and open-ended queries.

Although parts of the system were functional, Apple chose not to release a product that did not meet its bar.

Apple may be playing the long game, but in the fast-moving world of AI, time is a luxury it can’t afford. With Google setting the pace, Apple now faces a future where quiet integration alone may no longer be enough.