Robby Stein, VP of Product at Google, explained that Google Search is converging with AI in a new way built on three pillars. The implications for online publishers, SEOs, and eCommerce stores are profound.

Three Pillars Of AI Search

Stein said that there are three essential components to the “next generation” of Google Search:

AI Overviews

Multimodal search

AI Mode

AI Overviews is natural language search. Multimodal search covers new ways of searching with images, enabled by Google Lens. AI Mode draws on web content and structured knowledge to provide a conversational, turn-based way of discovering information and learning. Stein indicates that all three of these components will converge as the next step in the evolution of search.

Stein explained:

“I can tell you there’s kind of three big components to how we can think about AI search and kind of the next generation of search experiences. One is obviously AI overviews, which are the quick and fast AI you get at the top of the page many people have seen. And that’s obviously been something growing very, very quickly. This is when you ask a natural question, you put it into Google, you get this AI now. It’s really helpful for people.

The second is around multimodal. This is visual search and lens. That’s the other big piece. You go to the camera in the Google app, and that’s seeing a bunch of growth.

And then with AI mode, it brings it all together. It creates an end-to-end frontier search experience on state-of-the-art models to really truly let you ask anything of Google search.”

AI Mode Triggered By Complex Queries

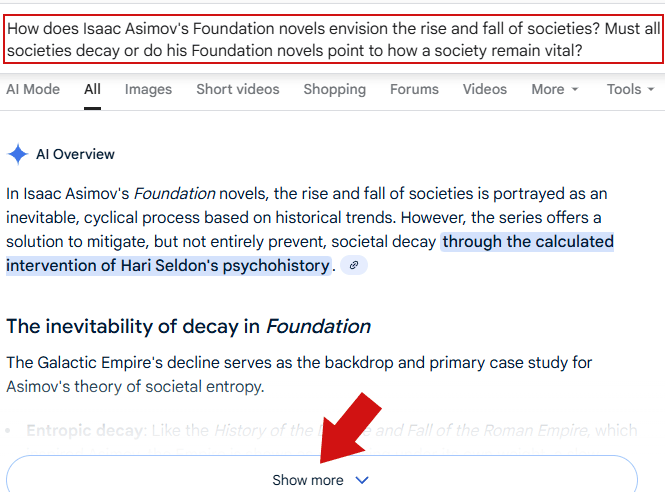

The above screenshot shows a complex, two-sentence query entered into Google’s search box. The query automatically triggers an AI Mode preview with a “Show more” link that leads to an immersive AI Mode conversational search experience. Publishers who wish to be cited need to think about how their content will fit into this kind of context.

Next Generation Of Google: AI Mode Is Like A Brain

Stein described the next frontier of search as something radically different from what we know as Google Search. Many SEOs still think of search as a ranking paradigm of ten blue links. That paradigm has not really existed since Google debuted Featured Snippets in 2014, which means the concept of ten blue links has been out of step with Google’s actual search results for eleven years.

What Stein goes on to describe does away with the concept of ten blue links entirely, replacing it with a brain that users can ask questions of and interact with. SEOs, merchants, and other publishers need to let go of the ten-blue-links mental model and focus on surfacing content within an interactive, natural language environment that sits completely outside the traditional search results page.

Stein explained this new concept of a brain in the context of AI Mode:

“You can go back and forth. You can have a conversation. And it taps into and is specially designed for search. So what does that mean? One of the cool things that I think it does is it’s able to understand all of this incredibly rich information that’s within Google.

So there’s 50 billion products in the Google Shopping Graph, for instance. They’re updated 2 billion times an hour by merchants with live prices.

You have 250 million places and maps.

You have all of the finance information.

And not to mention, you have the entire context of the web and how to connect to it so that you can get context, but then go deeper. And you put all of that into this brain that is effectively this way to talk to Google and get at this knowledge.

That’s really what you can do now. So you can ask anything on your mind and it’ll use all of this information to hopefully give you super high quality and informed information as best as we can.”

Stein’s description shows that Google’s long-term direction is to move beyond retrieval toward an interactive, turn-based mode of information discovery. The “brain” metaphor signals that search will increasingly be less about locating web pages and more about generating informed responses built from Google’s own structured data, knowledge graphs, and web content. This represents a fundamental change, and, as the following sections show, it is already happening.

AI Mode Integrates Everything

Stein describes how Google is increasingly triggering AI Mode as the next evolution of how users find answers to questions and discover information about the world immediately around them. This goes beyond asking “what’s the best kayak” and becomes more of a natural language conversation, an information journey that can encompass images, videos, and text, just like in real life. It’s an integrated experience that goes way beyond a simple search box and ten links.

Stein provided more information about what this will look like:

“And you can use it directly at this google.com/ai, but it’s also been integrated into our core experiences, too. So we announced you can get to it really easily. You can ask follow-up questions of AI overviews right into AI mode now.

Same for the lens stuff, take a picture, takes it to AI mode. So you can ask follow-up questions and go there, too. So it’s increasingly an integrated experience into the core part of the product.”

How AI Will Converge Into One Interface

At this point, the podcast host asked for a clearer explanation of how all of these things will be integrated.

He asked:

“I imagine much of this is… wait and see how people use it. But what’s the vision of how all these things connect?

Is the idea to continue having this AI mode on the side, AI overviews at the top, and then this multimodal experience? Or is there a vision of somehow pushing these together even more over time?”

Stein answered that these modes of information discovery are coming closer together. Google is starting to decide from the query itself whether to show an AI preview that leads into AI Mode or a simpler search result, so users should not have to choose between separate interfaces. Ultimately, there is just one entry point: Google.

Stein explained:

“I think there’s an opportunity for these to come closer together. I think that’s what AI Mode represents, at least for the core AI experiences. But I think of them as very complementary to the core search product.

And so you should be able to not have to think about where you’re asking a question. Ultimately, you just go to Google.

And today, if you put in whatever you want, we’re actually starting to use much of the power behind AI mode, right in AI Overviews. So you can just ask really hard, you could put a five-sentence question right into Google search.

You can try it. And then it should trigger AI at the top, it’s a preview. And then you can go deeper into AI mode and have this back and forth. So that’s how these things connect.

Same for your camera. So if you take a picture of something, like, what’s this plant? Or how do I buy these shoes? It should take you to an AI little preview. And then if you go deeper, again, it’s powered by AI mode. You can have that back and forth.

So you shouldn’t have to think about that. It should feel like a consistent, simple product experience, ultimately. But obviously, this is a new thing for us. And so we wanted to start it in a way that people could use and give us feedback with something like a direct entry point, like google.com/AI.”

Stein’s answer shows that Google is moving from separate AI features toward one unified search system that interprets intent and context automatically.

For users, that means typing, speaking, or taking a picture will all connect to the same underlying process that decides how to respond.

For publishers and SEOs, it means visibility will depend less on optimizing for keywords and more on aligning content with how Google understands and responds to different kinds of questions.

How Content Can Fit Into AI Triggered Search Experiences

Google is transitioning users out of the traditional ten blue links paradigm into a blended AI experience. Users can already enter multi-sentence questions, and Google will automatically respond with an AI preview powered by AI Mode, with the option to continue into a deeper back-and-forth conversation.

Robby Stein indicated that the AI search experience will converge even more, depending on user feedback and how people interact with it.

These are profound changes that should prompt publishers to ask hard questions about their content:

How might curating unique images, useful video content, and step-by-step tutorials fit into your content strategy?

Information discovery is increasingly conversational. Does your content fit into that context?

Information discovery may increasingly include camera snapshots. Will your content fit into that kind of search?

These are examples of the kinds of questions publishers, SEOs and store owners should be thinking about.

Watch the podcast interview with Robby Stein

Inside Google’s AI turnaround: AI Mode, AI Overviews, and vision for AI-powered search | Robby Stein

Featured image/Screenshot of Lenny’s Podcast video