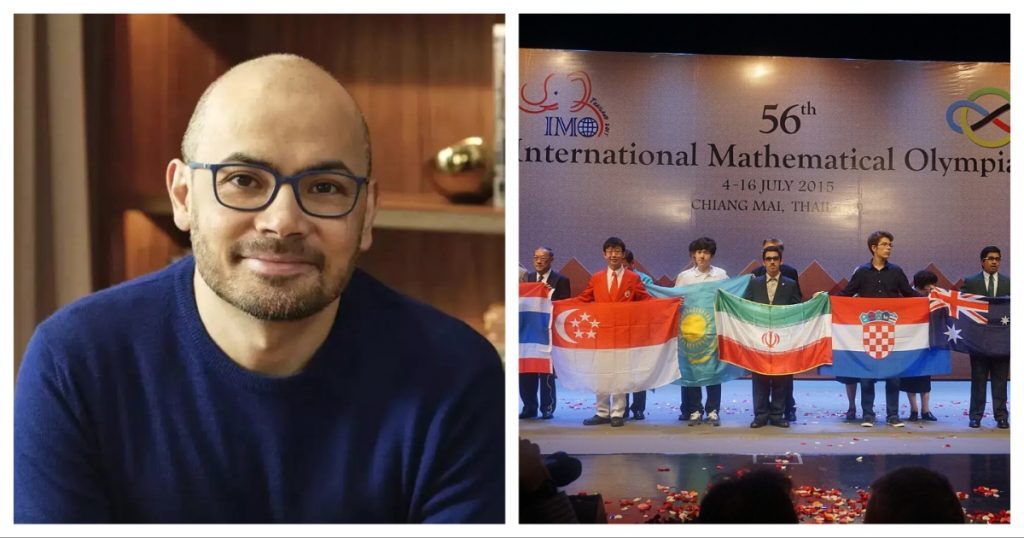

OpenAI claimed two days ago that, based on internal evaluations, one of its undisclosed models had delivered a gold-medal-winning performance at the International Mathematical Olympiad. Google has now gone one better: its model has achieved a gold-medal score that the organizers have officially ratified.

An advanced version of Gemini Deep Think has achieved gold-medal level at the International Mathematical Olympiad. “Very excited to share that an advanced version of Gemini Deep Think is the first to have achieved gold-medal level in the International Mathematical Olympiad!” wrote Google DeepMind researcher Thang Luong on X. “It solved five out of six problems perfectly, as verified by the IMO organizers! It’s been a wild run to lead this effort and I am grateful to everyone in the team for such an amazing achievement! Blog post in the thread and more to share soon!” he added.

“We can confirm that Google DeepMind has reached the much-desired milestone, earning 35 out of a possible 42 points, a gold medal score,” said International Mathematical Olympiad President Prof. Dr. Gregor Dolinar. “Their solutions were astonishing in many respects. IMO graders found them to be clear, precise and most of them easy to follow,” he added. Each of the six IMO problems is worth seven points, so the five perfect solutions account for the full 35-point total.
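The arithmetic behind the reported score can be checked with a short sketch. It assumes the standard IMO marking scheme of six problems worth seven points each, which the article itself does not spell out. The per-problem breakdown below is hypothetical: the article says only that five problems were solved perfectly, not which ones.

```python
# Assumption: standard IMO marking (6 problems, 7 points each); not stated in the article.
POINTS_PER_PROBLEM = 7
NUM_PROBLEMS = 6
MAX_SCORE = POINTS_PER_PROBLEM * NUM_PROBLEMS


def total_score(scores):
    """Sum per-problem scores after checking they fit the assumed format."""
    if len(scores) != NUM_PROBLEMS:
        raise ValueError(f"expected {NUM_PROBLEMS} scores, got {len(scores)}")
    for s in scores:
        if not 0 <= s <= POINTS_PER_PROBLEM:
            raise ValueError(f"score {s} is outside 0..{POINTS_PER_PROBLEM}")
    return sum(scores)


# Hypothetical breakdown: five perfect solutions and one problem with no credit.
# The position of the unsolved problem is a placeholder, not taken from the article.
reported = [7, 7, 7, 7, 7, 0]

print(f"{total_score(reported)} out of a possible {MAX_SCORE} points")
```

Under these assumptions, five full-mark solutions are the only way to reach the reported total without partial credit on the sixth problem.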

The International Mathematical Olympiad (IMO) is a mathematics competition for pre-university students, and is widely regarded as the most prestigious mathematical competition in the world. It has been held since 1959, and now sees participation from more than 100 countries.

“This year, our advanced Gemini model operated end-to-end in natural language, producing rigorous mathematical proofs directly from the official problem descriptions – all within the 4.5-hour competition time limit,” wrote Google DeepMind in its blog.

“We achieved this year’s result using an advanced version of Gemini Deep Think – an enhanced reasoning mode for complex problems that incorporates some of our latest research techniques, including parallel thinking. This setup enables the model to simultaneously explore and combine multiple possible solutions before giving a final answer, rather than pursuing a single, linear chain of thought,” DeepMind said.

“To make the most of the reasoning capabilities of Deep Think, we additionally trained this version of Gemini on novel reinforcement learning techniques that can leverage more multi-step reasoning, problem-solving and theorem-proving data. We also provided Gemini with access to a curated corpus of high-quality solutions to mathematics problems, and added some general hints and tips on how to approach IMO problems to its instructions,” it added.

Google said that the model will eventually be rolled out to Ultra subscribers. “We will be making a version of this Deep Think model available to a set of trusted testers, including mathematicians, before rolling it out to Google AI Ultra subscribers,” it said.

Just two days ago, OpenAI claimed that one of its models had secured a gold-medal-winning performance at the International Mathematical Olympiad. But while OpenAI’s result created a lot of buzz, OpenAI had only tested the model internally on its own systems, and had graded the model’s solutions internally as well. Google DeepMind, on the other hand, submitted an official entry and has had its results ratified by the IMO.

It now appears that OpenAI’s announcement may have been timed to beat Google to the punch. Google DeepMind researcher Thang Luong appeared to say as much yesterday, after OpenAI announced its result. “There is an official marking guideline from the IMO organizers which is not available externally. Without the evaluation based on that guideline, no medal claim can be made,” he posted on X. With Google DeepMind receiving official ratification just a day later, it is Google DeepMind that looks set to enter the record books as the first AI company to achieve a gold-medal-level performance at the International Mathematical Olympiad.