MANCHESTER, England (CN) — A landmark legal battle between Getty Images and Stability AI marks the U.K.’s first major copyright case to challenge how artificial intelligence systems are trained, setting the stage for a ruling that could reshape tech industry norms.

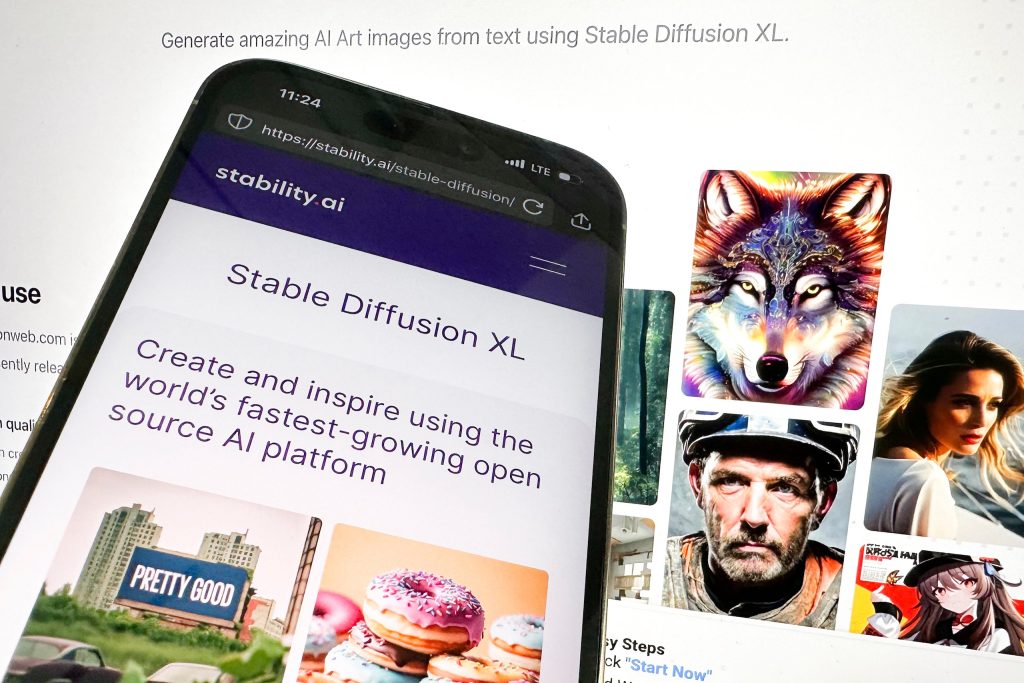

Getty, the global stock image agency, charges that Stability used millions of its copyrighted photos without permission to develop Stable Diffusion, its generative AI model capable of producing photorealistic images from text and image prompts.

Getty’s legal team told London’s High Court that the case isn’t a battle between the creative and technology industries; the two can work together in “synergistic harmony” because licensing creative works is critical to the success of AI.

“The problem is when AI companies such as Stability AI want to use those works without payment,” Getty’s trial lawyer, Lindsay Lane, said June 9 as the trial opened.

Stability, headquartered in London, denies the claims, arguing that “only a tiny proportion” of its AI system’s outputs “look at all similar” to Getty images.

Hugo Cuddigan, Stability’s lawyer, said in court filings that the lawsuit represented “an overt threat” not only to the company but to “the wider generative AI industry.”

Stability has also challenged the U.K. court’s jurisdiction, arguing that the training of its AI model took place on computers run by U.S. tech giant Amazon.

As the legal arguments unfold, a recent decision by the Court of Appeal narrowed the scope of the case.

On Monday, the court rejected Getty’s attempt to introduce into the case claims that Stability’s AI model could generate child sexual abuse material.

Getty had originally referred more generally to “pornography” in its pleadings but later sought to argue that this implicitly included child sexual abuse material. The court found that this was not sufficiently pleaded and had not been supported with specific examples.

The trial is one of the first of its kind in the world to reach this stage, drawing global scrutiny as regulators and courts grapple with how to balance the interests of creators and tech companies.

“This is, to my knowledge, the only case currently going ahead in the U.K.,” said Alina Trapova, professor of law at University College London, whose research focuses on copyright law and the impact of AI on the creative industries.

“Many more and parallel lawsuits have been launched in the U.S.,” Trapova added. “In the U.K., others might be in the making, but this is going to have a very important impact on the debate.”

Clash between innovation and ownership

The case comes at a critical moment in the U.K., which has pitched itself as a global leader in AI, despite lacking a settled framework for AI and copyright.

In a January speech at University College London, Prime Minister Keir Starmer outlined his vision for Britain to become an “AI superpower,” pledging to create designated data center zones and adopt a pro-innovation approach to regulation.

The government estimates that AI could boost productivity by 1.5% annually, adding $57 billion to the economy each year.

“Britain will be one of the great AI superpowers,” Starmer said. “We’re going to make the breakthroughs, we’re going to create the wealth, and we’re going to make AI work for everyone in our country.”

As the global AI race accelerates, the Getty case has become a flashpoint in the debate over how far the U.K. is willing to go to protect creative rights along the way. But it’s not the only one.

On May 10, hundreds of British artists, including Dua Lipa, Elton John, Coldplay and Paul McCartney, signed an open letter urging the prime minister to protect their copyright and not “give our work away” to “a handful of powerful overseas tech companies.”

They warn the creative industry will be under threat if the government carries out its plan to let AI companies use copyright-protected work without permission.

Trapova said the U.K., no longer bound by EU directives, has the chance to strike its own balance.

“This is seen as an opportunity to get it right in terms of balance of interests between rightholders, tech industry and the general public,” she said.

A British case with global stakes

Under current U.K. law, text and data mining for commercial purposes is not exempt from copyright restrictions — a key issue at the heart of Getty’s complaint.

“There is no exception to copyright infringement for text and data mining for commercial use,” Trapova said, noting that a public consultation on potential reforms just closed in February.

“AI companies may be required to license all training data,” she added. “That said, this is very difficult in practice.”

The outcome could influence not only U.K. legislative efforts but also international copyright debates.

Getty has filed a similar lawsuit against Stability AI in the U.S. District Court for the District of Delaware, though that case remains in its early stages.

“Whichever way it goes though, the case is not going to solve the licensing debate,” Trapova said. There is no one-size-fits-all approach, she added. “Text-to-text, text-to-image, text-to-video — all employ different techniques and that complicates the matter.”

There needs to be an international approach, Trapova suggested, and such efforts are gaining some momentum in legislation around the world, including in the European Union.

The AI Act, the EU’s landmark regulation, classifies AI systems by risk level. Under the act, which came into force in August 2024, generative AI systems must comply with transparency requirements and EU copyright law.

Under a separate piece of EU legislation, rightsholders such as Getty can opt out of commercial text and data mining, preventing their work from being used to train AI models.

With a draft AI bill moving through the British Parliament, Trapova added that the court’s findings could carry political as well as legal weight.

“All eyes are on the court,” she said. “It is an incredibly difficult situation to maneuver around, considering the fact that the public consultation results have not come out and a draft bill is going through various debates, which hits squarely on the issue of licensing and transparency of training data.”

The trial is expected to run to the end of June, with a ruling likely later this year.

Follow @jayfranklinlive
