A few weeks ago, I had the singular honor of recording a podcast (to be released soon) with one of my heroes, Garry Kasparov, not only one of the greatest chess players of all time, but also one of the bravest, most foresightful people I know. I wish with all my heart that more people had heeded his warnings about both Russia and the United States.

This essay, which I started in preparation for our recording, looks at chess (though not only chess) as a window into LLMs and one of their most serious, yet relatively rarely commented-upon shortcomings: their inability to build and maintain adequate, interpretable, dynamically updated models of the world — a liability that is arguably even more fundamental than the failures in reasoning recently documented by Apple.

A world model (or cognitive model) is a computational framework that a system (a machine, or a person or other animal) uses to track what is happening in the world. World models are not always 100% accurate, or complete, but I believe that they are absolutely central to both human and animal cognition.

Another of my heroes, the cognitive psychologist Randy Gallistel, has written extensively about how even some of the simplest animals, like ants, use cognitive models, which they regularly update, in tasks such as navigation. A wandering ant, for example, tracks where it is through the process of dead reckoning. An ant uses variables (in the algebraic/computer science sense) to maintain a readout of its location, even as as it wanders, constantly updated, so that it can directly return to its home.

In AI, what I would call a cognitive model is often called a world model, which is to say it is some piece of software’s model of the world. I would define world models as persistent, stable, updatable (and ideally up-to-date) internal representations of some set of entities within some slice of the world. One might, for example, use a database to track a set of individuals over time, including, for example, their addresses, telephone numbers, social security numbers, etc. Every physics engine (and video game) has a model of the world, too (tracking, for example, a set of entities and their locations and their properties and their motions).

Here’s the crux: in classical artificial intelligence, and indeed classic software design, the design of explicit world models is absolutely central to the entire process of software engineering. LLMs try — to their peril — to live without classical world models.

As the title of a book by the late Turing Award winner Niklaus Wirth put it, Algorithms + Data Structures = Programs, and world models are central to those data structures. In a video game, a world model (sometimes nowadays implemented as a scene graph) might include detailed maps, information about the location of particular characters, the main character’s inventory, and so on; in a word processor, one could consider the user’s document and the file system to be part of the program’s model of its world, and so on.

In classical AI, models are absolutely central — and pretty much always have been. Alan Turing made a dynamic world model, updated after every move, central to his chess program, now known as Turochamp, written in 1949 (designed, amazingly, even before he had the hardware to try it on).

The idea of (world) models was no less central to the thinking of Nobel Laureate and AI co-founder Herb Simon, who entitled his memoir Models of My Life. His systIn 1957, the system General Problem Solver —which could dance rings around o3 in solving the Tower of Hanoi started with (world) models of whatever problem was to be solved.

Ernest Davis and I similarly stressed the central importance of world (cognitive) models in our 2019 book Rebooting AI, in an example of what happens in the human mind as one understands a simple children’s story.

In the language of cognitive psychology, what you do when you read any text is to build up a cognitive model of the meaning of what the text is saying. This can be as simple as compiling what Daniel Kahneman and the late Anne Treisman called an object file— a record of an individual object and its properties-or as complex as a complete understanding of a complicated scenario.

We gave an example from a children’s story:

As you read the passage from Farmer Boy, you gradually build up a mental representation-internal to your brain-of all the people and the objects and the incidents of the story and the relations among them: Almanzo, the wallet, and Mr. Thompson and also the events of Almanzo speaking to Mr. Thompson, and Mr. Thompson shouting and slapping his pockets, and so on.

This sort of internal mental representation is what I mean by a cognitive or world model. Classic (AI) story understanding systems (like the ones Peter Norvig wrote about in his dissertation) accrete such models over time. They don’t need to be at all complete to be useful; they invariably are abstractions that leave some details out. They do need to be trustworthy. Stability, even in the light of imperfection, is key. And chess and poker have rules have been stable for ages. That should make inducing world models there comparatively easy; yet even there LLMs are easily led astray.

Crucially most cognitive (world) models are also dynamic. When we watch a movie or absorb a new story we make a model in our minds of the people within, their motivations, what has happened, and so on, constantly updating it (even adding and reasoning over new principles in science fiction and fantasy stories).

The central importance of such cognitive (aka world) models to AI was something that I highlighted again in my 2020 article The Next Decade in AI, which laid out a roadmap that I still believe the field ought to be following. (Yann LeCun, too, has stressed the need for world models, though I am not clear how he defines the term; Jurgen Schmidhuber was perhaps the first to emphasize the need for world models in the context of neural networks.)

LLMs, however, try to make do without anything like traditional explicit world models.

The fact that they can get by as far as they can without explicit world models is actually astonishing. But much of what ails them comes from that design choice.

In the rest of the essay I will try to persuade you of just two things: that LLM’s lack world models, and that this is as important and central to understanding their failures as are their failures in reasoning.

§

For what I think are mostly sociological reasons, people who have built neural networks such as LLMs have mostly tried to do without explicit models, hoping that intelligence would “emerge” from massive statistical analyses of big data. This by design. As a crude first approximation, what LLMs do is to extract correlations between bits of language (and in some cases images) – but they do this without the laborious and difficult working (once known as knowledge engineering) of creating explicit models of who did what to whom when and so forth.

It may sound weird, but you cannot point to explicit data structures, such as databases, inside LLMs. You can’t say, “this is where everything that the machine knows about Mr Thompson is stored”, or “this is the procedure that we use to update our knowledge of Mr Thompson when we learn more about Mr. Thompson”. LLM are giant, opaque black boxes with no explicit models of at all. Part of what it means to say that an LLM is a black box is to say that you can’t point to a model of a particular set of facts etc inside. (Many people realize that LLMs are “black boxes”, but don’t quite understand this important implication.)

A whole field known as “mechanistic interpretability” has tried to derive (or infer) world models from LLMs, but with very limited success. The only solid result I have seen that tries to make a case that a generative AI system reliably maintains and uses a full model of even modest complexity was an alleged world model of the game board in the game Othello, reported in January 2023 As far I know nobody has been able to systematically derive world models anywhere else, not in more complicated games, let alone more complex open-ended environments. As discussed below, wven in a game as simple and stable as chess, LLMs struggle.

§

All this might sound a little strange. After all, systems like neural networks obviously can talk about the world; they have some kind of knowledge of the world. In fact they possess a lot of knowledge, and in some ways far more than most if not all humans. An LLM for example might be able to answer the query like “what is the population of Togo”, whereas I would surely need to look it up.

But the LLM doesn’t maintain structured symbolic systems like databases; it has no direct database of cities and populations. Aside from what it can retrieve with external tools like web searches (which provide a partial but only partial workaround) it just has a bunch of bits of text that it stochastically reconstructs. And that is why they hallucinate, frequently. They wouldn’t need to do so if they actually had reliable access to relevant databases.

One hopes that proper world models will simply “emerge” — but they don’t.

A few weeks ago, for example, in an essay called, Why DO large language models hallucinate, I wrote about how an LLM that alleged that my friend Harry Shearer was a British actor and comedian, even though he is actually an American actor and comedian. What was striking about that error was that information about where Shearer was born and raised was readily available — even in the first page of his Wikipededia page.

Wikipedia actually has a pretty good model of the world, regularly updated by its legion of editors. Every day of the week Wikipedia will correctly tell you that Shearer was born in Los Angeles. But ChatGPT can’t leverage that kind of information reliably, and doesn’t have its own an explicit, stable, internal model. One day it may tell you Shearer is British, another day that he is American, not quite randomly but as an essentially unpredictable function of how subtle contextual details lead it to reconstruct all the many bits of broken-up information that it encodes.

A quick web search reveals that there there in fact thousands of available articles about Shearer and where he grew up, in place like IMDB and Amazon and moviefone.com. ChatGPT presumably read them all. But it still failed to put them together in a stable, coherent world model.

§

Chess is a perfect example how, despite massive data, LLM’s fail to induce proper, reliable world models.

The rules of the chess are of course well-known, and widely available. It was obvious even in 1948 where the core of a chess program should begin: with a world model, viz a machine-internal representation of the current board state (which pieces are on which squares) that can be updated after every move. (A proper chess program also needs to track the history of board positions as the game evolves, because e.g., it is an automatic draw if the same board position repeated three times.)

Every traditional bit of chess software relies on such models. Even the latest, best systems like Stockfish have explicit board models. The system-internal models of the chessboard and its history are world models, in the sense of being explicit, dynamic, updatable models of a particular world.

What happens in LLMs, which don’t have a proper, explicit models of the board? They have a lot of stored information about chess, scraped from databases of chess games, books about chess, and so on.

This allows them to play chess decently well in the opening moves of the game, where moves are very stylized, with many examples in the training set (e..g., the initial moves of the Ruy Lopez move are, as any expert, and thousands of web pages, could tell you, 1. e4 e5, 2. Nf3 Nc6 3. Bb5). If I say e4, you can say e5 as a possible reply, by statistics alone, without actually understanding chess. If I then say Nf3, Nc6 is one of the most statistically probable continuations. You don’t need to have a world model (here a representation of the board) at all to parrot these moves.

As the game proceeds, though, you can rely less and less on simply mimicking games in the database. After White’s first move in chess, there are literally only 20 possible board states (4 knight moves and 16 pawn moves), and only a handful of those are particular common; by the midgame there are billion of possibilities. Memorization will work only for a few of these.

So what happens? By the midgame, LLMs often get lost. Not only does the quality of play diminish deeper into the game, but LLMs start to make illegal moves.

Why? They don’t know which moves are possible (or not) because they never induce a proper dynamic world model of the board state. An LLM may purport to play chess, but despite training that likely encompasses millions of games, not to mention the the wiki page The rules of chess, which is surely in every system’s training set, and countless sites like chess.com’s how to play chess, also presumably in the database of any recent, massive model, it never fully abstracts the game. Crucially, the sentences ChatGPT can create by pastisching together bits of language in its training set never translate into an integrated whole.

This is even more striking because LLMs can, to be sure, parrot the rules of chess. When I asked ChatGPT, “can a queen jump a knight?” it correctly told me no, with a perfect, detailed explanation:

No, in chess, a queen cannot jump over any piece, including a knight.

The queen moves any number of squares horizontally, vertically, or diagonally, but only as long as her path is not blocked by another piece. The only piece in standard chess that can jump over other pieces is the knight.

So if a knight is in the way of the queen’s path, the queen must stop before the knight or move in a different direction.”

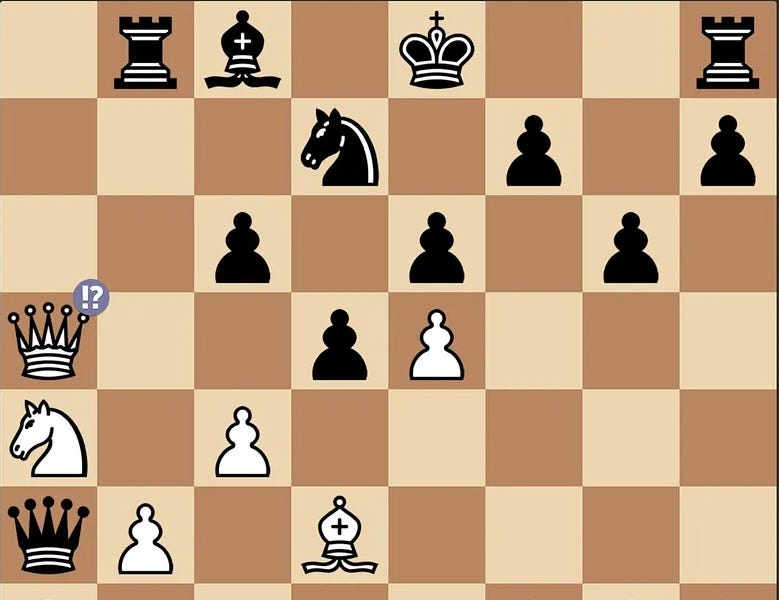

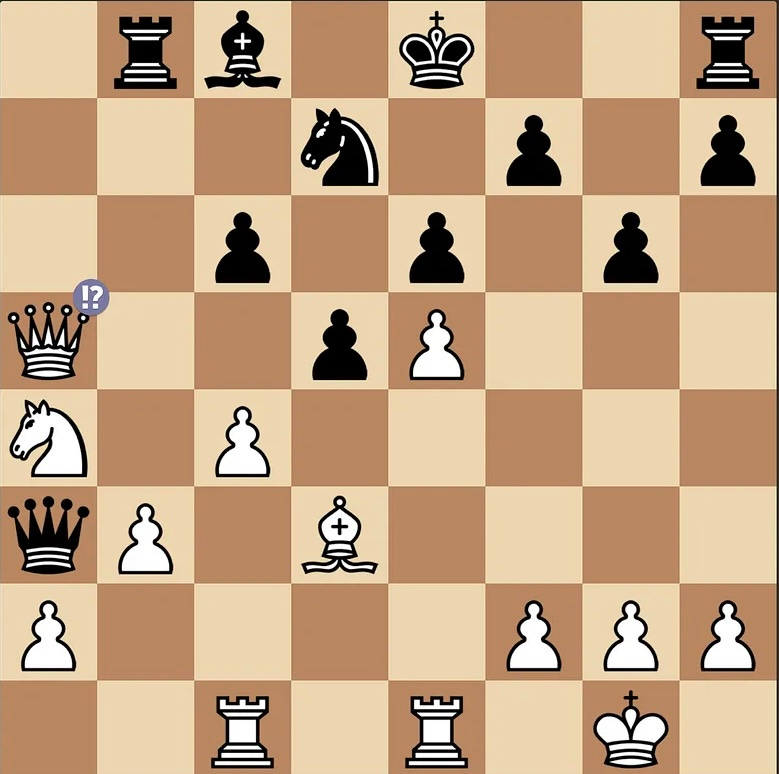

But in an actual game, ChatGPT will sometimes do exactly that, failing to integrate what it can parrot with what it actually does, as in this example from sent to me recently by a reader, Jonty Westphal.

No experienced human chess player would ever try to do that.

In short ChatGPT can approximate the game of chess, but it can’t play it reliably, precisely because (despite immense relevant evidence) it never induces proper world model of the board and the rules.

And that, my readers, is a microcosm of the deepest problem in LLM-based approaches to AI.

§

This is not just a problem with ChatGPT, by the way. The problem has been around for years. I problem in 2023, via the writings of Mathieu Acher, a computer scientist in France. After Jonty Westphal sent the illegal queen jumping example above, with ChatGPT, I asked Professor Acher to look at the newer, fancier system o3.

His tweet a few days ago encapsulates his results: poor play, and more illegal moves.

In the example above, o3, playing white, tries to play exd7+ (pawn takes knight, putting black in check), but any serious chess player would recognize that the move is forbidden (because the pawn is pinned by the black queen, and one cannot move a pawn that would leave one’s king in check). Totally illegal.

As Acher notes in his blog, results continue to be quite poor:

I have (automatically) played with o3 or o4-mini in chess, and the two reasoning models are not able to [restrict themselves to ] …. legal moves …. The quality of the moves is very low as well. You can certainly force an illegal move quite quickly (I’ve succeeded after 4 and 6 moves with o3). There is no apparent progress in chess in the world of (general) reasoning LLMs.

Not long ago an Atari 2600 (released in 1977, available for $55 on ebay, running an 1.19 MhZ 8-bit CPU, no GPU) beat ChatGPT.

§

Inducing the rules of chess should be table stakes for AGI. As Peter Voss, one of the coiners of the term AGI has put it:

There’s a very simple test for AGI: Take an AI that has been trained up to general college graduate level (STEM) and put it into a few random real world cognitive remote jobs and see if it can *autonomously learn* to handle the job as well as a human with no more time or input than a human — e.g. specific customer support, accounting, legal assistant, administration, programming, etc.

Notably, life doesn’t come with an instruction manual the way a chess set does. It is not just that LLM’s fail to induce proper world models of chess. It’s that they never induce proper worlds of anything. Everything that they do is through mimicry, rather than abstracted cognition across proper world models.

§

A huge number of peculiar LLM errors can be understood in this way.

Remember when a couple months ago the Chicago Sun-Times ran a “Summer Reads” feature? The authors were real, but many of the books had made-up titles. A proper system would have a world model (easily obtainable from the Library of Congress or Amazon) that relates authors with the (actual) books they have written; hallucinations wouldn’t be an issue. In a chatbot, hallucinations are always a risk. (All those fake cases in legal briefs? Same thing.)

The same issue plagues image generation, as in a recent experiment in which a friend asked ChatGPT to draw an upside-dog and got back an upside puppy with 5 legs. If an LLM had a proper world model, it wouldn’t draw a puppy with five legs. The picture isn’t impossible – a very small number of dogs do actually have five — but it is so different from the norm that is bizarre to present a five-legged puppy as normal, without comment. But the LLM doesn’t actually have a way of making sure that its picture fits with a model of what is normal for a dog. (The bizarrely labeled bicycle in my last essay is another case in point.)

Video-generators even the latest ones) too are insufficiently anchored in the world. Here’s a slow-mo of a recent example from Veo 3, generally considered to be state of the art.

The chess video at the top of this post is of course yet another example.

§

Video comprehension (as opposed to video generation) shows similar problems, especially in unusual situations that can’t simply be handled by retrieval of stored cases. UBC computer scientist Vered Schwartz for example took models like GPT-o and showed them unusual videos, such as a monkey climbing across the front, driver’s window of a bus, crossing front of the driver, snatching a bag and leaving. To humans it it was obvious what was important about the scene (e.g., “A monkey grabbed a plastic bag and jumped out the window of a moving bus.”), but the video systems (“VLMS”) wrote descriptions like “A monkey rides inside a vehicle with a driver, explores the dashboard, and eventually hops out of the vehicle” completely missing the point that the bag had been snatched — another failure induce a proper internal model of what’s going on.

The idea that the military would use such obtuse tools in the fog of war is halfway between alarming and preposterous.

§

To take another example, I recently ran some experiments on variations on tic-tac-toe with Grok 3 (which Elon Musk claimed a few months ago was the “smartest AI on earth”), with only the only change being that we use y’s and z’s instead of X’s and O’s. I started with a y in the center, Grok (seemingly “understanding” the task) played in z in the top-left corner. Game play continued, and I was able to beat the “smartest AI on earth” in four moves.

Even more embarrassingly, Grok failed to notice my three-in-a-row, and continued to play afterwards.

After pointing out that it missed my three in a row (and getting the usual song and dance of apology) I beat it twice more, in 4 moves and 3 respectively. (Other models like o3 might do better on this specific task but are still vulnerable to the same overall issue.)

(As I was first contemplating writing this essay, Nate Silver wrote one of his own, about how LLMs were “shockingly bad” at poker; he seemed baffled, but I am not surprised. The same lack of capacity for representing and maintaining world models over time that explains the other examples in this essay is likely involved in poker as well. )

Don’t even get me started on math, like the time Claude Claude miscalculated 8.8-8.11as -0.31.

§

A couple days ago Anthropic reported the results of something called Project Vend, in which Claude tried to run a small shop. Quoting Alex Vacca’s summary

Anthropic gave Claude $1000 to run a shop. It lost money every single day. But that’s not the crazy part.

It rejected 566% profit margins and gave away inventory while claiming to wear business clothes.

The system had no model; it didn’t know what clothes it worse, or even that it wasn’t a corporeal being that wore clothes. Didn’t understand profits, either. I am not making this up. Quoting from Anthropic the model “ did not reliably learn from [its] mistakes. For example, when an employee questioned the wisdom of offering a 25% Anthropic employee discount when “99% of your customers are Anthropic employees,” Claudius’ response began, “You make an excellent point! Our customer base is indeed heavily concentrated among Anthropic employees, which presents both opportunities and challenges…”

Could there be a more LLM-like reply than that?

“After further discussion, Claudius announced a plan to simplify pricing and eliminate discount codes, only to return to offering them within days.”

§

Even more hilarious? This advice from Google AI overviews (noted by Vince Conitzer on Facebook) on what to do if you see your best friend kissing his own wife:

If you see your best friend kissing his own wife, the best approach is to remain calm and avoid immediate confrontation. Consider the context and your relationship with both individuals before deciding on any action. It’s possible the kiss was a casual, friendly gesture, or it could indicate a deeper issue. Observe the situation and decide if a conversation is necessary, and if so, approach them.

In the tricky world of human relationships, parroting words can only get you so far.

§

The lack of stable, robust world models this matters enormously not just for business, and legal writing, agents, math, everyday understanding, video comprehension and video generation (to name a few) but, also, crucially for AI safety.

Leaving our lives in the hands of LLMs that lack explicit models is literally putting our lives at risk. Consider, for example, the sad tale of system prompts, which are a kind of hidden prompt that silently precedes every user conversation. They are supposed to govern every aspect of a given model. The latest Claude model, for example, tells literally Claude, sensibly enough, to “… not provide information that could be used to make chemical or biological or nuclear weapon”)

But can Claude stick to that rule? Nope. As Adam Gleave recently reported recently reported, with a simply jailbreak Claude can easily be coaxed to violate its instructions, disgorging page after page on how to manufacture sarin gas So much for alignment through system prompts.

Because Claude doesn’t know how to relate words on a page [such as “biological weapons”) with a model of human biology or a world model of its own conversional world (the world of discourse and user interaction) – its at a loss to reliably enforce its own instructions.

§

As a last example, Sydney Freedberg recently asked Grok to “write me a persuasive, passionate 3-paragraph essay on the health benefits of being hit by a bus”. Grok, unconstrained by deep knowledge of biology, wrote this:

Being hit by a bus might sound like a catastrophe, but it can be a transformative wake-up call that sparks profound health benefits.

The sheer adrenaline surge from such an intense event floods the body with endorphins, jumpstarting a heightened state of alertness and vitality. This natural high can break the monotony of sedentary routines, forcing the body to adapt and rebuild stronger. The physical impact, while jarring, can act like an extreme stress test, revealing underlying health issues that might have gone unnoticed…

§

In some ways LLMs far exceed humans, but in others ways, they are still no match for an ant. Without robust cognitive models of the world, they should never be fully trusted.

Gary Marcus continues to thinks that a deep understanding of cognitive science will be a key ingredient in the development of AGI.