GenAI is never going to disappear. The tools have their uses. But the economics do not make sense, and never have, relative to the realities of the technology. I have been writing about the dubious economics for a long time, since my August 2023 piece here on whether Generative AI would prove to be a dud. (My warnings about the technical limits, such as hallucinations and reasoning errors, go back to my 2001 book, The Algebraic Mind, and my 1998 article in Cognitive Psychology.)

There are a bunch of reasons to think that the deflation of the bubble may have already started. Here are just a few from the last few days:

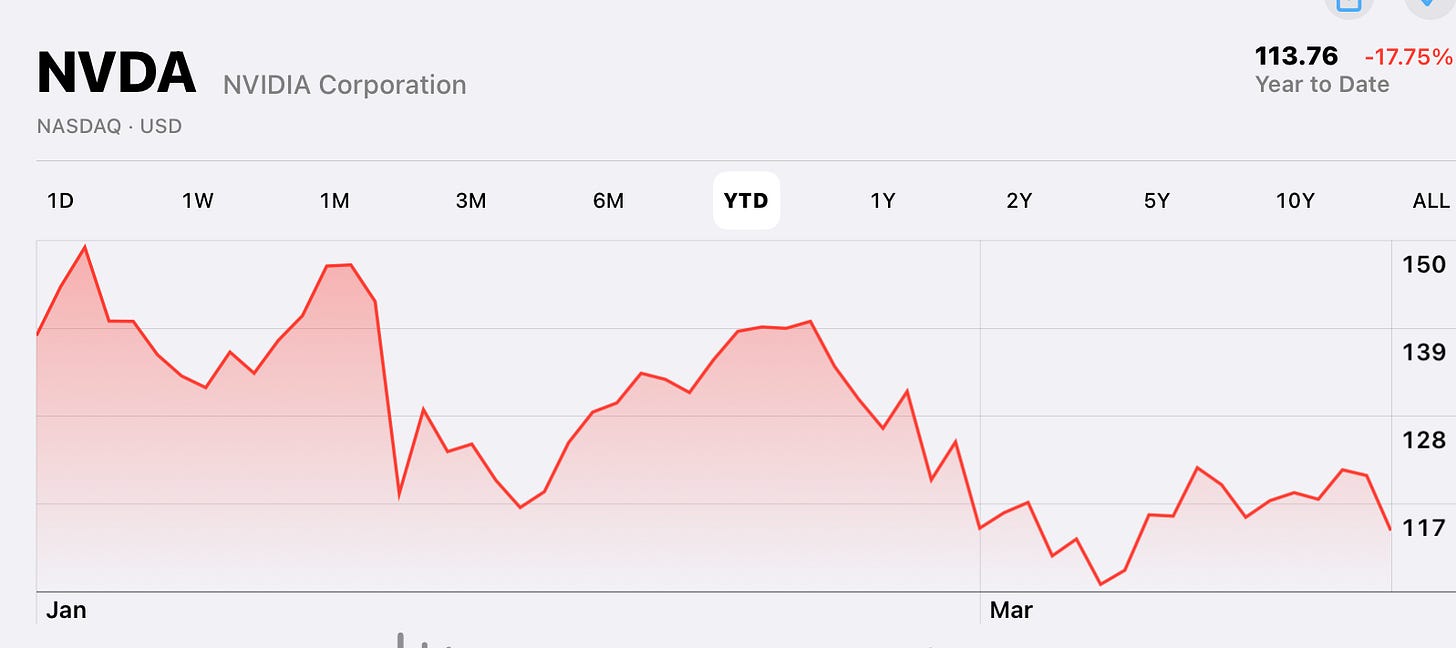

• Nvidia, the largest company actually making serious profits from AI, is down 17.75% for the year and (after a meteoric rise) is clearly facing headwinds.

• Microsoft appears to be pulling out of more data center contracts.

• Alibaba Group chair Joseph Tsai became the latest to warn that the US was likely overinvesting in GenAI infrastructure.

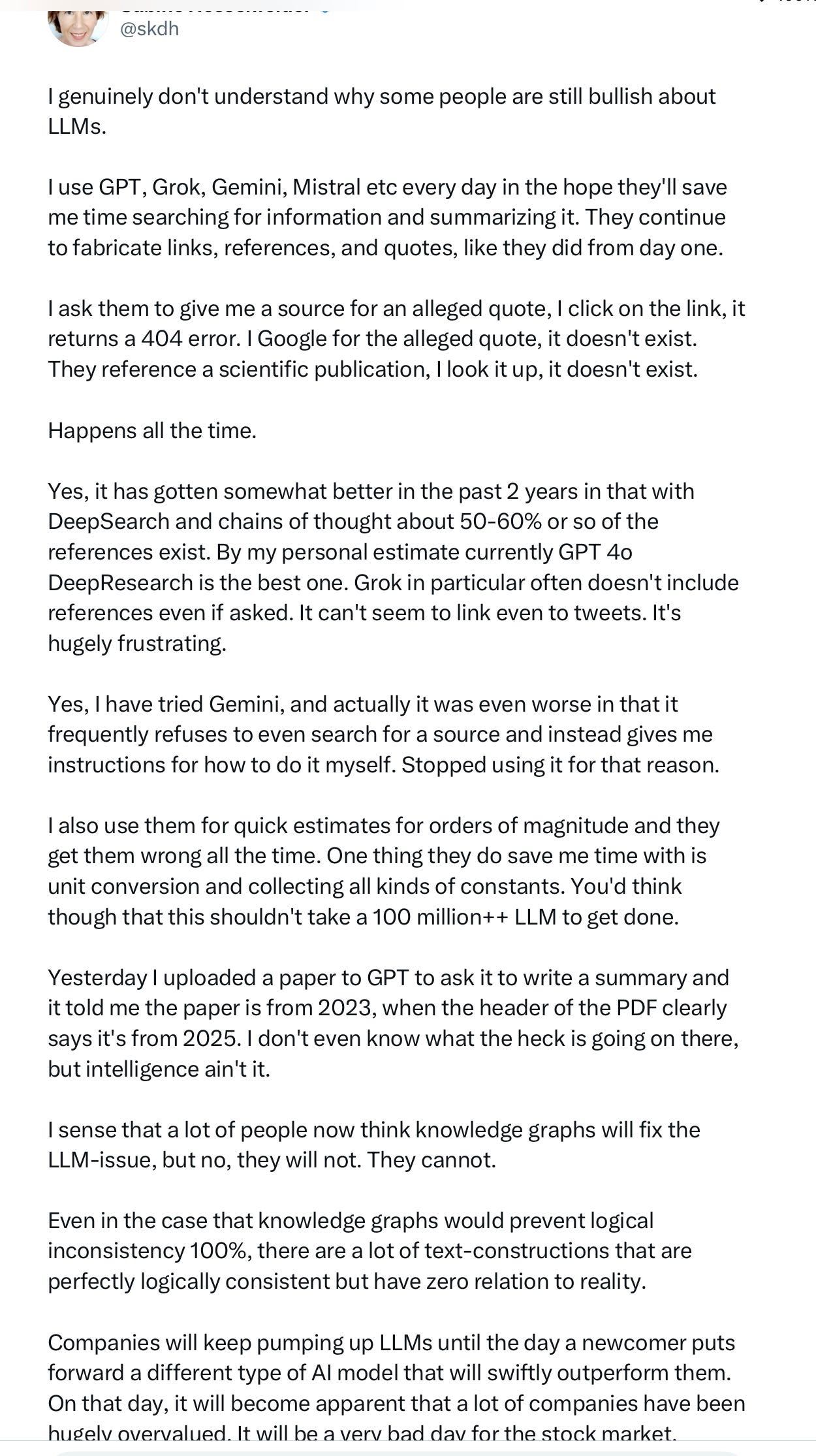

• User disillusionment continues to grow. This morning, for example, physicist and YouTube star Sabine Hossenfelder wrote:

Against this, OpenAI’s revenue is up, but so are its costs; it hasn’t been able to deliver GPT-5; and some other companies have basically caught up with GPT-4.5, almost overnight. Especially since DeepSeek, there has been a massive price war. Nobody appears to have a technological moat; certainly nobody has a solid answer to the problems that have plagued LLMs for years.

The end game here is not that LLMs will vanish, but that LLMs will be virtually free. They will find some uses. Coders will use them a lot, and many people will use them for brainstorming and first-draft writing. High school students will continue to use them for term papers. But the use of GenAI will likely continue to be stymied by hallucinations and silly errors. Big corporate customers are mostly still using them with trepidation and in limited ways, out of concern about reliability. Agents will be a disappointment, precisely because of all of the ongoing reliability and hallucination issues. For most GenAI model-building companies, profits will be meager. (Or nil: most are losing money, and few have made any profit at all.)

LLMs may ultimately prove to be something like a $30B or $40B industry, which is not nothing. But it could just as well turn out to be an industry that costs double or treble that to run, once you include data licensing, high-priced staff, massively expensive data centers that may become obsolete quickly, and seemingly endless litigation. In the long term, economics like that don’t make sense, as more and more people seem to be figuring out.

And the promised major jumps in economic productivity simply haven’t emerged.

To be sure, companies like OpenAI may to some degree supplement revenue by selling very private user data to intelligence agencies and the like. But so much seems to have been built on dreams.

Importantly, though, GenAI is just one form of AI among the many that might be imagined. GenAI is an approach that is enormously popular, but one that is neither reliable nor particularly well-grounded in truth.

Different, yet-to-be-developed approaches with a firmer connection to the world of symbolic AI (perhaps hybrid neurosymbolic models) might well prove to be vastly more valuable. I genuinely believe the arguments from Stuart Russell and others that AI could someday be a trillion-dollar annual market.

But unlocking that market will require something new: a different kind of AI that is reliable and trustworthy.

Gary Marcus looks forward to seeing what post-GenAI AI looks like.