Introduction

AI safety has become a key subject with the recent progress of AI. Debates on the topic have helped outline desirable properties a safe AI should have, such as provenance (where does the model come from), confidentiality (how to ensure the confidentiality of prompts or of the model weights), or transparency (how to know which model is being used on the data).

While such discussions have been necessary to define what properties such models should have, they are not sufficient, as there are few technical solutions to actually guarantee that those properties are implemented in production.

See our other post with Mithril Security on verifiable training of AI models.

For instance, there is no way to guarantee that a good actor who trained an AI satisfying some safety requirements has actually deployed that same model, nor is it possible to detect if a malicious actor is serving a harmful model. This is due to the lack of transparency and technical proof that a specific and trustworthy model is indeed loaded in the backend.

This need for technical answers to AI governance challenges has been expressed at the highest levels. For instance, the White House Executive Order on AI Safety and Security has highlighted the need to develop privacy-preserving technologies and the importance of having confidential, transparent, and traceable AI systems.

Hardware-backed security today

Fortunately, modern techniques in cryptography and secure hardware technology provide the building blocks for verifiable systems that can enforce AI governance policies. For example, an unforgeable cryptographic proof can be created to attest that a model results from running specific code on a specific dataset. This could help resolve copyright disputes, or prove that a certain number of training epochs were run, verifying whether a compute threshold has or has not been breached.
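
As a rough illustration of the idea above, the following Python sketch binds a model to the code and dataset that produced it by hashing all three and authenticating the resulting record. It is not the method used in this project: the function names are hypothetical, and the HMAC key is a stand-in for a signing key that, in a real deployment, would be protected by secure hardware.

```python
import hashlib
import hmac
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_provenance_record(code: bytes, dataset: bytes, weights: bytes, epochs: int) -> dict:
    """Bind the trained weights to the exact code and dataset that produced them."""
    return {
        "code_sha256": sha256_hex(code),
        "dataset_sha256": sha256_hex(dataset),
        "weights_sha256": sha256_hex(weights),
        "epochs": epochs,
    }


def seal(record: dict, key: bytes) -> str:
    """Authenticate the record. HMAC is a stand-in for a hardware-rooted signature."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(record: dict, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it to the given one in constant time."""
    return hmac.compare_digest(seal(record, key), tag)


# Assumption: placeholder key and placeholder inputs, for illustration only.
key = b"hypothetical-demo-key"
record = build_provenance_record(b"train.py contents", b"dataset bytes", b"weights bytes", epochs=3)
tag = seal(record, key)

# Changing any field (here, the claimed number of epochs) invalidates the tag.
tampered = dict(record, epochs=1)
print(verify(record, tag, key))    # True
print(verify(tampered, tag, key))  # False
```

The point of the design is that a verifier only needs the record and its tag, not the training data itself, to detect that a claim about the code, dataset, weights, or epoch count has been altered.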

The field of secure hardware has been evolving and has reached a stage where it can be used in production to make AI safer. While initially developed for users’ devices (e.g. iPhones use secure enclaves to securely store and process biometric data), large server-side processors have become mature enough to tackle AI workloads.

While recent cutting-edge AI hardware, such as Intel Xeon with Intel SGX or Nvidia H100s with Confidential Computing, possesses the features needed to implement AI governance properties, few projects have yet emerged that leverage them to build AI governance tooling.

Proof-of-concept: Secure AI deployment

The Future of Life Institute, an NGO leading the charge for safety in AI systems, has partnered with Mithril Security, a startup pioneering the use of secure hardware with enclave-based solutions for trustworthy AI. This collaboration aims to demonstrate how AI governance policies can be enforced with cryptographic guarantees.

In our first joint project, we created a proof-of-concept demonstration of confidential inference.

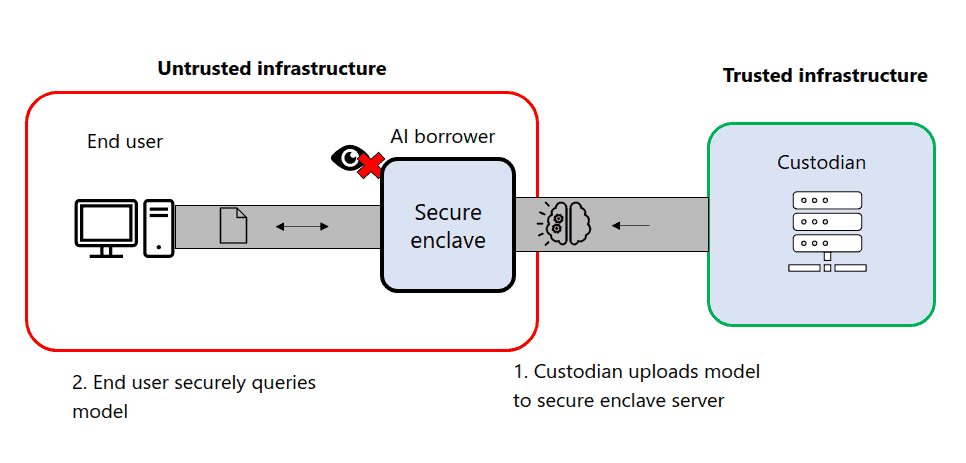

Scenario: Leasing a confidential, high-value AI model to an untrusted party

The use case we are interested in involves two parties:

an AI custodian with a powerful AI model

an AI borrower who wants to consume the model on their infrastructure but is not to be trusted with the weights directly

The AI custodian wants technical guarantees that:

the model weights are not directly accessible to the AI borrower

trustable telemetry is provided to show how much compute is being used

a non-removable off-switch can be used to shut down inference if necessary

Current AI deployment solutions, where the model is shipped to the AI borrower's infrastructure, provide no IP protection, and it is trivial for the AI borrower to extract the weights without the custodian's knowledge.

Through this collaboration, we have developed a framework for packaging and deploying models in an enclave using Intel secure hardware. This enables the AI custodian to lease a model, deployed on the infrastructure of the AI borrower, with hardware guarantees that the weights are protected and that the trustable consumption telemetry and the off-switch are enforced.
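
The two control guarantees described above, telemetry and an off-switch, can be illustrated with a toy wrapper. This is a minimal sketch under stated assumptions, not the BlindAI implementation: the class and method names are hypothetical, and plain Python enforces nothing against a borrower who controls the machine. In the proof-of-concept, the enclave is what provides the hardware guarantees for this kind of logic.

```python
from dataclasses import dataclass
from typing import Callable


class InferenceDisabled(Exception):
    """Raised when the custodian has switched the model off."""


@dataclass
class GovernedModel:
    """Toy wrapper: the off-switch is checked first, then the call is counted."""
    predict_fn: Callable[[str], str]
    enabled: bool = True
    request_count: int = 0

    def infer(self, prompt: str) -> str:
        if not self.enabled:
            raise InferenceDisabled("model switched off by custodian")
        self.request_count += 1
        return self.predict_fn(prompt)

    def telemetry(self) -> dict:
        """Report consumption without exposing prompts or weights."""
        return {"requests": self.request_count, "enabled": self.enabled}

    def switch_off(self) -> None:
        self.enabled = False


# Assumption: a trivial function stands in for a real model.
model = GovernedModel(predict_fn=lambda p: p.upper())
model.infer("hello")
model.infer("world")
print(model.telemetry())  # {'requests': 2, 'enabled': True}

model.switch_off()
try:
    model.infer("again")
except InferenceDisabled as exc:
    print(exc)  # model switched off by custodian
```

Because the check and the counter sit in the same code path as the model call, the borrower cannot run inference without being metered, provided that code path itself cannot be modified, which is the property the enclave is meant to supply.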

While this proof-of-concept is not necessarily deployable as is, due to performance constraints (we used Intel CPUs) and specific hardware attacks that still need mitigation, it demonstrates how enclaves can enable collaboration under agreed terms between parties with potentially misaligned interests.

By building upon this work, one can imagine how a country like the US could lease its advanced AI models to allied countries while ensuring the model’s IP is protected and the ally’s data remains confidential.

Open-source deliverables available

This proof of concept is made open-source under an Apache-2.0 license. It is based on BlindAI, an open-source secure AI deployment solution using Intel SGX, audited by Quarkslab.

We provide the following resources to explore in more detail our collaboration on hardware-backed AI governance:

A demo to understand how controlled AI consumption works and what it looks like in practice.

Open-source code to reproduce our results.

Technical documentation to dig into the specifics of the implementation.

Future investigations

By developing and evaluating frameworks for hardware-backed AI governance, FLI and Mithril hope to help encourage the creation and use of such measures so that we can keep AI safe without compromising the interests of AI providers, users, or regulators.

Many other capabilities are possible, and we plan to roll out demos and analyses of more of them in the coming months.

Many of these can be implemented on existing and widely deployed hardware to allow AI compute governance backed by hardware measures. This answers concerns that compute governance mechanisms are unenforceable or enforceable only with intrusive surveillance.

The security of these measures needs testing and improvement for some scenarios, and we hope these demonstrations, and the utility of hardware-backed AI governance, will encourage both chipmakers and policymakers to include more and better versions of such security measures in upcoming hardware.
