AI agents are quickly becoming an integral part of customer workflows across industries by automating complex tasks, enhancing decision-making, and streamlining operations. However, the adoption of AI agents in production systems requires scalable evaluation pipelines. Robust agent evaluation lets you gauge how well an agent performs specific actions and gain insight into its behavior, which supports AI agent safety, control, trust, transparency, and performance optimization.

Amazon Bedrock Agents uses the reasoning of foundation models (FMs) available on Amazon Bedrock, APIs, and data to break down user requests, gather relevant information, and efficiently complete tasks—freeing teams to focus on high-value work. You can enable generative AI applications to automate multistep tasks by seamlessly connecting with company systems, APIs, and data sources.

Ragas is an open source library for testing and evaluating large language model (LLM) applications across various LLM use cases, including Retrieval Augmented Generation (RAG). The framework enables quantitative measurement of the effectiveness of the RAG implementation. In this post, we use the Ragas library to evaluate the RAG capability of Amazon Bedrock Agents.

LLM-as-a-judge is an evaluation approach that uses LLMs to assess the quality of AI-generated outputs. This method employs an LLM to act as an impartial evaluator, to analyze and score outputs. In this post, we employ the LLM-as-a-judge technique to evaluate the text-to-SQL and chain-of-thought capabilities of Amazon Bedrock Agents.

Langfuse is an open source LLM engineering platform, which provides features such as traces, evals, prompt management, and metrics to debug and improve your LLM application.

In the post Accelerate analysis and discovery of cancer biomarkers with Amazon Bedrock Agents, we showcased research agents for cancer biomarker discovery for pharmaceutical companies. In this post, we extend the prior work and showcase Open Source Bedrock Agent Evaluation with the following capabilities:

Evaluating Amazon Bedrock Agents on its capabilities (RAG, text-to-SQL, custom tool use) and overall chain-of-thought

Comprehensive evaluation results and trace data sent to Langfuse with built-in visual dashboards

Trace parsing and evaluations for various Amazon Bedrock Agents configuration options

First, we conduct evaluations on a variety of different Amazon Bedrock Agents. These include a sample RAG agent, a sample text-to-SQL agent, and pharmaceutical research agents that use multi-agent collaboration for cancer biomarker discovery. Then, for each agent, we showcase navigating the Langfuse dashboard to view traces and evaluation results.

Technical challenges

Today, AI agent developers generally face the following technical challenges:

End-to-end agent evaluation – Although Amazon Bedrock provides built-in evaluation capabilities for LLMs and RAG retrieval, it lacks metrics specifically designed for Amazon Bedrock Agents. Agents need to be evaluated on the holistic agent goal as well as on individual trace steps for specific tasks and tool invocations. Support is also needed for both single-agent and multi-agent setups, and for both single-turn and multi-turn datasets.

Challenging experiment management – Amazon Bedrock Agents offers numerous configuration options, including LLM model selection, agent instructions, tool configurations, and multi-agent setups. However, conducting rapid experimentation with these parameters is technically challenging due to the lack of systematic ways to track, compare, and measure the impact of configuration changes across different agent versions. This makes it difficult to effectively optimize agent performance through iterative testing.

Solution overview

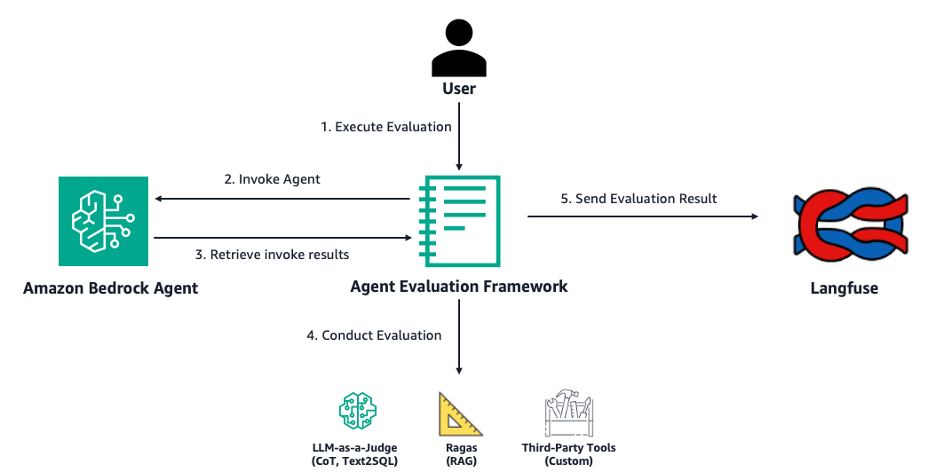

The preceding figure illustrates how Open Source Bedrock Agent Evaluation works at a high level. The framework runs an evaluation job that invokes your own agent in Amazon Bedrock and evaluates its response.

The workflow consists of the following steps:

The user specifies the agent ID, alias, evaluation model, and dataset containing question and ground truth pairs.

The user executes the evaluation job, which will invoke the specified Amazon Bedrock agent.

The retrieved agent invocation traces are run through custom parsing logic in the framework.

The framework conducts an evaluation based on the agent invocation results and the question type:

Chain-of-thought – LLM-as-a-judge with Amazon Bedrock LLM calls (conducted for every evaluation run for different types of questions)

RAG – Ragas evaluation library

Text-to-SQL – LLM-as-a-judge with Amazon Bedrock LLM calls

Evaluation results and parsed traces are gathered and sent to Langfuse for evaluation insights.
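The invocation and trace-collection steps above can be sketched as follows. This is a minimal sketch, not the framework's own parsing logic. It assumes the boto3 `bedrock-agent-runtime` client and its `invoke_agent` call with `enableTrace=True`, whose `completion` event stream yields `chunk` and `trace` events. The helper names `collect_agent_events` and `invoke_agent_with_trace` are hypothetical.

```python
import uuid


def collect_agent_events(event_stream):
    """Split an agent event stream into the final answer text and raw trace events."""
    answer_bytes = []
    traces = []
    for event in event_stream:
        if "chunk" in event:
            # Answer text arrives as UTF-8 bytes, possibly spread over several chunks.
            answer_bytes.append(event["chunk"]["bytes"])
        elif "trace" in event:
            # Trace events are only emitted when tracing is enabled on the call.
            traces.append(event["trace"])
    # Join before decoding so a multi-byte character split across chunks still decodes.
    return b"".join(answer_bytes).decode("utf-8"), traces


def invoke_agent_with_trace(agent_id, agent_alias_id, question, region_name=None):
    """Invoke an Amazon Bedrock agent once and return (answer, traces)."""
    import boto3  # imported lazily so the parser above has no AWS dependency

    client = boto3.client("bedrock-agent-runtime", region_name=region_name)
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=str(uuid.uuid4()),  # fresh session per call (an assumption of this sketch)
        inputText=question,
        enableTrace=True,
    )
    return collect_agent_events(response["completion"])
```

Keeping the stream parser separate from the AWS call makes it possible to exercise the parsing step against recorded or synthetic events without invoking an agent.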

Prerequisites

To deploy the sample RAG and text-to-SQL agents and follow along with evaluating them using Open Source Bedrock Agent Evaluation, follow the instructions in Deploying Sample Agents for Evaluation.

To bring your own agent to evaluate with this framework, refer to the following README and follow the detailed instructions to deploy the Open Source Bedrock Agent Evaluation framework.

Overview of evaluation metrics and input data

First, we create sample Amazon Bedrock agents to demonstrate the capabilities of Open Source Bedrock Agent Evaluation. The text-to-SQL agent uses the BirdSQL Mini-Dev dataset, and the RAG agent uses the Hugging Face rag-mini-wikipedia dataset.

Evaluation metrics

The Open Source Bedrock Agent Evaluation framework conducts evaluations on two broad types of metrics:

Agent goal – Chain-of-thought (run on every question)

Task accuracy – RAG, text-to-SQL (run only when the specific tool is used to answer the question)

Agent goal metrics measure how well an agent identifies and achieves the goals of the user. There are two main types: reference-based evaluation and evaluation without reference. Examples can be found in Agent Goal accuracy as defined by Ragas:

Reference-based evaluation – The user provides a reference that will be used as the ideal outcome. The metric is computed by comparing the reference with the goal achieved by the end of the workflow.

Evaluation without reference – The metric evaluates the performance of the LLM in identifying and achieving the goals of the user without reference.

We showcase evaluation without reference using chain-of-thought evaluation, which compares the agent’s reasoning against the agent’s instruction. For this evaluation, we use a subset of the metrics from the evaluator prompts for Amazon Bedrock LLM-as-a-judge. In this framework, chain-of-thought evaluations are run on every question that the agent is evaluated against.
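A chain-of-thought judge of this kind can be sketched as a prompt builder plus a response parser. This is a minimal sketch under stated assumptions: the prompt template is a placeholder and not one of the Amazon Bedrock evaluator prompts, the 0.0 to 1.0 scale and JSON reply format are assumptions, and `call_llm` is a hypothetical callable that sends a prompt to the evaluation model and returns its text.

```python
import json
import re

# Placeholder template; the real evaluator prompts are more detailed.
JUDGE_TEMPLATE = """You are an impartial evaluator.

Agent instruction:
{instruction}

Question:
{question}

Agent reasoning:
{reasoning}

Rate the reasoning for {metric} on a scale from 0.0 to 1.0.
Respond with JSON only: {{"score": <number>, "explanation": "<one sentence>"}}"""


def build_judge_prompt(metric, instruction, question, reasoning):
    """Fill the judge template for one metric and one agent response."""
    return JUDGE_TEMPLATE.format(
        metric=metric, instruction=instruction, question=question, reasoning=reasoning
    )


def parse_judge_response(text):
    """Extract the JSON object from the judge output and clamp the score to [0, 1]."""
    match = re.search(r"\{.*\}", text, flags=re.DOTALL)
    if match is None:
        raise ValueError("judge response contained no JSON object")
    data = json.loads(match.group(0))
    score = min(1.0, max(0.0, float(data["score"])))
    return score, str(data.get("explanation", ""))


def judge(metric, instruction, question, reasoning, call_llm):
    """Score one response; call_llm is any function mapping a prompt string to model text."""
    prompt = build_judge_prompt(metric, instruction, question, reasoning)
    return parse_judge_response(call_llm(prompt))
```

Passing the model call in as a function keeps the scoring logic independent of any particular model client, so the same code can be run once per metric (helpfulness, faithfulness, instruction following).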

Task accuracy metrics measure how well an agent calls the required tools to complete a given task. For the two task accuracy metrics, RAG and text-to-SQL, evaluations compare the actual agent answer against the ground truth that must be provided in the input dataset. The task accuracy metrics are only evaluated when the corresponding tool is used to answer the question.
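The rule for which evaluations run on a given question can be sketched as a small dispatch function. This is an illustrative sketch only: the labels `COT`, `RAG`, and `TEXT2SQL` follow the metric types reported in this post, while the tool names `knowledge_base` and `sql_query` and the function `select_evaluations` are hypothetical.

```python
# Hypothetical mapping from question type to the tool that must appear in the trace.
TOOL_FOR_QUESTION_TYPE = {
    "RAG": "knowledge_base",
    "TEXT2SQL": "sql_query",
}


def select_evaluations(question_type, tools_used):
    """Return the evaluation types to run for one question."""
    evaluations = ["COT"]  # chain-of-thought runs on every question
    required_tool = TOOL_FOR_QUESTION_TYPE.get(question_type)
    # Task accuracy is evaluated only if the corresponding tool was actually used.
    if required_tool is not None and required_tool in tools_used:
        evaluations.append(question_type)
    return evaluations
```

For example, a RAG question whose trace shows no knowledge base lookup would receive only the chain-of-thought evaluation under this rule.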

The following is a breakdown of the key metrics used in each evaluation type included in the framework:

RAG:

Faithfulness – How factually consistent a response is with the retrieved context

Answer relevancy – How directly and appropriately the original question is addressed

Context recall – How many of the relevant pieces of information were successfully retrieved

Semantic similarity – The assessment of the semantic resemblance between the generated answer and the ground truth

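Text-to-SQL:

Answer correctness – How well the agent’s final answer matches the ground truth answer

SQL semantic equivalence – Whether the generated SQL query is semantically equivalent to the ground truth query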

Chain-of-thought:

Helpfulness – How well the agent satisfies explicit and implicit expectations

Faithfulness – How well the agent sticks to available information and context

Instruction following – How well the agent respects all explicit directions
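Several of the RAG metrics above reduce to simple arithmetic once an LLM or embedding model has done the hard part. The following is a simplified sketch, assuming ratio-style definitions for faithfulness and context recall (supported claims divided by total claims) and cosine similarity over embeddings for semantic similarity. It is not the Ragas implementation; in practice the claim verdicts and embedding vectors come from model calls, and the function names here are hypothetical.

```python
import math


def supported_ratio(verdicts):
    """Fraction of claims judged as supported; 0.0 when there are no claims."""
    verdicts = list(verdicts)
    if not verdicts:
        return 0.0
    return sum(1 for verdict in verdicts if verdict) / len(verdicts)


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Faithfulness: which claims in the agent's answer are supported by the retrieved context?
faithfulness = supported_ratio([True, True, False, True])

# Context recall: which claims in the ground truth can be found in the retrieved context?
context_recall = supported_ratio([True, False])

# Semantic similarity: toy two-dimensional "embeddings" of the answer and the ground truth.
semantic_similarity = cosine_similarity([1.0, 0.0], [1.0, 0.0])
```

The inputs above are toy values chosen for illustration and are unrelated to the evaluation results reported in this post.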

User-agent trajectories

The input dataset is in the form of trajectories, where each trajectory consists of one or more questions to be answered by the agent. The trajectories are meant to simulate how a user might interact with the agent. Each trajectory consists of a unique question_id, question_type, question, and ground_truth information. The following are examples of actual trajectories used to evaluate each type of agent in this post.
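The structure described above can be sketched as a mapping from trajectory names to lists of questions. The four field names come from this post; the trajectory keys, the `question_type` labels, the angle-bracket placeholders, and the `validate_trajectories` helper are assumptions made for illustration and might differ from the framework's actual schema.

```python
REQUIRED_FIELDS = ("question_id", "question_type", "question", "ground_truth")

# Placeholder content only; real datasets contain actual questions and answers.
example_trajectories = {
    "Trajectory0": [
        {
            "question_id": 1,
            "question_type": "RAG",
            "question": "<question answered from the knowledge base>",
            "ground_truth": "<expected answer>",
        },
    ],
    "Trajectory1": [
        {
            "question_id": 1,
            "question_type": "TEXT2SQL",
            "question": "<question answered by querying the database>",
            "ground_truth": "<expected answer>",
        },
        {
            "question_id": 2,
            "question_type": "RAG",
            "question": "<follow-up question in the same conversation>",
            "ground_truth": "<expected answer>",
        },
    ],
}


def validate_trajectories(trajectories):
    """Check required fields and per-trajectory question_id uniqueness; return the question count."""
    total = 0
    for name, questions in trajectories.items():
        if not questions:
            raise ValueError(f"{name} has no questions")
        seen_ids = set()
        for question in questions:
            missing = [field for field in REQUIRED_FIELDS if field not in question]
            if missing:
                raise ValueError(f"{name} is missing fields: {missing}")
            if question["question_id"] in seen_ids:
                raise ValueError(f"{name} repeats question_id {question['question_id']}")
            seen_ids.add(question["question_id"])
        total += len(questions)
    return total
```

Validating the dataset before an evaluation run surfaces formatting mistakes early, before any agent invocations are made.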

For simpler agent setups, such as the RAG and text-to-SQL sample agents, we created trajectories consisting of a single question, as shown in the following examples.

The following is an example of a RAG sample agent trajectory:

The following is an example of a text-to-SQL sample agent trajectory:

Pharmaceutical research agent use case example

In this section, we demonstrate how you can use the Open Source Bedrock Agent Evaluation framework to evaluate the pharmaceutical research agents discussed in the post Accelerate analysis and discovery of cancer biomarkers with Amazon Bedrock Agents. That post showcases a variety of specialized agents, including a biomarker database analyst, statistician, clinical evidence researcher, and medical imaging expert, working in collaboration with a supervisor agent.

The pharmaceutical research agent was built using the multi-agent collaboration feature of Amazon Bedrock. The following diagram shows the multi-agent setup that was evaluated using this framework.

As shown in the diagram, the RAG evaluations will be conducted on the clinical evidence researcher sub-agent. Similarly, text-to-SQL evaluations will be run on the biomarker database analyst sub-agent. The chain-of-thought evaluation evaluates the final answer of the supervisor agent to check if it properly orchestrated the sub-agents and answered the user’s question.

Research agent trajectories

For a more complex setup like the pharmaceutical research agents, we used a set of industry-relevant, pregenerated test questions. By grouping questions by topic, regardless of which sub-agents might be invoked to answer them, we created trajectories that include multiple questions spanning multiple types of tool use. With relevant questions already generated, integrating with the evaluation framework only required formatting the ground truth data into trajectories.

We walk through evaluating this agent against a trajectory containing a RAG question and a text-to-SQL question:

Chain-of-thought evaluations are conducted for every question, regardless of tool use. This will be illustrated through a set of images of agent trace and evaluations on the Langfuse dashboard.

After running the agent against the trajectory, the results are sent to Langfuse to view the metrics. The following screenshot shows the trace of the RAG question (question ID 3) evaluation on Langfuse.

The screenshot displays the following information:

Trace information (input and output of agent invocation)

Trace steps (agent generation and the corresponding sub-steps)

Trace metadata (input and output tokens, cost, model, agent type)

Evaluation metrics (RAG and chain-of-thought metrics)

The following screenshot shows the trace of the text-to-SQL question (question ID 4) evaluation on Langfuse, which evaluated the biomarker database analyst agent that generates SQL queries to run against an Amazon Redshift database containing biomarker information.

The screenshot shows the following information:

Trace information (input and output of agent invocation)

Trace steps (agent generation and the corresponding sub-steps)

Trace metadata (input and output tokens, cost, model, agent type)

Evaluation metrics (text-to-SQL and chain-of-thought metrics)

The chain-of-thought evaluation is included as part of both questions’ evaluation traces. For both traces, LLM-as-a-judge is used to generate scores and explanations of an Amazon Bedrock agent’s reasoning on a given question.

Overall, we ran 56 questions grouped into 21 trajectories against the agent. The traces, model costs, and scores are shown in the following screenshot.

The following table contains the average evaluation scores across 56 evaluation traces.

| Metric Category | Metric Type | Metric Name | Number of Traces | Metric Avg. Value |
|---|---|---|---|---|
| Agent Goal | COT | Helpfulness | 50 | 0.77 |
| Agent Goal | COT | Faithfulness | 50 | 0.87 |
| Agent Goal | COT | Instruction following | 50 | 0.69 |
| Agent Goal | COT | Overall (average of all metrics) | 50 | 0.77 |
| Task Accuracy | TEXT2SQL | Answer correctness | 26 | 0.83 |
| Task Accuracy | TEXT2SQL | SQL semantic equivalence | 26 | 0.81 |
| Task Accuracy | RAG | Semantic similarity | 20 | 0.66 |
| Task Accuracy | RAG | Faithfulness | 20 | 0.5 |
| Task Accuracy | RAG | Answer relevancy | 20 | 0.68 |
| Task Accuracy | RAG | Context recall | 20 | 0.53 |
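A summary table like the preceding one can be produced by grouping per-trace scores by metric and averaging them. The following is a minimal sketch, assuming each score is available as a `(metric_type, metric_name, value)` record; the record layout and the `average_scores` helper are hypothetical, and the sample values are toy numbers, not the results reported in this post.

```python
from collections import defaultdict
from statistics import mean


def average_scores(trace_scores):
    """Group (metric_type, metric_name, value) records; return trace count and mean per metric."""
    grouped = defaultdict(list)
    for metric_type, metric_name, value in trace_scores:
        grouped[(metric_type, metric_name)].append(value)
    return {
        key: {"traces": len(values), "avg": round(mean(values), 2)}
        for key, values in grouped.items()
    }


# Toy records for illustration only.
toy_scores = [
    ("COT", "Helpfulness", 0.5),
    ("COT", "Helpfulness", 1.0),
    ("RAG", "Faithfulness", 0.25),
]
summary = average_scores(toy_scores)
```

Because task accuracy metrics are only computed when the corresponding tool is used, the number of traces per metric can differ across rows, which is why the count is tracked alongside the average.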

Security considerations

Consider the following security measures:

Enable Amazon Bedrock agent logging – For security best practices of using Amazon Bedrock Agents, enable Amazon Bedrock model invocation logging to capture prompts and responses securely in your account.

Check for compliance requirements – Before implementing Amazon Bedrock Agents in your production environment, make sure that the Amazon Bedrock compliance certifications and standards align with your regulatory requirements. Refer to Compliance validation for Amazon Bedrock for more information and resources on meeting compliance requirements.

Clean up

If you deployed the sample agents, run the following notebooks to delete the resources created.

If you chose the self-hosted Langfuse option, follow these steps to clean up your AWS self-hosted Langfuse setup.

Conclusion

In this post, we introduced the Open Source Bedrock Agent Evaluation framework, a Langfuse-integrated solution that streamlines the agent development process. The framework comes equipped with built-in evaluation logic for RAG, text-to-SQL, and chain-of-thought reasoning, and it integrates with Langfuse for viewing evaluation metrics. With the Open Source Bedrock Agent Evaluation framework, developers can quickly evaluate their agents and rapidly experiment with different configurations, accelerating the development cycle and improving agent performance.

We demonstrated how this evaluation framework can be integrated with pharmaceutical research agents. We used it to evaluate agent performance against biomarker questions and sent traces to Langfuse to view evaluation metrics across question types.

The Open Source Bedrock Agent Evaluation framework enables you to accelerate your generative AI application building process using Amazon Bedrock Agents. To self-host Langfuse in your AWS account, see Hosting Langfuse on Amazon ECS with Fargate using CDK Python. To explore how you can streamline your Amazon Bedrock Agents evaluation process, get started with Open Source Bedrock Agent Evaluation.

Refer to Towards Effective GenAI Multi-Agent Collaboration: Design and Evaluation for Enterprise Applications from the Amazon Bedrock team to learn more about multi-agent collaboration and end-to-end agent evaluation.

About the authors

Hasan Poonawala is a Senior AI/ML Solutions Architect at AWS, working with healthcare and life sciences customers. Hasan helps design, deploy, and scale generative AI and machine learning applications on AWS. He has over 15 years of combined work experience in machine learning, software development, and data science on the cloud. In his spare time, Hasan loves to explore nature and spend time with friends and family.

Blake Shin is an Associate Specialist Solutions Architect at AWS who enjoys learning about and working with new AI/ML technologies. In his free time, Blake enjoys exploring the city and playing music.

Rishiraj Chandra is an Associate Specialist Solutions Architect at AWS, passionate about building innovative artificial intelligence and machine learning solutions. He is committed to continuously learning and implementing emerging AI/ML technologies. Outside of work, Rishiraj enjoys running, reading, and playing tennis.
