The whale has returned.

After rocking the global AI and business community with the January 20 release of its hit open source reasoning model R1, Chinese startup DeepSeek, a spinoff of Hong Kong quantitative analysis firm High-Flyer Capital Management that was previously well known only locally, has released DeepSeek-R1-0528. The update brings DeepSeek’s free and open model near parity in reasoning capabilities with proprietary paid models such as OpenAI’s o3 and Google’s Gemini 2.5 Pro.

This update is designed to deliver stronger performance on complex reasoning tasks in math, science, business and programming, along with enhanced features for developers and researchers.

Like its predecessor, DeepSeek-R1-0528 is available under the permissive and open MIT License, supporting commercial use and allowing developers to customize the model to their needs.

Open-source model weights are available via the AI code sharing community Hugging Face, and detailed documentation is provided for those deploying locally or integrating via the DeepSeek API.

Existing users of the DeepSeek API will automatically have their model inferences updated to R1-0528 at no additional cost. The current cost for DeepSeek’s API is

For those looking to run the model locally, DeepSeek has published detailed instructions on its GitHub repository. The company also encourages the community to send feedback and questions to its service email.

Individual users can try it for free through DeepSeek’s website, though you’ll need to provide a phone number or Google Account access to sign in.

Enhanced reasoning and benchmark performance

At the core of the update are significant improvements in the model’s ability to handle challenging reasoning tasks.

DeepSeek explains in its new model card on Hugging Face that these enhancements stem from increased computational resources and algorithmic optimizations applied in post-training. This approach has resulted in notable improvements across various benchmarks.

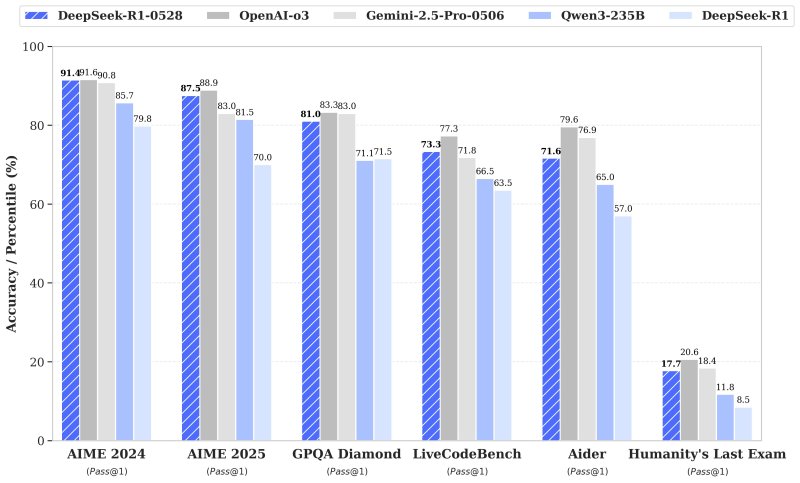

In the AIME 2025 test, for instance, DeepSeek-R1-0528’s accuracy rose from 70% to 87.5%. The gain reflects deeper reasoning: the model now averages 23,000 tokens per question, compared to 12,000 in the previous version.

Coding performance also saw a boost, with accuracy on the LiveCodeBench dataset rising from 63.5% to 73.3%. On the demanding “Humanity’s Last Exam,” performance more than doubled, reaching 17.7% from 8.5%.
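To put those figures side by side, here is a minimal sketch that computes the absolute and relative change for each benchmark number quoted above. The scores and token counts come from the article; the `gains` helper is a name made up for this illustration.

```python
# Reported figures from the article as (previous R1, R1-0528) pairs.
scores = {
    "AIME 2025 accuracy (%)": (70.0, 87.5),
    "LiveCodeBench accuracy (%)": (63.5, 73.3),
    "Humanity's Last Exam (%)": (8.5, 17.7),
    "AIME tokens per question": (12_000, 23_000),
}


def gains(before, after):
    """Return (absolute change, ratio of new value to old value)."""
    return after - before, after / before


for name, (before, after) in scores.items():
    change, ratio = gains(before, after)
    print(f"{name}: {before} -> {after} (+{change:.1f}, {ratio:.2f}x)")
```

The ratio for Humanity’s Last Exam comes out at about 2.08, which is where the “more than doubled” description comes from, while the average reasoning length on AIME grows by roughly 1.92 times.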

These advances put DeepSeek-R1-0528 closer to the performance of established models like OpenAI’s o3 and Gemini 2.5 Pro, according to internal evaluations. Both of those models have rate limits, require paid subscriptions to access, or both.

UX upgrades and new features

Beyond performance improvements, DeepSeek-R1-0528 introduces several new features aimed at enhancing the user experience.

The update adds support for JSON output and function calling, features that should make it easier for developers to integrate the model’s capabilities into their applications and workflows.
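For developers wondering what that looks like in practice, the sketch below builds two request bodies, one asking for JSON output and one declaring a callable function. It assumes the DeepSeek API accepts OpenAI-style chat-completions payloads; the model name `deepseek-reasoner`, the `get_weather` tool and the helper function names are all assumptions made for illustration, and nothing is sent over the network.

```python
import json

MODEL = "deepseek-reasoner"  # assumed model name; check DeepSeek's API docs


def json_output_request(question):
    """Build a request body that asks the model to reply with a JSON object."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system",
             "content": "Reply in JSON with the keys 'answer' and 'reasoning'."},
            {"role": "user", "content": question},
        ],
        # OpenAI-style switch for JSON mode (assumed to be supported here).
        "response_format": {"type": "json_object"},
    }


def function_calling_request(question):
    """Build a request body that offers the model one hypothetical tool."""
    get_weather = {  # hypothetical tool, defined only for this example
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
        "tools": [get_weather],
    }


def parse_tool_arguments(tool_call):
    """In OpenAI-style responses, tool arguments arrive as a JSON string."""
    return json.loads(tool_call["function"]["arguments"])


if __name__ == "__main__":
    print(json.dumps(json_output_request("What is 17 * 23?"), indent=2))
    print(json.dumps(function_calling_request("Weather in Hangzhou?"), indent=2))
```

Either body would then be posted to the chat-completions endpoint with an API key. Keeping payload construction separate from the network call makes it easy to inspect or unit-test the request before spending any tokens.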

Front-end capabilities have also been refined, and DeepSeek says these changes will create a smoother, more efficient interaction for users.

Additionally, the model’s hallucination rate has been reduced, contributing to more reliable and consistent output.

One notable update is support for system prompts. In addition, the previous version required a special token at the start of the output to activate “thinking” mode; this update removes that need, streamlining deployment for developers.

Smaller variants for those with more limited compute budgets

Alongside this release, DeepSeek has distilled its chain-of-thought reasoning into a smaller variant, DeepSeek-R1-0528-Qwen3-8B, which should help those enterprise decision-makers and developers who don’t have the hardware necessary to run the full

This distilled version reportedly achieves state-of-the-art performance among open-source models on tasks such as AIME 2024, outperforming Qwen3-8B by 10% and matching Qwen3-235B-thinking.

According to Modal, running an 8-billion-parameter large language model (LLM) in half-precision (FP16) requires approximately 16 GB of GPU memory, equating to about 2 GB per billion parameters.

Therefore, a single high-end GPU with at least 16 GB of VRAM, such as the NVIDIA RTX 3090 or 4090, is sufficient to run an 8B LLM in FP16 precision. For more heavily quantized versions of the model, GPUs with 8–12 GB of VRAM, like the RTX 3060, can be used.
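The rule of thumb behind those numbers is simple arithmetic: parameter count times bytes per parameter. The sketch below applies it to an 8-billion-parameter model at a few precisions. The `weight_memory_gb` helper is a name made up for this example, and it counts model weights only, so real usage will be somewhat higher once the KV cache and activations are included.

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Estimate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    bytes_per_param = bits_per_param / 8
    return params_billions * bytes_per_param


for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    estimate = weight_memory_gb(8, bits)
    print(f"8B model at {label}: ~{estimate:.0f} GB for weights")
# 8B model at FP16: ~16 GB for weights
# 8B model at 8-bit: ~8 GB for weights
# 8B model at 4-bit: ~4 GB for weights
```

At FP16 the estimate matches the 2 GB per billion parameters cited above, and the 8-bit and 4-bit results show why quantized builds fit on cards in the 8–12 GB range.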

DeepSeek believes this distilled model will prove useful for academic research and industrial applications requiring smaller-scale models.

Initial AI developer and influencer reactions

The update has already drawn attention and praise from developers and enthusiasts on social media.

Haider aka “@slow_developer” shared on X that DeepSeek-R1-0528 “is just incredible at coding,” describing how it generated clean code and working tests for a word scoring system challenge, both of which ran perfectly on the first try. According to him, only o3 had previously managed to match that performance.

Meanwhile, Lisan al Gaib posted that “DeepSeek is aiming for the king: o3 and Gemini 2.5 Pro,” reflecting the consensus that the new update brings DeepSeek’s model closer to these top performers.

Another AI news and rumor influencer, Chubby, commented that “DeepSeek was cooking!” and highlighted how the new version is nearly on par with o3 and Gemini 2.5 Pro.

Chubby even speculated that the latest R1 update might indicate that DeepSeek is preparing to release its long-awaited, presumed “R2” frontier model soon as well.

Looking ahead

The release of DeepSeek-R1-0528 underscores DeepSeek’s commitment to delivering high-performing, open-source models that prioritize reasoning and usability. By combining measurable benchmark gains with practical features and a permissive open-source license, DeepSeek-R1-0528 is positioned as a valuable tool for developers, researchers, and enthusiasts looking to harness the latest in language model capabilities.
