Deep Cogito, a lesser-known AI research startup based in San Francisco and founded by ex-Googlers, has released four new open-ish large language models (LLMs) that attempt something few others do: Learning how to reason more effectively over time and getting better at it on their own.

The models, released as part of Cogito’s v2 family, range from 70 billion to 671 billion parameters and are available for AI developers and enterprises to use under a mix of limited and fully open licensing terms. They include:

Cogito v2-70B (Dense)

Cogito v2-109B (Mixture-of-experts)

Cogito v2-405B (Dense)

Cogito v2-671B (MoE)

Dense and MoE models are each suited to different needs. The dense 70B and 405B variants activate all parameters on every forward pass, making them more predictable and easier to deploy across a wide range of hardware.

Dense models are ideal for low-latency applications, fine-tuning and environments with limited GPU capacity. MoE models, such as the 109B and 671B versions, use a sparse routing mechanism to activate only a few specialized “expert” subnetworks at a time, allowing for much larger total model sizes without proportional increases in compute cost.

This makes MoE models well-suited for high-performance inference tasks, research into complex reasoning or serving frontier-level accuracy at lower runtime expense. In Cogito v2, the 671B MoE model serves as the flagship, leveraging its scale and routing efficiency to match or exceed leading open models on benchmarks while using significantly shorter reasoning chains.
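To make the sparse routing idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration, not Deep Cogito's architecture: the expert count, the `top_k` value, the toy "experts" and every function name are assumptions made for the example.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, top_k=2):
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's routing vector).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Keep only the top_k experts; the others are never evaluated for this input.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are a softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy setup: 8 "experts" that each scale the input by a different factor.
NUM_EXPERTS = 8
experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(NUM_EXPERTS)]
rng = random.Random(0)
router_weights = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(NUM_EXPERTS)]

output, active = moe_forward([1.0, 0.5, -0.5, 2.0], router_weights, experts)
print(f"experts evaluated: {len(active)} of {NUM_EXPERTS}")
```

A dense layer would run all eight experts for every input. Here only two are run, which is why total parameter count can grow faster than per-token compute.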

The models are available now on Hugging Face for download and on Unsloth for local use. Those who can’t run inference on their own hardware can access them through application programming interfaces (APIs) from Together AI, Baseten and RunPod.

There’s also a quantized “8-bit floating point (FP8)” version of the 671B model, which shrinks the numbers used to represent the model’s parameters from 16 bits to 8 bits. That helps users run massive models faster, cheaper and on more accessible hardware, often while retaining 95% to 99% of the original performance. The tradeoff is a slight loss of accuracy, especially on tasks requiring fine-grained precision, such as some math or reasoning problems.
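The effect of halving the bit width is easy to work out. The sketch below estimates the memory needed for the weights alone, assuming exactly 671 billion parameters and ignoring activations, the KV cache and any per-tensor scaling metadata that a real FP8 checkpoint carries. The function name is illustrative.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

PARAMS_671B = 671e9  # assumed exact parameter count for the flagship model

fp16_gb = weight_memory_gb(PARAMS_671B, 16)  # 1342.0 GB
fp8_gb = weight_memory_gb(PARAMS_671B, 8)    # 671.0 GB

print(f"16-bit weights: {fp16_gb:.0f} GB")
print(f"FP8 weights:    {fp8_gb:.0f} GB")
```

Under these assumptions, the FP8 version needs roughly half the memory just to hold the weights, which is what brings it within reach of smaller GPU clusters.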

All four Cogito v2 models are designed as hybrid reasoning systems: They can respond immediately to a query, or, when needed, reflect internally before answering.

Crucially, that reflection is not just runtime behavior — it’s baked into the training process itself.

These models are trained to internalize their own reasoning. That means the very paths they take to arrive at answers — the mental steps, so to speak — are distilled back into the models’ weights.

Over time, they learn which lines of thinking actually matter and which don’t.

As Deep Cogito’s blog post notes, the researchers “disincentivize the model from ‘meandering more’ to be able to arrive at the answer, and instead develop a stronger intuition for the right search trajectory for the reasoning process.”

The result, Deep Cogito claims, is faster, more efficient reasoning and a general improvement in performance, even in so-called “standard” mode.

Self-improving AI

While many in the AI community are just encountering the company, Deep Cogito has been quietly building for over a year.

It emerged from stealth in April 2025 with a series of open-source models trained on Meta’s Llama 3.2. Those early releases showed promising results.

As VentureBeat previously reported, the smallest Cogito v1 models (3B and 8B) outperformed Llama 3 counterparts across several benchmarks — sometimes by wide margins.

Deep Cogito CEO and co-founder Drishan Arora — previously a lead LLM engineer at Google — described the company’s long-term goal as building models that can reason and improve with each iteration, much like how AlphaGo refined its strategy through self-play.

Deep Cogito’s core method, iterated distillation and amplification (IDA), replaces hand-written prompts or static teachers with the model’s own evolving insights.

What is ‘machine intuition’?

With Cogito v2, the team took that loop to a much larger scale. The central idea is simple: Reasoning shouldn’t just be an inference-time tool; it should be part of the model’s core intelligence.

So, the company implemented a system where the model runs reasoning chains during training and is then trained on its own intermediate thoughts.
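A deliberately tiny toy can show the shape of that loop. It is not Deep Cogito's training pipeline: the task (integer square roots), the step-by-step search standing in for a reasoning chain, and the dictionary standing in for model weights are all assumptions chosen so the example runs in a few lines.

```python
def amplify(problem, guess):
    """Spend extra compute: walk from the model's guess to a verified answer.

    Returns the answer and the number of search steps, which stands in
    for the length of the reasoning chain.
    """
    steps = 0
    answer = guess
    while answer * answer > problem:               # guess too high: step down
        answer -= 1
        steps += 1
    while (answer + 1) * (answer + 1) <= problem:  # guess too low: step up
        answer += 1
        steps += 1
    return answer, steps

def run_ida(problems, rounds=2):
    """Alternate amplification (search) and distillation (update the prior)."""
    prior = {}     # toy stand-in for weights: the model's current intuition
    history = []   # total search steps needed in each round
    for _ in range(rounds):
        total_steps = 0
        for p in problems:
            answer, steps = amplify(p, prior.get(p, 0))
            prior[p] = answer  # distill: fold the search result back into the model
            total_steps += steps
        history.append(total_steps)
    return prior, history

prior, history = run_ida([16, 81, 144])
print(history)  # [25, 0]
```

The first round needs 25 search steps in total; after distillation, the second round needs none. A real model would have to generalize to unseen problems rather than memorize answers, but the pattern of shorter searches after each round is the behavior the company describes.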

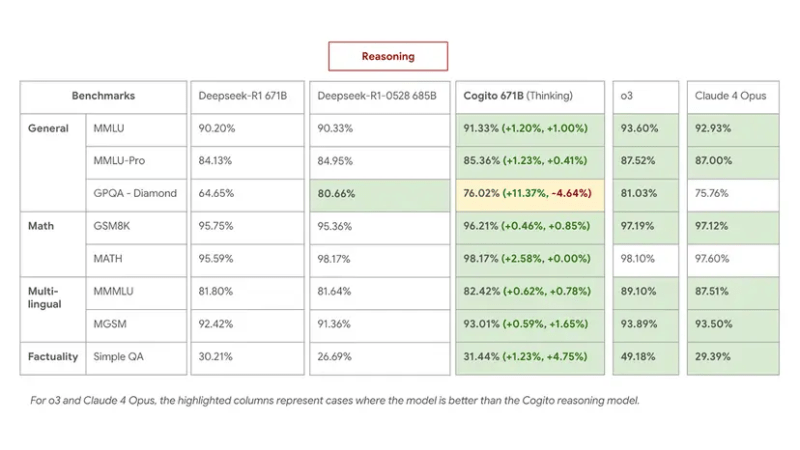

This process yields concrete improvements, according to internal benchmarks. The flagship 671B MoE model outperforms DeepSeek R1 on reasoning tasks and matches or beats the newer R1 0528 release, while using 60% shorter reasoning chains.

On MMLU, GSM8K and MGSM, Cogito 671B MoE’s performance was roughly on par with top open models like Qwen1.5-72B and DeepSeek v3, and approached the performance tier of closed models like Claude 4 Opus and o3.

Specifically:

Cogito 671B MoE (reasoning mode) matched DeepSeek R1 0528 across multilingual QA and general knowledge tasks, and outperformed it on strategy and logical deduction.

In non-reasoning mode, it exceeded DeepSeek v3 0324, suggesting that the distilled intuition carried real performance weight even without an extended reasoning path.

The model’s ability to complete reasoning in fewer steps also had downstream effects: Lower inference costs and faster response times on complex prompts.
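The link between chain length and cost is simple arithmetic when output is billed per token. The sketch below applies the 60% reduction cited above to a hypothetical 2,000-token reasoning trace at a hypothetical price of $2 per million output tokens. Both numbers are placeholders, not figures from Deep Cogito or any provider.

```python
def reasoning_cost(tokens, usd_per_million_tokens):
    """Cost in USD of generating `tokens` output tokens."""
    return tokens * usd_per_million_tokens / 1_000_000

BASELINE_TOKENS = 2_000     # hypothetical reasoning-trace length
PRICE_PER_MILLION = 2.00    # hypothetical USD price per million output tokens
REDUCTION_PERCENT = 60      # chain-length reduction cited in the article

shorter_tokens = BASELINE_TOKENS * (100 - REDUCTION_PERCENT) // 100  # 800

baseline_cost = reasoning_cost(BASELINE_TOKENS, PRICE_PER_MILLION)
shorter_cost = reasoning_cost(shorter_tokens, PRICE_PER_MILLION)
print(f"{BASELINE_TOKENS} tokens -> {shorter_tokens} tokens")
print(f"cost ratio: {shorter_cost / baseline_cost:.2f}")
```

Because generation is sequential, the same 60% cut in reasoning tokens also trims decoding time for the reasoning portion of a response by a similar share, all else being equal.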

Arora explains this as a difference between searching for a path versus already knowing roughly where the destination lies.

“Since the Cogito models develop a better intuition of the trajectory to take while searching at inference time, they have 60% shorter reasoning chains than Deepseek R1,” he wrote in a thread on X.

What kinds of tasks do Deep Cogito’s new models excel at when using their machine intuition?

Some of the most compelling examples from Cogito v2’s internal testing highlight exactly how this manifests in use.

In one math-heavy prompt, a user asks whether a train traveling at 80 mph can reach a city 240 miles away in under 2.5 hours.

While many models simulate the calculation step-by-step and occasionally make unit conversion errors, Cogito 671B reflects internally, determines that 240 ÷ 80 = 3 hours, and correctly concludes that the train cannot arrive in time. It does so with only a short internal reasoning trace — under 100 tokens — compared to the 200-plus used by DeepSeek R1 to reach the same answer.
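The check the model performs in that example reduces to one division and one comparison, shown here as a short sketch. The function name is illustrative.

```python
def travel_time_hours(distance_miles, speed_mph):
    """Time needed to cover a distance at constant speed."""
    return distance_miles / speed_mph

time_needed = travel_time_hours(240, 80)   # 3.0 hours
arrives_in_time = time_needed < 2.5        # False

print(f"time needed: {time_needed} h, arrives in under 2.5 h: {arrives_in_time}")
```

Both quantities are already in miles and hours, so no unit conversion is needed, which is the step the article says other models sometimes get wrong.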

In another example involving legal reasoning, a user asks whether a specific U.S. Supreme Court ruling would apply to a hypothetical case involving search and seizure. Cogito’s reasoning mode highlights a two-step logic: First determining whether the hypothetical matches the precedent, then explaining why it does or doesn’t. The model reaches a nuanced answer with clear justification, a kind of interpretive reasoning that many LLMs still struggle with.

Other tasks show improvements in handling ambiguity. On a classic multi-hop question (“If Alice is Bob’s mother, and Bob is Charlie’s father, what is Alice to Charlie?”), models often lose track of the chain of relationships. Cogito v2’s models correctly identify Alice as Charlie’s grandmother, even in slightly reworded variants where other open models falter.
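The two hops in that question can be written out explicitly. The sketch below stores the stated facts as a parent-to-children mapping and composes the relation twice. The data structure and function name are assumptions for illustration.

```python
# Facts from the question: Alice is Bob's parent, Bob is Charlie's parent.
PARENT_OF = {"Alice": ["Bob"], "Bob": ["Charlie"]}

def grandchildren(person, parent_of):
    """Compose the parent relation twice: children of this person's children."""
    return [
        grandchild
        for child in parent_of.get(person, [])
        for grandchild in parent_of.get(child, [])
    ]

print(grandchildren("Alice", PARENT_OF))  # ['Charlie']
```

Alice is therefore Charlie's grandparent, and since the question calls her Bob's mother, his grandmother.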

Efficiency at scale

Despite the massive size of the new models, Deep Cogito claims to have trained all eight of its Cogito models, including the smaller v1 checkpoints, for under $3.5 million in total, compared to the $100 million-plus reportedly spent on some of OpenAI’s leading models.

That total includes data generation, synthetic reinforcement, infrastructure and more than 1,000 training experiments, a fraction of the nine-figure budgets typical of other frontier models.

Arora attributes this frugality to the company’s core thesis: Smarter models need better priors, not more tokens.

By teaching the model to skip redundant or misleading reasoning paths, Cogito v2 delivers stronger performance without ballooning inference time.

That’s a meaningful tradeoff for users running models on API infrastructure or edge devices where latency and cost matter.

What’s next for Deep Cogito and v2?

The release of Cogito v2 is not a final product, but an iterative step. Arora describes the company’s roadmap as “hill climbing” — running models, learning from their reasoning traces, distilling them and repeating the loop. Over time, each model becomes a stepping stone for the next.

Every model Deep Cogito has released is open source, and the company says that will remain true for future iterations.

Already, its work has attracted attention and support from backers like Benchmark’s Eric Vishria and South Park Commons’ Aditya Agarwal.

Infrastructure partners include Hugging Face, Together AI, RunPod, Baseten, Meta’s Llama team and Unsloth.

For developers, researchers, and enterprise teams, the models are available now. Developers can run them locally, compare modes or fine-tune for specific use cases.

And, for the broader open-source AI community, Cogito v2 offers more than just a new benchmark winner — it proposes a different way to build intelligence. Not by thinking harder, but by learning how to think better.