This post is co-written with Payal Singh from Cohere.

The Cohere Embed 4 multimodal embeddings model is now generally available on Amazon SageMaker JumpStart. The Embed 4 model is built for multimodal business documents, has leading multilingual capabilities, and offers notable improvement over Embed 3 across key benchmarks.

In this post, we discuss the benefits and capabilities of this new model. We also walk you through how to deploy and use the Embed 4 model using SageMaker JumpStart.

Cohere Embed 4 overview

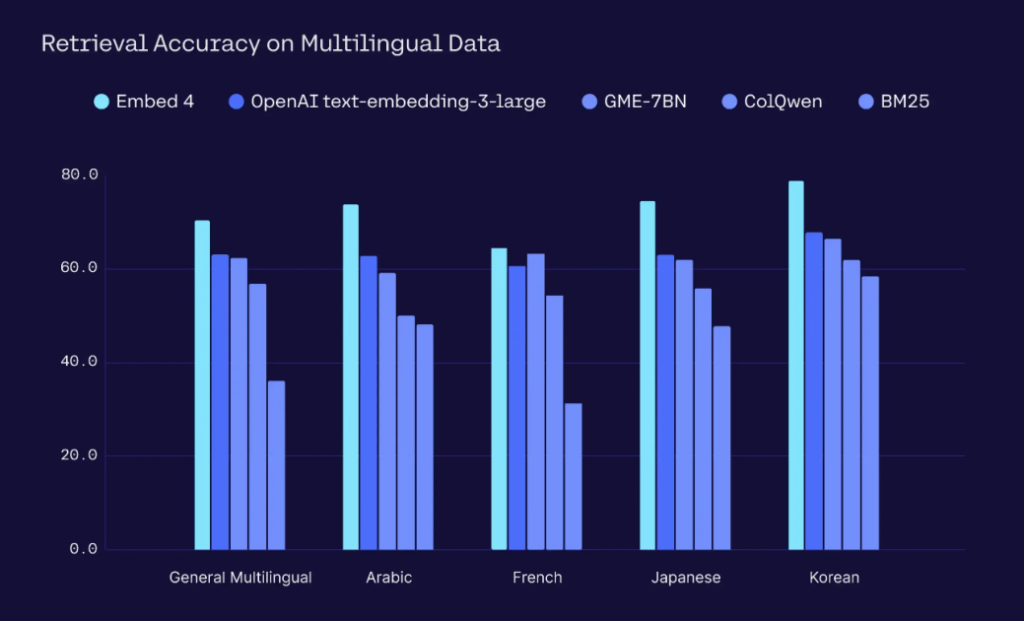

Embed 4 is the most recent addition to the Cohere Embed family of enterprise-focused large language models (LLMs). It delivers state-of-the-art multimodality, which matters because businesses continue to store most of their important data in unstructured formats. These include intricate PDF reports, presentation slides, text-based documents, and design files that might contain images, tables, graphs, code, and diagrams. Without a model that natively understands complex multimodal documents, these files become repositories of unsearchable information. With Embed 4, enterprises and their employees can search across text, image, and multimodal documents. Embed 4 also offers leading multilingual capabilities, understanding over 100 languages, including Arabic, French, Japanese, and Korean. This is useful to global enterprises that handle documents in multiple languages: employees can find critical data even if the information isn’t stored in a language they speak. Overall, Embed 4 helps global enterprises break down language barriers and manage information in the languages most familiar to their customers.

In the following diagram (source), each language category represents a blend of public and proprietary benchmarks (see more details). Tasks range from monolingual to cross-lingual retrieval (English as the query language and a monolingual corpus in the respective non-English language). Performance on each dataset is measured by NDCG@10.

Embeddings models are already being used to handle documents with both text and images. However, optimal performance usually requires additional complexity because a multimodal generative model must preprocess documents into a format that is suitable for the embeddings model. Embed 4 can transform different modalities such as images, texts, and interleaved images and texts into a single vector representation. Processing a single payload of images and text decreases the operational burden associated with handling documents.
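To make the "single payload" idea concrete, the following minimal sketch builds one request input whose content interleaves text and an image. The field names (`inputs`, `content`, `image_url`, `input_type`, `embedding_types`) are an assumption modeled on the style of Cohere's v2 Embed API; the exact schema accepted by the SageMaker endpoint may differ, so check the model's sample notebook before relying on it. The helper names are hypothetical.

```python
import base64
import json


def image_to_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URI."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_interleaved_payload(text: str, image_bytes: bytes,
                              input_type: str = "search_document") -> dict:
    """Build a single input whose content interleaves text and an image.

    ASSUMPTION: field names follow the style of Cohere's v2 Embed API;
    the SageMaker endpoint schema may differ.
    """
    return {
        "inputs": [
            {
                "content": [
                    {"type": "text", "text": text},
                    {"type": "image_url",
                     "image_url": {"url": image_to_data_uri(image_bytes)}},
                ]
            }
        ],
        "input_type": input_type,
        "embedding_types": ["float"],
    }


# Placeholder bytes stand in for a real PNG file read from disk.
payload = build_interleaved_payload("Quarterly revenue by region", b"\x89PNG placeholder")
# One request body carrying text and image, expected to yield one embedding.
body = json.dumps(payload)
```

The design point is that text and image travel together in one `content` list, rather than being converted to text by a separate generative model first.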

Embed 4 can also generate embeddings for documents up to 128,000 tokens, approximately 200 pages in length. This extended capacity alleviates the need for custom logic to split lengthy documents, making it straightforward to process financial reports, product manuals, and detailed legal contracts. In contrast, models with shorter context lengths force developers to create complex workflows to split documents while preserving their logical structure. With Embed 4, as long as a document fits within the 128,000-token limit, it can be converted into a high-quality, unified vector representation.
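A quick pre-check can tell you whether a document is likely to fit in the 128,000-token window. This sketch assumes roughly 4 characters per token, a common rule of thumb for English text and not Cohere's tokenizer, so treat the estimate as approximate. The helper names are hypothetical, and the chunking fallback is only for documents that exceed the limit.

```python
import math

MAX_TOKENS = 128_000  # Embed 4 context length stated in this post


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough estimate; ~4 characters per token is a heuristic, not Cohere's tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, max_tokens: int = MAX_TOKENS,
                    chars_per_token: float = 4.0) -> bool:
    """True if the estimated token count is within the limit."""
    return estimate_tokens(text, chars_per_token) <= max_tokens


def split_if_needed(text: str, max_tokens: int = MAX_TOKENS,
                    chars_per_token: float = 4.0) -> list:
    """Return the whole document when it fits; otherwise fall back to fixed-size chunks."""
    if fits_in_context(text, max_tokens, chars_per_token):
        return [text]
    max_chars = int(max_tokens * chars_per_token)
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


# The post's figures imply roughly 128,000 / 200 = 640 tokens per page.
TOKENS_PER_PAGE = MAX_TOKENS / 200
```

For most reports and contracts under about 200 pages, `split_if_needed` returns the document unchanged, which is the simplification the long context window provides.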

Embed 4 also has enhancements for security-minded industries such as finance, healthcare, and manufacturing. Businesses in these regulated industries need models that have both strong general business knowledge and domain-specific understanding. Business data also tends to be imperfect: documents often contain spelling mistakes and formatting issues such as incorrect portrait or landscape orientation. Embed 4 was trained to be robust against noisy real-world data, including scanned documents and handwriting. This further reduces the need for complex and expensive data preprocessing pipelines.

Use cases

In this section, we highlight several key use cases that demonstrate how Embed 4 helps enterprises in a range of regulated industries streamline information discovery, enhance generative AI workflows, and optimize storage efficiency.

Simplifying multimodal search

You can take advantage of Embed 4 multimodal capabilities in use cases that require semantic search. For example, in the retail industry, it might be helpful to search with both text and an image. A search query can even include a modifier (for example, “Same style pants but with no stripes”). The same logic applies to an analyst’s workflow, where users might need to find the right charts and diagrams to explain trends. This is traditionally a time-consuming process that requires manually sifting through documents and contextualizing information. Because Embed 4 has enhancements for finance, healthcare, and manufacturing, users in these industries can take advantage of built-in domain-specific understanding as well as strong general knowledge to accelerate time to value. With Embed 4, it’s straightforward to conduct research and turn data into actionable insights.
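Once documents and queries are embedded, semantic search reduces to ranking stored vectors by similarity to the query vector. This sketch uses cosine similarity, a common choice for embedding search, over toy 3-dimensional vectors; the file names and vectors are made up for illustration, and the query vector stands in for a hypothetical embedding of a photo plus the text modifier.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank(query_vec: List[float], corpus: Dict[str, List[float]],
         top_k: int = 3) -> List[Tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional vectors; Embed 4 vectors have 256 to 1,536 dimensions.
corpus = {
    "striped-pants.jpg": [0.9, 0.1, 0.0],
    "plain-pants.jpg": [0.8, 0.0, 0.6],
    "red-shirt.jpg": [0.0, 1.0, 0.0],
}
# Hypothetical embedding of a photo of pants plus the text "but with no stripes".
query = [0.7, 0.0, 0.7]
results = rank(query, corpus)
```

At production scale, you would typically store the vectors in a vector database or approximate nearest neighbor index rather than scoring every document in Python.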

Powering Retrieval Augmented Generation workflows

Another use case is Retrieval Augmented Generation (RAG) applications that require access to internal information. With the 128,000-token context length of Embed 4, businesses can use existing long-form documents that include images without implementing complex preprocessing pipelines. One example is a generative AI application built to assist analysts with M&A due diligence, where access to a broader repository of information increases the likelihood of making informed decisions.
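In a RAG application, the embedding search returns the most relevant documents, and those documents are then placed into the prompt of a generative model. This minimal sketch shows only that augmentation step; the function name, prompt wording, and sample chunks are hypothetical, and the retrieval itself is assumed to have been done with Embed 4 vectors.

```python
from typing import List, Tuple


def build_grounded_prompt(question: str, retrieved: List[Tuple[str, str]]) -> str:
    """Assemble a RAG prompt from (source_id, text) chunks already ranked by
    embedding similarity. Only the augmentation step is shown here."""
    numbered = [
        f"[{i}] ({source_id}) {text}"
        for i, (source_id, text) in enumerate(retrieved, start=1)
    ]
    sources = "\n\n".join(numbered)
    return (
        "Answer the question using only the numbered sources below, "
        "and cite the sources you use by number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical chunks returned by an Embed 4 similarity search.
retrieved = [
    ("target-annual-report.pdf", "Revenue grew in all three segments."),
    ("target-board-deck.pdf", "Slide 12 lists pending litigation."),
]
prompt = build_grounded_prompt("Are there legal risks to flag?", retrieved)
```

Numbering the sources lets the generative model cite where each claim came from, which makes answers easier for an analyst to verify.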

Optimizing agentic AI workflows with compressed embeddings

Businesses can use intelligent AI agents to reduce unnecessary costs, automate repetitive tasks, and reduce human errors. AI agents need relevant, contextual information to perform tasks accurately, and this information is typically supplied through RAG. In a RAG setup, the generative model that powers the conversational experience relies on a search engine connected to company data sources to retrieve relevant information before it produces the final response. For example, an agent might need to retrieve relevant conversation logs to analyze customer sentiment about a specific product and determine the most effective next step in a customer interaction.

Embed 4 is designed to serve as the search engine for enterprise AI assistants and agents. Retrieving more relevant context improves the accuracy of their responses and helps mitigate hallucinations.

At scale, storing embeddings can lead to high storage costs. Embed 4 is designed to output compressed embeddings, and users can choose their own dimension size (for example, 256, 512, 1,024, or 1,536). This can help organizations save up to 83% on storage costs while maintaining search accuracy.
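The raw storage arithmetic is simple: bytes per vector equal the number of dimensions times the bytes per value. In the following sketch, the baseline of 1,536 fp32 dimensions is an assumption chosen for comparison. Note that the 83% figure is consistent with choosing 256 dimensions instead of 1,536 at the same precision, because 256/1,536 = 1/6; that is our reading of the arithmetic, not a statement of how Cohere derived the number.

```python
# Bytes needed to store one value at each precision named in this post.
BYTES_PER_VALUE = {"fp32": 4.0, "int8": 1.0, "binary": 1 / 8}


def bytes_per_vector(dims: int, precision: str) -> float:
    """Raw storage for one embedding, ignoring index and metadata overhead."""
    return dims * BYTES_PER_VALUE[precision]


def storage_savings(dims: int, precision: str,
                    baseline_dims: int = 1536, baseline_precision: str = "fp32") -> float:
    """Fraction of raw storage saved relative to a baseline configuration."""
    return 1 - bytes_per_vector(dims, precision) / bytes_per_vector(baseline_dims, baseline_precision)


for dims in (256, 512, 1024, 1536):
    saved = storage_savings(dims, "fp32")
    print(f"{dims:>5} dims, fp32: {bytes_per_vector(dims, 'fp32'):>6.0f} bytes, {saved:.1%} saved")
```

Multiplying `bytes_per_vector` by your document count gives a first-order estimate of index size; real vector stores add overhead for index structures and metadata.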

The following diagram illustrates retrieval quality vs. storage cost across different models (source). Compression can be applied to the numeric precision of the vectors (binary, int8, or fp32) and to the number of dimensions. Performance on each dataset is measured by NDCG@10.
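To illustrate what precision compression means, the following sketch shows two generic techniques: symmetric scalar quantization to int8 and sign-based binary quantization, with Hamming distance for comparing binary vectors. This is not necessarily how Cohere produces its compressed embeddings; in practice, you would request the compressed format from the model if your endpoint supports it. The `scale` parameter and all function names are assumptions for illustration.

```python
from typing import List


def quantize_int8(vec: List[float], scale: float) -> List[int]:
    """Symmetric scalar quantization: map [-scale, scale] onto [-127, 127], clipping outliers."""
    return [max(-127, min(127, round(x / scale * 127))) for x in vec]


def quantize_binary(vec: List[float]) -> int:
    """Keep only the sign of each dimension (1 if positive), packed into an int's bits."""
    bits = 0
    for i, x in enumerate(vec):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits; smaller means more similar binary embeddings."""
    return bin(a ^ b).count("1")


doc = quantize_binary([0.3, -0.2, 0.9, -0.1])
query = quantize_binary([0.3, 0.2, -0.9, -0.1])
distance = hamming_distance(doc, query)
```

Relative to fp32, int8 uses a quarter of the storage and binary uses one thirty-second, at the cost of some precision, which is the trade-off the diagram plots against retrieval quality.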

Using domain-specific understanding for regulated industries

With Embed 4 enhancements, you can surface relevant insights from complex financial documents like investor presentations, annual reports, and M&A due diligence files. Embed 4 can also extract key information from healthcare documents such as medical records, procedural charts, and clinical trial reports. For manufacturing use cases, Embed 4 can handle product specifications, repair guides, and supply chain plans. These capabilities unlock a broader range of use cases because enterprises can use models out of the box without costly fine-tuning efforts.

Solution overview

SageMaker JumpStart onboards and maintains foundation models (FMs) for you to access and integrate into machine learning (ML) lifecycles. The FMs available in SageMaker JumpStart include publicly available FMs as well as proprietary FMs from third-party providers.

Amazon SageMaker AI is a fully managed ML service. It helps data scientists and developers quickly and confidently build, train, and deploy ML models into a production-ready hosted environment. Amazon SageMaker Studio is a single web-based experience for running ML workflows. It provides access to your SageMaker AI resources in one interface.

In the following sections, we show how to get started with Cohere Embed 4.

Prerequisites

Make sure you meet the following prerequisites:

Make sure your SageMaker AWS Identity and Access Management (IAM) role has the AmazonSageMakerFullAccess permission policy attached.

To deploy Cohere Embed 4 successfully, confirm that your IAM role has the following permissions and that you have the authority to make AWS Marketplace subscriptions in the AWS account you use:

aws-marketplace:ViewSubscriptions

aws-marketplace:Unsubscribe

aws-marketplace:Subscribe
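If you manage permissions as code, the three Marketplace actions listed above can be expressed as an IAM identity-based policy document. This is a minimal sketch: the `Sid` is an arbitrary name, and the `"*"` resource is an assumption you might tighten according to your organization's policies.

```python
import json

# The three AWS Marketplace actions listed in this post.
MARKETPLACE_ACTIONS = [
    "aws-marketplace:ViewSubscriptions",
    "aws-marketplace:Unsubscribe",
    "aws-marketplace:Subscribe",
]


def marketplace_subscription_policy() -> dict:
    """IAM identity-based policy document granting the subscription actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowMarketplaceSubscriptions",
                "Effect": "Allow",
                "Action": MARKETPLACE_ACTIONS,
                # Assumption: "*" resource; tighten with conditions if your
                # organization requires it.
                "Resource": "*",
            }
        ],
    }


print(json.dumps(marketplace_subscription_policy(), indent=2))
```

You could save the printed JSON to a file and attach it to your role, for example with `aws iam put-role-policy --role-name <role> --policy-name <name> --policy-document file://policy.json`.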

Alternatively, if your AWS account already has a subscription to the model, skip to the next section in this post.

Subscribe to the model package

To subscribe to the model package, complete the following steps:

In the AWS Marketplace listing, choose Continue to Subscribe.

On the Subscribe to this software page, review the EULA, pricing, and support terms, and choose Accept Offer if you and your organization agree with them.

Choose Continue to configuration and then choose an AWS Region.

You will see a product Amazon Resource Name (ARN) displayed. This is the model package ARN that you must specify while creating a deployable model using Boto3.
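With the model package ARN in hand, deploying through Boto3 involves three SageMaker API calls: `create_model`, `create_endpoint_config`, and `create_endpoint`. The following sketch builds their arguments and issues them with a client you pass in. The resource name, instance type, and role ARN are placeholders you must supply (this post doesn't state which instance types the listing supports), and enabling network isolation is an assumption based on how the SageMaker Python SDK treats AWS Marketplace model packages.

```python
from typing import Any, Dict


def build_deployment_requests(model_package_arn: str, role_arn: str, name: str,
                              instance_type: str) -> Dict[str, Dict[str, Any]]:
    """Keyword arguments for the three SageMaker API calls that deploy a model package."""
    return {
        "create_model": {
            "ModelName": name,
            "ExecutionRoleArn": role_arn,
            "PrimaryContainer": {"ModelPackageName": model_package_arn},
            # Assumption: Marketplace model packages run with network isolation.
            "EnableNetworkIsolation": True,
        },
        "create_endpoint_config": {
            "EndpointConfigName": name,
            "ProductionVariants": [
                {
                    "VariantName": "AllTraffic",
                    "ModelName": name,
                    "InitialInstanceCount": 1,
                    "InstanceType": instance_type,
                }
            ],
        },
        "create_endpoint": {"EndpointName": name, "EndpointConfigName": name},
    }


def deploy(sagemaker_client, requests: Dict[str, Dict[str, Any]]) -> None:
    """Issue the calls in dependency order using a boto3 SageMaker client."""
    sagemaker_client.create_model(**requests["create_model"])
    sagemaker_client.create_endpoint_config(**requests["create_endpoint_config"])
    sagemaker_client.create_endpoint(**requests["create_endpoint"])
```

With Boto3 installed and credentials configured, you could call `deploy(boto3.client("sagemaker"), build_deployment_requests(...))` and then wait for the endpoint with `client.get_waiter("endpoint_in_service").wait(EndpointName=name)`. Reusing one name for the model, endpoint configuration, and endpoint keeps cleanup simple.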

Deploy Cohere Embed 4 for inference through SageMaker JumpStart

If you want to start using Embed 4 immediately, you can choose from three launch methods: the AWS CloudFormation template, the SageMaker console, or the AWS Command Line Interface (AWS CLI). You will incur software costs based on hourly pricing for as long as your endpoint is running. You will also incur infrastructure costs, independently of and in addition to the software costs.

Choose the appropriate model package ARN for your Region. For example, the ARN for Cohere Embed 4 is:

Alternatively, in SageMaker Studio, open JumpStart and search for Cohere Embed 4. If you don’t yet have a domain, refer to Guide to getting set up with Amazon SageMaker AI to create one. Select the Cohere Embed 4 model, and choose Deploy to start the deployment.

When deployment is complete, an endpoint is created. You can test the endpoint by passing a sample inference request payload or by using the testing option in the SDK.
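Outside the console, a request to the endpoint goes through the SageMaker Runtime `invoke_endpoint` API. In this sketch, the request body fields (`texts`, `input_type`) are an assumption modeled on Cohere's Embed API conventions, where `search_document` is used for corpus text and `search_query` for queries; confirm the exact schema in the model's sample notebook. The response is parsed as JSON without assuming its field names.

```python
import json
from typing import Any, Dict, List


def build_embed_request(texts: List[str], input_type: str = "search_document") -> Dict[str, Any]:
    """ASSUMED request body; confirm field names in the model's sample notebook."""
    # Cohere convention: "search_document" for corpus text, "search_query" for queries.
    return {"texts": texts, "input_type": input_type}


def embed_texts(runtime_client, endpoint_name: str, texts: List[str],
                input_type: str = "search_document") -> Dict[str, Any]:
    """Call a SageMaker endpoint through a boto3 "sagemaker-runtime" client."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(build_embed_request(texts, input_type)),
    )
    # The response body is a stream; parse it without assuming its field names.
    return json.loads(response["Body"].read())
```

A call might look like `embed_texts(boto3.client("sagemaker-runtime"), "embed-4-demo", ["What was Q3 revenue?"], "search_query")`, where the endpoint name is a placeholder for the one you created.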

To use the Python SDK example code, choose Test inference and Open in JupyterLab. If you don’t have a JupyterLab space yet, refer to Create a space.

Clean up

After you finish running the notebook and experimenting with the Embed 4 model, clean up the resources you provisioned. Failing to do so might result in unnecessary charges accruing on your account. To clean up using the SageMaker AI console, complete the following steps:

On the SageMaker AI console, under Inference in the navigation pane, choose Endpoints.

Choose the endpoint you created.

On the endpoint details page, choose Delete.

Choose Delete again to confirm.
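If you deployed with Boto3, you can clean up programmatically. This sketch deletes the endpoint, the endpoint configuration, and the model in reverse order of creation, assuming you created all three yourself; the names you pass must match the ones used at deployment, and the helper names are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def cleanup_calls(endpoint_name: str, endpoint_config_name: str,
                  model_name: str) -> List[Tuple[str, Dict[str, Any]]]:
    """Delete calls in reverse order of creation: endpoint, config, then model."""
    return [
        ("delete_endpoint", {"EndpointName": endpoint_name}),
        ("delete_endpoint_config", {"EndpointConfigName": endpoint_config_name}),
        ("delete_model", {"ModelName": model_name}),
    ]


def clean_up(sagemaker_client, endpoint_name: str, endpoint_config_name: str,
             model_name: str) -> None:
    """Run each delete call with a boto3 SageMaker client."""
    for method_name, kwargs in cleanup_calls(endpoint_name, endpoint_config_name, model_name):
        getattr(sagemaker_client, method_name)(**kwargs)
```

Deleting the endpoint is what stops the hourly charges; removing the configuration and model afterward keeps your account free of unused resources.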

Conclusion

In this post, we explored how Cohere Embed 4, now available on SageMaker JumpStart, delivers state-of-the-art multimodal embedding capabilities. These capabilities make it particularly valuable for enterprises working with unstructured data across finance, healthcare, manufacturing, and other regulated industries.

Interested in diving deeper? Check out the Cohere on AWS GitHub repo.

About the authors

James Yi is a Senior AI/ML Partner Solutions Architect at AWS. He spearheads AWS’s strategic partnerships in Emerging Technologies, guiding engineering teams to design and develop cutting-edge joint solutions in generative AI. He enables field and technical teams to seamlessly deploy, operate, secure, and integrate partner solutions on AWS. James collaborates closely with business leaders to define and execute joint Go-To-Market strategies, driving cloud-based business growth. Outside of work, he enjoys playing soccer, traveling, and spending time with his family.

Karan Singh is a Generative AI Specialist at AWS, where he works with top-tier third-party foundation model and agentic frameworks providers to develop and execute joint go-to-market strategies, enabling customers to effectively deploy and scale solutions to solve enterprise generative AI challenges.

Mehran Najafi, PhD, serves as AWS Principal Solutions Architect and leads the Generative AI Solution Architects team for AWS Canada. His expertise lies in ensuring the scalability, optimization, and production deployment of multi-tenant generative AI solutions for enterprise customers.

John Liu has 15 years of experience as a product executive and 9 years of experience as a portfolio manager. At AWS, John is a Principal Product Manager for Amazon Bedrock. Previously, he was the Head of Product for AWS Web3 / Blockchain. Prior to AWS, John held various product leadership roles at public blockchain protocols and fintech companies, and also spent 9 years as a portfolio manager at various hedge funds.

Hugo Tse is a Solutions Architect at AWS, with a focus on Generative AI and Storage solutions. He is dedicated to empowering customers to overcome challenges and unlock new business opportunities using technology. He holds a Bachelor of Arts in Economics from the University of Chicago and a Master of Science in Information Technology from Arizona State University.

Payal Singh is a Solutions Architect at Cohere with over 15 years of cross-domain expertise in DevOps, Cloud, Security, SDN, Data Center Architecture, and Virtualization. She drives partnerships at Cohere and helps customers with complex GenAI solution integrations.
