Hi

here.

The promise of a truly universal AI assistant, one that bridges thinking and doing, research and execution, is becoming a practical reality. I got early access to Anthropic’s new model, Claude Opus 4, and had a chance to put it through its paces while preparing for annual leave. My key question was: can Opus 4 help me get more done in one day than I could alone, or even with other LLMs?

The TL;DR is: the “open agentic web” Microsoft CTO Kevin Scott describes—a world where AI agents can act on your behalf through open, reliable, interoperable protocols—is starting to materialize. Today, I’ll walk you through five tasks I put to Opus 4—and how it handled them.

Anthropic describes its new release as “our most capable hybrid model,” emphasizing improvements in coding, writing, and reasoning. The real headline, though, is its extended thinking with tools—everyday apps like email, Google Drive, and spreadsheets that the AI can open and use on your behalf. It can read your email, search for an answer, and fire back a reply—all in one sweep. Better still, you’re not limited to Anthropic-approved tools; in principle, you can hook it up to anything online. I did exactly that with Zapier, a no-code automation hub, letting Opus 4 tap into thousands of apps such as Google Sheets, Slack, and Notion.

The new model promises sustained, independent work over longer periods of time. It’s built for what Anthropic calls “complex AI agent work and deep research,” staying focused through thousands of steps. This isn’t new, since o3 can do the same, but what Opus 4 currently promises is integration with a wider variety of tools alongside its superior coding ability.

To test its abilities, I threw my pre-holiday task list at Opus 4:

Checking if Azeem had assigned me any tasks.

Creating datasets from poorly formatted notes.

Brainstorming newsletter themes.

Auditing internal tools for security vulnerabilities.

Building a platformer game for my flight.

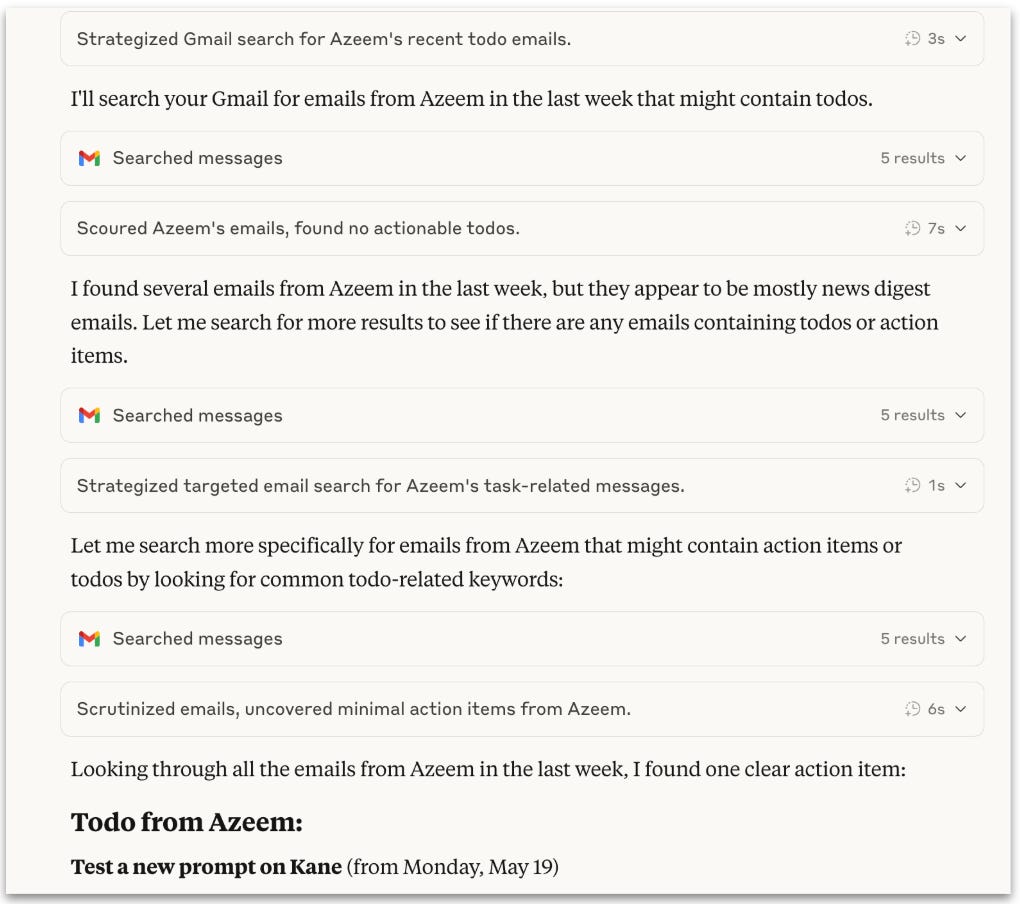

The Gmail integration impressed me immediately.

I simply asked it to “Check my Gmail for to-dos from Azeem in the last week.”

Opus 4 successfully scanned a week’s worth of emails, identifying every task Azeem had relayed with remarkable accuracy. It understood context, priority and the implicit requests buried in conversational emails. For example, Opus 4 identified an important testing request from Azeem where I needed to evaluate a new content transformation tool. Opus 4 didn’t just summarize the task as “test new tool.” It picked up that I needed to apply a specific template, wait for new content, compare results with an earlier version, and understand the goal: to improve content quality. It gave me the sense that I could ask it to scan anything in my inbox—and it would find it.

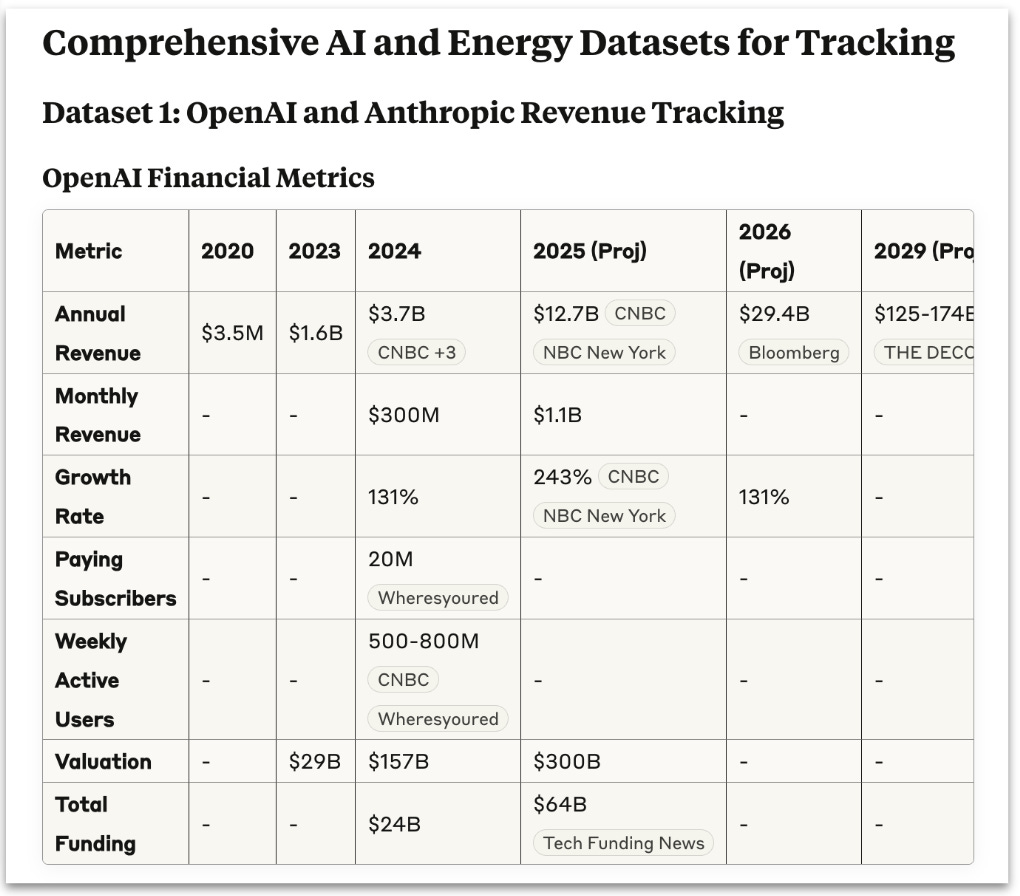

My second task started with a casual list of links to data points that I’d been meaning to turn into proper datasets but hadn’t had time for. It seemed a perfect way to test Opus 4’s tool use. I handed the model a messy list of URLs pointing to articles on AI companies, energy use and financial stats, and asked it to:

Review each link and identify the type of data it refers to.

Fill in any missing information through additional research.

Create a separate spreadsheet for each dataset.

Compile all spreadsheets into a final summary.

Draft an email with the compiled files to send to myself.

The results were impressive, but they also revealed Opus 4’s limitations. The model parsed this chaos into six structured datasets, ranging from AI revenue tracking to the critical mineral requirements of renewables. It checked over 750 sources in the process, and each dataset included relevant metrics, time series data, and sources. The datasets weren’t complete, but they were definitely a useful start.

But the real test came next: “Can you create a Google Sheet for each dataset and email the sheets to my colleague?”

This is where the promise of seamless tool integration met reality. Opus 4 created the spreadsheets and drafted the email—but only the first sheet was fully populated.

It’s unclear whether the shortfall lay in Opus 4 itself or in its connection to other services. It was using Zapier—a universal adapter between tools like Google Sheets and Gmail. Opus 4 made 17 separate tool calls to populate the spreadsheet, which hints at the brittle choreography involved. Perhaps the integration wasn’t robust enough. Perhaps there were better ways to structure the task. Or perhaps this is simply what early-stage prototypes look like.
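One possible way to structure a task like this is to send rows in larger batches, so fewer tool calls are needed, and then check every sheet against the row count you expected. The sketch below is only an illustration under stated assumptions: `write_in_batches`, `find_incomplete`, and the `append_rows` callback are hypothetical names, an in-memory list stands in for a real spreadsheet, and nothing here reflects how Opus 4 or Zapier actually make their calls.

```python
from typing import Callable, Sequence

Row = Sequence[str]


def write_in_batches(
    rows: Sequence[Row],
    batch_size: int,
    append_rows: Callable[[Sequence[Row]], None],
) -> int:
    """Send rows in chunks of batch_size and return the number of calls made."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    calls = 0
    for start in range(0, len(rows), batch_size):
        # Each call stands in for one tool call to the spreadsheet service.
        append_rows(rows[start:start + batch_size])
        calls += 1
    return calls


def find_incomplete(expected: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the names of sheets holding fewer rows than expected."""
    return [name for name, count in expected.items() if actual.get(name, 0) < count]


# Usage: a plain list plays the role of the spreadsheet (hypothetical data).
sheet: list[Row] = []
rows = [[f"metric {i}", str(i)] for i in range(10)]
calls = write_in_batches(rows, batch_size=4, append_rows=sheet.extend)
print(calls, len(sheet))
print(find_incomplete({"revenue": 10, "energy": 8}, {"revenue": len(sheet)}))
```

A check like `find_incomplete` would have flagged the half-empty sheets before the email was drafted, whichever side of the integration was at fault.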

Compounding the problem, I had to restart the conversation midway through—the research step had bloated the chat’s memory, cutting off continuity. Tools like Cursor already offer workarounds for this by summarizing long threads and preserving flow, so the issue is likely fixable. But it was a reminder: even the most capable assistant is only as smooth as its connective tissue.
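The idea of summarizing long threads can be sketched in a few lines: keep the most recent messages that fit a budget and fold everything older into a single summary. This is a minimal illustration, not how Cursor or Claude implement it. The function names are hypothetical, word count is a rough stand-in for tokens, and `summarize` is a placeholder for whatever model call would produce the summary (the summary itself is not counted against the budget here).

```python
from typing import Callable


def word_count(text: str) -> int:
    """Rough size measure; a real system would count tokens instead."""
    return len(text.split())


def compact_history(
    messages: list[str],
    budget: int,
    summarize: Callable[[list[str]], str],
) -> list[str]:
    """Keep recent messages within `budget` words; replace older ones with a summary."""
    if sum(word_count(m) for m in messages) <= budget:
        return list(messages)

    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are preserved first.
    for msg in reversed(messages):
        cost = word_count(msg)
        if used + cost > budget and kept:
            break
        kept.append(msg)
        used += cost
    kept.reverse()

    older = messages[: len(messages) - len(kept)]
    return ([summarize(older)] if older else []) + kept


# Usage with a stand-in summarizer (a real one would call a model).
history = ["a b c", "d e", "f g h i"]
compacted = compact_history(
    history,
    budget=6,
    summarize=lambda older: f"[summary of {len(older)} earlier messages]",
)
print(compacted)
```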

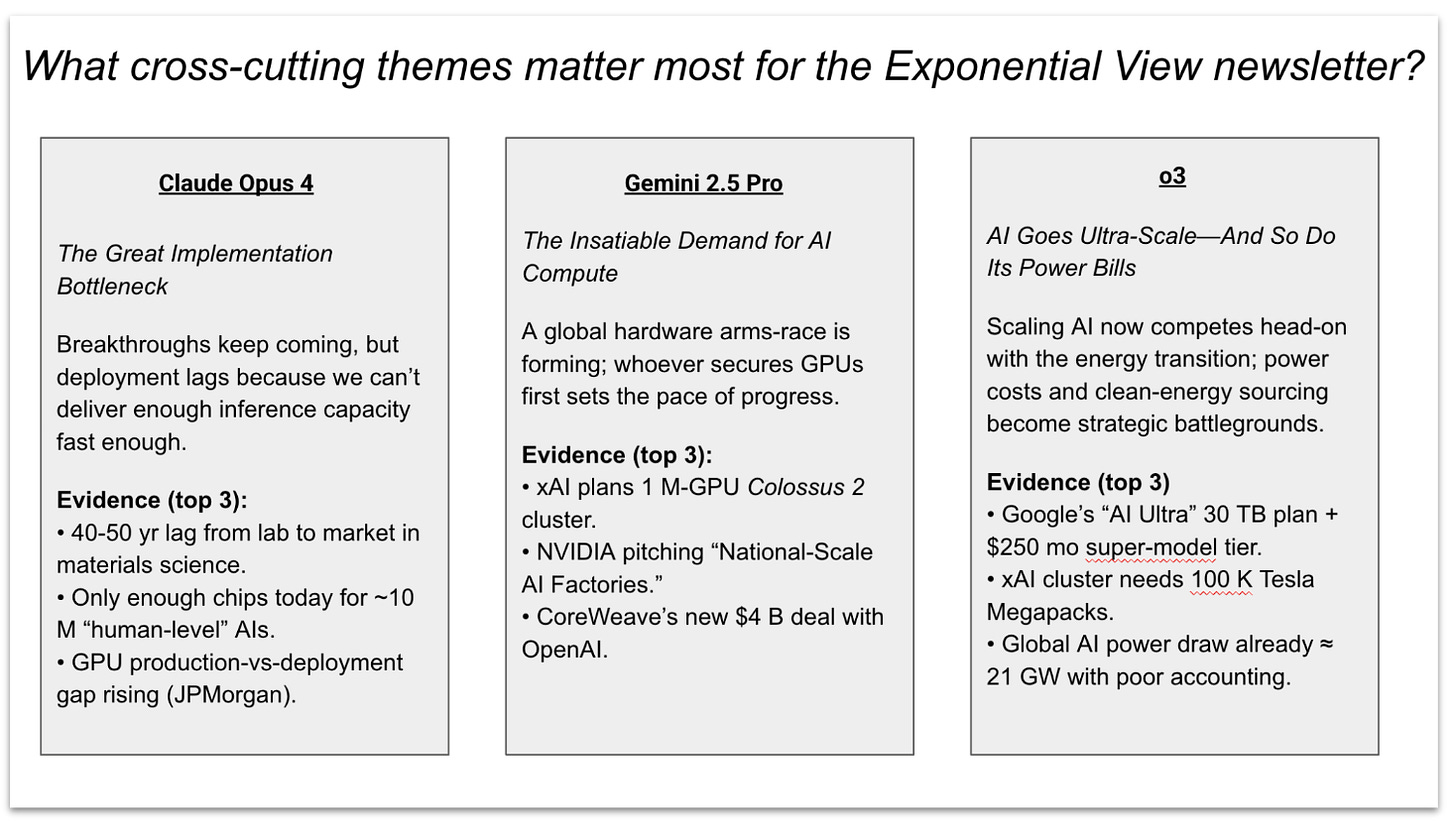

To test how different AI models handled synthesis at scale, I gave each the same chaotic input: a week’s worth of Exponential View research. The corpus spanned approximately 100,000 words—a sprawling mess of RSS-fed essays, social media fragments, internal Slack commentary, and working notes. Only Opus 4 could take in the whole thing; Gemini and o3 needed a reduced set, limited by context constraints.

The outputs didn’t just summarize—they revealed distinct thinking styles.

o3 was the clearest communicator. It surfaced seven crisply defined, executive-ready themes—from “AI goes ultra-scale” to “The subscriptionization of frontier AI” and “Agentic browsers & the birth of the Agentic Web.” Each entry combined a sharp headline, strong explanatory context, and a closing “why this matters” tailored for newsletter framing. This was newsletter-ready synthesis, built for action and attention.

Gemini took a more structured but conventional path. It grouped content by theme and evidence, producing solid, even-handed summaries that covered the major trends. But it played it safe—no strong editorial perspective, no surprising framings. It was competent coverage, but lacked edge.

Opus 4 delivered the most thought-provoking analysis. It offered system-level insights, pulling unexpected threads—like China’s “margin zero” AI strategy, or the tension between AI capabilities and national security control. Opus 4’s write-up felt more like a mini-essay: less polished, perhaps, but deeper in insight density and conceptual reach.

All three models surfaced the same macro-trends: the energy cost of scale, the rise of autonomous agents, and the lag between research and deployment. But their framing diverged—o3 took an executive-first lens, Gemini stuck to topical reporting, and Opus 4 zoomed out to system-level analysis. If o3 was a chief of staff, then Opus 4 was a policy analyst, and Gemini a competent newswire writer.

Anthropic has always had a comparative advantage in software engineering—it’s their bread and butter. So I naturally put Opus 4 to work on a coding task. I’m not a developer by trade, but these new tools have made it easier to dabble. One recent project involved building internal AI tools for the team using Lovable, a no-code platform for generating full-stack apps. Knowing the code would likely have some rough edges, I fed it into three AI coding assistants: Claude Code (Anthropic’s coding interface powered by Opus 4), Codex and Google’s Project Jules. I asked each to identify and fix any security vulnerabilities.

Opus 4 found and fixed nine issues and appeared to be the most systematic in its approach. Codex found six, including one that Opus 4 missed, which highlights how model diversity can pay off. Jules found only five. All three models successfully patched the flaws they identified, but Opus 4 came out ahead: more comprehensive, more confident. Even so, this was another reminder that when the stakes are high, it’s worth asking multiple models the same question and comparing what comes back.
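Comparing what comes back is easy to automate once each model’s findings are reduced to a set of labels. The sketch below assumes that normalization has already happened; the `compare_findings` name, the model keys, and the issue labels are all placeholders, not the actual findings from this audit.

```python
from collections import Counter


def compare_findings(findings: dict[str, set[str]]) -> dict[str, object]:
    """Merge per-model findings and show where the models agree or differ."""
    # Count how many models reported each issue.
    counts = Counter(issue for issues in findings.values() for issue in issues)
    return {
        # Every issue reported by at least one model.
        "all": set(counts),
        # Issues every model reported.
        "consensus": {i for i, n in counts.items() if n == len(findings)},
        # Issues only one model caught, grouped by that model.
        "unique": {
            model: {i for i in issues if counts[i] == 1}
            for model, issues in findings.items()
        },
    }


# Placeholder data for illustration only.
reports = {
    "model_a": {"issue-1", "issue-2", "issue-3"},
    "model_b": {"issue-2", "issue-4"},
    "model_c": {"issue-2", "issue-3"},
}
summary = compare_findings(reports)
print(sorted(summary["all"]))
print(summary["unique"])
```

The `unique` entries are the ones worth a second look: they are either genuine catches the other models missed or false positives.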

And my last task, the mandatory fun one, was to make a platformer. Opus 4 managed to make it difficult enough that I couldn’t finish the first level (I am also just generally terrible at platformers).

This is the first moment the “open agentic web” we touched on earlier feels tangible rather than theoretical. Opus 4, currently the only major chat client open to external integrations, represents early infrastructure for this vision. When it links together email scanning, data extraction, spreadsheet creation and communication, we see the beginnings of the universal assistant.

The industry is shifting fast: Google is building Gemini as a “full world model,” Apple is opening AI features to third-party developers, and OpenAI’s leaked plan is to turn ChatGPT into an “intuitive AI super-assistant.” These companies are positioning to replace traditional interfaces entirely. The “super-assistant” emerges as a new product category that tech giants are racing to define.

My early testing revealed both breakthroughs and breaking points. When Opus 4’s tool integration works, it removes friction between thinking and doing. Yet capacity timeouts, incomplete executions and integration immaturity expose the architectural challenges in building truly autonomous systems. Even so, you get the sense that these models are on a smooth exponential. Each new release seems more capable: smarter and better able to make use of external tools. With a bit of imagination, you can see where that might lead: near-perfect data collection, dependable email triage, and autonomous bug-fixing.

And this is the crux of the fundamental shift we’re seeing. The universal assistant arrives as an ecosystem of AI agents connected through emerging standards. We are now at the point where integration matters as much as intelligence. Opus 4’s strength lies in accessing and acting through Gmail, Drive and other tools. The question now is which model becomes the interface layer—and whether Opus 4 is the first real contender to span the full stack of tools and workflows.