#wikipedia #reinforcementlearning #languagemodels

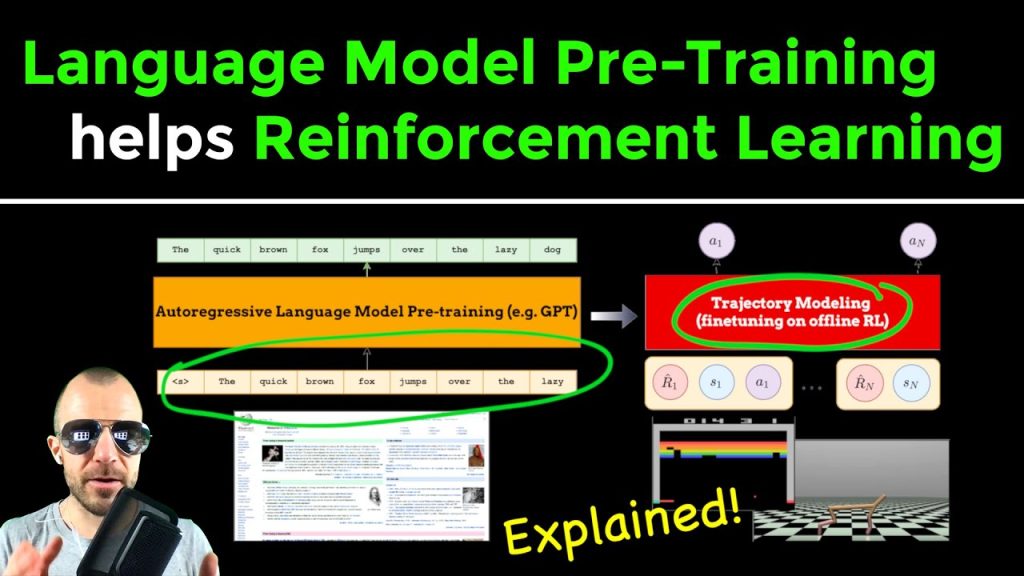

Transformers have overtaken many domain-specific custom models in a wide variety of fields, such as Natural Language Processing, Computer Vision, Generative Modelling, and, more recently, Reinforcement Learning. This paper looks at the Decision Transformer and shows that, surprisingly, pre-training the model on a language-modelling task significantly boosts its performance on Offline Reinforcement Learning. The resulting model achieves higher scores, can get away with fewer parameters, and exhibits better scaling properties. This raises many questions about the fundamental connection between the domains of language and RL.

OUTLINE:

0:00 – Intro

1:35 – Paper Overview

7:35 – Offline Reinforcement Learning as Sequence Modelling

12:00 – Input Embedding Alignment & other additions

16:50 – Main experimental results

20:45 – Analysis of the attention patterns across models

32:25 – More experimental results (scaling properties, ablations, etc.)

37:30 – Final thoughts

Paper:

Code:

My Video on Decision Transformer:

Abstract:

Fine-tuning reinforcement learning (RL) models has been challenging because of a lack of large-scale off-the-shelf datasets as well as high variance in transferability among different environments. Recent work has looked at tackling offline RL from the perspective of sequence modeling, with improved results as a result of the introduction of the Transformer architecture. However, when the model is trained from scratch, it suffers from slow convergence speeds. In this paper, we look to take advantage of this formulation of reinforcement learning as sequence modeling and investigate the transferability of pre-trained sequence models on other domains (vision, language) when finetuned on offline RL tasks (control, games). To this end, we also propose techniques to improve transfer between these domains. Results show consistent performance gains in terms of both convergence speed and reward on a variety of environments, accelerating training by 3-6x and achieving state-of-the-art performance in a variety of tasks using Wikipedia-pretrained and GPT2 language models. We hope that this work not only sheds light on the potential of leveraging generic sequence modeling techniques and pre-trained models for RL, but also inspires future work on sharing knowledge between generative modeling tasks of completely different domains.
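The "RL as sequence modeling" formulation the abstract builds on comes from the Decision Transformer: each offline trajectory is flattened into an interleaved sequence of (return-to-go, state, action) elements, where the return-to-go at step t is the undiscounted sum of rewards from t to the end of the episode. The snippet below is a minimal sketch of that preprocessing step in plain Python, not the authors' code. The function names `returns_to_go` and `to_token_sequence` are hypothetical. In the actual model each element would also pass through a modality-specific embedding before entering the (here, language-pretrained) Transformer, and that step is omitted.

```python
from typing import Any, List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """Return-to-go at each step: undiscounted sum of rewards from t to episode end."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the suffix sum computed for the next step.
    for t in range(len(rewards) - 1, -1, -1):
        running += rewards[t]
        rtg[t] = running
    return rtg


def to_token_sequence(
    states: Sequence[Any], actions: Sequence[Any], rewards: Sequence[float]
) -> List[Tuple[str, Any]]:
    """Interleave (return-to-go, state, action) per timestep, Decision-Transformer style.

    Hypothetical helper for illustration; a real pipeline would embed each element
    with a modality-specific projection rather than keep tagged Python values.
    """
    if not (len(states) == len(actions) == len(rewards)):
        raise ValueError("states, actions and rewards must have the same length")
    seq: List[Tuple[str, Any]] = []
    for g, s, a in zip(returns_to_go(rewards), states, actions):
        seq.extend([("rtg", g), ("state", s), ("action", a)])
    return seq


if __name__ == "__main__":
    rewards = [1.0, 0.0, 2.0]
    print(returns_to_go(rewards))  # [3.0, 2.0, 2.0]
    print(to_token_sequence(["s0", "s1", "s2"], ["a0", "a1", "a2"], rewards)[:3])
```

Conditioning on return-to-go rather than on the raw reward is what lets the model be prompted at test time with a desired target return. The resulting sequence has the same shape of problem as next-token prediction, which is why weights pre-trained on language can be fine-tuned on it at all.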

Authors: Machel Reid, Yutaro Yamada, Shixiang Shane Gu

Links:

Merch:

TabNine Code Completion (Referral):

YouTube:

Twitter:

Discord:

BitChute:

LinkedIn:

BiliBili:

If you want to support me, the best thing to do is to share out the content 🙂

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar:

Patreon:

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
