Generative artificial intelligence (AI) applications are commonly built using a technique called Retrieval Augmented Generation (RAG), which gives foundation models (FMs) access to additional data they didn’t have during training. This data enriches the generative AI prompt so that the model delivers more context-specific and accurate responses without continuous retraining of the FM, while also improving transparency and reducing hallucinations.

In this post, we demonstrate how to use Amazon Elastic Kubernetes Service (Amazon EKS) with Amazon Bedrock to build scalable, containerized RAG solutions for your generative AI applications on AWS, while bringing your unstructured user file data to Amazon Bedrock in a straightforward, fast, and secure way.

Amazon EKS provides a scalable, secure, and cost-efficient environment for building RAG applications with Amazon Bedrock. It also enables efficient deployment and monitoring of AI-driven workloads while using Amazon Bedrock FMs for inference. It enhances performance with optimized compute instances, automatically scales GPU workloads while reducing costs through Amazon EC2 Spot Instances and AWS Fargate, and provides enterprise-grade security through native AWS mechanisms such as Amazon VPC networking and AWS IAM.

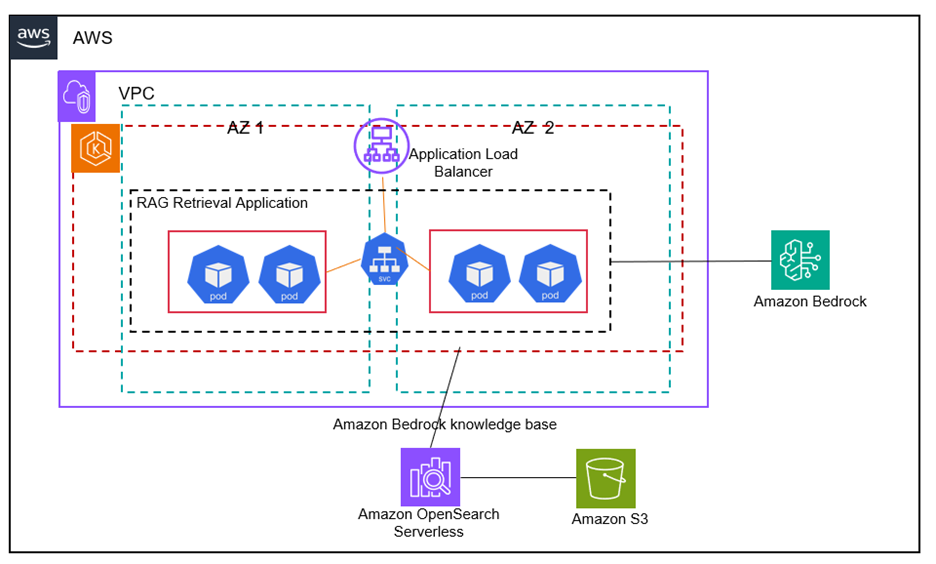

Our solution uses Amazon S3 as the source of unstructured data and uses Amazon Bedrock Knowledge Bases to populate an Amazon OpenSearch Serverless vector database with the user’s existing files, folders, and associated metadata. This enables a RAG scenario with Amazon Bedrock: Amazon Bedrock APIs enrich the generative AI prompt with your company-specific data retrieved from the OpenSearch Serverless vector database.
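To make the idea of prompt enrichment concrete, the following is a minimal sketch in Python of how retrieved passages might be combined with a user question. The function name `build_rag_prompt` and the prompt wording are illustrative assumptions, not part of the solution's code; in this solution the retrieval and enrichment are handled through Amazon Bedrock APIs.

```python
from typing import Sequence


def build_rag_prompt(question: str, passages: Sequence[str]) -> str:
    """Combine retrieved passages and the user question into one prompt.

    Hypothetical helper for illustration only; the prompt template is an
    assumption and is not taken from the solution's repository.
    """
    # Number each passage so the model can point to the one it relied on.
    context = "\n\n".join(
        f"[{i}] {text.strip()}" for i, text in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


# Example usage with two made-up passages.
prompt = build_rag_prompt(
    "What does a knowledge base store?",
    ["A knowledge base indexes your documents.", "Documents are stored as vectors."],
)
print(prompt)
```

Numbering the passages is one simple way to support transparency, because the model's answer can refer back to the passage it used.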

Solution overview

The solution uses Amazon EKS managed node groups to automate the provisioning and lifecycle management of nodes (Amazon EC2 instances) for the Amazon EKS Kubernetes cluster. Every managed node in the cluster is provisioned as part of an Amazon EC2 Auto Scaling group that’s managed for you by EKS.

The EKS cluster runs a Kubernetes deployment across two Availability Zones for high availability. Each node in the deployment hosts multiple replicas of a Bedrock RAG container image that is registered in and pulled from Amazon Elastic Container Registry (Amazon ECR). This setup makes sure that resources are used efficiently, scaling up or down based on demand. The Horizontal Pod Autoscaler (HPA) is set up to further scale the number of pods in our deployment based on their CPU utilization.
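As a rough illustration of CPU-based scaling, the sketch below applies the replica formula documented for the Kubernetes HPA, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with the default 10% tolerance. It is a simplification (the real controller averages utilization across pods and applies stabilization windows), and the replica bounds used here are assumed values, not the ones configured in this solution.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_cpu_pct: float,
    target_cpu_pct: float,
    min_replicas: int = 1,    # assumed bound, not from the solution
    max_replicas: int = 10,   # assumed bound, not from the solution
    tolerance: float = 0.1,   # Kubernetes default tolerance
) -> int:
    """Estimate the replica count the HPA would request for a CPU target."""
    ratio = current_cpu_pct / target_cpu_pct
    # Within the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))


# Four pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

With these inputs the ratio is 1.5, so the estimate grows from four replicas to six.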

The RAG Retrieval Application container uses Bedrock Knowledge Bases APIs and Anthropic’s Claude 3.5 Sonnet LLM hosted on Bedrock to implement a RAG workflow. The solution provides the end user with a scalable endpoint to access the RAG workflow using a Kubernetes service that is fronted by an Amazon Application Load Balancer (ALB) provisioned via an EKS ingress controller.
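One way a container could implement this workflow is with the `RetrieveAndGenerate` operation of the Amazon Bedrock Agent Runtime API. The sketch below is an assumption about how such a call might look, not the solution's actual code: the helper names are hypothetical, and the model ID should be confirmed for your Region.

```python
from typing import Any, Dict

# Assumed model ID for Claude 3.5 Sonnet; confirm the exact ID in your Region.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_rag_request(question: str, knowledge_base_id: str, region: str) -> Dict[str, Any]:
    """Build keyword arguments for a RetrieveAndGenerate call (hypothetical helper)."""
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{MODEL_ID}"
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def answer_question(client: Any, question: str, knowledge_base_id: str, region: str) -> str:
    """Call retrieve_and_generate on the given client and return the answer text.

    The client is passed in so the function can be exercised without AWS access.
    """
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, region)
    )
    return response["output"]["text"]
```

In a real deployment, the client would typically be created with `boto3.client("bedrock-agent-runtime", region_name=region)`, and the pod would need IAM permissions to call Amazon Bedrock.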

The RAG Retrieval Application container orchestrated by EKS enables RAG with Amazon Bedrock by enriching the generative AI prompt received from the ALB endpoint with data retrieved from an OpenSearch Serverless index that is synced via Bedrock Knowledge Bases from your company-specific data uploaded to Amazon S3.

The following architecture diagram illustrates the various components of our solution:

Prerequisites

Complete the following prerequisites:

Ensure model access in Amazon Bedrock. In this solution, we use Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock.

Install the AWS Command Line Interface (AWS CLI).

Install Docker.

Install kubectl.

Install Terraform.

Deploy the solution

The solution is available for download on the GitHub repo. Cloning the repository and using the Terraform template will provision the components with their required configurations:

Clone the Git repository:

From the terraform folder, deploy the solution using Terraform:

Configure EKS

Configure a secret for the ECR registry:

Navigate to the kubernetes/ingress folder:

Make sure that the AWS_Region variable in the bedrockragconfigmap.yaml file points to your AWS Region.

Replace the image URI on line 20 of the bedrockragdeployment.yaml file with the image URI of your bedrockrag image from your ECR repository.

Provision the EKS deployment, service and ingress:

Create a knowledge base and upload data

To create a knowledge base and upload data, follow these steps:

Create an S3 bucket and upload your data into the bucket. For this post, we uploaded two files, the Amazon Bedrock User Guide and the Amazon FSx for ONTAP User Guide, into our S3 bucket.

Create an Amazon Bedrock knowledge base. Follow the steps here to create a knowledge base. Accept all the defaults, including the Quick create a new vector store option in Step 7 of the instructions, which creates an Amazon OpenSearch Serverless vector search collection as the vector store for your knowledge base.

In Step 5c of the instructions to create a knowledge base, provide the S3 URI of the object containing the files for the data source for the knowledge base.

Once the knowledge base is provisioned, obtain the Knowledge Base ID from the Bedrock Knowledge Bases console for your newly created knowledge base.

Query using the Application Load Balancer

You can query the model directly using the API front end provided by the AWS ALB provisioned by the Kubernetes (EKS) Ingress Controller. Navigate to the AWS ALB console and obtain the DNS name for your ALB to use as your API:
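A minimal sketch of such a request in Python follows. The `/query` route, the JSON field names, and the use of plain HTTP are hypothetical placeholders chosen for illustration; check the repository for the actual route and payload that the RAG container expects.

```python
import json
import urllib.request


def build_query_request(
    alb_dns_name: str, question: str, knowledge_base_id: str
) -> urllib.request.Request:
    """Build a POST request for the ALB endpoint.

    The "/query" path and the "prompt"/"kbId" fields are hypothetical;
    they are not taken from the solution's repository.
    """
    url = f"http://{alb_dns_name}/query"
    body = json.dumps({"prompt": question, "kbId": knowledge_base_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_query(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Building the request separately from sending it makes it possible to inspect the URL and payload before any network call is made.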

Cleanup

To avoid recurring charges, clean up your account after trying the solution:

From the terraform folder, destroy the resources provisioned by the Terraform template for the solution:

terraform apply -destroy

Delete the Amazon Bedrock knowledge base. From the Amazon Bedrock console, select the knowledge base you created in this solution, select Delete, and follow the steps to delete the knowledge base.

Conclusion

In this post, we demonstrated a solution that uses Amazon EKS with Amazon Bedrock and provides you with a framework to build your own containerized, automated, scalable, and highly available RAG-based generative AI applications on AWS. Using Amazon S3 and Amazon Bedrock Knowledge Bases, our solution automates bringing your unstructured user file data to Amazon Bedrock within the containerized framework. You can use the approach demonstrated in this solution to automate and containerize your AI-driven workloads while using Amazon Bedrock FMs for inference with built-in efficient deployment, scalability, and availability from a Kubernetes-based containerized deployment.

For more information about how to get started building with Amazon Bedrock and EKS for RAG scenarios, refer to the following resources:

About the Authors

Kanishk Mahajan is Principal, Solutions Architecture at AWS. He leads cloud transformation and solution architecture for AWS customers and partners. Kanishk specializes in containers, cloud operations, migrations and modernizations, AI/ML, resilience, and security and compliance. He is a Technical Field Community (TFC) member in each of those domains at AWS.


Sandeep Batchu is a Senior Security Architect at Amazon Web Services, with extensive experience in software engineering, solutions architecture, and cybersecurity. Passionate about bridging business outcomes with technological innovation, Sandeep guides customers through their cloud journey, helping them design and implement secure, scalable, flexible, and resilient cloud architectures.
