Companies are increasingly adopting an AI-first strategy to stay competitive and become more efficient. As generative AI adoption grows, the technology’s ability to solve problems is also improving (one example is generating a comprehensive market report). One way to manage the growing complexity of the problems to be solved is through graphs, which excel at modeling relationships and extracting meaningful insights from interconnected data and entities.

In this post, we explore how to use Graph-based Retrieval-Augmented Generation (GraphRAG) in Amazon Bedrock Knowledge Bases to build intelligent applications. Unlike traditional vector search, which retrieves documents based on similarity scores, knowledge graphs encode relationships between entities, allowing large language models (LLMs) to retrieve information with context-aware reasoning. This means that instead of only finding the most relevant document, the system can infer connections between entities and concepts, improving response accuracy and reducing hallucinations. To inspect the resulting graph, you can use Graph Explorer.

Introduction to GraphRAG

Traditional Retrieval-Augmented Generation (RAG) approaches improve generative AI by fetching relevant documents from a knowledge source, but they often struggle with context fragmentation, which occurs when relevant information is spread across multiple documents or sources.

This is where GraphRAG comes in. GraphRAG was created to enhance knowledge retrieval and reasoning by leveraging knowledge graphs, which structure information as entities and their relationships. Unlike traditional RAG methods that rely solely on vector search or keyword matching, GraphRAG enables multi-hop reasoning (logical connections between different pieces of context), better entity linking, and contextual retrieval. This makes it particularly valuable for complex document interpretation, such as legal contracts, research papers, compliance guidelines, and technical documentation.
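To make multi-hop reasoning concrete, the following minimal sketch chains relationships across a tiny in-memory graph. The entity names, the relation names, and the `multi_hop` helper are all hypothetical and exist only for illustration; they are not part of Amazon Bedrock or Amazon Neptune.

```python
from collections import deque

# Hypothetical (subject, relation, object) facts, each of which could come
# from a different document in the knowledge source.
TRIPLES = [
    ("CompanyA", "acquired", "StartupB"),
    ("StartupB", "develops", "ProductC"),
    ("ProductC", "used_in", "IndustryD"),
]


def build_adjacency(triples):
    """Index outgoing edges by subject so neighbors can be looked up quickly."""
    adjacency = {}
    for subject, relation, obj in triples:
        adjacency.setdefault(subject, []).append((relation, obj))
    return adjacency


def multi_hop(triples, start, goal, max_hops=3):
    """Breadth-first search for the shortest chain of facts from start to goal.

    Returns the list of triples along the path, or None if the goal cannot be
    reached within max_hops hops.
    """
    adjacency = build_adjacency(triples)
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue
        for relation, neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None
```

Calling `multi_hop(TRIPLES, "CompanyA", "IndustryD")` returns the three facts in order, which no single fact (and therefore no single retrieved document) could provide on its own.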

Amazon Bedrock Knowledge Bases GraphRAG

Amazon Bedrock Knowledge Bases is a managed service for storing, retrieving, and structuring enterprise knowledge. It seamlessly integrates with the foundation models available through Amazon Bedrock, enabling AI applications to generate more informed and trustworthy responses. Amazon Bedrock Knowledge Bases now supports GraphRAG, an advanced feature that enhances traditional RAG by integrating graph-based retrieval. This allows LLMs to understand relationships between entities, facts, and concepts, making responses more contextually relevant and explainable.

How Amazon Bedrock Knowledge Bases GraphRAG works

Graphs are generated by creating a structured representation of data as nodes (entities) and edges (relationships) between those nodes. The process typically involves identifying key entities within the data, determining how these entities relate to each other, and then modeling these relationships as connections in the graph. After the traditional RAG process, Amazon Bedrock Knowledge Bases GraphRAG performs additional steps to improve the quality of the generated response:

It identifies and retrieves related graph nodes or chunk identifiers that are linked to the initially retrieved document chunks.

The system then expands on this information by traversing the graph structure, retrieving additional details about these related chunks from the vector store.

By using this enriched context, which includes relevant entities and their key connections, GraphRAG can generate more comprehensive responses.
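The expansion step described above can be sketched in a few lines of Python. This is a simplified stand-in, assuming a one-hop expansion through shared entities; the service’s actual traversal logic is not described in this post, and the chunk IDs, entity names, and `expand_with_graph` helper are hypothetical.

```python
# Toy index of which entities each chunk mentions (hypothetical data).
CHUNK_ENTITIES = {
    "chunk-1": {"Amazon", "AWS"},
    "chunk-2": {"AWS", "energy efficiency"},
    "chunk-3": {"energy efficiency", "data centers"},
    "chunk-4": {"logistics"},
}


def expand_with_graph(seed_chunks, chunk_entities, max_extra=5):
    """Return the seed chunks plus chunks linked to them through a shared entity.

    seed_chunks stands in for the chunks returned by the initial vector search.
    Related chunks are ranked by how many entities they share with the seeds.
    """
    seed_entities = set()
    for chunk_id in seed_chunks:
        seed_entities |= chunk_entities.get(chunk_id, set())

    candidates = []
    for chunk_id, entities in chunk_entities.items():
        if chunk_id in seed_chunks:
            continue
        shared = len(entities & seed_entities)
        if shared:
            candidates.append((shared, chunk_id))

    # Most shared entities first; ties broken by chunk ID for determinism.
    candidates.sort(key=lambda item: (-item[0], item[1]))
    return list(seed_chunks) + [chunk_id for _, chunk_id in candidates[:max_extra]]
```

In this toy data, a search that initially returns only `chunk-2` is expanded to include `chunk-1` and `chunk-3`, because each shares an entity with the seed, while the unrelated `chunk-4` is left out.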

How graphs are constructed

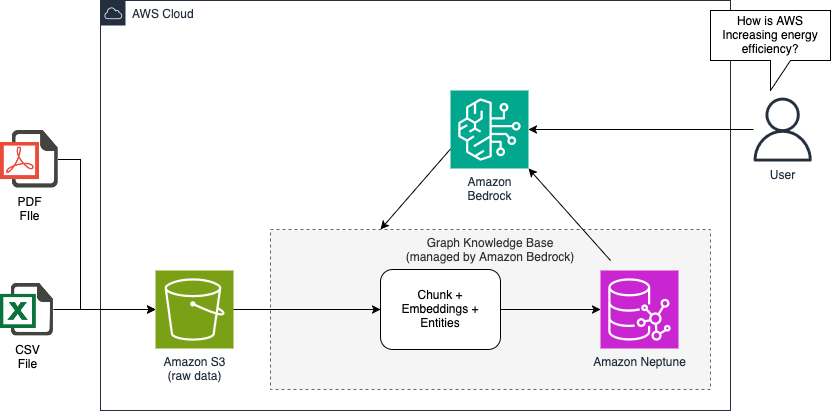

Imagine extracting information from unstructured data such as PDF files. In Amazon Bedrock Knowledge Bases, graphs are constructed through a process that extends traditional PDF ingestion. The system creates three types of nodes: chunk, document, and entity. The ingestion pipeline begins by splitting documents from an Amazon Simple Storage Service (Amazon S3) folder into chunks using customizable methods (you can choose anything from basic fixed-size chunking to more complex LLM-based chunking mechanisms). Each chunk is then embedded, and an ExtractChunkEntity step uses an LLM to identify key entities within the chunk. This information, along with the chunk’s embedding, text, and document ID, is sent to Amazon Neptune Analytics for storage. The insertion process creates interconnected nodes and edges, linking chunks to their source documents and extracted entities using the bulk load API in Amazon Neptune. The preceding figure illustrates this process.
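As a rough illustration of the three node types, the following sketch builds a small in-memory graph from raw text. It rests on several assumptions: the entity extractor is a naive regular expression standing in for the LLM-based ExtractChunkEntity step, embeddings are omitted, and the edge names `FROM` and `CONTAINS` are invented for this example rather than taken from the service.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Graph:
    nodes: dict = field(default_factory=dict)  # node_id -> attributes
    edges: set = field(default_factory=set)    # (source_id, relation, target_id)


def split_into_chunks(text, size=80):
    """Basic fixed-size chunking by character count."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def extract_entities(chunk):
    """Stand-in for the LLM step: treat capitalized words as entities.

    This also picks up ordinary sentence-initial words, which a real
    extractor would filter out.
    """
    return sorted(set(re.findall(r"\b[A-Z][a-zA-Z]+\b", chunk)))


def ingest(graph, doc_id, text, size=80):
    """Add one document node, its chunk nodes, and their entity nodes."""
    graph.nodes[doc_id] = {"type": "document"}
    for index, chunk in enumerate(split_into_chunks(text, size)):
        chunk_id = f"{doc_id}#chunk-{index}"
        graph.nodes[chunk_id] = {"type": "chunk", "text": chunk}
        graph.edges.add((chunk_id, "FROM", doc_id))
        for entity in extract_entities(chunk):
            entity_id = f"entity:{entity}"
            # Entities are shared: the same node is reused across chunks.
            graph.nodes.setdefault(entity_id, {"type": "entity"})
            graph.edges.add((chunk_id, "CONTAINS", entity_id))
    return graph
```

Because entity nodes are shared across chunks and documents, two chunks that mention the same entity become connected through it, which is what later makes graph traversal useful at query time.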

Use case

Consider a company that needs to analyze a large range of documents and correlate entities that are spread across those documents to answer questions (for example, “Which companies has Amazon invested in or acquired in recent years?”). Extracting meaningful insights from this unstructured data and connecting it with other internal and external information poses a significant challenge. To address this, the company decides to build a GraphRAG application using Amazon Bedrock Knowledge Bases, using a graph database to represent complex relationships within the data.

One business requirement for the company is to generate a comprehensive market report that provides a detailed analysis of how internal and external information are correlated with industry trends, the company’s actions, and performance metrics. By using Amazon Bedrock Knowledge Bases, the company can create a knowledge graph that represents the intricate connections between press releases, products, companies, people, financial data, external documents and industry events. The Graph Explorer tool becomes invaluable in this process, helping data scientists and analysts to visualize those connections, export relevant subgraphs, and seamlessly integrate them with the LLMs in Amazon Bedrock. After the graph is well structured, anyone in the company can ask questions in natural language using Amazon Bedrock LLMs and generate deeper insights from a knowledge base with correlated information across multiple documents and entities.

Solution overview

In this GraphRAG application using Amazon Bedrock Knowledge Bases, we’ve designed a streamlined process to transform raw documents into a rich, interconnected graph of knowledge. Here’s how it works:

Document ingestion: Users can upload documents manually to Amazon S3 or set up automatic ingestion pipelines.

Chunking, entity extraction, and embeddings generation: In the knowledge base, documents are first split into chunks using fixed-size chunking or customizable methods, then embeddings are computed for each chunk. Finally, an LLM is prompted to extract key entities from each chunk, creating a GraphDocument that includes the entity list, chunk embedding, chunked text, and document ID.

Graph construction: The embeddings, along with the extracted entities and their relationships, are used to construct a knowledge graph. The constructed graph data, including nodes (entities) and edges (relationships), is automatically inserted into Amazon Neptune.

Data exploration: With the graph database populated, users can quickly explore the data using Graph Explorer. This intuitive interface allows for visual navigation of the knowledge graph, helping users understand relationships and connections within the data.

LLM-powered application: Finally, users can leverage LLMs through Amazon Bedrock to query the graph and retrieve correlated information across documents. This enables powerful, context-aware responses that draw insights from the entire corpus of ingested documents.

The following figure illustrates this solution.

Prerequisites

The example solution in this post uses datasets from the following websites:

Also, you need to:

Create an S3 bucket to store the files on AWS. In this example, we named this bucket: blog-graphrag-s3.

Download and upload the PDF and XLS files from the websites into the S3 bucket.
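If you prefer to script these two steps, the following is a minimal sketch using the AWS SDK for Python (Boto3). It assumes Boto3 is installed and credentials are configured; the local folder `./datasets` and the `upload_folder` helper are hypothetical, and because S3 bucket names are globally unique, you might need a name other than the one used in this post.

```python
from pathlib import Path

BUCKET_NAME = "blog-graphrag-s3"  # name used in this post; yours may differ


def upload_folder(s3_client, folder, bucket=BUCKET_NAME,
                  suffixes=(".pdf", ".xls", ".xlsx")):
    """Upload every matching file in a local folder and return the object keys."""
    uploaded = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in suffixes:
            # upload_file(Filename, Bucket, Key)
            s3_client.upload_file(str(path), bucket, path.name)
            uploaded.append(path.name)
    return uploaded


def main():
    import boto3  # imported here so upload_folder works with any compatible client

    s3 = boto3.client("s3")
    # Outside us-east-1, create_bucket also requires
    # CreateBucketConfiguration={"LocationConstraint": "<your-region>"}.
    s3.create_bucket(Bucket=BUCKET_NAME)
    print(upload_folder(s3, "./datasets"))  # hypothetical local folder
```

Run `main()` from an environment with permissions to create buckets and put objects.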

Building the GraphRAG application

Open the AWS Management Console for Amazon Bedrock.

In the navigation pane, under Knowledge Bases, choose Create.

Select Knowledge Base with vector store, and choose Create.

Enter a name for Knowledge Base name (for example, knowledge-base-graphrag-demo) and an optional description.

Select Create and use a new service role.

Select Data source as Amazon S3.

Leave everything else as default and choose Next to continue.

Enter a Data source name (for example: knowledge-base-graphrag-data-source).

Select an S3 bucket by choosing Browse S3. (If you don’t have an S3 bucket in your account, create one. Make sure to upload all the necessary files.)

After the S3 bucket is created and the files are uploaded, choose the blog-graphrag-s3 bucket.

Leave everything else as default and choose Next.

Choose Select model and then select an embeddings model (in this example, we chose the Titan Text Embeddings V2 model).

In the Vector database section, under Vector store creation method, select Quick create a new vector store. For the Vector store, select Amazon Neptune Analytics (GraphRAG), and choose Next to continue.

Review all the details.

Choose Create Knowledge Base after reviewing all the details.

Creating a knowledge base on Amazon Bedrock might take several minutes to complete depending on the size of the data present in the data source. You should see the status of the knowledge base as Available after it is created successfully.

Update and sync the graph with your data

Select the Data source name (in this example, knowledge-base-graphrag-data-source) to view the synchronization history.

Choose Sync to update the data source.
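The same sync can be triggered programmatically. The sketch below assumes Boto3 and uses the `bedrock-agent` client’s `start_ingestion_job` and `get_ingestion_job` operations; the `sync_data_source` helper and the placeholder IDs are hypothetical, so substitute the knowledge base ID and data source ID shown in your console.

```python
import time


def sync_data_source(agent_client, knowledge_base_id, data_source_id,
                     poll_seconds=10, sleep=time.sleep):
    """Start an ingestion job and poll until it reaches a terminal status."""
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    while job["status"] not in ("COMPLETE", "FAILED", "STOPPED"):
        sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job


def main():
    import boto3

    client = boto3.client("bedrock-agent")
    # Placeholder IDs: replace with the values from your own account.
    job = sync_data_source(client, "KB_ID_PLACEHOLDER", "DS_ID_PLACEHOLDER")
    print(job["status"], job.get("statistics"))
```

The `sleep` parameter exists only so the polling loop can be exercised without waiting; in normal use, leave it at its default.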

Visualize the graph using Graph Explorer

Let’s look at the graph created by the knowledge base by navigating to the Amazon Neptune console. Make sure that you’re in the same AWS Region where you created the knowledge base.

Open the Amazon Neptune console.

In the navigation pane, choose Analytics and then Graphs.

You should see the graph created by the knowledge base.

To view the graph in Graph Explorer, you need to create a notebook by going to the Notebooks section.

You can create the notebook instance manually or by using an AWS CloudFormation template. In this post, we show the manual approach using the Amazon Neptune console.

To create a notebook instance:

Choose Notebooks.

Choose Create notebook.

Select Analytics as the Neptune Service.

Associate the notebook with the graph you just created (in this case: bedrock-knowledge-base-imwhqu).

Select the notebook instance type.

Enter a name for the notebook instance in the Notebook name field.

Create an AWS Identity and Access Management (IAM) role and use the Neptune default configuration.

Select VPC, Subnet, and Security group.

Leave Internet access as default and choose Create notebook.

Notebook instance creation might take a few minutes. After the Notebook is created, you should see the status as Ready.

To see the Graph Explorer:

Go to Actions and choose Open Graph Explorer.

By default, public connectivity is disabled for the graph database. To connect to the graph, you must either have a private graph endpoint or enable public connectivity. For this post, you will enable public connectivity for this graph.

To set up a public connection to view the graph (optional):

Go back to the graph you created earlier (under Analytics, Graphs).

Select your graph by choosing the round button to the left of the Graph Identifier.

Choose Modify.

Select the Enable public connectivity check box in the Network section.

Choose Next.

Review changes and choose Submit.

To open the Graph Explorer:

Go back to Notebooks.

After the notebook instance is created, choose the instance name (in this case: aws-neptune-analytics-neptune-analytics-demo-notebook).

Then, choose Actions and then choose Open Graph Explorer.

You should now see Graph Explorer. To see the graph, add a node to the canvas, then explore and navigate into the graph.
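Besides browsing visually, you can run a quick openCypher query to check what the knowledge base wrote into the graph. The following sketch assumes Boto3 and the `neptune-graph` client’s `execute_query` operation, and it requires network access to the graph (for example, the public connectivity enabled earlier). The `count_nodes_by_label` helper and the placeholder graph identifier are hypothetical; the query itself makes no assumption about which labels the service uses.

```python
import json

# Count nodes per label without assuming any particular label names.
LABEL_COUNT_QUERY = "MATCH (n) RETURN labels(n) AS labels, count(n) AS total"


def count_nodes_by_label(graph_client, graph_id):
    """Run the label-count query and return a {label: count} dictionary."""
    response = graph_client.execute_query(
        graphIdentifier=graph_id,
        queryString=LABEL_COUNT_QUERY,
        language="OPEN_CYPHER",
    )
    # The payload is a streaming body containing JSON with a "results" list.
    rows = json.loads(response["payload"].read())["results"]
    return {",".join(row["labels"]): row["total"] for row in rows}


def main():
    import boto3

    client = boto3.client("neptune-graph")
    # Placeholder: use the Graph Identifier shown in the Neptune console.
    print(count_nodes_by_label(client, "GRAPH_ID_PLACEHOLDER"))
```

The resulting counts give a quick sanity check that chunk, document, and entity nodes were created before you start exploring them on the canvas.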

Playground: Working with LLMs to extract insights from the knowledge base using GraphRAG

You’re ready to test the knowledge base.

Choose the knowledge base, select a model, and choose Apply.

Choose Run after adding the prompt. In the example shown in the following screenshot, we asked “How is AWS increasing energy efficiency?”

Choose Show details to see the Source chunk.

Choose Metadata associated with this chunk to view the chunk ID, data source ID, and source URI.

In the next example, we asked a more complex question: Which companies has AMAZON invested in or acquired in recent years?
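The same questions can be sent from code instead of the playground. This is a minimal sketch, assuming Boto3 and the `bedrock-agent-runtime` client’s `retrieve_and_generate` operation; the `ask_knowledge_base` helper and the placeholder knowledge base ID and model ARN are hypothetical and must be replaced with values from your account.

```python
def ask_knowledge_base(runtime_client, knowledge_base_id, model_arn, question):
    """Query the knowledge base and return (answer_text, source_uris)."""
    response = runtime_client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )

    # Collect the distinct S3 URIs of the chunks cited in the answer.
    sources = []
    for citation in response.get("citations", []):
        for reference in citation.get("retrievedReferences", []):
            uri = reference.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return response["output"]["text"], sources


def main():
    import boto3

    client = boto3.client("bedrock-agent-runtime")
    answer, sources = ask_knowledge_base(
        client,
        "KB_ID_PLACEHOLDER",      # placeholder knowledge base ID
        "MODEL_ARN_PLACEHOLDER",  # placeholder ARN of the model you selected
        "Which companies has Amazon invested in or acquired in recent years?",
    )
    print(answer)
    print(sources)
```

The returned source URIs correspond to the information shown under Show details in the console.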

Another way to improve the relevance of query responses is to use a reranker model. Using the reranker model in GraphRAG involves providing a query and a list of documents to be reordered based on relevance. The reranker calculates relevance scores for each document in relation to the query, improving the accuracy and pertinence of retrieved results for subsequent use in generating responses or prompts. In the Amazon Bedrock Playgrounds, you can see the results generated by the reranking model in two ways: the data ranked by the reranking model alone (the following figure), or a combination of the reranking model and the LLM to generate new insights.

To use the reranker model:

Check the availability of the reranker model

Go to AWS Management Console for Amazon Bedrock.

From the navigation pane, under Builder tools, choose Knowledge Bases

Choose the knowledge base created in the previous steps (knowledge-base-graphrag-demo).

Click on Test Knowledge Base.

Choose Configurations, expand the Reranking section, choose Select model, and select a reranker model (in this post, we choose Cohere Rerank 3.5).
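For reference, reranking can also be requested through the API. The following sketch assumes Boto3 and the `bedrock-agent-runtime` client’s `retrieve` operation; the field names in the reranking configuration reflect our understanding of that API and should be verified against the current Boto3 documentation. The helper names are hypothetical, and the reranker model ARN is passed in as a parameter because its exact value depends on your Region.

```python
def build_rerank_config(reranker_arn, candidates=20, keep=5):
    """Build a retrieval configuration that reranks the initial candidates.

    candidates: how many results the initial search returns.
    keep: how many results remain after reranking.
    """
    return {
        "vectorSearchConfiguration": {
            "numberOfResults": candidates,
            "rerankingConfiguration": {
                "type": "BEDROCK_RERANKING_MODEL",
                "bedrockRerankingConfiguration": {
                    "modelConfiguration": {"modelArn": reranker_arn},
                    "numberOfRerankedResults": keep,
                },
            },
        }
    }


def retrieve_reranked(runtime_client, knowledge_base_id, reranker_arn,
                      question, keep=5):
    """Return (score, text) pairs for the reranked chunks."""
    response = runtime_client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration=build_rerank_config(reranker_arn, keep=keep),
    )
    return [
        (result.get("score"), result["content"]["text"])
        for result in response["retrievalResults"]
    ]
```

Retrieving more candidates than you keep gives the reranker room to promote relevant chunks that the initial search ranked low.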

Clean up

To clean up your resources, complete the following tasks:

Delete the Neptune notebooks: aws-neptune-graphrag.

Delete the Amazon Bedrock knowledge base: knowledge-base-graphrag-demo.

Delete content from the Amazon S3 bucket blog-graphrag-s3.
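Two of these cleanup tasks can be scripted. The sketch below assumes Boto3, a bucket without versioning enabled, and a placeholder knowledge base ID; the helper names are hypothetical. The notebook is deleted from the console as described above.

```python
def empty_bucket(s3_client, bucket):
    """Delete every object in the bucket and return how many were removed."""
    deleted = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # Each page holds at most 1,000 keys, matching the delete_objects limit.
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            deleted += len(keys)
    return deleted


def delete_knowledge_base(agent_client, knowledge_base_id):
    """Delete the knowledge base by ID."""
    agent_client.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)


def main():
    import boto3

    print(empty_bucket(boto3.client("s3"), "blog-graphrag-s3"))
    # Placeholder: replace with your knowledge base ID.
    delete_knowledge_base(boto3.client("bedrock-agent"), "KB_ID_PLACEHOLDER")
```

Both operations are irreversible, so double-check the bucket name and knowledge base ID before running `main()`.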

Conclusion

Using Graph Explorer in combination with Amazon Neptune and Amazon Bedrock LLMs provides a solution for building sophisticated GraphRAG applications. Graph Explorer offers intuitive visualization and exploration of complex relationships within data, making it straightforward to understand and analyze company connections and investments. You can use Amazon Neptune graph database capabilities to set up efficient querying of interconnected data, allowing for rapid correlation of information across various entities and relationships.

By using this approach to analyze Amazon’s investment and acquisition history, we can quickly identify patterns and insights that might otherwise be overlooked. For instance, when examining questions such as “Which companies has Amazon invested in or acquired in recent years?” or “How is AWS increasing energy efficiency?”, the GraphRAG application can traverse the knowledge graph, correlating press releases, investor relations information, entities, and financial data to provide a comprehensive overview of Amazon’s strategic moves.

The integration of Amazon Bedrock LLMs further enhances the accuracy and relevance of generated results. These models can contextualize the graph data, helping you to understand the nuances in company relationships and investment trends, and they support the generation of comprehensive market reports. This combination of graph-based knowledge and natural language processing enables more precise answers and data interpretation, going beyond basic fact retrieval to offer analysis of Amazon’s investment strategy.

In summary, the synergy between Graph Explorer, Amazon Neptune, and Amazon Bedrock LLMs creates a framework for building GraphRAG applications that can extract meaningful insights from complex datasets. This approach streamlines the process of analyzing corporate investments and creates new ways to analyze unstructured data across various industries and use cases.

About the authors

Ruan Roloff is a ProServe Cloud Architect specializing in Data & AI at AWS. During his time at AWS, he was responsible for the data journey and data product strategy of customers across a range of industries, including finance, oil and gas, manufacturing, digital natives and public sector — helping these organizations achieve multi-million dollar use cases. Outside of work, Ruan likes to assemble and disassemble things, fish on the beach with friends, play SFII, and go hiking in the woods with his family.

Sai Devisetty is a Technical Account Manager at AWS. He helps customers in the Financial Services industry with their operations in AWS. Outside of work, Sai cherishes family time and enjoys exploring new destinations.

Madhur Prashant is a Generative AI Solutions Architect at Amazon Web Services. He is passionate about the intersection of human thinking and generative AI. His interests lie in generative AI, specifically building solutions that are helpful and harmless, and most of all optimal for customers. Outside of work, he loves doing yoga, hiking, spending time with his twin, and playing the guitar.

Qingwei Li is a Machine Learning Specialist at Amazon Web Services. He received his Ph.D. in Operations Research after he broke his advisor’s research grant account and failed to deliver the Nobel Prize he promised. Currently he helps customers in the financial service and insurance industry build machine learning solutions on AWS. In his spare time, he likes reading and teaching.
