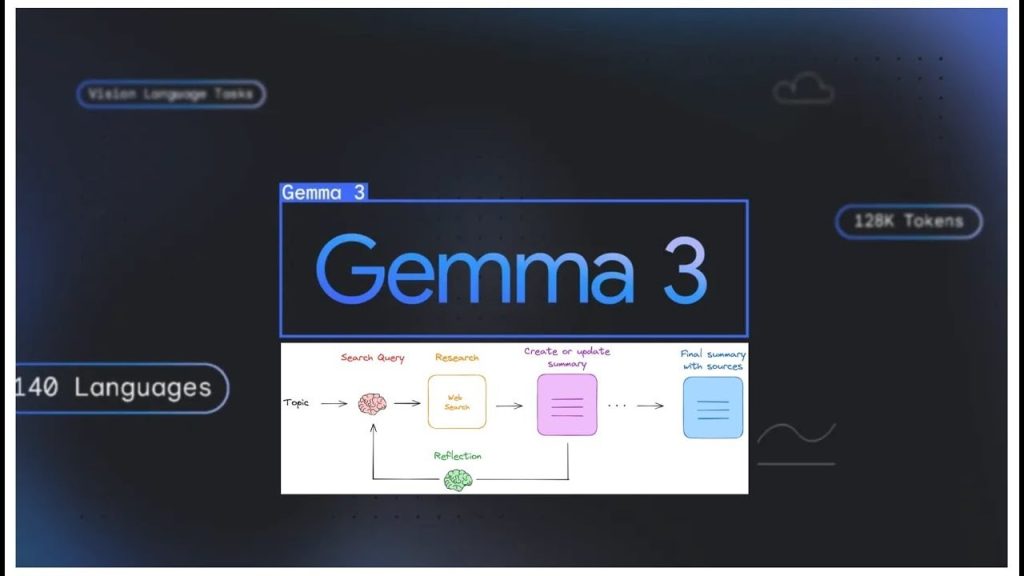

If you are interested in building your very own local deep research AI assistant, you might be interested in Google’s Gemma 3 AI models. They represent a significant advancement in artificial intelligence, offering a compact yet robust solution tailored for local deployment. Derived from the larger Gemini series, these models combine high performance with a strong emphasis on privacy and accessibility. Featuring multimodal capabilities, support for 140 languages, and the ability to generate structured outputs, Gemma 3 is engineered to meet diverse AI research assistant and productivity demands.

These models are open source and optimized for local use, allowing you to build a powerful AI research assistant without relying on external servers or cloud-based systems. Gemma 3 transforms the approach to research and productivity by delivering comprehensive functionality in a local environment.

Local AI Research Assistant

TL;DR Key Takeaways:

Gemma 3 AI models are compact, multimodal, and support 140 languages, offering high performance while prioritizing privacy and accessibility for local use.

Available in four sizes (1B, 4B, 12B, 27B parameters), these open source models are optimized for local deployment, balancing cost efficiency and competitive performance.

Key features include a context window of up to 128k tokens (32k for the 1B model), structured output generation (e.g., valid JSON), advanced math and coding capabilities, and multilingual proficiency.

Local processing ensures data privacy and cost-effectiveness, with smaller models running on high-end laptops and the 27B model requiring GPU support.

Gemma 3 excels in research and productivity tasks, offering iterative search workflows, document summarization, and integration with local runtimes such as Ollama and with web search engines.

Gemma 3 AI models are available in four distinct sizes (1B, 4B, 12B, and 27B parameters), providing flexibility to suit various use cases. These open source models are optimized for local deployment, making them ideal for users who prioritize data privacy and cost efficiency. Despite their compact size, they deliver competitive performance, with the 27B model achieving an Elo score of 1338 on the LMArena leaderboard, which rivals much larger AI systems.

What sets Gemma 3 apart is its ability to balance performance with accessibility. The smaller models are lightweight enough to run on high-end consumer laptops, while the larger 27B model performs optimally with GPU support. This scalability ensures that users can select a model that aligns with their specific needs, whether for personal projects or professional applications.

Core Features and Capabilities

Gemma 3 models are equipped with advanced features that make them highly effective across a variety of tasks. Their architecture and multimodal functionality enable them to excel in areas such as data processing, language understanding, and structured output generation. Key features include:

Extensive Token Support: A context window of up to 128k tokens (32k for the 1B model) allows the models to process large datasets and lengthy documents in a single pass, making them well suited to complex research tasks.

Multilingual Proficiency: With support for 140 languages, these models are accessible to a global audience, breaking down language barriers in research and communication.

Structured Output Generation: Outputs can be generated in formats like valid JSON, ensuring seamless integration into workflows and applications.

Advanced Math and Coding: Post-training with reinforcement learning from human feedback (RLHF), machine feedback (RLMF), and execution feedback (RLEF) strengthens the models’ math and coding abilities.

These capabilities make Gemma 3 particularly effective for tasks such as iterative search, summarization, and structured data generation. Whether you’re analyzing complex datasets or generating concise summaries, the models provide reliable and efficient solutions.
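Structured output is only useful if the calling code checks it before passing it on. The following is a minimal sketch of that check, assuming the model was asked to reply with a JSON object; the `REQUIRED_KEYS` schema and the `parse_structured_reply` helper are hypothetical names chosen for illustration and are not part of Gemma 3 or any library.

```python
import json

# Hypothetical schema for illustration: the keys we asked the model to return.
REQUIRED_KEYS = {"query", "rationale"}


def parse_structured_reply(raw: str) -> dict:
    """Parse a model reply that is expected to be a JSON object.

    Raises ValueError if the reply is not valid JSON, is not an object,
    or is missing any of the required keys.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


# Example reply text, written by hand here rather than produced by a model.
reply = '{"query": "Gemma 3 context window", "rationale": "need exact size"}'
parsed = parse_structured_reply(reply)
print(parsed["query"])
```

Rejecting malformed replies at this boundary lets the rest of a workflow assume well-formed data, and a failed parse can simply trigger a retry of the prompt.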


Local Processing: A Focus on Privacy and Accessibility

One of the standout aspects of Gemma 3 is its ability to operate entirely locally. This feature is especially relevant for users who value data privacy and wish to maintain full control over their information. Smaller models, such as the 1B, 4B, and 12B variants, can run efficiently on high-end consumer laptops, while the 27B model is optimized for systems with GPU support.
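Why the smaller models fit on a laptop while the 27B model wants a GPU comes down largely to the memory needed for the weights. As a rough, back-of-the-envelope sketch: weight memory is approximately the parameter count times the bytes per weight. The function below assumes the nominal parameter counts from the model names and ignores the KV cache, activations, and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Nominal sizes taken from the model names; precision choices are illustrative.
for size in (1, 4, 12, 27):
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4)
    print(f"{size}B: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate the 27B weights alone take roughly 54 GB at 16-bit precision and about 13.5 GB when quantized to 4 bits, which is why quantization and dedicated GPU memory matter most for the largest variant.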

By eliminating the need for external servers, Gemma 3 reduces reliance on cloud-based solutions, offering a more secure and cost-effective alternative. This local processing capability not only enhances privacy but also ensures that users can access powerful AI tools without incurring the high costs associated with cloud services. For organizations and individuals alike, this represents a practical and scalable solution for AI-driven tasks.

Applications in Research and Productivity

Gemma 3 models are particularly well-suited for research and productivity applications, offering tools that streamline workflows and improve efficiency. Their iterative search capabilities allow users to refine queries and extract relevant information quickly, making them invaluable for deep research. Integration with search engines like Tavily and DuckDuckGo further enhances their utility, providing seamless access to a wealth of information.
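An iterative search workflow can be pictured as a loop: search, fold the results into a running summary, then ask for a follow-up query until nothing new is needed. The sketch below shows only that control flow. The `iterative_research` function and the three callables it takes are hypothetical; in a real assistant `search` would call a search engine and the other two would prompt the local model, whereas here they are simple stand-ins so the loop runs without a model or network.

```python
from typing import Callable, List


def iterative_research(
    topic: str,
    search: Callable[[str], List[str]],
    summarize: Callable[[str, List[str]], str],
    next_query: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Run a search -> summarize -> refine loop and return the final summary.

    search(query)              returns text snippets for a query
    summarize(summary, docs)   folds new snippets into the running summary
    next_query(topic, summary) proposes a follow-up query, or "" to stop
    """
    summary = ""
    query = topic
    for _ in range(max_rounds):
        snippets = search(query)
        summary = summarize(summary, snippets)
        query = next_query(topic, summary)
        if not query:
            break
    return summary


# Stand-in callables so the loop can be exercised without a model or network.
def fake_search(query: str) -> List[str]:
    return [f"snippet about {query}"]


def fake_summarize(summary: str, docs: List[str]) -> str:
    return (summary + " " + " ".join(docs)).strip()


def fake_next_query(topic: str, summary: str) -> str:
    # Ask one follow-up, then stop.
    return "" if "follow-up" in summary else f"{topic} follow-up"


print(iterative_research("Gemma 3", fake_search, fake_summarize, fake_next_query))
```

Passing the search and model steps in as callables keeps the loop independent of any particular search provider or runtime, and the `max_rounds` cap bounds the cost of a run.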

For professionals working with complex documents, Gemma 3 simplifies the process of summarization and structured output generation. Whether you’re creating concise reports, analyzing datasets, or generating valid JSON outputs, these models are designed to handle demanding tasks with ease. Their ability to adapt to diverse workflows makes them a versatile tool for both individual and organizational use.
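Even with a large context window, very long documents are often summarized in pieces. The following sketch packs paragraphs into chunks that stay under an estimated token budget. The `chunk_paragraphs` helper is hypothetical, and the four-characters-per-token ratio is a crude assumption rather than Gemma 3's actual tokenizer behavior; a real pipeline would count tokens with the model's tokenizer.

```python
from typing import List


def chunk_paragraphs(text: str, max_tokens: int, chars_per_token: int = 4) -> List[str]:
    """Greedily pack paragraphs into chunks under an estimated token budget.

    Tokens are estimated as characters / chars_per_token, a rough heuristic.
    A single paragraph larger than the budget is kept whole as its own chunk.
    """
    budget_chars = max_tokens * chars_per_token
    chunks: List[str] = []
    current: List[str] = []
    current_len = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        added = len(para) + (2 if current else 0)  # account for the "\n\n" joiner
        if current and current_len + added > budget_chars:
            # Current chunk is full: flush it and start a new one.
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
            added = len(para)
        current.append(para)
        current_len += added
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Each chunk can then be summarized separately and the partial summaries combined in a final pass.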

Ease of Integration and Practical Use

Setting up and integrating Gemma 3 models is straightforward, thanks to their compatibility with local runtimes such as Ollama. These tools simplify the configuration process, allowing users to customize the models to meet their specific requirements. Whether you’re an AI researcher exploring new methodologies or a professional seeking to enhance productivity, Gemma 3 offers a user-friendly platform for experimentation and application.
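As one possible setup, the sketch below assumes the model is served locally by Ollama on its default port and has been pulled under the tag `gemma3:4b`; both are assumptions about your environment, not something the article specifies. It uses only the Python standard library and asks the server for a single, non-streamed JSON reply.

```python
import json
import urllib.request

# Assumed: Ollama's default local endpoint for text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gemma3:4b") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request asking for JSON."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,   # one JSON response instead of a token stream
        "format": "json",  # ask the server to constrain output to valid JSON
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "gemma3:4b") -> str:
    """Send the request to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("Return a JSON object with a 'summary' key describing Gemma 3.")` requires the server to be running with the model already downloaded. Splitting request construction from sending makes the payload easy to inspect or test offline.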

The models’ adaptability extends to their deployment options. Smaller variants can be implemented on consumer-grade hardware, while the larger 27B model is designed for systems with advanced GPU capabilities. This flexibility ensures that users can harness the power of Gemma 3 regardless of their technical infrastructure.

Performance and Cost Efficiency

Gemma 3 models deliver a compelling combination of speed, accuracy, and cost-effectiveness. Their compact design and local processing capabilities make them a practical alternative to larger, resource-intensive AI systems. By offering high-quality outputs without the need for extensive computational resources, these models cater to users seeking reliable yet economical AI solutions.

For organizations looking to reduce operational costs, Gemma 3 provides a scalable option that balances performance with affordability. The ability to operate locally not only minimizes expenses but also ensures that users retain full control over their data, making these models an attractive choice for privacy-conscious applications.

Empowering Research and Innovation

Google’s Gemma 3 AI models provide a versatile platform for local AI applications, addressing a wide range of research and productivity needs. With their multimodal functionality, extensive language support, and structured output generation, they empower users to tackle complex tasks with confidence. By prioritizing privacy, accessibility, and cost efficiency, Gemma 3 offers a practical solution for individuals and organizations alike.

Whether you’re conducting in-depth research, exploring innovative applications, or streamlining workflows, Gemma 3 equips you with the tools to succeed. Its combination of advanced features and user-friendly design ensures that it remains a valuable asset in the evolving landscape of artificial intelligence.

Media Credit: LangChain
