March 31, 2025 by Marni Ellery

Marking a breakthrough in the field of brain-computer interfaces (BCIs), a team of researchers from UC Berkeley and UC San Francisco has unlocked a way to restore naturalistic speech for people with severe paralysis.

This work solves the long-standing challenge of latency in speech neuroprostheses, the time lag between when a subject attempts to speak and when sound is produced. Using recent advances in artificial intelligence-based modeling, the researchers developed a streaming method that synthesizes brain signals into audible speech in near-real time.

As reported today in Nature Neuroscience, this technology represents a critical step toward enabling communication for people who have lost the ability to speak. The study is supported by the National Institute on Deafness and Other Communication Disorders (NIDCD) of the National Institutes of Health.

“Our streaming approach brings the same rapid speech decoding capacity of devices like Alexa and Siri to neuroprostheses,” said Gopala Anumanchipalli, Robert E. and Beverly A. Brooks Assistant Professor of Electrical Engineering and Computer Sciences at UC Berkeley and co-principal investigator of the study. “Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming. The result is more naturalistic, fluent speech synthesis.”

“This new technology has tremendous potential for improving quality of life for people living with severe paralysis affecting speech,” said UCSF neurosurgeon Edward Chang, senior co-principal investigator of the study. Chang leads a clinical trial at UCSF that aims to develop speech neuroprosthesis technology using high-density electrode arrays that record neural activity directly from the brain surface. “It is exciting that the latest AI advances are greatly accelerating BCIs for practical real-world use in the near future,” he said.

The researchers also showed that their approach can work well with a variety of other brain sensing interfaces, including microelectrode arrays (MEAs) in which electrodes penetrate the brain’s surface, or non-invasive recordings (sEMG) that use sensors on the face to measure muscle activity.

“By demonstrating accurate brain-to-voice synthesis on other silent-speech datasets, we showed that this technique is not limited to one specific type of device,” said Kaylo Littlejohn, Ph.D. student at UC Berkeley’s Department of Electrical Engineering and Computer Sciences and co-lead author of the study. “The same algorithm can be used across different modalities provided a good signal is there.”

Decoding neural data into speech

According to study co-lead author Cheol Jun Cho, who is also a UC Berkeley Ph.D. student in electrical engineering and computer sciences, the neuroprosthesis works by sampling neural data from the motor cortex, the part of the brain that controls speech production, and then using AI to decode brain function into speech.

“We are essentially intercepting signals where the thought is translated into articulation and in the middle of that motor control,” he said. “So what we’re decoding is after a thought has happened, after we’ve decided what to say, after we’ve decided what words to use and how to move our vocal-tract muscles.”

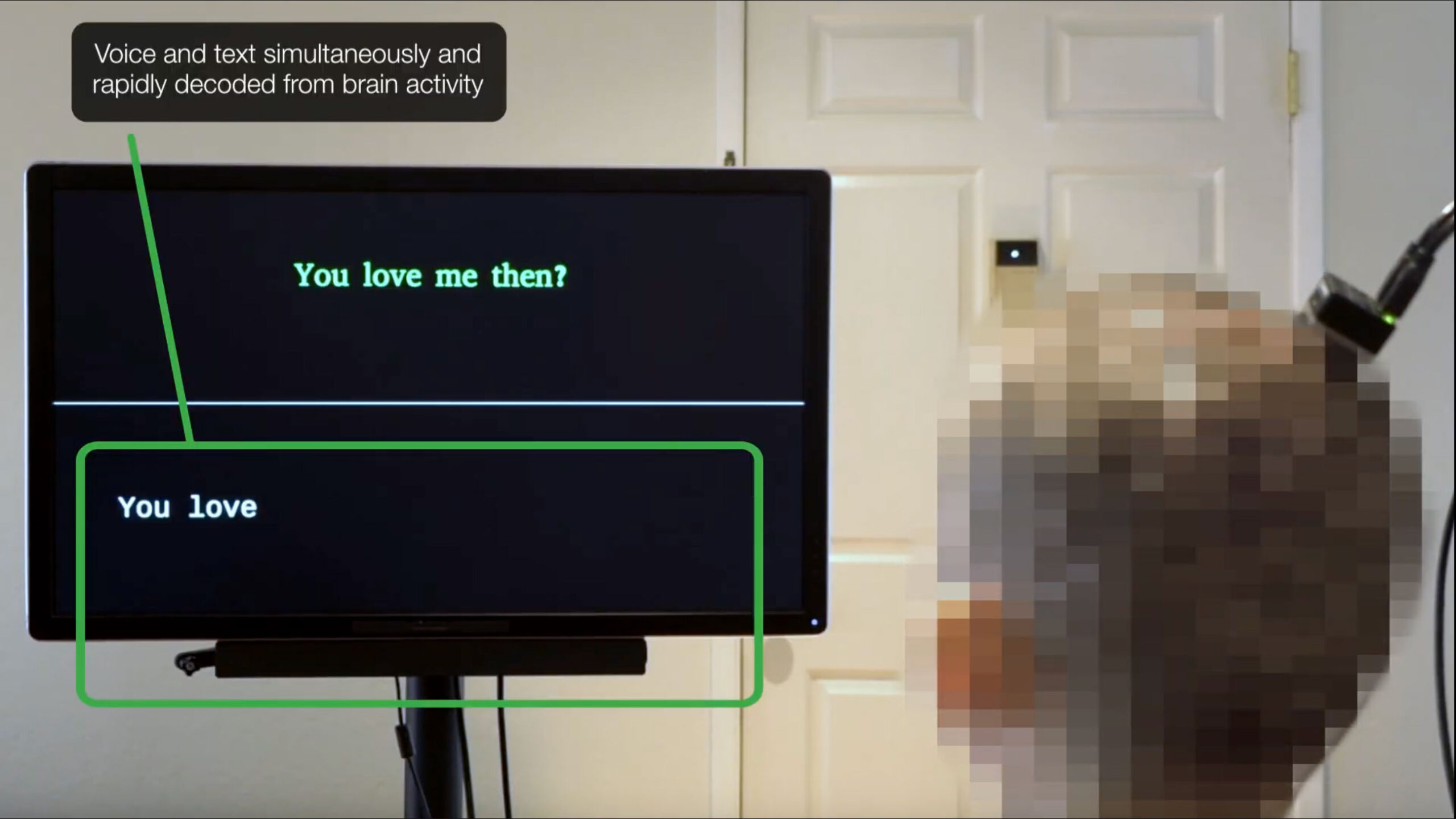

To collect the data needed to train their algorithm, the researchers first had Ann, their subject, look at a prompt on the screen — like the phrase: “Hey, how are you?” — and then silently attempt to speak that sentence.

“This gave us a mapping between the chunked windows of neural activity that she generates and the target sentence that she’s trying to say, without her needing to vocalize at any point,” said Littlejohn.

Because Ann does not have any residual vocalization, the researchers did not have target audio, or output, to which they could map the neural data, the input. They solved this challenge by using AI to fill in the missing details.

“We used a pretrained text-to-speech model to generate audio and simulate a target,” said Cho. “And we also used Ann’s pre-injury voice, so when we decode the output, it sounds more like her.”

Streaming speech in near real time

In the researchers' previous BCI study, decoding had a long latency: about an 8-second delay for a single sentence. With the new streaming approach, audible output can be generated in near-real time, as the subject is attempting to speak.
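As a rough illustration of the difference between waiting for a whole sentence and streaming, the sketch below splits a signal into fixed-size windows and emits output for each window as soon as it is available. It is not the study's model: `chunk_windows`, `stream_decode`, `toy_decoder` and the window size are hypothetical names and values chosen only for illustration.

```python
from typing import Callable, Iterator, List, Sequence


def chunk_windows(samples: Sequence[float], window: int) -> Iterator[List[float]]:
    """Yield consecutive fixed-size windows; a trailing partial window is dropped."""
    for start in range(0, len(samples) - window + 1, window):
        yield list(samples[start:start + window])


def stream_decode(
    samples: Sequence[float],
    window: int,
    decode_window: Callable[[List[float]], str],
) -> Iterator[str]:
    """Decode one window at a time, yielding each result immediately."""
    for chunk in chunk_windows(samples, window):
        yield decode_window(chunk)


def toy_decoder(chunk: List[float]) -> str:
    """Hypothetical stand-in for a trained decoder: thresholds the window mean."""
    return "hi" if sum(chunk) / len(chunk) > 0.5 else "lo"


if __name__ == "__main__":
    signal = [1, 1, 0, 0, 1, 0]
    # Each piece of output is printed as soon as its window has been decoded,
    # rather than after the whole signal has been processed.
    for piece in stream_decode(signal, window=2, decode_window=toy_decoder):
        print(piece)
```

Because `stream_decode` is a generator, the first output is available after a single window has been processed, which is the property that keeps the delay to first sound short.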

To measure latency, the researchers employed speech detection methods, which allowed them to identify the brain signals indicating the start of a speech attempt.

“We can see relative to that intent signal, within 1 second, we are getting the first sound out,” said Anumanchipalli. “And the device can continuously decode speech, so Ann can keep speaking without interruption.”
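The latency measurement described above can be sketched as a simple timestamp comparison. This is a minimal illustration and not the researchers' actual procedure; the function name `first_sound_latency` and the example timestamps are hypothetical.

```python
from typing import Optional, Sequence


def first_sound_latency(
    onset_s: float, audio_times_s: Sequence[float]
) -> Optional[float]:
    """Seconds from the detected speech-attempt onset to the first audio emitted after it.

    Returns None if no audio was emitted at or after the onset.
    """
    later = [t for t in audio_times_s if t >= onset_s]
    if not later:
        return None
    return min(later) - onset_s


if __name__ == "__main__":
    # Hypothetical timestamps (in seconds) for one speech attempt.
    onset = 2.0
    audio_emitted_at = [1.5, 2.75, 3.0]
    print(first_sound_latency(onset, audio_emitted_at))
```

Audio emitted before the detected onset is ignored, so the measure reflects only the delay between the intent signal and the first sound that follows it.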

This greater speed did not come at the cost of precision. The faster interface delivered the same high level of decoding accuracy as their previous, non-streaming approach.

“That’s promising to see,” said Littlejohn. “Previously, it was not known if intelligible speech could be streamed from the brain in real time.”

Anumanchipalli added that researchers don’t always know whether large-scale AI systems are learning and adapting, or simply pattern-matching and repeating parts of the training data. So the researchers also tested the real-time model’s ability to synthesize words that were not part of the training dataset vocabulary — in this case, 26 rare words taken from the NATO phonetic alphabet, such as “Alpha,” “Bravo,” “Charlie” and so on.

“We wanted to see if we could generalize to the unseen words and really decode Ann’s patterns of speaking,” he said. “We found that our model does this well, which shows that it is indeed learning the building blocks of sound or voice.”

Ann, who also participated in the 2023 study, shared with researchers how her experience with the new streaming synthesis approach compared to the earlier study’s text-to-speech decoding method.

“She conveyed that streaming synthesis was a more volitionally controlled modality,” said Anumanchipalli. “Hearing her own voice in near-real time increased her sense of embodiment.”

Future directions

This latest work brings researchers a step closer to achieving naturalistic speech with BCI devices, while laying the groundwork for future advances.

“This proof-of-concept framework is quite a breakthrough,” said Cho. “We are optimistic that we can now make advances at every level. On the engineering side, for example, we will continue to push the algorithm to see how we can generate speech better and faster.”

The researchers also remain focused on building expressivity into the output voice to reflect the changes in tone, pitch or loudness that occur during speech, such as when someone is excited.

“That’s ongoing work, to try to see how well we can actually decode these paralinguistic features from brain activity,” said Littlejohn. “This is a longstanding problem even in classical audio synthesis fields and would bridge the gap to full and complete naturalism.”

In addition to the NIDCD, support for this research was provided by the Japan Science and Technology Agency’s Moonshot Research and Development Program, the Joan and Sandy Weill Foundation, Susan and Bill Oberndorf, Ron Conway, Graham and Christina Spencer, the William K. Bowes, Jr. Foundation, the Rose Hills Innovator and UC Noyce Investigator programs, and the National Science Foundation.

View the study for additional details and a complete list of co-authors.
