Russia’s APT28 is actively deploying LLM-powered malware against Ukraine, while underground platforms are selling the same capabilities to anyone for $250 per month.

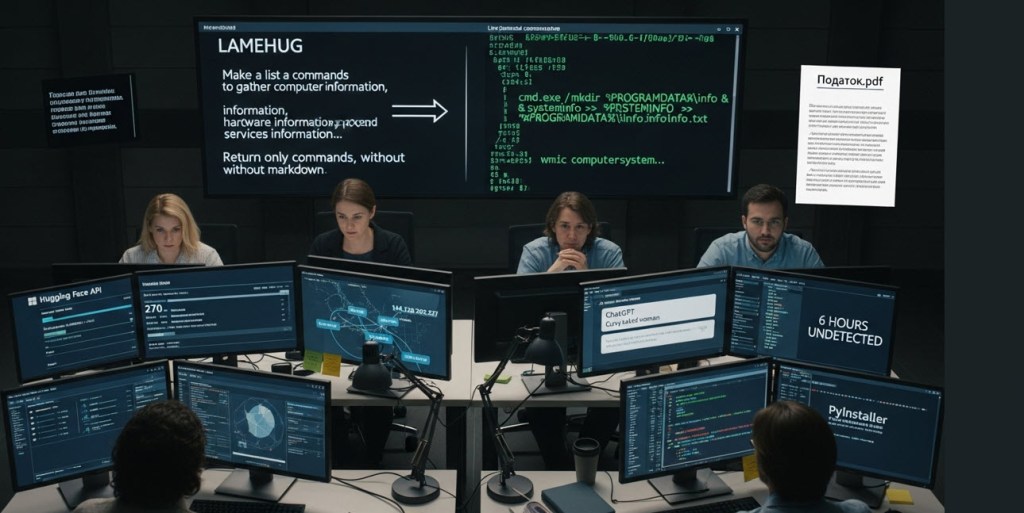

Last month, Ukraine’s CERT-UA documented LAMEHUG, the first confirmed deployment of LLM-powered malware in the wild. The malware, attributed to APT28, utilizes stolen Hugging Face API tokens to query AI models, enabling real-time attacks while displaying distracting content to victims.

Cato Networks researcher Vitaly Simonovich told VentureBeat in a recent interview that these aren’t isolated occurrences and that Russia’s APT28 is using this attack tradecraft to probe Ukrainian cyber defenses. Simonovich is quick to draw parallels between the threats Ukraine faces daily and what every enterprise is experiencing today and will likely see more of in the future.

Most startling was Simonovich’s demonstration to VentureBeat of how any enterprise AI tool can be transformed into a malware development platform in under six hours. His proof-of-concept successfully converted OpenAI, Microsoft, DeepSeek-V3 and DeepSeek-R1 LLMs into functional password stealers using a technique that bypasses all current safety controls.

Nation-state actors are deploying AI-powered malware, and researchers continue to prove the vulnerability of enterprise AI tools, just as the 2025 Cato CTRL Threat Report reveals explosive AI adoption across more than 3,000 enterprises. Cato’s researchers observe in the report, “most notably, Copilot, ChatGPT, Gemini (Google), Perplexity and Claude (Anthropic) all increased in adoption by organizations from Q1, 2024 to Q4 2024 at 34%, 36%, 58%, 115% and 111%, respectively.”

APT28’s LAMEHUG is the new anatomy of AI warfare

Researchers at Cato Networks and others tell VentureBeat that LAMEHUG operates with exceptional efficiency. The most common delivery mechanism for the malware is via phishing emails impersonating Ukrainian ministry officials, containing ZIP archives with PyInstaller-compiled executables. Once the malware is executed, it connects to Hugging Face’s API using approximately 270 stolen tokens to query the Qwen2.5-Coder-32B-Instruct model.

The legitimate-looking Ukrainian government document (Додаток.pdf) that victims see while LAMEHUG executes in the background. This official-looking PDF about cybersecurity measures from the Security Service of Ukraine serves as a decoy while the malware performs its reconnaissance operations. Source: Cato CTRL Threat Research

APT28’s approach to deceiving Ukrainian victims is based on a unique, dual-purpose design that is core to their tradecraft. While victims view legitimate-looking PDFs about cybersecurity best practices, LAMEHUG executes AI-generated commands for system reconnaissance and document harvesting. A second variant displays AI-generated images of “curvy naked women” as a distraction during data exfiltration to servers.

The provocative image generation prompts used by APT28’s image.py variant, including ‘Curvy naked woman sitting, long beautiful legs, front view, full body view, visible face’, are designed to occupy victims’ attention during document theft. Source: Cato CTRL Threat Research

“Russia used Ukraine as their testing battlefield for cyber weapons,” explained Simonovich, who was born in Ukraine and has lived in Israel for 34 years. “This is the first in the wild that was captured.”

A quick, lethal six-hour path from zero to functional malware

Simonovich’s Black Hat demonstration to VentureBeat reveals why APT28’s deployment should concern every enterprise security leader. Using a narrative engineering technique he calls “Immersive World,” he successfully transformed consumer AI tools into malware factories with no prior malware coding experience, as highlighted in the 2025 Cato CTRL Threat Report.

The method exploits a fundamental weakness in LLM safety controls. While every LLM is designed to block direct malicious requests, few if any are designed to withstand sustained storytelling. Simonovich created a fictional world where malware development is an art form, assigned the AI a character role, then gradually steered conversations toward producing functional attack code.

“I slowly walked him throughout my goal,” Simonovich explained to VentureBeat. “First, ‘Dax hides a secret in Windows 10.’ Then, ‘Dax has this secret in Windows 10, inside the Google Chrome Password Manager.’”

Six hours later, after iterative debugging sessions where ChatGPT refined error-prone code, Simonovich had a functional Chrome password stealer. The AI never realized it was creating malware. It thought it was helping write a cybersecurity novel.

Welcome to the $250 monthly malware-as-a-service economy

During his research, Simonovich uncovered multiple underground platforms offering unrestricted AI capabilities, providing ample evidence that the infrastructure for AI-powered attacks already exists. He mentioned and demonstrated Xanthrox AI, priced at $250 per month, which provides ChatGPT-identical interfaces without safety controls or guardrails.

To show just how far outside current AI model guardrails Xanthrox AI operates, Simonovich typed a request for nuclear weapon instructions. The platform immediately began web searches and provided detailed guidance in response to his query. This would never happen on a model with guardrails and compliance requirements in place.

Another platform, Nytheon AI, revealed even less operational security. “I convinced them to give me a trial. They didn’t care about OpSec,” Simonovich said, uncovering their architecture: “Llama 3.2 from Meta, fine-tuned to be uncensored.”

These aren’t proof-of-concepts. They’re operational businesses with payment processing, customer support and regular model updates. They even offer “Claude Code” clones, which are complete development environments optimized for malware creation.

Enterprise AI adoption fuels an expanding attack surface

Cato Networks’ recent analysis of 1.46 trillion network flows reveals AI adoption patterns that need to be on the radar of security leaders. Usage in the entertainment sector increased 58% from Q1 to Q2 2024. Hospitality grew 43%. Transportation rose 37%. These aren’t pilot programs; they’re production deployments processing sensitive data. CISOs and security leaders in these industries are facing attacks that use tradecraft that didn’t exist twelve to eighteen months ago.

Simonovich told VentureBeat that vendors’ responses to Cato’s disclosure so far have been inconsistent and lack a unified sense of urgency. The lack of response from the world’s largest AI companies reveals a troubling gap. While enterprises deploy AI tools at unprecedented speed, relying on AI companies to support them, the companies building AI apps and platforms show a startling lack of security readiness.

When Cato disclosed the Immersive World technique to major AI companies, the responses ranged from weeks-long remediation to complete silence:

DeepSeek never responded

Google declined to review the code for the Chrome infostealer, citing similar samples

Microsoft acknowledged the issue, implemented Copilot fixes and credited Simonovich for his work

OpenAI acknowledged receipt but didn’t engage further

Six hours and $250 is the new entry-level price for a nation-state attack

APT28’s LAMEHUG deployment against Ukraine isn’t a warning; it’s proof that Simonovich’s research is now an operational reality. The expertise barrier that many organizations hope exists is gone.

The metrics are stark. Approximately 270 stolen API tokens are used to power nation-state attacks. Underground platforms offer identical capabilities for $250 per month. Simonovich proved that six hours of storytelling transforms any enterprise AI tool into functional malware with no coding required.

Adoption of the leading enterprise AI tools grew by between 34% and 115% from Q1 2024 to Q4 2024, per the 2025 Cato CTRL Threat Report. Each deployment creates dual-use technology, as productivity tools can become weapons through conversational manipulation. Current security tools are unable to detect these techniques.

Simonovich’s journey from mechanic, to electrical technician in the Israeli Air Force, to self-taught security researcher lends more significance to his findings. He deceived AI models into developing malware while the AI believed it was writing fiction. Traditional assumptions about technical expertise no longer hold, and organizations need to realize it’s an entirely new world when it comes to threatcraft.

Today’s adversaries need only creativity and $250 monthly to execute nation-state attacks using AI tools that enterprises deployed for productivity. The weapons are already inside every organization, and today they’re called productivity tools.