Generative AI applications seem simple—invoke a foundation model (FM) with the right context to generate a response. In reality, it’s a much more complex system involving workflows that invoke FMs, tools, and APIs and that use domain-specific data to ground responses with patterns such as Retrieval Augmented Generation (RAG) and workflows involving agents. Safety controls need to be applied to input and output to prevent harmful content, and foundational elements have to be established such as monitoring, automation, and continuous integration and delivery (CI/CD), which are needed to operationalize these systems in production.

Many organizations have siloed generative AI initiatives, with development managed independently by various departments and lines of business (LOBs). This often results in fragmented efforts, redundant processes, and inconsistent governance frameworks and policies. Inefficiencies in resource allocation and utilization drive up costs.

To address these challenges, organizations are increasingly adopting a unified approach in which foundational building blocks are offered as services to LOBs and teams for developing generative AI applications. We refer to this set of building blocks as a generative AI foundation; some organizations use the term “generative AI platform” instead. A generative AI foundation offers core services, reusable components, and blueprints, while applying standardized security and governance policies. This approach facilitates centralized governance and operations, and it can be adapted to an organization’s operating model: centralized, decentralized, or federated.

This approach gives organizations several key benefits: streamlined development, the ability to scale generative AI development and operations across the organization, mitigated risk because central management simplifies the implementation of governance frameworks, optimized costs through reuse, and accelerated innovation because teams can quickly build and ship use cases.

In this post, we give an overview of a well-established generative AI foundation, dive into its components, and present an end-to-end perspective. We look at different operating models and explore how such a foundation can operate within those boundaries. Lastly, we present a maturity model that helps enterprises assess their evolution path.

Overview

Laying out a strong generative AI foundation includes offering a comprehensive set of components to support the end-to-end generative AI application lifecycle. The following diagram illustrates these components.

In this section, we discuss the key components in more detail.

Hub

At the core of the foundation are multiple hubs that include:

Model hub – Provides access to enterprise FMs. As a system matures, a broad range of off-the-shelf or customized models can be supported. Most organizations conduct thorough security and legal reviews before models are approved for use. The model hub acts as a central place to access approved models.

Tool/Agent hub – Enables discovery of, and connectivity to, a catalog of tools and agents. This could be enabled through protocols such as Model Context Protocol (MCP) and Agent2Agent (A2A).

Gateway

A model gateway offers secure access to the model hub through standardized APIs. The gateway is built as a multi-tenant component to provide isolation across the teams and business units that are onboarded. Key features of a gateway include:

Access and authorization – The gateway facilitates authentication, authorization, and secure communication between users and the system. It helps verify that only authorized users can use specific models, and can also enforce fine-grained access control.

Unified API – The gateway provides unified APIs to models and features such as guardrails and evaluation. It can also support automated prompt translation to different prompt templates across different models.

Rate limiting and throttling – The gateway handles API requests efficiently by controlling the number of requests allowed in a given time period, preventing overload and managing traffic spikes.

Cost attribution – The gateway monitors usage across the organization and allocates costs to the teams. Because these models can be resource-intensive, tracking model usage helps allocate costs properly, optimize resources, and avoid overspending.

Scaling and load balancing – The gateway can handle load balancing across different servers, model instances, or AWS Regions so that applications remain responsive.

Guardrails – The gateway applies content filters to requests and responses through guardrails and helps adhere to organizational security and compliance standards.

Caching – The cache layer stores prompts and responses that can help improve performance and reduce costs.
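
As an illustration of the rate limiting and throttling feature in the preceding list, the following is a minimal sketch of a per-tenant token bucket. The class names, the `tenant_id` key, and the rate and capacity values are assumptions made for this example; a production gateway would typically keep this state in a shared store rather than in process memory.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` requests and refills at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = None  # timestamp of the last call, unknown until first use

    def allow(self, now):
        # Refill in proportion to the time elapsed since the last call.
        if self.updated is not None:
            elapsed = max(0.0, now - self.updated)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class TenantRateLimiter:
    """Keeps one bucket per tenant so that one tenant cannot exhaust another's quota."""

    def __init__(self, rate_per_sec, capacity):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.buckets = {}

    def allow(self, tenant_id, now=None):
        now = time.monotonic() if now is None else now
        if tenant_id not in self.buckets:
            self.buckets[tenant_id] = TokenBucket(self.rate, self.capacity)
        return self.buckets[tenant_id].allow(now)


limiter = TenantRateLimiter(rate_per_sec=1.0, capacity=2)
burst = [limiter.allow("lob-a", now=0.0) for _ in range(3)]
print(burst)  # the third request in the same instant is throttled
```

Passing `now` explicitly keeps the sketch deterministic for testing; in normal use the limiter falls back to `time.monotonic()`.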

The AWS Solutions Library offers solution guidance to set up a multi-provider generative AI gateway. The solution uses an open source LiteLLM proxy wrapped in a container that can be deployed on Amazon Elastic Container Service (Amazon ECS) or Amazon Elastic Kubernetes Service (Amazon EKS). This offers organizations a building block to develop an enterprise-wide model hub and gateway. The generative AI foundation can start with the gateway and offer additional features as it matures.

Gateway patterns for the tool/agent hub are still evolving. The model gateway can be a universal gateway to all the hubs, or individual hubs could have their own purpose-built gateways.

Orchestration

Orchestration encapsulates generative AI workflows, which are usually multi-step processes. The steps could involve invoking models, integrating data sources, using tools, or calling APIs. Workflows can be deterministic, where they are created as predefined templates. An example of a deterministic flow is the RAG pattern. In this pattern, a search engine retrieves relevant sources, which are added to the prompt context before the model generates a response to the user prompt. This aims to reduce hallucination and encourage the generation of responses grounded in verified content.
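
The deterministic RAG flow can be sketched in a few lines. This is an illustrative example only: the word-overlap `retrieve` function stands in for a real search engine or vector database, and `invoke_model` is a hypothetical callable that stands in for a call to an FM through the gateway.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many words they share with the query."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_terms & set(doc.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, sources):
    """Augment the user query with the retrieved sources."""
    context = "\n".join(f"[{i + 1}] {s}" for i, s in enumerate(sources))
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(query, documents, invoke_model):
    """Fixed three-step flow: retrieve, augment, generate."""
    sources = retrieve(query, documents)
    return invoke_model(build_prompt(query, sources))


docs = [
    "The gateway enforces rate limiting per tenant",
    "Vector databases store embeddings",
    "Lunch is served at noon",
]
# A stub model that echoes its prompt, so the augmented context is visible.
print(answer("how does the gateway handle rate limiting", docs, lambda p: p))
```

Because every step is fixed in advance, the same template can be offered by the foundation and reused across teams with different data sources.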

Alternatively, complex workflows can be designed using agents where a large language model (LLM) decides the flow by planning and reasoning. During reasoning, the agent can decide when to continue thinking, call external tools (such as APIs or search engines), or submit its final response. Multi-agent orchestration is used to tackle even more complex problem domains by defining multiple specialized subagents, which can interact with each other to decompose and complete a task requiring different knowledge or skills. A generative AI foundation can provide primitives such as models, vector databases, and guardrails as a service and higher-level services for defining AI workflows, agents and multi-agents, tools, and also a catalog to encourage reuse.
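
To make the contrast with a deterministic flow concrete, the following is a minimal sketch of an agent loop. The `decide` function is a hypothetical stand-in for the LLM call that plans the next step, and the action format (`think`, `tool`, `final`) is an assumption chosen for this example rather than any particular framework's API.

```python
def run_agent(task, decide, tools, max_steps=5):
    """Loop until the decision function returns a final answer or the step budget runs out."""
    history = [("task", task)]
    for _ in range(max_steps):
        action = decide(history)  # in practice, an LLM call that sees the history
        if action["type"] == "final":
            return action["content"], history
        if action["type"] == "tool":
            result = tools[action["name"]](**action["args"])
            history.append(("tool:" + action["name"], result))
        else:  # "think": record the intermediate reasoning and continue
            history.append(("thought", action["content"]))
    return None, history  # no final answer within the step budget


def scripted_decide(history):
    """Hypothetical policy: call the calculator once, then answer."""
    tool_results = [value for kind, value in history if kind.startswith("tool:")]
    if not tool_results:
        return {"type": "tool", "name": "add", "args": {"a": 2, "b": 3}}
    return {"type": "final", "content": f"The sum is {tool_results[-1]}"}


tools = {"add": lambda a, b: a + b}
result, trace = run_agent("add 2 and 3", scripted_decide, tools)
print(result)
```

The `max_steps` budget is one simple control a foundation can standardize so that an agent cannot loop indefinitely.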

Model customization

A key foundational capability that can be offered is model customization, including the following techniques:

Continued pre-training – Domain-adaptive pre-training, where existing models are further trained on domain-specific data. This approach can offer a balance between customization depth and resource requirements, necessitating fewer resources than training from scratch.

Fine-tuning – Model adaptation techniques such as instruction fine-tuning and supervised fine-tuning to learn task-specific capabilities. Though less intensive than pre-training, this approach still requires significant computational resources.

Alignment – Training models with user-generated data using techniques such as Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO).

For the preceding techniques, the foundation should provide scalable infrastructure for data storage and training, a mechanism to orchestrate tuning and training pipelines, a model registry to centrally register and govern the model, and infrastructure to host the model.
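
As a small worked example of one alignment technique named above, the following sketch computes the commonly published DPO objective for a single preference pair. The inputs are summed log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under the model being trained and under a frozen reference model; the function name, argument names, and the default `beta` are assumptions for illustration.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair: -log(sigmoid(beta * margin))."""
    # How much more the policy favors each response than the reference does.
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = chosen_gain - rejected_gain
    # -log(sigmoid(x)) is equal to log(1 + exp(-x)).
    return math.log1p(math.exp(-beta * margin))


# Policy identical to the reference: zero margin, so the loss is log(2).
print(dpo_loss(-2.0, -2.0, -2.0, -2.0))
# Policy already prefers the chosen response: the loss drops below log(2).
print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

In practice this is computed in batches inside a training framework; the point here is only that the training signal comes from preference pairs rather than from labeled targets.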

Data management

Organizations typically have multiple data sources, and data from these sources is mostly aggregated in data lakes and data warehouses. Common datasets can be made available as a foundational offering to different teams. The following are additional foundational components that can be offered:

Integration with enterprise data sources and external sources to bring in the data needed for patterns such as RAG or model customization

Fully managed or pre-built templates and blueprints for RAG that include a choice of vector databases, chunking data, converting data into embeddings, and indexing them in vector databases

Data processing pipelines for model customization, including tools to create labeled and synthetic datasets

Tools to catalog data, making it quick to search, discover, access, and govern data
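
The chunking, embedding, and indexing steps that a RAG blueprint packages can be sketched as follows. This is a toy example under stated assumptions: `embed` is a hashed bag-of-words placeholder, not a real embedding model, and the in-memory list stands in for a vector database.

```python
import math
import zlib


def chunk_text(text, chunk_size=5, overlap=2):
    """Split text into word windows of `chunk_size` that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text, dims=16):
    """Placeholder embedding: hashed word counts, normalized to unit length."""
    vec = [0.0] * dims
    for word in text.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(documents, chunk_size=5, overlap=2):
    """Chunk every document, embed each chunk, and collect the index entries."""
    index = []
    for doc_id, text in documents.items():
        for chunk in chunk_text(text, chunk_size, overlap):
            index.append({"doc_id": doc_id, "text": chunk, "vector": embed(chunk)})
    return index


index = build_index({"doc-1": "a b c d e f g h"})
print([entry["text"] for entry in index])
```

Chunk size and overlap are the kind of parameters a pre-built template can expose as configuration, so teams can tune retrieval quality without rebuilding the pipeline.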

GenAIOps

Generative AI operations (GenAIOps) encompasses overarching practices of managing and automating operations of generative AI systems. The following diagram illustrates its components.

Fundamentally, GenAIOps falls into two broad categories:

Operationalizing applications that consume FMs – Although operationalizing RAG or agentic applications shares core principles with DevOps, it requires additional, AI-specific considerations and practices. RAGOps addresses operational practices for managing the lifecycle of RAG systems, which combine generative models with information retrieval mechanisms. Considerations here are choice of vector database, optimizing indexing pipelines, and retrieval strategies. AgentOps helps facilitate efficient operation of autonomous agentic systems. The key concerns here are tool management, agent coordination using state machines, and short-term and long-term memory management.

Operationalizing FM training and tuning – ModelOps is a category under GenAIOps that focuses on governance and lifecycle management of models, including model selection, continuous tuning and training of models, experiment tracking, a central model registry, prompt management and evaluation, model deployment, and model governance. FMOps, which is operationalizing FMs, and LLMOps, which is specifically operationalizing LLMs, fall under this category.

In addition, operationalization involves implementing CI/CD processes for automating deployments, integrating evaluation and prompt management systems, and collecting logs, traces, and metrics to optimize operations.

Observability

Observability for generative AI needs to account for the probabilistic nature of these systems—models might hallucinate, responses can be subjective, and troubleshooting is harder. Like other software systems, logs, metrics, and traces should be collected and centrally aggregated. There should be tools to generate insights out of this data that can be used to optimize the applications even further. In addition to component-level monitoring, as generative AI applications mature, deeper observability should be implemented, such as instrumenting traces, collecting real-world feedback, and looping it back to improve models and systems. Evaluation should be offered as a core foundational component, and this includes automated and human evaluation and LLM-as-a-judge pipelines along with storage of ground truth data.
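
An evaluation pipeline with stored ground truth can be sketched as a small harness. In this illustrative example, `generate` stands in for the application under test and `judge` for an LLM-as-a-judge call; the token-overlap judge, the record fields, and the pass threshold are assumptions made so the sketch runs without a model.

```python
def token_overlap_judge(prompt, response, reference):
    """Placeholder judge: fraction of reference tokens that appear in the response."""
    ref_tokens = set(reference.lower().split())
    if not ref_tokens:
        return 0.0
    return len(ref_tokens & set(response.lower().split())) / len(ref_tokens)


def evaluate(dataset, generate, judge, threshold=0.5):
    """Score every example against its ground truth and summarize the pass rate."""
    records = []
    for example in dataset:
        response = generate(example["prompt"])
        score = judge(example["prompt"], response, example["reference"])
        records.append({
            "prompt": example["prompt"],
            "response": response,
            "score": score,
            "passed": score >= threshold,
        })
    pass_rate = sum(r["passed"] for r in records) / len(records) if records else 0.0
    return {"pass_rate": pass_rate, "records": records}


ground_truth = [
    {"prompt": "What is the capital of France?", "reference": "Paris"},
    {"prompt": "What is 2 + 2?", "reference": "4"},
]


def stub_app(prompt):
    # Stand-in for the application under test: one right answer, one wrong.
    return "Paris is the capital" if "France" in prompt else "5"


report = evaluate(ground_truth, stub_app, token_overlap_judge)
print(report["pass_rate"])
```

Keeping the per-example records alongside the summary makes it possible to feed failures back into prompt or model improvements.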

Responsible AI

To balance the benefits of generative AI with the challenges that arise from it, it’s important to incorporate tools, techniques, and mechanisms that align to a broad set of responsible AI dimensions. At AWS, these Responsible AI dimensions include privacy and security, safety, transparency, explainability, veracity and robustness, fairness, controllability, and governance. Each organization would have its own governing set of responsible AI dimensions that can be centrally incorporated as best practices through the generative AI foundation.

Security and privacy

Communication should be over TLS, and private network access should be supported. User access should be secure, and the system should support fine-grained access control. Rate limiting and throttling should be in place to help prevent abuse. For data security, data should be encrypted at rest and in transit, and tenant data isolation patterns should be implemented. Embeddings stored in vector stores should be encrypted. For model security, custom model weights should be encrypted and isolated for different tenants. Guardrails should be applied to input and output to filter topics and harmful content. Telemetry should be collected for actions that users take on the central system. Data quality is the responsibility of the consuming applications or data producers. The consuming applications should also integrate observability.

Governance

The two key areas of governance are model and data:

Model governance – Monitor models for performance, robustness, and fairness. Model versions should be managed centrally in a model registry. Appropriate permissions and policies should be in place for model deployments. Access controls to models should be established.

Data governance – Apply fine-grained access control to data managed by the system, including training data, vector stores, evaluation data, prompt templates, and workflow and agent definitions. Establish data privacy policies for the data managed by the system, such as handling sensitive data (for example, redacting personally identifiable information (PII)), protecting prompts and data, and not using them to improve models.
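
PII redaction, mentioned above as an example of a data privacy policy, can be sketched with simple pattern matching. The two regular expressions and the placeholder labels are assumptions for illustration; they catch only obvious email addresses and one phone number format, and a managed guardrail or dedicated PII detection service would cover far more cases.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact_pii(text):
    """Replace each match with a label and report what was found."""
    findings = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings.append((label, count))
    return text, findings


clean, found = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
print(clean)
print(found)
```

Returning the findings separately from the redacted text lets the central system log that a redaction happened without logging the sensitive value itself.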

Tools landscape

A variety of AWS services, AWS partner solutions, and third-party tools and frameworks are available to architect a comprehensive generative AI foundation. The following figure might not cover the entire gamut of tools, but we have created a landscape based on our experience with these tools.

Operational boundaries

One of the challenges to solve is determining who owns the foundational components and how they operate within the organization’s operating model. Let’s look at three common operating models:

Centralized – Operations are centralized to one team. Some organizations refer to this team as the platform team or platform engineering team. In this model, foundational components are managed by a central team and offered to LOBs and enterprise teams.

Decentralized – LOBs and teams build their respective systems and operate independently. The central team takes on the role of a Center of Excellence (COE) that defines best practices, standards, and governance frameworks. Logs and metrics can be aggregated in a central place.

Federated – A more flexible model is a hybrid of the two. A central team manages the foundation that offers building blocks for model access, evaluation, guardrails, central logs, and metrics aggregation to teams. LOBs and teams use the foundational components but also build and manage their own components as necessary.

Multi-tenant architecture

Irrespective of the operating model, it’s important to define how tenants are isolated and managed within the system. The multi-tenant pattern depends on a number of factors:

Tenant and data isolation – Data ownership is critical for building generative AI systems. A system should establish clear policies on data ownership and access rights, making sure data is accessible only to authorized users. Tenant data should be securely isolated from others to maintain privacy and confidentiality. This can be through physical isolation of data, for example, setting up isolated vector databases for each tenant for a RAG application, or by logical separation, for example, using different indexes within a shared database. Role-based access control should be set up to make sure users within a tenant can access resources and data specific to their organization.

Scalability and performance – Noisy neighbors can be a real problem, where one tenant is extremely chatty compared to others. Proper resource allocation according to tenant needs should be established. Containerization of workloads can be a good strategy to isolate and scale tenants individually. This also ties into the deployment strategy described later in this section, by means of which a chatty tenant can be completely isolated from others.

Deployment strategy – If strict isolation is required for use cases, then each tenant can have dedicated instances of compute, storage, and model access. This means gateway, data pipelines, data storage, training infrastructure, and other components are deployed on an isolated infrastructure per tenant. For tenants who don’t need strict isolation, shared infrastructure can be used and partitioning of resources can be achieved by a tenant identifier. A hybrid model can also be used, where the core foundation is deployed on shared infrastructure and specific components are isolated by tenant. The following diagram illustrates an example architecture.

Observability – A mature generative AI system should provide detailed visibility into operations at both the central and tenant-specific level. The foundation offers a central place for collecting logs, metrics, and traces, so you can set up reporting based on tenant needs.

Cost management – A metered billing system should be set up based on usage. This requires establishing cost tracking based on resource usage of different components plus model inference costs. Model inference costs vary by model and by provider, but there should be a common mechanism for allocating costs per tenant. System administrators should be able to track and monitor usage across teams.
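
A common mechanism for allocating inference costs per tenant can be sketched as an aggregation over usage records. The model names, the prices, and the record fields below are hypothetical and chosen only for illustration; real prices vary by model and provider.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K = {
    "model-a": {"input": 0.5, "output": 1.5},
    "model-b": {"input": 0.25, "output": 1.0},
}


def cost_by_tenant(usage_records):
    """Sum inference cost per tenant from metered token counts."""
    totals = defaultdict(float)
    for record in usage_records:
        price = PRICE_PER_1K[record["model"]]
        input_cost = record["input_tokens"] / 1000 * price["input"]
        output_cost = record["output_tokens"] / 1000 * price["output"]
        totals[record["tenant_id"]] += input_cost + output_cost
    return dict(totals)


usage = [
    {"tenant_id": "lob-1", "model": "model-a", "input_tokens": 2000, "output_tokens": 1000},
    {"tenant_id": "lob-1", "model": "model-b", "input_tokens": 4000, "output_tokens": 0},
    {"tenant_id": "lob-2", "model": "model-b", "input_tokens": 1000, "output_tokens": 2000},
]
costs = cost_by_tenant(usage)
print(costs)
```

The same records, tagged with a tenant identifier at the gateway, can also drive usage dashboards for system administrators.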

Let’s break this down by taking a RAG application as an example. In the hybrid model, the tenant deployment contains instances of a vector database that stores the embeddings, which supports strict data isolation requirements. The deployment additionally includes the application layer, which contains the frontend code and the orchestration logic that takes the user query, augments the prompt with context from the vector database, and invokes FMs on the central system. The foundational components, which offer services such as evaluation and guardrails that applications consume to become production-ready, are in a separate shared deployment. Logs, metrics, and traces from the applications can be fed into a central aggregation place.

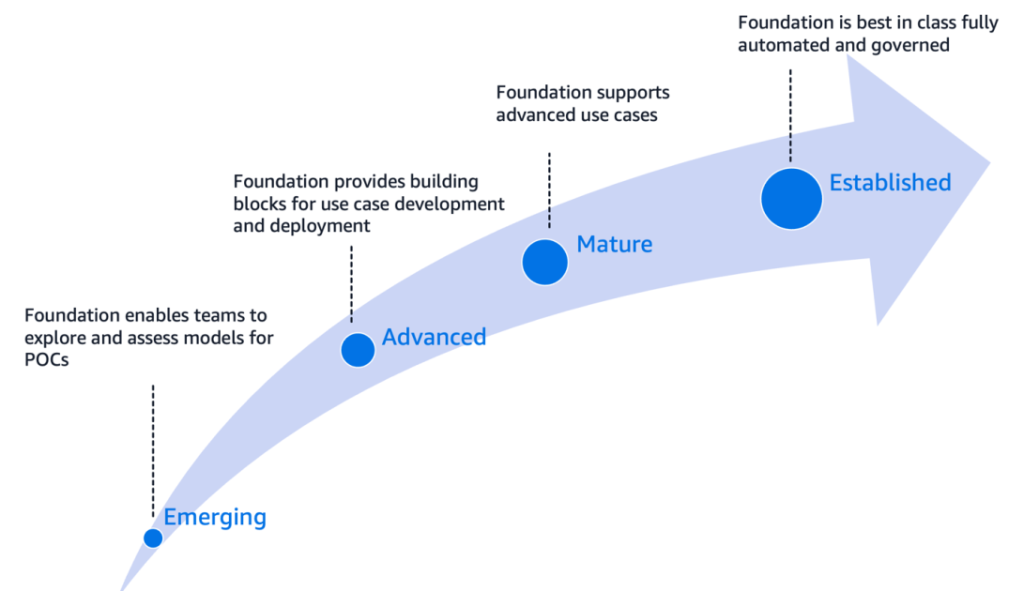
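
For tenants that share infrastructure, the logical separation described in this section can be sketched as a store partitioned by tenant identifier. The `TenantScopedStore` class and its substring search are hypothetical simplifications; a real deployment would rely on separate indexes or access policies in the chosen vector database.

```python
class TenantScopedStore:
    """Shared store in which every read and write is scoped to one tenant's partition."""

    def __init__(self):
        self._partitions = {}

    def add(self, tenant_id, doc_id, text):
        self._partitions.setdefault(tenant_id, {})[doc_id] = text

    def search(self, tenant_id, term):
        # Only the caller's partition is ever consulted.
        partition = self._partitions.get(tenant_id, {})
        return sorted(
            doc_id for doc_id, text in partition.items()
            if term.lower() in text.lower()
        )


store = TenantScopedStore()
store.add("lob-1", "policy-1", "Claims policy for retail customers")
store.add("lob-2", "policy-9", "Claims policy for corporate customers")
print(store.search("lob-1", "claims"))
```

Because the tenant identifier is a required argument of every call, a query cannot reach another tenant's documents by omission.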

Generative AI foundation maturity model

We have defined a maturity model to track the evolution of the generative AI foundation across different stages of adoption. The maturity model can be used to assess where you are in the development journey and plan for expansion. We define the curve along four stages of adoption: emerging, advanced, mature, and established.

The details for each stage are as follows:

Emerging – The foundation offers a playground for model exploration and assessment. Teams are able to develop proofs of concept using enterprise approved models.

Advanced – The foundation facilitates first production use cases. Multiple environments exist for development, testing, and production deployment. Monitoring and alerts are established.

Mature – Multiple teams are using the foundation and are able to develop complex use cases. CI/CD and infrastructure as code (IaC) practices accelerate the rollout of reusable components. Deeper observability such as tracing is established.

Established – A best-in-class system that is fully automated, governed, and operating at scale, with responsible AI practices in place. The foundation enables diverse use cases, and most of the enterprise teams are onboarded on it.

The evolution might not be exactly linear along the curve in terms of specific capabilities, but certain key performance indicators can be used to evaluate the adoption and growth.

Conclusion

Establishing a comprehensive generative AI foundation can be a critical step in harnessing the power of AI at scale. Enterprise AI development brings unique challenges, including agility, reliability, governance, scale, and collaboration. Therefore, a well-constructed foundation with the right components, adapted to the operating model of the business, aids in building and scaling generative AI applications across the enterprise.

The rapidly evolving generative AI landscape means there might be cutting-edge tools we haven’t covered under the tools landscape. If you’re using or aware of state-of-the-art solutions that align with the foundational components, we encourage you to share them in the comments section.

Our team is dedicated to helping customers solve challenges in generative AI development at scale—whether it’s architecting a generative AI foundation, setting up operational best practices, or implementing responsible AI practices. Leave us a comment and we will be glad to collaborate.

About the authors

Chaitra Mathur is a GenAI Specialist Solutions Architect at AWS. She works with customers across industries in building scalable generative AI platforms and operationalizing them. Throughout her career, she has shared her expertise at numerous conferences and has authored several blogs in the Machine Learning and Generative AI domains.

Dr. Alessandro Cerè is a GenAI Evaluation Specialist and Solutions Architect at AWS. He assists customers across industries and regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations. Bringing a unique perspective to the field of AI, Alessandro has a background in quantum physics and research experience in quantum communications and quantum memories. In his spare time, he pursues his passion for landscape and underwater photography.

Aamna Najmi is a GenAI and Data Specialist at AWS. She assists customers across industries and regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations, bringing a unique perspective of modern data strategies to complement the field of AI. In her spare time, she pursues her passion of experimenting with food and discovering new places.

Dr. Andrew Kane is the WW Tech Leader for Security and Compliance for AWS Generative AI Services, leading the delivery of under-the-hood technical assets for customers around security, as well as working with CISOs around the adoption of generative AI services within their organizations. Before joining AWS at the beginning of 2015, Andrew spent two decades working in the fields of signal processing, financial payments systems, weapons tracking, and editorial and publishing systems. He is a keen karate enthusiast (just one belt away from Black Belt) and is also an avid home-brewer, using automated brewing hardware and other IoT sensors. He was the legal licensee in his ancient (AD 1468) English countryside village pub until early 2020.

Bharathi Srinivasan is a Generative AI Data Scientist at the AWS Worldwide Specialist Organization. She works on developing solutions for Responsible AI, focusing on algorithmic fairness, veracity of large language models, and explainability. Bharathi guides internal teams and AWS customers on their responsible AI journey. She has presented her work at various learning conferences.

Denis V. Batalov is a 17-year Amazon veteran with a PhD in Machine Learning. Denis worked on such exciting projects as Search Inside the Book, Amazon Mobile apps, and Kindle Direct Publishing. Since 2013, he has helped AWS customers adopt AI/ML technology as a Solutions Architect. Currently, Denis is a Worldwide Tech Leader for AI/ML responsible for the functioning of AWS ML Specialist Solutions Architects globally. Denis is a frequent public speaker; you can follow him on Twitter @dbatalov.

Nick McCarthy is a Generative AI Specialist at AWS. He has worked with AWS clients across various industries, including healthcare, finance, sports, telecoms, and energy, to accelerate their business outcomes through the use of AI/ML. Outside of work, he loves to spend time traveling, trying new cuisines, and reading about science and technology. Nick has a bachelor’s degree in Astrophysics and a master’s degree in Machine Learning.

Alex Thewsey is a Generative AI Specialist Solutions Architect at AWS, based in Singapore. Alex helps customers across Southeast Asia to design and implement solutions with ML and Generative AI. He also enjoys karting, working with open source projects, and trying to keep up with new ML research.

Willie Lee is a Senior Tech PM for the AWS worldwide specialists team focusing on GenAI. He is passionate about machine learning and the many ways it can impact our lives, especially in the area of language comprehension.
