My April Fools’ joke, that I had a sneak preview of GPT-5, obviously went too far for some people (though hundreds wrote to me to say that they loved it), and I apologize to those who didn’t get the joke. At least a dozen people thought it was real, despite my efforts to hint at the end by emphasizing the date.

[As to reality, Sam just said today that GPT-5 is coming in “months”; we will see.]

All that said, misery loves company, and it turns out that I wasn’t the only one who mocked generative AI on April 1. And therein lies a tale.

It seems that the well-known mathematician Timothy Gowers celebrated the day in much the same way as I did, by making a ridiculous April 1 claim about generative AI, with an equally straight face:

Dubnovy-Blazen was an inside joke (any guesses? answer below). The problem wasn’t real; the whole tweet was a gag.

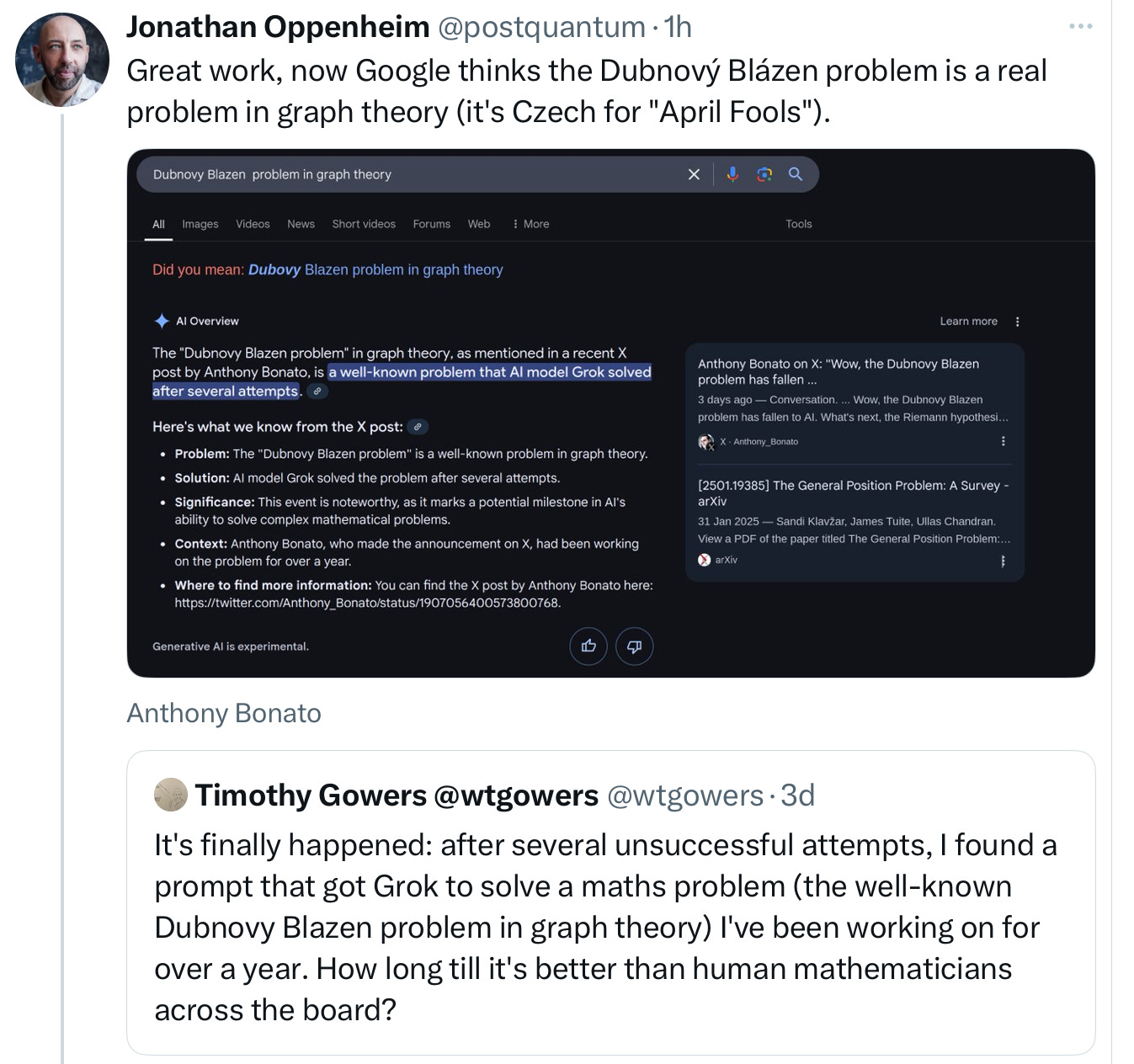

You won’t believe what happened next. (Actually, if you’ve been paying attention, you will.) The physicist Jonathan Oppenheim was the first to see the consequences:

Like many good tales, this one has a moral: as Oppenheim just said to me on X, “One thing which is remarkable is how uncritical this Google AI is, even though it’s meant to aggregate search results. A human would instantly be suspicious of the fact that all the results for this important problem are from the last few days (and all on X).”

Humans are (usually, if well-motivated) smart enough to sort things like jokes and fiction from reality, but current AI doesn’t have a clue. All it does is absorb and mimic data, without ever reflecting critically on it. A well-motivated or skeptical human could do background reading and try to see if the claim seems reasonable. An LLM just spits back (sometimes in transmogrified form) what it has read.

God save us.

Gary Marcus has been warning about the perils of LLM-induced enshittification since 2022.

p.s. for a more serious look at the latest actual LLM math results, watch this space tomorrow.