As more users start relying on AI for writing tasks like email drafts and document summaries, one common frustration remains: the output often sounds way too generic. Even when models like ChatGPT or Gemini are given detailed prompts, they rarely nail a user’s individual tone or voice without plenty of manual tweaking. Apple is now proposing a solution.

In a new research paper (Aligning LLMs by Predicting Preferences from User Writing Samples) to be presented at the International Conference on Machine Learning (ICML 2025) next month, Apple researchers unveil PROSE, a technique designed to help large language models better infer and adopt a user’s unique writing preferences by learning directly from their past writing samples.

How PROSE works

The central idea behind PROSE (Preference Reasoning by Observing and Synthesizing Examples) is to move beyond today’s typical alignment techniques, like prompt engineering or reinforcement learning from human feedback. Instead, the AI builds an internal and interpretable profile of the user’s actual writing style.

Rather than requiring the user to manually provide style guides or edit countless AI drafts, PROSE works in two stages:

Iterative Refinement: The AI repeatedly compares its own generated responses with real examples from the user, adjusting its internal “preference description” until it outputs something that closely matches the user’s writing.

Consistency Verification: To avoid fixating on just one example, which might not be representative of the user’s overall writing style, the AI double-checks that any inferred preference (e.g., “use short sentences” or “start with a joke”) holds true across multiple writing samples.

Basically, PROSE builds a self-evolving style profile, tests it against multiple user examples, and uses that as the baseline for future generations.
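To make the two stages above more concrete, here is a minimal toy sketch in Python. It is not Apple's implementation: in the paper's setting an LLM would generate the drafts and propose the preferences, while here both are replaced by hand-written stand-ins. Every name in it (`STYLE_CHECKS`, `generate_draft`, `refine`, `verify`, `infer_preferences`, the sample messages, and the `min_support` threshold) is a hypothetical choice made for illustration.

```python
import re
from typing import Callable, Dict, List, Set


def avg_sentence_length(text: str) -> float:
    """Average number of words per sentence (very rough splitter)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)


# Hypothetical style checks standing in for an LLM's judgment of a text.
STYLE_CHECKS: Dict[str, Callable[[str], bool]] = {
    "use short sentences": lambda t: avg_sentence_length(t) <= 8,
    "open with a greeting": lambda t: t.lower().startswith(("hi", "hey", "hello")),
    "end with an exclamation": lambda t: t.rstrip().endswith("!"),
}


def generate_draft(preferences: Set[str]) -> str:
    """Toy generator: rewrites one fixed message to follow the preferences."""
    if "use short sentences" in preferences:
        body = "The report is done. It is attached."
    else:
        body = ("I am writing to let you know that the report has been "
                "completed and is attached to this message.")
    if "open with a greeting" in preferences:
        body = "Hi team. " + body
    if "end with an exclamation" in preferences:
        body = body.rstrip(".") + "!"
    return body


def refine(sample: str, max_steps: int = 5) -> Set[str]:
    """Stage 1 (iterative refinement): compare drafts to one user sample and
    add one preference per step until the draft matches on every check."""
    preferences: Set[str] = set()
    for _ in range(max_steps):
        draft = generate_draft(preferences)
        mismatches = [name for name, check in STYLE_CHECKS.items()
                      if check(sample) and not check(draft)]
        if not mismatches:
            break
        preferences.add(mismatches[0])
    return preferences


def verify(candidates: Set[str], samples: List[str],
           min_support: float = 0.6) -> Set[str]:
    """Stage 2 (consistency verification): keep a preference only if it
    holds in at least `min_support` of the user's samples."""
    kept: Set[str] = set()
    for name in candidates:
        support = sum(STYLE_CHECKS[name](s) for s in samples) / len(samples)
        if support >= min_support:
            kept.add(name)
    return kept


def infer_preferences(samples: List[str]) -> Set[str]:
    """Run refinement on each sample, then verify across all of them."""
    candidates: Set[str] = set()
    for sample in samples:
        candidates |= refine(sample)
    return verify(candidates, samples)


# Made-up writing samples from one imaginary user.
SAMPLES = [
    "Hi Sam. Lunch is at noon. See you there!",
    "Hey all. Slides are ready. Take a look.",
    "Hello Pat. The fix is merged. Thanks for the review.",
]

if __name__ == "__main__":
    print(sorted(infer_preferences(SAMPLES)))
    # → ['open with a greeting', 'use short sentences']
```

In this toy run, refinement on the first sample alone picks up "end with an exclamation", but verification drops it because only one of the three samples ends that way. That is the kind of over-fitting to a single example the second stage is meant to catch.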

Why this matters for Apple Intelligence

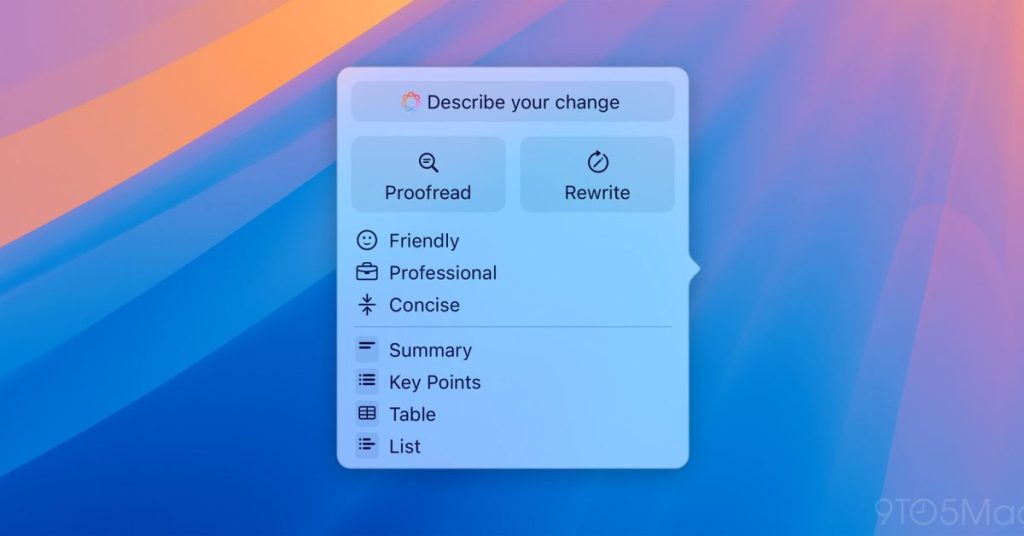

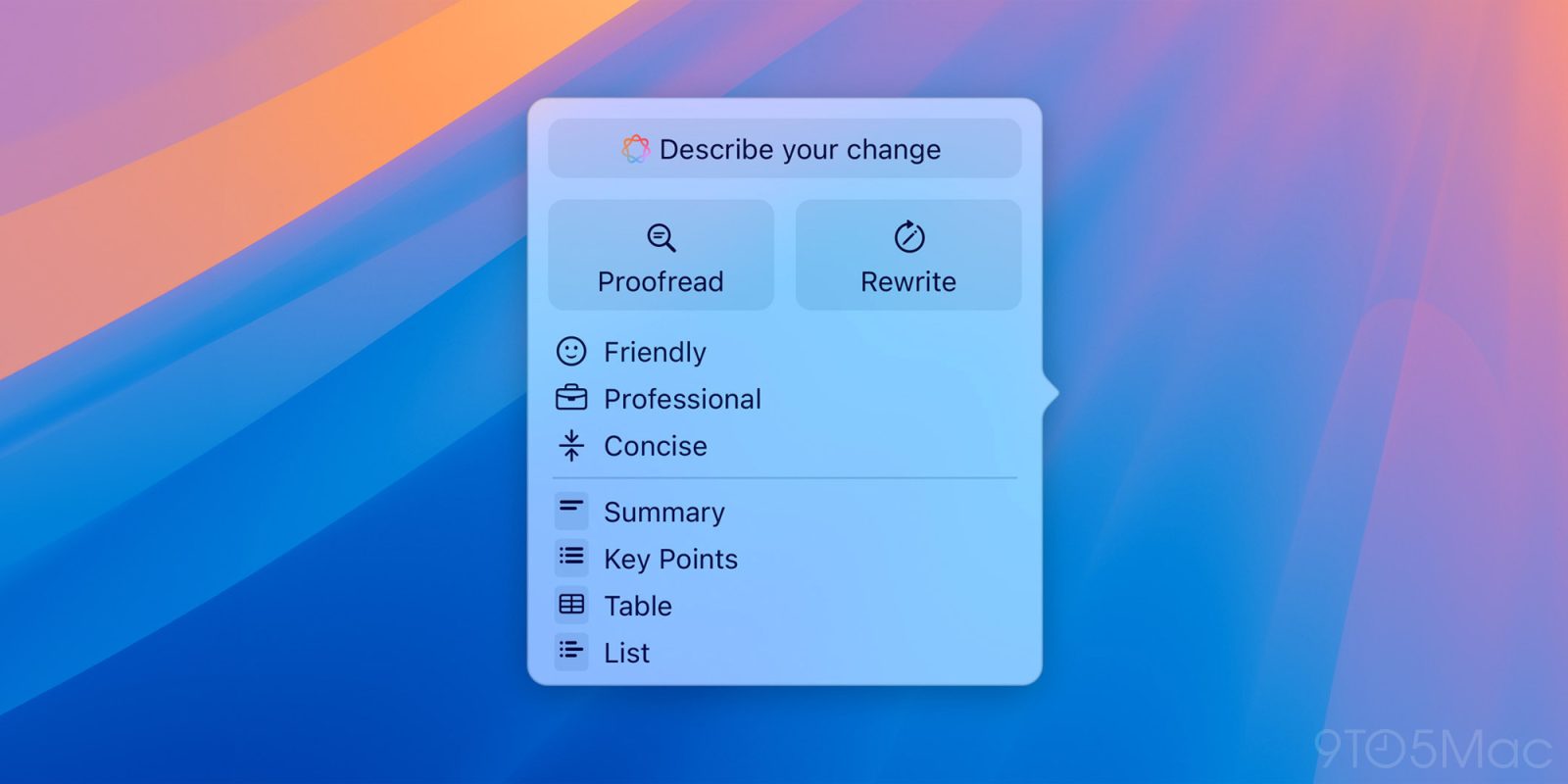

While the paper doesn’t mention Apple products or services by name, the connection is obvious. As Apple pushes deeper into more personalized assistant features, techniques like PROSE could play a big role in helping Apple Intelligence produce text that sounds more like each individual user.

And with Apple now allowing developers to tap directly into its local models through the newly announced Foundation Models framework, it’s not hard to imagine a future where any app could leverage a system-wide, deeply personalized writing assistant to power its own writing tools.

There’s a new benchmark, too

In the study, Apple also introduces a new benchmark dataset called PLUME (Preference Learning from User Emails and Memos) for evaluating writing-style alignment techniques like PROSE.

This replaces a previous dataset (PRELUDE) and aims to fix common issues with LLM personalization testing, like shallow preference definitions or non-representative tasks.

Using PLUME, the researchers compared PROSE to previous approaches, such as another preference-learning method called CIPHER (I know. So many names and acronyms) and standard in-context learning (ICL) techniques.

The result? PROSE outperformed CIPHER by 33% on key metrics and even beat ICL when paired with high-end models like GPT-4o.

Interestingly, the paper also suggests that combining PROSE with ICL delivers the best of both worlds, with up to a 9% improvement over ICL alone.

The bigger trend: AI that adapts to you, and keeps you coming back

The PROSE project fits into a broader AI research trend: making assistants not just smarter, but more personal. Whether that’s through on-device fine-tuning, preference modeling, or context-aware prompts, the race is on to close the gap between generic LLM output and the unique voice of each user.

Of course, true personalization also comes with huge business incentives, since it sets the stage for the ultimate platform lock-in. But that’s a subject for another day.
