For Immediate Release

December 11th, 2024

Media Contact: Chase Hardin, chase@futureoflife.org

+1 (623) 986-0161

Major AI Companies Have ‘Significant Gaps’ in Safety Measures Say Leading AI Experts in External Safety Review

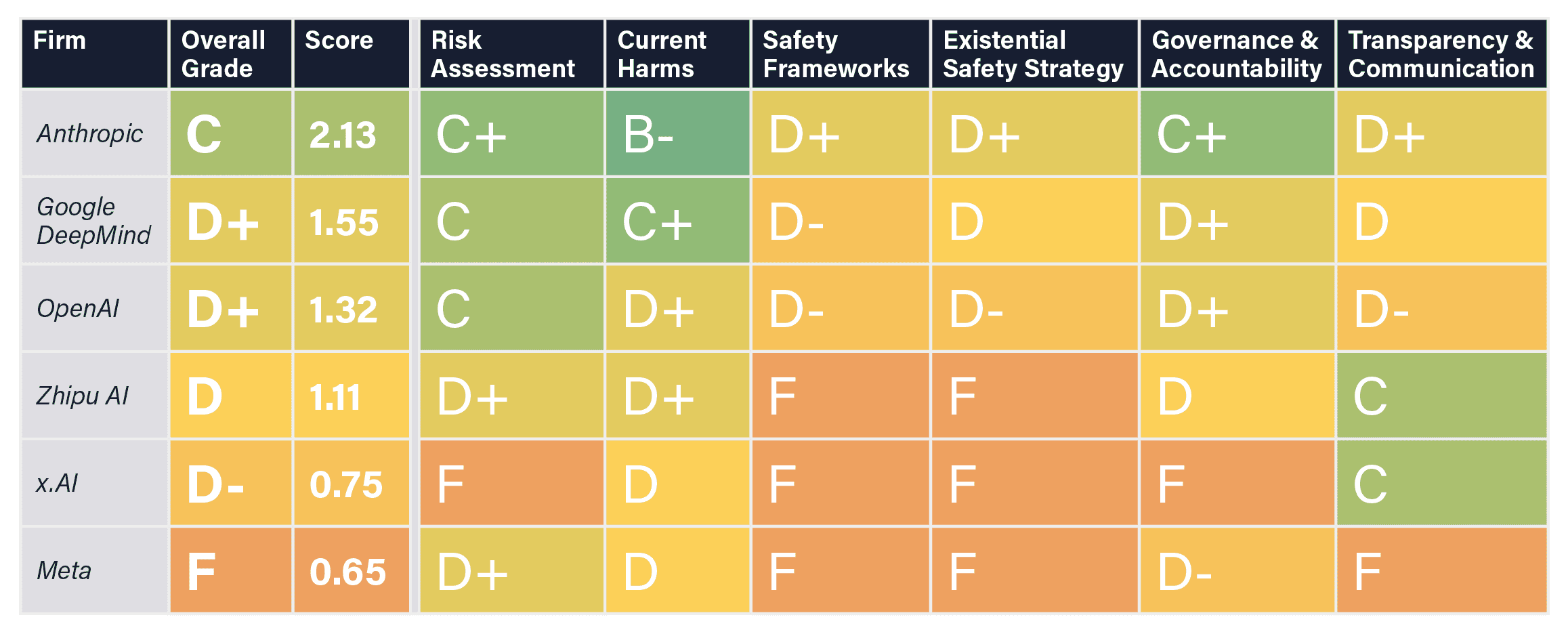

CAMPBELL, CA – Today, the Future of Life Institute (FLI) released its 2024 AI Safety Index, in which several of the world’s foremost AI and governance experts assessed the safety standards of six prominent companies developing AI: Anthropic, Google DeepMind, Meta, OpenAI, x.AI, and Zhipu AI. The independent panel evaluated each company in six categories: Risk Assessment, Current Harms, Safety Frameworks, Existential Safety Strategy, Governance & Accountability, and Transparency & Communication.

The review panel found that, while some companies demonstrated commendable practices in select domains, there are significant disparities in risk management among them. All of the flagship models were found to be vulnerable to adversarial attacks, and despite the companies’ explicit ambitions to develop systems that rival or exceed human intelligence, none of them has an adequate strategy for ensuring such systems remain beneficial and under human control.

“It’s horrifying that the very companies whose leaders predict AI could end humanity have no strategy to avert such a fate,” said panelist David Krueger, Assistant Professor at Université de Montréal and a core member of Mila.

“The findings of the AI Safety Index project suggest that although there is a lot of activity at AI companies that goes under the heading of ‘safety,’ it is not yet very effective,” said panelist Stuart Russell, a Professor of Computer Science at UC Berkeley. “In particular, none of the current activity provides any kind of quantitative guarantee of safety; nor does it seem possible to provide such guarantees given the current approach to AI via giant black boxes trained on unimaginably vast quantities of data. And it’s only going to get harder as these AI systems get bigger. In other words, it’s possible that the current technology direction can never support the necessary safety guarantees, in which case it’s really a dead end.”

The final report can be viewed here.

“Evaluation initiatives like this Index are very important because they can provide valuable insights into the safety practices of leading AI companies. They are an essential step in holding firms accountable for their safety commitments and can help highlight emerging best practices and encourage competitors to adopt more responsible approaches,” said Yoshua Bengio, Full Professor at Université de Montréal, Founder and Scientific Director of Mila – Quebec AI Institute and 2018 A.M. Turing Award co-winner.

Grades were assigned based on publicly available information as well as the companies’ responses to a survey conducted by FLI. The review raised concerns that ongoing competitive pressures are encouraging companies to ignore or sidestep questions about the risks posed by developing this technology, resulting in significant gaps in safety measures and a serious need for improved accountability.

“We launched the Safety Index to give the public a clear picture of where these AI labs stand on safety issues,” said FLI president Max Tegmark, a professor doing AI research at MIT. “The reviewers have decades of combined experience in AI and risk assessment, so when they speak up about AI safety, we should pay close attention to what they say.”

Review panelists:

Yoshua Bengio, Professor at Université de Montréal and Founder of Mila – Quebec Artificial Intelligence Institute. He is the recipient of the 2018 A.M. Turing Award.

Atoosa Kasirzadeh, Assistant Professor at Carnegie Mellon University and a 2024 Schmidt Sciences AI2050 Fellow.

David Krueger, Assistant Professor at Université de Montréal and a core member of Mila and the Center for Human-Compatible AI.

Tegan Maharaj, Assistant Professor at HEC Montréal and core faculty of Mila. She leads the ERRATA lab on Responsible AI.

Jessica Newman, Director of the AI Security Initiative at UC Berkeley and the Co-Director of the UC Berkeley AI Policy Hub.

Sneha Revanur, founder of the youth AI advocacy organization Encode Justice and a Forbes 30 Under 30 honoree.

Stuart Russell, Professor of Computer Science at UC Berkeley, where he leads the Center for Human-Compatible AI. He co-authored the standard AI textbook used in more than 1,500 universities in 135 countries.

The Future of Life Institute is a global non-profit organization working to steer the development of transformative technologies towards benefiting life and away from extreme large-scale risks. To find out more about our mission or explore our work, visit www.futureoflife.org.