A group of top artificial intelligence experts and executives warned the technology poses a “risk of extinction” in an alarming joint statement released Tuesday.

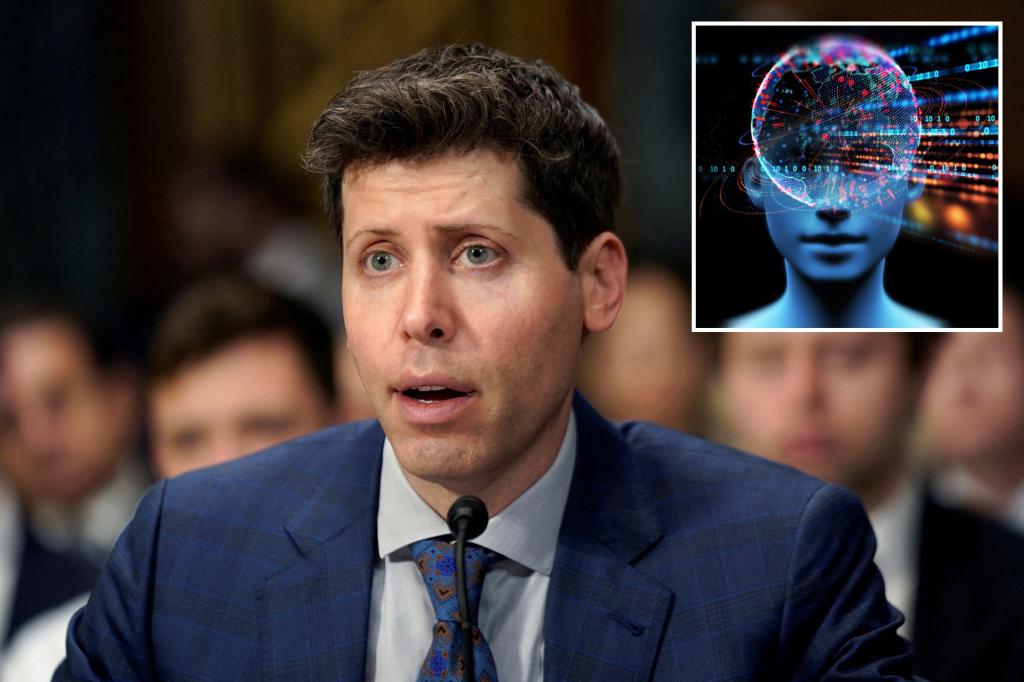

OpenAI boss Sam Altman, whose firm created ChatGPT, and the “Godfather of AI” Geoffrey Hinton were among more than 350 prominent figures who see AI as an existential threat, according to the one-sentence open letter organized by the nonprofit Center for AI Safety.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the experts said in a 22-word statement.

The brief statement is the latest in a series of warnings from leading experts about AI's potential to foment chaos in society, with risks including the spread of misinformation, major economic upheaval through job losses, or even outright attacks on humanity.

Scrutiny has increased following the runaway popularity of OpenAI’s ChatGPT product.

The potential risks were on display as recently as last week, when a likely AI-generated photo of a fake explosion at the Pentagon triggered a selloff that briefly erased billions in value from the US stock market before the image was debunked.

The Center for AI Safety said the brief statement was intended to “open up discussion” about the topic given the “broad spectrum of important and urgent risks from AI.”

Aside from Altman and Hinton, notable signatories included Google DeepMind boss Demis Hassabis and Anthropic CEO Dario Amodei, another prominent AI lab leader.

Altman, Hassabis and Amodei were part of a select group of experts who met with President Biden earlier this month to discuss potential AI risks and regulations.

Hinton and another signer, Yoshua Bengio, won the 2018 Turing Award, the computing world's highest honor, for advances in neural networks that were described as "major breakthroughs in artificial intelligence."

“As we grapple with immediate AI risks like malicious use, misinformation, and disempowerment, the AI industry and governments around the world need to also seriously confront the risk that future AIs could pose a threat to human existence,” said Dan Hendrycks, director of the Center for AI Safety.

“Mitigating the risk of extinction from AI will require global action,” Hendrycks added. “The world has successfully cooperated to mitigate risks related to nuclear war. The same level of effort is needed to address the dangers posed by future AI systems.”

Despite his leading role at OpenAI, Altman has been vocal about his concerns regarding the unrestrained development of advanced AI systems.

In testimony on Capitol Hill earlier this month, Altman came out in favor of government regulations for the technology, among other safety guardrails.

At the time, Altman admitted his worst fear was that AI could "cause significant harm to the world" without oversight.

Elsewhere, Hinton recently quit his part-time job as an AI researcher at Google so that he could speak more freely about his concerns.

Hinton said he now partly regrets his life’s work, which could allow “bad actors” to do “bad things” that will be difficult to prevent.

The 22-word statement was noticeably briefer than a previous open letter that generated scrutiny in March.

Billionaire Elon Musk was among hundreds of experts who called for a six-month pause in advanced AI development so that leaders could consider how to safely proceed.

Their lengthy open letter, signed by some of the same experts who backed the Center for AI Safety's statement, warned that AI's dangers included the possible "loss of control of our civilization."

Musk was even more blunt during an appearance at a Wall Street Journal conference in London last week, stating that he saw a "non-zero chance" of AI "going Terminator," a reference to the worst-case scenario depicted in James Cameron's 1984 sci-fi film "The Terminator."

Ex-Google CEO Eric Schmidt echoed Musk’s fears, arguing that AI was not far off from becoming an “existential risk” to humanity that could result in “many, many, many, many people harmed or killed.”