There are so many AI research papers these days that it’s hard to stand out. But one paper has fired up a lot of discussion across the tech industry in recent days.

“This is the most inspiring thing I’ve read in AI in the last two years,” the startup founder Suhail Doshi wrote on X this weekend. Jack Clark, a cofounder of Anthropic, featured the paper in Monday’s edition of his Import AI newsletter, which is read by thousands of industry researchers.

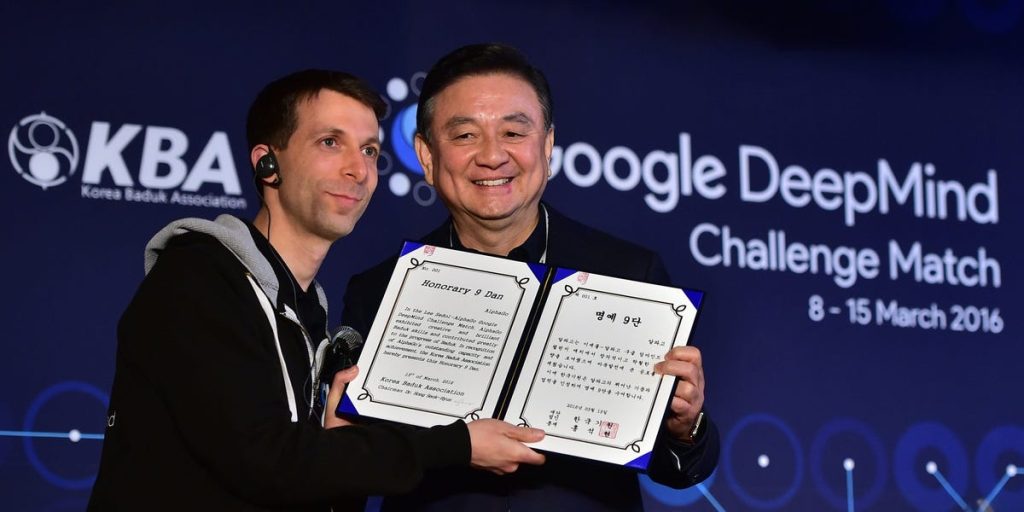

Written by the Google researcher David Silver and the Canadian computer scientist Richard Sutton, the paper boldly announces a new AI era.

The authors identify two previous modern AI eras. The first was epitomized by AlphaGo, a Google AI model that famously learned to play the board game Go better than humans in 2015. The second is the one we’re in right now, defined by OpenAI’s ChatGPT.

Silver and Sutton say we’re now entering a new period called “the Era of Experience.”


To me, this represents a new attempt by Google to tackle one of AI’s most persistent problems — the scarcity of training data — while moving beyond a technological approach that OpenAI basically won.

The Simulation Era

Let’s start with the first era, which the authors call the “Simulation Era.”

In this period, roughly the mid-2010s, researchers used digital simulations to get AI models to play games repeatedly to learn how to perform like humans. We’re talking millions and millions of games, such as chess, poker, Atari, and “Gran Turismo,” played over and over, with rewards dangled for good results — thus teaching the machines what’s good versus bad and incentivizing them to pursue better strategies.

This method of reinforcement learning, or RL, produced Google’s AlphaGo. And it also helped to create another Google model called AlphaZero, which discovered new strategies for chess and Go, and changed the way that humans play these games.
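The trial-and-reward loop described above can be illustrated with a toy sketch. This is not how AlphaGo or AlphaZero were built; it is a minimal epsilon-greedy learner that picks among a few strategies, plays a "game," and nudges its estimates toward whatever reward it receives. The function name `train_bandit`, the win rates, and all parameter values are assumptions made up for illustration.

```python
import random


def train_bandit(win_probs, episodes=5000, epsilon=0.1, seed=0):
    """Learn which strategy wins most often purely by repeated play.

    win_probs holds hypothetical win rates, one per strategy. The learner
    never sees them directly; it only observes wins and losses.
    """
    rng = random.Random(seed)
    values = [0.0] * len(win_probs)  # estimated reward per strategy
    counts = [0] * len(win_probs)    # how often each strategy was tried

    for _ in range(episodes):
        # Explore a random strategy occasionally; otherwise exploit the
        # strategy with the best estimate so far.
        if rng.random() < epsilon:
            action = rng.randrange(len(win_probs))
        else:
            action = max(range(len(win_probs)), key=lambda a: values[a])

        # Play one simulated game: reward 1 for a win, 0 for a loss.
        reward = 1.0 if rng.random() < win_probs[action] else 0.0

        # Running average: move the estimate toward the observed reward.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]

    return values, counts


values, counts = train_bandit([0.2, 0.5, 0.8])
best = max(range(len(values)), key=lambda a: values[a])
print("preferred strategy:", best)
```

The reward here is precisely defined (win or lose), which is what makes the loop work and also what limits it to narrow problems.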

The problem with this approach: Machines trained this way did well on specific problems with precisely defined rewards, but they couldn’t tackle more general, open-ended problems with vague payoffs, Silver and Sutton wrote. So, probably not really full AI.

The Human Data Era

The next era was kicked off by another Google research paper published in 2017. “Attention Is All You Need” introduced the transformer, a model architecture built around an “attention” mechanism that made it practical to train AI models on mountains of human-created data from the internet. By paying “attention” to all this information, machines could learn to behave like humans and perform as well as us on a wide variety of tasks.

This is the era we’re in now, and it has produced ChatGPT and most of the other powerful generative AI models and tools that are increasingly being used to automate tasks such as graphic design, content creation, and software coding.

The key to this era has been amassing as much high-quality, human-generated data as possible, and using that in massive, compute-intensive training runs to imbue AI models with an understanding of the world.

While Google researchers kicked off this era of human data, most of those people left the company and started their own things. Many went to OpenAI and worked on technology that ultimately produced ChatGPT, which is by far the most successful generative AI product in history. Others went on to start Anthropic, another leading generative AI startup that runs Claude, a powerful chatbot and AI agent.


A Google dis?

Many experts in the AI industry, and some investors and analysts on Wall Street, think that Google may have dropped the ball here. Although it came up with this AI approach, OpenAI and ChatGPT have run away with most of the spoils so far.

I think the jury is still out. However, you can’t help but think about this situation when the authors seem to be dissing the era of human data.

“It could be argued that the shift in paradigm has thrown out the baby with the bathwater,” they wrote. “While human-centric RL has enabled an unprecedented breadth of behaviours, it has also imposed a new ceiling on the agent’s performance: agents cannot go beyond existing human knowledge.”

Silver and Sutton are right about one aspect of this. The supply of high-quality human data has been outstripped by the insatiable demand from AI labs and Big Tech companies that need fresh content to train new models and move their abilities forward. As I wrote last year, it has become a lot harder and more expensive to make big leaps at the AI frontier.

The Era of Experience

The authors have a pretty radical solution for this, and it’s at the heart of the new Era of Experience that they propose in this paper.

They suggest that models and agents should just get out there and create their own new data through interactions with the real world.

This will solve the nagging data-supply problem, they argue, while helping the field attain AGI, or artificial general intelligence, a technical holy grail where machines outperform humans in most useful activities.

“Ultimately, experiential data will eclipse the scale and quality of human-generated data,” Silver and Sutton write. “This paradigm shift, accompanied by algorithmic advancements in RL, will unlock in many domains new capabilities that surpass those possessed by any human.”

Any modern parent can think of this as the equivalent of telling their child to get off the couch, stop looking at their phone, and go outside and play with their friends. There are a lot of richer, more satisfying, and more valuable experiences out there to learn from.

Clark, the Anthropic cofounder, was impressed by the chutzpah of this proposal.

“Papers like this are emblematic of the confidence found in the AI industry,” he wrote in his newsletter on Monday, citing “the gumption to give these agents sufficient independence and latitude that they can interact with the world and generate their own data.”

Examples, and a possible final dis

The authors float some theoretical examples of how this might work in the new Era of Experience.

An AI health assistant could ground a person’s health goals in a reward based on a combination of signals such as their resting heart rate, sleep duration, and activity levels. (A reward is a common way in AI to incentivize models and agents to perform better, much as you might nag your partner to exercise more by saying they’ll get stronger and look better if they go to the gym.)

An educational assistant could ground a user’s language-learning goal in a reward based on their exam results.

A science agent with a goal to reduce global warming might use a reward based on empirical observations of carbon dioxide levels, Silver and Sutton suggested.
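To make the idea of a grounded reward concrete, here is a minimal sketch of the health-assistant example: three real-world signals are each scaled to a score between 0 and 1 and then combined into a single number an agent could try to increase. The paper does not specify any formula; the class `DailySignals`, the function `health_reward`, and every target and weight below are hypothetical choices for illustration, not medical guidance.

```python
from dataclasses import dataclass


@dataclass
class DailySignals:
    resting_heart_rate: float  # beats per minute
    sleep_hours: float
    active_minutes: float


def clamp01(x):
    """Keep a score within the range 0.0 to 1.0."""
    return max(0.0, min(1.0, x))


def health_reward(s, weights=(0.4, 0.3, 0.3)):
    """Combine measured signals into one reward between 0 and 1.

    All targets and weights are assumptions chosen for this sketch.
    """
    # Lower resting heart rate scores higher: 60 bpm or below earns 1.0,
    # 80 bpm or above earns 0.0.
    hr_score = clamp01((80.0 - s.resting_heart_rate) / 20.0)
    # Sleep and activity score higher up to an assumed daily target.
    sleep_score = clamp01(s.sleep_hours / 8.0)
    activity_score = clamp01(s.active_minutes / 30.0)

    w_hr, w_sleep, w_activity = weights
    return w_hr * hr_score + w_sleep * sleep_score + w_activity * activity_score


good_day = DailySignals(resting_heart_rate=62, sleep_hours=7.5, active_minutes=40)
rough_day = DailySignals(resting_heart_rate=78, sleep_hours=5.0, active_minutes=5)
print(health_reward(good_day) > health_reward(rough_day))
```

The point of the sketch is that the reward comes from measurements of the world rather than from a human rating the agent's answers.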

In a way, this is a return to the previous Era of Simulation, which Google arguably led. Except this time, AI models and agents are learning from the real world and collecting their own data, rather than existing in a video game or other digital realm.

The key is that, unlike the Era of Human Data, there may be no limit to the information that can be generated and gathered for this new phase of AI development.

In our current human data period, something was lost, the authors argue: an agent’s ability to self-discover its own knowledge.

“Without this grounding, an agent, no matter how sophisticated, will become an echo chamber of existing human knowledge,” Silver and Sutton wrote, in a possible final dis to OpenAI.