They say imitation is the sincerest form of flattery. But if you’re a creator, artist, or brand, having your hard work ripped off doesn’t feel like anything to celebrate.

Smaller artists have spent years fighting to stop their work being stolen and used for commercial purposes, but the advent of AI image and video generation tools has allowed copyrighted material to be abused like never before.

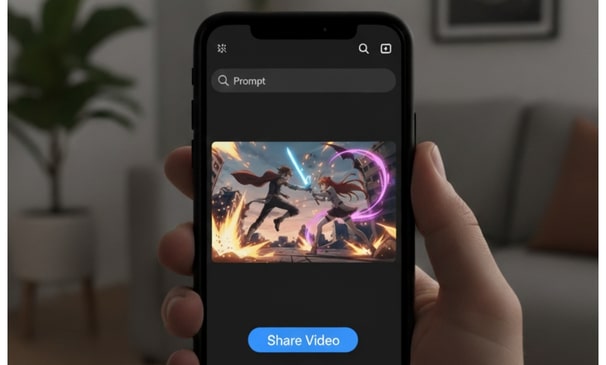

Sora 2 – the copyright abuse machine

Following the release of OpenAI’s Sora 2, users were able to create AI videos with copyrighted characters from South Park, Rick and Morty, Pokémon, and more anime studios than I could possibly mention. It also allowed users to create deepfake videos (including a video of OpenAI CEO Sam Altman shoplifting), which poses even more concerns about how this technology could be used.

Altman was quick to publish a blog post (most likely in an attempt to fend off major lawsuits) after it was clear that ‘training’ data was now being used in the live product, with virtually no restrictions.

His solution? If you don’t want your copyrighted material to be used, you need to explicitly opt out or report an infringement. No apology for the damage that may have been done, by the way.

Not to mention the fact that users can probably get around these restrictions if they tweak their prompts… loopholes that may be more difficult to close, as Altman alludes to:

> There may be some edge cases of generations that get through that shouldn’t, and getting our stack to work well will take some iteration.

The next part of his response basically suggests it’s actually a *good* thing that users can create whatever they want with copyrighted materials – and that brands and artists are supposedly *excited* about this.

> We are hearing from a lot of rightsholders who are very excited for this new kind of “interactive fan fiction” and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all). We assume different people will try very different approaches and will figure out what works for them. But we want to apply the same standard towards everyone, and let rightsholders decide how to proceed (our aim of course is to make it so compelling that many people want to). There may be some edge cases of generations that get through that shouldn’t, and getting our stack to work well will take some iteration.

I’m personally failing to see how someone creating an AI slop video of, let’s say, Pikachu fighting Ronald McDonald would particularly excite either brand whose intellectual property is being featured, and I think the risk of misuse far outweighs any benefit. But no matter what slop people create, these brands are so well known and authoritative that, arguably, not much damage could be done. At least when it comes to licensed characters (more on that later).

But smaller creators, artists, studios, and brands, especially those who don’t have a huge legal team behind them, are more likely to have their copyright infringed, perhaps even without knowing it. And for some of these smaller entities, copyright issues have the potential to destroy them overnight – especially as AI gets more advanced.

Imagine you are an up-and-coming beauty brand. What’s to stop a user from creating an AI video of them putting one of your products on their skin, and then showing their skin breaking out, while screaming about it burning?

Perhaps you’re a small chain of family-friendly restaurants. A user could create a video of your restaurant mascot in a rage, booting a small child across the car park.

And some people *will* believe it.

And that’s where bigger brands could still be caught out. Yes, it may be possible to opt out of the use of licensed characters and other intellectual property, but what about locations, staff uniforms, and other aspects of branding that make them instantly recognisable? If you were Starbucks, would you want to see a video of what appears to be one of your baristas throwing a scalding hot Americano into someone’s face? And let’s be honest – if the video claimed the barista did it because they didn’t want to write Charlie Kirk’s name on a cup for the customer… how many people would share it thinking it was real?

In the age of boycotts, there is also a huge risk of ‘fake news’ being created around a brand or associated person – and very little way of convincing people that what they are seeing and hearing simply isn’t real.

The second part of Altman’s blog talks about monetisation. But mostly for OpenAI – not the creators and brands whose work has already been plagiarised.

> Second, we are going to have to somehow make money for video generation. People are generating much more than we expected per user, and a lot of videos are being generated for very small audiences. We are going to try sharing some of this revenue with rightsholders who want their characters generated by users. The exact model will take some trial and error to figure out, but we plan to start very soon. Our hope is that the new kind of engagement is even more valuable than the revenue share, but of course, we want both to be valuable.

At the time of writing, Sora was #1 in the Apple App Store charts despite being invite-only and, obviously, only on iOS. If OpenAI are already admitting that, due to high demand, they need to make money from video generation, and will then have to share that revenue with rightsholders, how exactly do they expect to charge users for this once it’s more widely available?

Note the part ‘Our hope is that the new kind of engagement is even more valuable than the revenue share’. This smacks of the type of people who ask artists to work for nothing to ‘increase their exposure’. Can you pay bills with exposure? No, didn’t think so.

Overall, unless OpenAI and the companies behind other AI image and video generators are forced to seriously clamp down on copyright misuse, whether by huge class action lawsuits or government intervention, I don’t think they will.

I’d imagine there are a lot of anxious marketing, legal, and public relations teams at the moment, and with more and more Sora copycats likely to emerge in the coming months, monitoring brand reputation and sentiment is set to become increasingly difficult.